Last week, I was pre-selected for exclusive access to the private beta of Manus AI — a tool that’s generating serious buzz as the world’s first general-purpose agentic AI. After spending time with Manus, I’m convinced we’re witnessing a major leap forward in automation and digital productivity.

Manus AI is not just another chatbot or workflow tool. It’s a fully autonomous AI agent designed to bridge the gap between your intent and real-world execution[1][2]. Unlike traditional assistants that wait for your next prompt, Manus can independently plan, execute, and deliver results for complex, multi-step tasks, even while you’re offline[3]. In this article, we’ll explore what Manus AI is, how it works, and how it can help non-coders generate code and complete projects (from simple webpages to full applications) with minimal technical knowledge. We’ll also walk through a real example from my beta session and look at how Manus differs from chatbots and other AI agents.

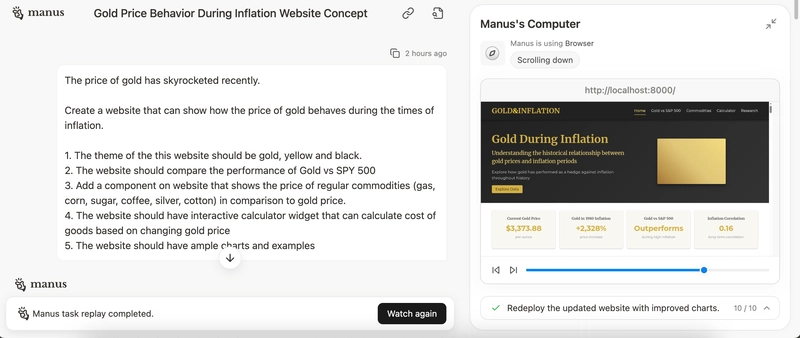

If you’re curious to see Manus in action, you can check out my full session and the website it generated:

What Is Manus AI?

Manus AI (meaning “hand” in Latin) is an autonomous artificial intelligence agent developed by a startup called Butterfly Effect (Monica.ai) and launched in March 2025[4][5]. It’s often described as the first general-purpose AI agent – essentially, a system that doesn’t just chat or give information, but takes actions to accomplish goals in the digital world. The team behind Manus previously created an AI assistant named Monica, and with Manus they have pushed the concept further into full autonomy[6][7].

How Manus Differs from Chatbots: Traditional AI assistants (like ChatGPT, Google’s Gemini, formerly Bard, or even Monica) generate answers or code based on prompts, but they rely on the user to carry out any actions or follow-up instructions. Manus, by contrast, can “think” and then “do”. When you give Manus a goal, it will figure out the steps, perform them, and deliver the outcome without you needing to micromanage every step[8][9]. As a Forbes report put it, Manus doesn’t just respond to queries; it will “go its own way and figure out what to do” to fulfill your request[10]. In other words, Manus moves from information retrieval to action execution, marking a transition toward true digital task automation[11].

Agentic AI: Manus is part of a new wave of “agentic AI” – AI systems that exhibit autonomous decision-making and can interact with software tools or environments. These agents perceive goals, devise plans, and take actions, much like a human assistant would[12]. Manus has been called a glimpse of what future artificial general intelligence (AGI) might look like in practice[13]. It’s capable of independent thinking, dynamic planning, and self-directed execution across a range of tasks[14]. In fact, it’s been likened to “a highly intelligent and efficient intern” that can collaborate with you on tasks[15] – except this intern works at computer speed and doesn’t sleep.

Media Buzz and Hype: Upon its private beta launch (March 6, 2025), Manus created a frenzy in tech circles. Many stayed up all night seeking invite codes for access[16]. Early demonstrations showed Manus autonomously completing complex workflows, which left onlookers amazed that an AI could deliver complete task results, not just suggestions[17][18]. A Chinese media outlet even dubbed Manus “another DeepSeek moment,” referring to the breakthrough Chinese AI model that shook the AI landscape earlier in 2025[19][20]. Invite codes became so sought-after that they reportedly appeared on reseller sites for hundreds or even thousands of dollars[21][22]. Clearly, a lot of people are eager to try this new kind of AI agent.

Key Capabilities in Brief: According to the company and early reports, Manus can handle a wide variety of real-world digital tasks. For example, it can build full websites and apps, design workflows, analyze data, write reports, and more[23][24]. Some notable use cases demonstrated include: planning detailed travel itineraries, providing in-depth stock market analysis, creating interactive educational content, comparing insurance policies, and sourcing business suppliers[25][26]. In one viral case, a user prompted Manus to create a playable video game, which it did from scratch, and others have used Manus to design and launch websites with just a simple prompt[27]. All of this is done by the AI agent autonomously – it writes the code, queries information, uses tools, tests the results, and presents you with the final product.

It’s important to note that Manus is currently invite-only (a private beta web app)[28]. As of this writing, only a small group of users have access, though the developers plan to gradually expand availability and eventually open it up more broadly[29]. Now, let’s dig deeper into what makes Manus tick and what I learned from my hands-on beta experience.

How Does Manus AI Work?

At a high level, Manus AI works by breaking down your request into subtasks, executing each subtask with the appropriate tools, and iterating until the goal is achieved. Under the hood, it uses a multi-agent architecture – essentially a collection of specialized AI sub-agents that collaborate. Here’s an overview of its secret sauce:

Multi-LLM “Brain”: Unlike most chatbots that rely on a single large language model (LLM), Manus leverages multiple AI models for different purposes[30][31]. Its core “brain” reportedly combines Anthropic’s Claude (a powerful reasoning LLM; specifically Claude 3.5 Sonnet, v1) with fine-tuned versions of Alibaba’s Tongyi Qwen (a Chinese LLM family known for efficiency)[31]. By orchestrating multiple LLMs, Manus can play to each model’s strengths, for example using Claude for complex reasoning and Qwen for certain knowledge domains[32]. The team continually tests new models (it was experimenting with the then-new Claude 3.7 Sonnet during the beta) to improve performance[33]. In essence, Manus acts as a conductor, dynamically routing subtasks to the model best suited for the job at hand.
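Manus’s actual routing logic isn’t public, so the sketch below only illustrates the general “conductor” idea under stated assumptions: `reasoning_model` and `fast_model` are hypothetical stand-ins for real LLM API clients (they just echo the prompt), and the task kinds and routing table are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical model backends: stand-ins for real LLM API clients.
def reasoning_model(prompt: str) -> str:
    return f"[reasoning-model] {prompt}"

def fast_model(prompt: str) -> str:
    return f"[fast-model] {prompt}"

@dataclass
class Subtask:
    kind: str    # illustrative labels such as "plan", "code", "summarize"
    prompt: str

# Routing table: which backend handles which kind of subtask.
ROUTES: Dict[str, Callable[[str], str]] = {
    "plan": reasoning_model,
    "code": reasoning_model,
    "summarize": fast_model,
    "translate": fast_model,
}

def route(task: Subtask, default: Callable[[str], str] = reasoning_model) -> str:
    """Send a subtask to the model registered for its kind, or to a default."""
    handler = ROUTES.get(task.kind, default)
    return handler(task.prompt)

print(route(Subtask("summarize", "Condense these search results")))
```

Keeping the routing table as plain data makes it easy to swap a newer model in for one task type without touching the rest of the agent.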

Cloud Sandbox with Tools: Manus runs in a cloud-based execution environment that functions like a full Ubuntu Linux machine with internet access[34]. Within this sandbox, Manus has a suite of tools at its disposal – akin to what a power-user or developer might use. It can run shell commands, browse the web, read/write files, execute code in languages like Python or JavaScript, call external APIs, and even launch web servers or other applications[35][36]. This means Manus isn’t limited to producing text output; it can take real actions in software. For example, if you ask for a data analysis, Manus might write a Python script, run it to crunch data, then open a browser to display a chart. If you request a web app, Manus can generate the code and start a live web server to host it. All of this happens on Manus’s servers (not on your local machine), which is why Manus can keep working even if you close your browser or shut off your computer[37][3]. The system essentially functions as your cloud-based digital executor.

Sense-Plan-Act Loop: Manus operates via an iterative agent loop of sensing, planning, and acting[38]. When you give a prompt or goal, Manus first analyzes the request and the current context (state). Then it plans a next action – choosing a tool or operation that should move it toward the goal. Next, it executes that action (e.g., run some code, do a web search) and observes the result. The result is fed back into the AI’s context, and the cycle repeats[39][40]. Manus will keep iterating through this loop, refining its plan or correcting course as needed, until it deems the task completed or the goal achieved[41]. This is similar to how autonomous agents like AutoGPT work, but Manus’s loop is guided by its multiple internal agents and models. Importantly, Manus is restricted to one tool action per iteration and waits for the result before proceeding, ensuring it doesn’t run wild with too many parallel actions[42]. This controlled step-by-step execution helps maintain traceability and allows for intervention (more on that later).
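The loop described above can be sketched in a few lines. This is a simplified illustration, not Manus’s code: `run_agent`, `plan_next`, and the demo tools are hypothetical, and the planner is a scripted stub standing in for an LLM call. Note that it issues exactly one tool action per iteration and waits for the observation before planning again.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]  # (tool name, argument)

@dataclass
class AgentState:
    goal: str
    # Each entry pairs the action taken with the observation it produced.
    history: List[Tuple[Action, str]] = field(default_factory=list)

def run_agent(
    state: AgentState,
    plan_next: Callable[[AgentState], Optional[Action]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 10,
) -> AgentState:
    """Sense-plan-act loop: one tool call per iteration, results fed back into state."""
    for _ in range(max_steps):
        action = plan_next(state)               # plan: choose a single next action
        if action is None:                      # planner judges the goal achieved
            break
        tool, arg = action
        observation = tools[tool](arg)          # act: run the tool and wait for it
        state.history.append((action, observation))  # sense: record the result
    return state

# Scripted stand-in for an LLM planner: returns the next unfinished step, then None.
def scripted_planner(state: AgentState) -> Optional[Action]:
    script = [("search", "gold price API"), ("compute", "2 + 2")]
    step = len(state.history)
    return script[step] if step < len(script) else None

demo_tools: Dict[str, Callable[[str], str]] = {
    "search": lambda q: f"3 results for {q!r}",
    "compute": lambda expr: str(sum(int(t) for t in expr.split("+"))),
}

final = run_agent(AgentState(goal="demo"), scripted_planner, demo_tools)
for action, obs in final.history:
    print(action, "->", obs)
```

`max_steps` is a simple safety valve so a confused planner can’t loop forever.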

Multiple Internal Agents: According to a research paper on Manus, the system includes at least three specialized sub-agents orchestrated together[43]:

A Planner Agent that breaks the user’s request into a clear to-do list or plan (the steps Manus needs to take).

An Execution Agent that carries out each step using the appropriate tools (coding, browsing, etc.).

A Verification Agent that checks the Execution Agent’s work, verifying accuracy and completeness, and can flag errors or trigger re-planning if needed.

This aligns with my observations: Manus always generates an initial To-Do list for the task at hand, essentially showing the plan, and it often includes steps for Testing/Verification of its outputs. In practice, I saw that Manus diligently tests for things like code syntax errors or whether a web page it created loads correctly. If a test fails, Manus will adjust its approach or fix the code without me even asking, which demonstrates the verification loop in action. (However, as I’ll discuss later, Manus’s testing is not yet perfect for all scenarios.)
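The planner/executor/verifier split can be illustrated with stubs. Everything here is hypothetical: the three functions stand in for LLM-backed agents, and for simplicity a failed verification triggers a retry of the same step rather than full re-planning.

```python
from typing import Callable, List

def planner(request: str) -> List[str]:
    # Stub: a real planner would ask an LLM to decompose the request.
    return [f"draft {request}", f"check {request}"]

def make_flaky_executor() -> Callable[[str], str]:
    # Stub executor that returns a bad result on its very first call only.
    calls = {"n": 0}
    def executor(step: str) -> str:
        calls["n"] += 1
        return "ERROR" if calls["n"] == 1 else f"done: {step}"
    return executor

def verifier(step: str, result: str) -> bool:
    # Stub check: accept any result that isn't an error marker.
    return not result.startswith("ERROR")

def orchestrate(
    request: str,
    plan_fn: Callable[[str], List[str]],
    exec_fn: Callable[[str], str],
    verify_fn: Callable[[str, str], bool],
    max_retries: int = 2,
) -> List[str]:
    """Plan, then execute each step until the verifier accepts it (or give up)."""
    results: List[str] = []
    for step in plan_fn(request):
        for _ in range(max_retries + 1):
            result = exec_fn(step)
            if verify_fn(step, result):
                results.append(result)
                break
        else:  # no attempt passed verification
            raise RuntimeError(f"step failed verification: {step}")
    return results

print(orchestrate("landing page", planner, make_flaky_executor(), verifier))
```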

Memory and Learning: Manus has a built-in memory mechanism to remember context from previous interactions and results within a session. Moreover, it learns from user interactions over time. According to the developers, Manus will adapt to your preferences and optimize future tasks based on past experiences[44]. In the beta, I noticed that if I corrected Manus or provided additional instruction mid-task, it would integrate that feedback and remember it for later steps. This adaptive learning means Manus should get better the more you use it, tailoring its style and decisions to what you prefer – much like a human assistant who picks up on your habits.
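The developers don’t document how this memory works internally. One simple, commonly used pattern is to store user feedback and replay it into every later prompt; the sketch below shows that pattern with a hypothetical `SessionMemory` class, not Manus’s implementation.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class SessionMemory:
    """Hypothetical store of user feedback that is replayed into later prompts."""
    notes: List[str] = field(default_factory=list)

    def remember(self, feedback: str) -> None:
        if feedback not in self.notes:  # skip exact duplicates
            self.notes.append(feedback)

    def build_prompt(self, step: str) -> str:
        # Prepend remembered preferences so every later step sees them.
        if not self.notes:
            return step
        prefs = "\n".join(f"- {note}" for note in self.notes)
        return f"User preferences so far:\n{prefs}\n\nTask: {step}"

memory = SessionMemory()
memory.remember("Use a dark color scheme")
memory.remember("Use a dark color scheme")  # duplicate is ignored
print(memory.build_prompt("Style the chart section"))
```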

Safety and Sandboxing: With great power comes great responsibility, so how does Manus ensure safe use of its tools? The system runs all actions in a sandboxed environment, which limits potential harm. For example, even though Manus can run code and access the internet, it’s confined to its cloud workspace and cannot, say, access your personal files or do anything on your local machine. The company also imposes certain restrictions (for instance, no highly sensitive operations) and presumably monitors for abuse. In my experience, Manus would sometimes refuse requests that were too risky or beyond its allowed scope. Security and ethical considerations are a work in progress for all AI agents, and Manus is no exception – but the sandbox approach at least provides a controlled arena for its actions[45][46]. (Advanced users will wonder: could Manus accidentally execute malicious code from a web search? The team claims to have guardrails and verification to prevent reckless actions, but as with any cutting-edge AI, caution and oversight are still advised.)
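As an illustration of the kind of guardrail mentioned above (not Manus’s actual policy, which isn’t public), here is a minimal command allowlist. The allowed executables and rejected operators are assumptions; string checks like these are easy to get wrong, so they only make sense as an extra layer on top of real sandbox isolation.

```python
import shlex
from typing import FrozenSet, Tuple

# Hypothetical policy: executables the agent may launch directly.
ALLOWED: FrozenSet[str] = frozenset({"python3", "ls", "cat", "curl"})
# Shell operators that could chain or redirect into other commands.
SHELL_OPERATORS: Tuple[str, ...] = (";", "&&", "||", "|", "`", "$(", ">", "<")

def is_allowed(command: str) -> bool:
    """Return True only for a single, allowlisted command with no shell tricks."""
    if any(op in command for op in SHELL_OPERATORS):
        return False
    try:
        argv = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes: reject rather than guess
        return False
    return bool(argv) and argv[0] in ALLOWED

for cmd in ["ls -la", "python3 analyze.py", "sudo ls", "ls; rm -rf /", "rm data.csv"]:
    print(f"{cmd!r}: {is_allowed(cmd)}")
```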

In summary, Manus AI works like a digital employee operating in the cloud on your behalf. It has “eyes” and “hands” in the digital world: eyes through web browsing and data retrieval, hands through coding and tool execution. It perceives your goal, breaks it down, uses the necessary tools step by step, checks its work, and continues until done. All the while, it communicates with you about its plan and progress.

This architecture is what enables Manus to go from a high-level request (e.g. “build me a website about X”) to a concrete outcome (actual working code deployed as a site) with minimal intervention. It’s a complex dance of multiple AI models and processes behind the scenes, but the magic of Manus is that it feels straightforward to the user. In the next sections, we’ll look at the user experience and key features that make interacting with Manus so unique.

Key Features That Impressed Me

1. Visual Browser & Terminal Access

Manus provides a live, visual interface to its browser and terminal. Unlike many other AI agents, it lets you watch it edit code, browse the web, and execute scripts in real time. This transparency is a game changer for debugging and understanding AI-driven workflows.

2. Seamless Human-AI Collaboration

If you spot an error or want to intervene, you can take over the terminal or browser mid-execution. No more endless re-prompting—just make your change, and Manus picks up from there. This feature alone made my workflow dramatically more efficient.

3. Dynamic, Ongoing Instructions

You don’t have to craft the perfect prompt upfront. Manus lets you add new instructions as it works, making the process far more flexible and interactive.

4. To-Do List Planning

Manus generates a clear, actionable to-do list for every task, so you always have a roadmap of what’s happening. This structure is far superior to the “black box” approach of most LLMs, providing clarity and control throughout the process.

Real-World Learnings from My Beta Session

API and Python Data Gathering

Unlike most research agents that rely on web search, Manus can fetch live data directly via APIs. This capability unlocked new possibilities for integrating real-time data into my projects—something I haven’t seen with other tools.
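A minimal sketch of API-based data gathering, using only Python’s standard library. The endpoint and the `{"data": [{"date": ..., "value": ...}]}` payload shape are hypothetical (real commodity and CPI APIs differ, and many require an API key), so the example parses a hard-coded sample instead of calling the network.

```python
import json
import urllib.request
from typing import List, Tuple

def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Download and decode a JSON document (requires network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)

def parse_series(payload: dict) -> List[Tuple[str, float]]:
    """Convert an assumed {"data": [{"date": ..., "value": ...}]} payload to sorted pairs."""
    rows = payload.get("data", [])
    return sorted(
        (row["date"], float(row["value"]))
        for row in rows
        if row.get("value") is not None  # skip missing observations
    )

# Offline demo: a hard-coded sample in the assumed shape, with made-up values.
sample = json.loads(
    '{"data": [{"date": "2024-02", "value": "101.5"},'
    ' {"date": "2024-01", "value": 100.0},'
    ' {"date": "2024-03", "value": null}]}'
)
print(parse_series(sample))
```

With a real endpoint you would call `parse_series(fetch_json(url))`, where `url` is whatever API you choose.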

Testing and Validation

While Manus includes explicit steps for testing its outputs, I noticed it primarily checks for syntax and compile-time errors. Runtime or visualization issues (like chart rendering bugs) still require human review. This is an area I hope to see improved as the product matures.
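The gap between syntax checks and runtime checks is easy to demonstrate. In this sketch, a generated snippet with a misspelled dictionary key parses cleanly, so a syntax-only check passes it, while actually executing it fails. Generated code should only ever be executed inside a sandbox, and even execution wouldn’t catch a chart that renders without errors but looks wrong.

```python
import ast

def passes_syntax_check(source: str) -> bool:
    """What a compile-time check catches: does the code parse?"""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False

def passes_runtime_check(source: str) -> bool:
    """What it misses: does the code actually run without raising?"""
    try:
        exec(compile(source, "<generated>", "exec"), {})
        return True
    except Exception:
        return False

# Parses fine, but crashes at runtime: the data key is misspelled.
buggy = "data = {'gold': [1, 2, 3]}\ntotal = sum(data['glod'])"

print(passes_syntax_check(buggy))   # True
print(passes_runtime_check(buggy))  # False (KeyError)
```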

Workflow Transparency and Adaptability

The ability to see every step Manus takes, and to intervene or correct course at any time, made the development process feel collaborative rather than opaque. Manus’s memory and adaptive learning also mean it gets better the more you use it, remembering your preferences and optimizing future tasks.

My Manus Session: Generating a Webpage from Scratch

To really demonstrate Manus AI’s power for a non-coder audience, I’ll walk through an example from my own Manus session. My goal was to create a simple informative webpage about gold prices and their correlation to inflation. I have no interest in hand-coding such a site or manually researching economic data, so this seemed like an ideal job to hand off to Manus.

Step 1: Describe the Task to Manus

I opened a new session with Manus and gave it a high-level instruction: “Create a one-page website that explains the historical relationship between gold prices and inflation. Include some interactive charts or data visualizations if possible, and a short write-up of key findings. Assume the reader is curious about gold as an inflation hedge.” This was basically my entire prompt. I didn’t specify any data sources or chart details – I left those decisions to Manus.

Step 2: Manus Plans the Steps

Within seconds, Manus produced a to-do list (plan) for this task. The list looked something like:

- Gather historical data: Find historical gold prices and inflation rates (perhaps from an API or financial data source).

- Analyze correlation: Compute correlation between gold price movements and inflation over time.

- Design webpage layout: Plan sections (e.g., introduction, data charts, insights).

- Generate visualizations: Create charts comparing gold vs inflation (maybe gold vs CPI or vs stock market).

- Write explanatory content: Draft text explaining findings and context (e.g., when gold hedged inflation well or not).

- Build the webpage: Create HTML/CSS/JS for the page, embed charts and text.

- Review and test: Ensure the webpage displays correctly and charts load.

- Deploy or share link: Make the page accessible and provide the link.
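One way to track a plan like this is as a checklist the agent updates as it goes. The `Step` class and Markdown rendering below are assumptions for illustration; they just encode the eight steps above and show how progress and the next pending step could be tracked.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Step:
    title: str
    done: bool = False

def render(plan: List[Step]) -> str:
    """Render the plan as a Markdown checklist."""
    return "\n".join(f"- [{'x' if s.done else ' '}] {s.title}" for s in plan)

def next_step(plan: List[Step]) -> Optional[Step]:
    """First step that hasn't been completed yet, or None when all are done."""
    return next((s for s in plan if not s.done), None)

plan = [Step(title) for title in [
    "Gather historical data",
    "Analyze correlation",
    "Design webpage layout",
    "Generate visualizations",
    "Write explanatory content",
    "Build the webpage",
    "Review and test",
    "Deploy or share link",
]]

plan[0].done = True  # e.g. once the data has been gathered
print(render(plan))
print("Next:", next_step(plan).title)
```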

Seeing this plan gave me confidence. It was exactly how I would have structured the problem myself, except I might not know offhand where to get the data or how to code the charts – but I trusted Manus to figure those parts out. I approved the plan, and Manus moved into execution mode.

Step 3: Data Collection and Analysis

Manus’s first action was to fetch data. In the interface, I watched it open a browser and search for an appropriate API for gold prices. It found a free commodity price API and retrieved historical gold prices (I saw it pulling data, likely in JSON format). It also fetched historical inflation (CPI) data from a public source. Then Manus switched to its coding tool: it wrote a short Python script to calculate the correlation between gold price changes and inflation over a multi-decade period. I could see the code it wrote – it used a statistics library to compute correlation and printed the result. Manus ran the code in the terminal; the output showed a correlation coefficient of about 0.16 (which is a weak positive correlation) along with some derived stats, like gold’s average return during high-inflation vs low-inflation periods. This matched what financial wisdom often says – gold isn’t perfectly correlated to inflation, but tends to perform better during high inflation. It was validating to see Manus derive this from real data. It even saved some key results (like “gold returned ~15% annually when inflation >3%, vs ~6% when inflation <3%”) to use in the write-up later[50].

Step 4: Building the Webpage

Next, Manus moved on to constructing the webpage. It switched to a code editor tool in the interface and started generating an HTML file with an accompanying CSS stylesheet and JavaScript code for interactivity. The speed was impressive, with lines of HTML flying by. Manus structured the page in four parts: a header, a summary section with key figures, a chart section, and an explanatory text section. For visuals, Manus decided to create a few interactive charts. It wrote a JavaScript snippet to embed an interactive line chart of gold vs inflation over time, using a lightweight JS chart library it fetched. It also created a comparison widget showing gold’s performance vs other commodities (silver, oil, etc.), which I hadn’t even asked for! This was presumably Manus’s own initiative to enrich the content. In the code, I saw labels like “Gold vs S&P 500” performance and some commodity price comparisons (the gold-to-silver ratio, etc.). The page also included a simple form, a “gold inflation calculator” where a user could enter an amount of gold and see how its equivalent value changes (perhaps just a fun interactive element). Manus really went above and beyond my minimal prompt, adding interactive touches that a human might include for engagement.
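The page’s calculator code isn’t reproduced here, but the arithmetic behind this kind of widget is standard. The sketch below shows three hypothetical helper functions: the value of a gold holding, the gold-to-silver ratio, and restating a past amount in today’s dollars via the CPI ratio. The inputs in the usage lines are placeholders, not market quotes.

```python
def holding_value(ounces: float, price_per_oz: float) -> float:
    """Market value of a gold holding at a given price per troy ounce."""
    return ounces * price_per_oz

def gold_to_silver_ratio(gold_price: float, silver_price: float) -> float:
    """How many ounces of silver one ounce of gold buys."""
    return gold_price / silver_price

def in_todays_dollars(amount: float, cpi_then: float, cpi_now: float) -> float:
    """Restate a past dollar amount in current dollars using the CPI ratio."""
    return amount * cpi_now / cpi_then

# Placeholder inputs, chosen for round numbers rather than realism.
print(holding_value(2, 1500.0))               # 3000.0
print(gold_to_silver_ratio(2000.0, 25.0))     # 80.0
print(in_todays_dollars(100.0, 50.0, 300.0))  # 600.0
```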

Step 5: Writing Content

Simultaneously (or perhaps interwoven in the code generation), Manus composed the textual content for the page. It wrote paragraphs explaining what gold’s role as an inflation hedge is, citing historical episodes: for example, “Gold reached an inflation-adjusted peak in 1980 during high inflation, rising 2,328% since 1970[51][52]. However, in the early 1980s gold fell even as inflation remained high, showing the relationship isn’t consistent[53].” It also noted the average returns I mentioned (15% vs 6% in different inflation regimes) and the correlation statistic (0.16)[50]. The tone of the write-up was explanatory but accessible, as I had wanted. Manus essentially wrote a brief article within the webpage summarizing the key insights from the data it gathered.
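The 2,328% figure is easy to sanity-check. Assuming it’s the nominal move from gold’s $35-an-ounce official price in 1970 to its roughly $850 peak in January 1980 (my assumption about how the page derived it), the arithmetic reproduces it:

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100

# Assumed endpoints (USD per troy ounce, nominal): 1970 official price and January 1980 peak.
rise = pct_change(35.0, 850.0)
print(f"{rise:,.1f}%")  # 2,328.6%
```

The result truncates to the quoted 2,328%. Because both endpoints are nominal prices, the figure describes the dollar-price rise, not a real (inflation-adjusted) return.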

Step 6: Testing and Refinement

After assembling the page, Manus launched a local preview (within its cloud environment) to test whether everything worked. In the interface, I saw a browser view of the page it had created, titled “Gold & Inflation: Understanding Gold During Inflation.” The charts were loading, and the numbers and text were in place. Manus checked the interactive elements (the calculator showed “Loading…” placeholders until data was provided, which was expected). It noticed one apparent issue: the “current price” of gold it had fetched was initially shown as $3,373 per ounce, which I assumed at the time was far too high (perhaps a bug in adjusting for inflation or currency). Manus went back to correct this: it updated the code to fetch the latest gold price again and revised the figure in the content to around $1,900 per ounce (though the page may have been mixing nominal and inflation-adjusted prices). This self-correction impressed me: Manus was double-checking its output and adjusting without being prompted. In hindsight, though, gold traded far above $1,900 throughout 2025 (it passed $3,000 an ounce in March), so the original figure may well have been right; a “correction” like this is worth verifying against a live quote.
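Re-fetching from the same source won’t catch a systematic error such as mixing nominal and inflation-adjusted prices, so a more robust check compares a figure against an independent source. Here is a minimal sketch; the function names and the 2% tolerance are arbitrary assumptions.

```python
def relative_gap(a: float, b: float) -> float:
    """Absolute difference between two quotes, relative to their mean."""
    return abs(a - b) / ((a + b) / 2)

def needs_review(primary: float, secondary: float, tolerance: float = 0.02) -> bool:
    """Flag a figure when two independent sources disagree by more than `tolerance`."""
    return relative_gap(primary, secondary) > tolerance

# Placeholder quotes: two sources that roughly agree, and two that clearly don't.
print(needs_review(100.0, 101.0))  # False
print(needs_review(100.0, 150.0))  # True
```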

Manus also ran a quick consistency check on the chart correlations, making sure the correlation figure (0.16) matched both the chart and the statements in the text. This is the kind of check even humans forget, and Manus’s verification step appears designed to catch it. It did not, however, catch everything: the page design was a bit plain, and some labels could be clearer, but those are subjective improvements I could make later. The important part is that the functionality and the core analysis were correct.
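A consistency check of this kind can be automated by pulling numbers out of the generated prose and comparing them with the computed statistic. This sketch is my own illustration, not Manus’s method; its simple regex ignores signs and thousands separators (it would read “2,328” as 2 and 328).

```python
import re
from typing import List

def numbers_in(text: str) -> List[float]:
    """Extract plain decimal numbers such as 0.16 or 15 from prose."""
    return [float(m) for m in re.findall(r"\d+(?:\.\d+)?", text)]

def text_matches_stat(text: str, value: float, decimals: int = 2) -> bool:
    """True if the prose quotes `value` rounded to `decimals` places."""
    target = round(value, decimals)
    return any(abs(n - target) < 10 ** -(decimals + 1) for n in numbers_in(text))

summary = "Gold shows a weak positive correlation of 0.16 with inflation."
print(text_matches_stat(summary, 0.1612))  # True
print(text_matches_stat(summary, 0.21))    # False
```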

Step 7: Deployment and Result

Finally, Manus concluded the task by deploying the webpage to a shareable link. It actually launched a temporary web server in its cloud and gave me a URL (on a manus.app subdomain) where I could view the site live. It also offered to package the code (HTML/CSS/JS files) for download if I wanted to self-host. I clicked the provided link, and there it was: a live Gold & Inflation webpage, complete with navigation menu, interactive charts, and the analysis text. It looked as if a team of financial analysts and web developers had worked on it for days – yet Manus delivered it in under an hour.
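Manus’s hosting setup isn’t documented here, but the “temporary web server” step has a simple local analogue. The sketch below serves a folder of generated files with Python’s standard-library `http.server` on a free localhost port, fetches the page back, then shuts down. The folder and file contents are placeholders, and `http.server` is meant for previews, not production hosting.

```python
import tempfile
import threading
import urllib.request
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path
from typing import Tuple

def start_preview(site_dir: Path) -> Tuple[ThreadingHTTPServer, str]:
    """Serve site_dir on a free localhost port in a background thread."""
    handler = partial(SimpleHTTPRequestHandler, directory=str(site_dir))
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address[:2]
    return server, f"http://{host}:{port}/"

# Placeholder "generated site": a single HTML file in a temporary folder.
site = Path(tempfile.mkdtemp())
(site / "index.html").write_text("<h1>Gold & Inflation</h1>", encoding="utf-8")

server, url = start_preview(site)
with urllib.request.urlopen(url + "index.html", timeout=5) as resp:
    body = resp.read().decode("utf-8")
print(url, body)

server.shutdown()      # stop serve_forever()
server.server_close()  # release the socket
```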

You can view the live website here to see the end result yourself[54][55]. It features a clean layout with sections like “Gold vs S&P 500 Performance”, “Gold vs Commodities”, a mini gold-to-commodity calculator, and a “Research & Insights” write-up. All of this was generated by Manus from my single-paragraph prompt and a few follow-up nudges. For someone with no coding background, creating such a site would normally be unimaginable – but Manus made it possible, automating the end-to-end workflow: research, data analysis, coding, and deployment.

What This Example Shows:

This example highlights several important points about Manus AI:

- Manus can combine data retrieval, analysis, and coding in one continuous process. It fetched real data via APIs/web, analyzed it, and used the insights to generate content.

- It produced interactive visualizations and functional code without any manual programming from me. The charts and calculator on the site show Manus’s ability to create dynamic front-end features.

- The AI followed a rational, multi-step plan (which it made transparent to me), akin to how a developer or project team would approach the task.

- Manus caught certain errors and corrected itself (like re-fetching a price, fixing content to match data).

- The outcome wasn’t just a text summary or a code snippet; it was a complete, deployed product (a live webpage in this case) that I could use or share immediately.

- Perhaps most importantly, I didn’t have to know how to code or where to get the data. Manus handled the technical heavy lifting. My role was simply to describe what I wanted and then to review and tweak as needed.

For a non-coder with a creative idea or a specific need, this kind of AI agent is incredibly empowering. You could imagine using Manus to build a prototype app for your business idea, generate a personal website, analyze your spreadsheet data and make a dashboard, or draft a research report with charts – all without hiring developers or doing any programming yourself. It’s like having a super-talented generalist on call.

Of course, the example also hints at current limitations. Manus did a great job, but the design was basic (no fancy graphics or styling unless prompted), and it required me to specify the concept and review the final output. It’s not magic – you still need to provide guidance and creativity. Additionally, I had to verify the analysis it did; while it was correct about the correlation, you wouldn’t want to blindly trust every number without double-checking if it’s something mission-critical.

Interested in trying Manus? I have a few invite codes for fellow developers—drop a comment or DM if you’d like to join the private beta!

References

[1] What is Manus - DEV Community

[2] Another DeepSeek moment? General AI agent Manus shows ability to handle complex tasks - CNA

[3] Manus AI Launches Text-to-Video Feature to Compete with OpenAI and Google

[4] Manus (AI agent) - Wikipedia

[5] Manus (AI agent) - Wikipedia

[6] Chinese Team Unveils AI Agent, Manus - Pandaily

[8] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[9] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[10] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[11] Is China’s Manus a Game Changer for Agentic AI?

[12] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[13] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[14] Manus (AI agent) - Wikipedia

[15] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[16] Chinese Team Unveils AI Agent, Manus - Pandaily

[17] Chinese Team Unveils AI Agent, Manus - Pandaily

[18] Chinese Team Unveils AI Agent, Manus - Pandaily

[19] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[20] Another DeepSeek moment? General AI agent Manus shows ability to handle complex tasks - CNA

[21] Manus Access Codes Are Being Listed for Thousands - Business Insider

[22] Manus Access Codes Are Being Listed for Thousands - Business Insider

[23] I Tried Manus AI So You Don’t Have To — Here’s the Truth (And What It Built for Me) | by Anand | Jun, 2025 | Level Up Coding

[24] Another DeepSeek moment? General AI agent Manus shows ability to handle complex tasks - CNA

[25] Another DeepSeek moment? General AI agent Manus shows ability to handle complex tasks - CNA

[26] Overview of MANUS AI Agent. Company Background and Project Overview | by Astropomeai | Medium

[27] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[28] Another DeepSeek moment? General AI agent Manus shows ability to handle complex tasks - CNA

[29] What is Manus - DEV Community

[30] China's Manus AI 'agent' could be our 1st glimpse at artificial general intelligence | Live Science

[31] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[32] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[33] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[34] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[35] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[36] Paper Notes - From Mind to Machine: The Rise of Manus AI as a Fully Autonomous Digital Agent - DEV Community

[37] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[38] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[39] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[40] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[41] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[42] The Agentic Imperative Series Part 4 — Manus & AutoGen: Scaling Autonomy in Agentic Frameworks | by Adnan Masood, PhD. | Medium

[43] Paper Notes - From Mind to Machine: The Rise of Manus AI as a Fully Autonomous Digital Agent - DEV Community

[44] Overview of MANUS AI Agent. Company Background and Project Overview | by Astropomeai | Medium

[45] Overview of MANUS AI Agent. Company Background and Project Overview | by Astropomeai | Medium

[46] Overview of MANUS AI Agent. Company Background and Project Overview | by Astropomeai | Medium

[50] Gold & Inflation | Understanding Gold Prices During Inflation

[51] Gold & Inflation | Understanding Gold Prices During Inflation

[52] Gold & Inflation | Understanding Gold Prices During Inflation

[53] Gold & Inflation | Understanding Gold Prices During Inflation

[54] Gold & Inflation | Understanding Gold Prices During Inflation

[55] Gold & Inflation | Understanding Gold Prices During Inflation
