Product versions - Software AG webMethods API Gateway 10.15

Use Case Scenario

A customer runs Software AG webMethods API Gateway on a three-node Kubernetes cluster. Analysis shows that the API Gateway is heavily utilized during specific time windows but sees minimal traffic the rest of the time.

Currently, scaling the instances requires manual intervention by the platform administrator, who must constantly monitor the runtime’s health. This reactive approach has proven inefficient, so a more proactive solution is needed.

Solution: Enabling Autoscaling for SoftwareAG webMethods API Gateway in Kubernetes

Kubernetes offers robust autoscaling capabilities, and webMethods API Gateway can use the Horizontal Pod Autoscaler (HPA) to adjust its number of pods according to the HPA configuration. There are two ways to set this up.

Option 1: Basic CPU and Memory Monitoring using Metrics Server

The Metrics Server gathers resource metrics, such as CPU and memory utilization, from the kubelet on every node. It then exposes these metrics through the Kubernetes API server, where the HPA reads them to make scaling decisions.

Option 2: Crafting Custom PromQL Solutions (Prometheus + Prometheus Adapter + Metrics Server)

Option 2 introduces the Prometheus adapter, a tool designed to harness metrics collected by Prometheus for dynamic scaling decisions. This approach further extends the possibilities, enabling the creation of custom metrics to monitor API performance and facilitate the autoscaling of webMethods API Gateway.

By exploring these two options, you can tailor your autoscaling strategy to meet your specific needs and optimize the performance of webMethods API Gateway within your Kubernetes environment.

Prerequisites

- Kubernetes Environment (Minikube or a Cluster)

- Access to Github.com

- Access to Kubernetes via kubectl

- Helm Charts

Steps to follow - Exploring Option 1: SoftwareAG APIGateway with Metric Server

In this section, we will delve into the details of Option 1, focusing on how to implement it. Option 1 entails using the Metric Server in conjunction with SoftwareAG APIGateway.

The Metrics Server is a cluster add-on that collects CPU and memory utilization for pods and nodes. It tracks the API Gateway pods and, when combined with the Horizontal Pod Autoscaler (HPA), enables automatic scaling based on a predefined configuration.

Metrics Server offers (reference: the kubernetes-sigs/metrics-server GitHub repository, "Scalable and efficient source of container resource metrics for Kubernetes built-in autoscaling pipelines"):

• A single deployment that works on most clusters.

• Fast autoscaling, collecting metrics every 15 seconds.

• Resource efficiency, using 1 millicore of CPU and 2 MB of memory for each node in a cluster.

• Scalable support for clusters of up to 5,000 nodes.

It’s important to note that not all Kubernetes service providers include the Metric Server by default. Therefore, provisioning the Metric Server is necessary before proceeding with autoscaling configurations.

Configuring Metric Server

To set up the Metric Server in Kubernetes, follow these steps:

Check and Install Metric Server in Kubernetes

Step 1: Run the following command to list the pods in the “kube-system” namespace and check whether a Metric Server pod is already running.

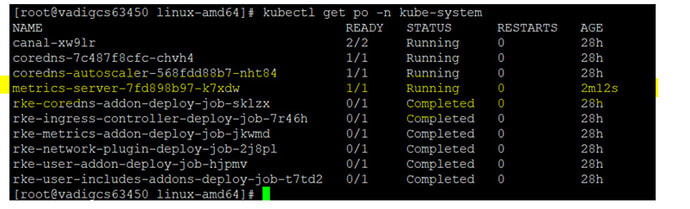

kubectl get pods -n kube-system

Step 2: If the Metric Server already exists in your Kubernetes cluster, skip the remaining steps in this section and continue with the Configuring External Elasticsearch section to proceed with the installation of webMethods API Gateway.

Step 3: Install the Metric Server by running the commands below. The first command applies the YAML configuration from the GitHub release, and the second lists the pods in the “kube-system” namespace to confirm the Metric Server’s deployment.

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

kubectl get po -n kube-system
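Instead of re-running the pod listing until the pod shows up, the rollout can be awaited. The following is a minimal sketch, assuming the upstream components.yaml, which creates a Deployment named metrics-server in the kube-system namespace; the function name wait_for_metrics_server is hypothetical.

```shell
# Hypothetical helper: block until the metrics-server Deployment has rolled out.
# Assumes the upstream components.yaml, which creates a Deployment named
# "metrics-server" in the "kube-system" namespace.
wait_for_metrics_server() {
  timeout="${1:-120s}"   # how long to wait before giving up
  kubectl rollout status deployment/metrics-server \
    --namespace kube-system --timeout="$timeout"
}

# Usage: wait_for_metrics_server 180s
```

On Minikube, enabling the bundled addon with `minikube addons enable metrics-server` is an alternative to applying the manifest.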

Step 4: Use the following commands to view node and pod resource usage statistics. If they return values, the Metric Server is working as expected.

kubectl top nodes

kubectl top pods

After installation, you can also verify that the Metric Server is enabled and registered by listing the API services in the cluster. The output should include an entry named v1beta1.metrics.k8s.io.

kubectl get apiservices
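Scanning the full list by eye can be replaced with a targeted check. The sketch below assumes the Metrics Server registers the APIService v1beta1.metrics.k8s.io and reads its Available condition; the function name metrics_api_available is hypothetical.

```shell
# Hypothetical helper: succeed only if the resource metrics API is registered
# and reports Available=True. The Metrics Server registers the APIService
# "v1beta1.metrics.k8s.io".
metrics_api_available() {
  status="$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')"
  [ "$status" = "True" ]
}

# Usage:
# if metrics_api_available; then echo "metrics API ready"; else echo "metrics API not ready"; fi
```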

Configuring External Elasticsearch

To set up an external Elasticsearch, we use Helm charts, which contain all the resource definitions necessary to run an application. If Helm is not yet installed, follow the "Installing Helm" guide in the Helm documentation for Minikube or your Kubernetes cluster. Once Helm is available, use the Elasticsearch chart from the GitHub repository linked below.

Step 1: Download the Elasticsearch Helm chart from GitHub: https://github.com/elastic/helm-charts/tree/main/elasticsearch

Step 2: In the values.yaml file, update the Elasticsearch image tag to 8.2.3, the Elasticsearch version recommended for webMethods API Gateway 10.15.

Step 3: Install Elasticsearch with the following command.

helm install <release-name> <path-to-elasticsearch-helm-chart>
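As an alternative to editing values.yaml, the image tag can be pinned on the command line. This is a sketch under assumptions: it relies on the chart exposing an imageTag value (as the elastic/helm-charts Elasticsearch chart does), and the function name, release name, and chart path are placeholders.

```shell
# Hypothetical helper: install the downloaded Elasticsearch chart and pin the
# image tag on the command line instead of editing values.yaml.
# Assumptions: the chart exposes an "imageTag" value; the release name and
# chart path passed in are placeholders chosen by the reader.
install_elasticsearch() {
  release="${1:?release name required}"
  chart_dir="${2:?path to the elasticsearch chart required}"
  helm install "$release" "$chart_dir" --set imageTag=8.2.3
}

# Usage: install_elasticsearch elasticsearch ./helm-charts/elasticsearch
```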

Configuring SoftwareAG API Gateway

To provision a SoftwareAG API Gateway instance in Kubernetes, follow these instructions. Replace any placeholders in the commands (credentials, file paths) with your actual values, and make sure you have the necessary files and configurations ready before you start. If you want to deploy the API Gateway to a namespace other than the default one, specify that namespace in each command.

Step 1: Create the secret to store the Software AG’s container registry credentials.

kubectl create secret docker-registry regcred --docker-server=sagcr.azurecr.io --docker-username=<username> --docker-password=<password> --docker-email=<email>

Step 2: Apply the API Gateway license by creating a ConfigMap from the license.xml file. Make sure the license file (license.xml) is in the current directory. The command below creates a ConfigMap named myapiconfigfile, the name referenced by the Deployment, and stores the license under the key licenseKey.xml so that it matches the subPath used in the volume mount.

kubectl create configmap myapiconfigfile --from-file=licenseKey.xml=license.xml

Step 3: Provision the SoftwareAG APIGateway by applying the provided deployment script. Customize the script to specify the APIGateway image you have available. Software AG’s official container repository is at https://containers.softwareag.com. You can log in with your empower credentials to access this repository.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-apigateway-deployment
  labels:
    app: "api-apigateway-deployment"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: api-apigateway-deployment
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
      name: api-apigateway-deployment
      labels:
        app: "api-apigateway-deployment"
    spec:
      # Registry secret created in Step 1, needed to pull the image
      imagePullSecrets:
        - name: regcred
      containers:
        - name: api-apigateway-deployment
          image: sagcr.azurecr.io/apigateway:10.15
          imagePullPolicy: IfNotPresent
          env:
            - name: apigw_elasticsearch_hosts
              value: elasticsearch-master-0.elasticsearch-master-headless.default.svc.cluster.local:9200,elasticsearch-master-1.elasticsearch-master-headless.default.svc.cluster.local:9200,elasticsearch-master-2.elasticsearch-master-headless.default.svc.cluster.local:9200
            - name: IS_THREAD_MIN
              value: "100"
            - name: IS_THREAD_MAX
              value: "150"
          ports:
            - containerPort: 5555
              name: 5555tcp01
              protocol: TCP
            - containerPort: 9072
              name: 9072tcp01
              protocol: TCP
          resources:
            requests:
              cpu: 200m
              memory: 500Mi
          livenessProbe:
            failureThreshold: 4
            initialDelaySeconds: 300
            periodSeconds: 10
            successThreshold: 1
            tcpSocket:
              port: 5555
            timeoutSeconds: 2
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /rest/apigateway/health
              port: 5555
              scheme: HTTP
            initialDelaySeconds: 300
            periodSeconds: 10
            successThreshold: 2
            timeoutSeconds: 2
          volumeMounts:
            # Name of this mount
            - name: licensekey
              # Path in the file system of the container running inside the pod
              mountPath: /opt/softwareag/IntegrationServer/instances/default/config/licenseKey.xml
              subPath: licenseKey.xml
      volumes:
        - name: licensekey
          # Referring to the ConfigMap volume that holds the license key file
          configMap:
            name: myapiconfigfile

kubectl apply -f <path-to-deployment-yaml>

Step 4: Run the commands below to check whether the API Gateway is ready. They list the pods, services, and endpoints in the current namespace, so you can verify that the API Gateway pods are running and ready.

kubectl get pods

kubectl get svc

kubectl get endpoints
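Because the probes in the Deployment use an initial delay of 300 seconds, the pod stays unready for several minutes. Rather than polling by hand, the wait can be scripted. A minimal sketch, assuming the app label from the Deployment manifest; the function name and the 600-second default timeout are illustrative choices.

```shell
# Hypothetical helper: wait until every API Gateway pod reports Ready.
# The label selector matches the "app" label set in the Deployment manifest;
# the default timeout leaves room for the 300-second initial probe delay.
wait_for_gateway() {
  timeout="${1:-600s}"
  kubectl wait pod --selector=app=api-apigateway-deployment \
    --for=condition=Ready --timeout="$timeout"
}

# Usage: wait_for_gateway 900s
```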

Configuring Horizontal Pod Autoscaler

To set up HPA in Kubernetes, follow these steps:

Step 1: Save the following HPA specification as hpa.yaml and apply it.

apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: api-apigateway-deployment-scaling
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: api-apigateway-deployment # your API Gateway Deployment
  minReplicas: 1
  maxReplicas: 4
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50

kubectl apply -f hpa.yaml
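For quick experiments, a comparable CPU-based HPA can be created imperatively with kubectl autoscale instead of a manifest. This is a sketch, not a replacement for the hpa.yaml above: it assumes the --cpu-percent flag accepted by current kubectl releases, and the function name is hypothetical.

```shell
# Hypothetical helper: create a CPU-based HPA imperatively with kubectl autoscale.
# Note: without --name, the HPA created this way takes the Deployment's name,
# not "api-apigateway-deployment-scaling".
create_cpu_hpa() {
  deployment="${1:-api-apigateway-deployment}"
  kubectl autoscale deployment "$deployment" --cpu-percent=50 --min=1 --max=4
}

# Usage: create_cpu_hpa api-apigateway-deployment
```

The declarative manifest is usually the better choice for anything kept in version control, since it also allows memory or custom metrics to be added later.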

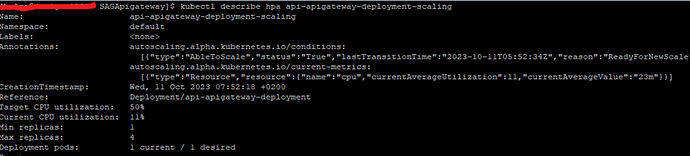

Step 2: To check the status of the HPA and see how it’s performing, run the following command.

kubectl get hpa

Step 3: After a few minutes, HPA starts reporting CPU usage. If the average CPU utilization across the pods, measured relative to the CPU request of each pod, exceeds 50%, HPA automatically scales up the pods of the specified deployment. To get detailed information about the HPA and its scaling behavior, run:

kubectl describe hpa api-apigateway-deployment-scaling
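To see why a given utilization leads to a given replica count, the calculation documented for the HPA can be reproduced by hand: desired = ceil(currentReplicas * currentUtilization / targetUtilization), clamped to the configured minimum and maximum. With the 200m CPU request from the Deployment, the 50% target corresponds to an average of about 100m per pod. The sketch below is illustrative only; it ignores the controller's tolerance band and stabilization windows, and the function name is hypothetical.

```shell
# Sketch of the replica calculation documented for the HPA:
#   desired = ceil(currentReplicas * currentUtilization / targetUtilization)
# clamped to [minReplicas, maxReplicas]. Ignores the controller's tolerance
# band and stabilization windows. Function name is hypothetical.
desired_replicas() {
  current="$1"; utilization="$2"; target="$3"
  min="${4:-1}"; max="${5:-4}"
  # Integer ceiling division
  desired=$(( (current * utilization + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}

# Usage: desired_replicas 1 90 50    # one pod at 90% with a 50% target prints 2
```

For example, two pods averaging 150% utilization would compute to six replicas, which the maxReplicas value of 4 then caps.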

Load Generation

Let us generate some load to trigger the autoscaling behavior. We use Apache JMeter, an open-source, pure-Java tool for performance and load testing of web applications. The test setup consists of:

- A simple Pet Store Swagger API - https://petstore.swagger.io/

- JMeter as the load generation tool (available from the Apache JMeter download page)

Step 1: Download and install JMeter from the official Apache JMeter download page.

Step 2: Import the JMeter test plan and make sure to configure the endpoint to the target you want to test. For example, you can use the simple Pet store Swagger API at https://petstore.swagger.io/.

Step 3: Execute the load test using JMeter. As the test runs, the number of replicas (pods) in your Kubernetes deployment increases to handle the high volume of traffic.
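For longer or heavier runs, JMeter is commonly started without its GUI. The sketch below uses JMeter's non-GUI options (-n for non-GUI mode, -t for the test plan, -l for the results file); the function name and file names are placeholders, not files provided with this article.

```shell
# Hypothetical helper: run a JMeter test plan in non-GUI mode.
# -n = non-GUI mode, -t = test plan file, -l = results log file.
# The file names are placeholders chosen by the reader.
run_load_test() {
  plan="${1:?path to .jmx test plan required}"
  results="${2:-results.jtl}"
  jmeter -n -t "$plan" -l "$results"
}

# Usage: run_load_test petstore-plan.jmx run1.jtl
```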

The following commands show the HPA status while the JMeter high-volume test case runs and after it finishes.

To check the current HPA status during and after load testing:

kubectl get hpa

To get detailed information about the HPA and its scaling behavior after the load testing is completed.

kubectl describe hpa

After the load testing is completed, you should notice that the API Gateway automatically scales down to its minimum configured replicas (e.g., 1) when the traffic load decreases.
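Scale-down is not immediate: by default the HPA waits through a stabilization window of 300 seconds before removing pods. To follow the replica count over that period, the HPA status can be sampled at intervals. A minimal sketch, assuming the HPA name from hpa.yaml; the function name, sample count, and interval are illustrative.

```shell
# Hypothetical helper: print the HPA's current/desired replica counts a fixed
# number of times, pausing between samples.
watch_hpa_replicas() {
  hpa="${1:-api-apigateway-deployment-scaling}"
  samples="${2:-10}"     # number of samples to take
  interval="${3:-15}"    # seconds between samples
  i=0
  while [ "$i" -lt "$samples" ]; do
    kubectl get hpa "$hpa" \
      -o jsonpath='{.status.currentReplicas}/{.status.desiredReplicas}{"\n"}'
    i=$((i + 1))
    if [ "$i" -lt "$samples" ]; then sleep "$interval"; fi
  done
}

# Usage: watch_hpa_replicas api-apigateway-deployment-scaling 40 15
```

For interactive use, `kubectl get hpa --watch` gives a similar view without a script.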

Conclusion

In this article, we’ve explored how to implement autoscaling for webMethods API Gateway using the Metrics Server. The same approach can be applied to Microservices Runtime or Integration Server (please give it a try!). We’ve just scratched the surface, as there are more options to discover in the next installment. Stay tuned for our upcoming article, where we’ll delve into these alternatives and further enhance your Kubernetes expertise.

Useful links | Relevant resources

webMethods Containerization and Orchestration Guide

Helm Charts: Deploying webMethods Components in Kubernetes
