In this blog post, I've shared my approach to solve this low level design problem, Design a Cache system.

Code for this can be found on my Github repo:

https://github.com/the-arcade-01/golang-low-level-design/tree/main/cache-system

Requirements

Our cache should support:

- Basic operations:

-

Put(key, value, ttl): Add a key-value pair with a time-to-live (TTL). -

Get(key): Retrieve the value for a key if it exists and hasn't expired. -

Delete(key): Remove a key manually.

-

- Eviction Policies:

-

LRU(Least Recently Used) -

LFU(Least Frequently Used) -

FIFO(First In First Out) - Evict entries when the cache reaches its maximum capacity.

-

- TTL Expiry:

- Automatically remove keys that have expired based on their TTL.

- Code should be extensible and adhere best practices.

Code structure

First of all, defining the structure of the code. I follow this folder structure:

├── cmd

│ └── main.go

├── go.mod

└── internal

├── cache

│ └── cache.go

├── evictionpolicy

│ ├── evictionpolicy.go

│ ├── fifo.go

│ ├── lfu.go

│ └── lru.go

└── models

└── node.go

-

cmdentry point for our main app. -

cachefolder, we define the cache struct and its operations. -

evictionpolicy(could be named better xD), contains interface declaration with its implementations. -

models, contains common models.

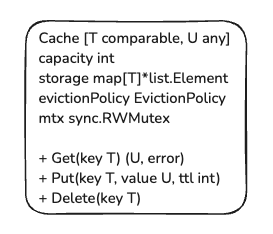

Now, the classes (or I should say structs, as Golang formally don't have OOP definitions)

-

Cachestruct holds and expose all the public methods which are mentioned in requirements.

-

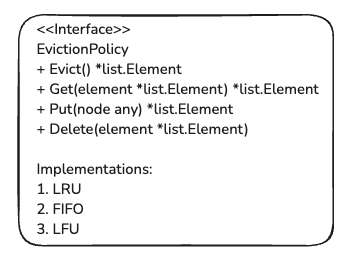

EvictionPolicy, since we've multiple algorithms which we can implement, so our code should be extensible and allow us to choose which algorithm we would like to have in our cache. Hence, we created an interface holding all the methods which are necessary in defining the algorithms.

In a system we can have different instances of cache running with different algorithms. This is an example of Factory Pattern.

Using a constructor we can create different eviction policies.

func NewEvictionPolicy(policy PolicyType) (EvictionPolicy, error) {

switch policy {

case LRU:

return newLRUCache(), nil

case FIFO:

return newFIFOCache(), nil

case LFU:

return newLFUCache(), nil

}

return nil, fmt.Errorf("policy not found")

}

-

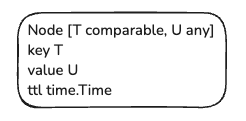

Nodeis just a data model which represents a key-value pair,

Code explanation

Before diving into the code part, if you're not familiar with the algorithms mentioned above, please read about them then it will be easier for you to understand the code, though I'll explain my things are required but I won't explain all the algorithms. Plus a basic understanding of Generics is also required.

Our Cache storage first of all has a variable capacity which tells us the maximum count of keys which it can store. Then we've the eviction policy which decides which logic we apply to remove the keys, when the size of cache exceeds the capacity. We also have storage map which stores the keys to their respective data pointers. This gives us O(1) lookup & insert.

- Why are we using

*list.Elementas a type for the value?

Because the implementation of the algorithms mentioned above requires a doubly linked list to maintain the specific order of the elements. Eg: in LRU algorithm, the elements which are least recently used will be present at the bottom end of the list, while the ones which are frequently used will be towards the front.

type Cache[T comparable, U any] struct {

mtx sync.Mutex

capacity int

expireInterval int

storage map[T]*list.Element

evictionpolicy evictionpolicy.EvictionPolicy

}

Now, let's look at the individual methods exposed by the Cache struct.

-

GETmethod logic- If the key is present in the map, then we perform the re-ordering of that element in the eviction list (eg: in case of LRU, the element is moved to front, in case of LFU, its frequency is increased and is moved to new frequency list).

- Before returning we also check whether its expired or not which is a simple time check

node.ttl.Before(time.Now()). - If not found, then we return a zero value with an error not found.

func (c *Cache[T, U]) Get(key T) (U, error) {

c.mtx.Lock()

defer c.mtx.Unlock()

if element, ok := c.storage[key]; ok {

element = c.evictionpolicy.Get(element)

c.storage[key] = element

if node, ok := element.Value.(*models.Node[T, U]); ok {

if !node.IsExpire() {

return node.GetValue(), nil

}

}

}

var zeroValue U

return zeroValue, fmt.Errorf("key not found")

}

-

Putmethod logic- If the key is present in the storage, then we update its value and TTL and then again perform the re-ordering of the element.

- If the key is not present, in that case, first we check if the size of cache is full or not. If full, then we need to perform eviction based on the policy (eg: in case of LRU, the last element of the list is removed, in case of FIFO, the first element is removed). We delete the element from both storage map and eviction list.

- Then we insert in new key value in storage.

func (c *Cache[T, U]) Put(key T, value U, ttl int) {

c.mtx.Lock()

defer c.mtx.Unlock()

if c.capacity == 0 {

return

}

if element, ok := c.storage[key]; ok {

element.Value.(*models.Node[T, U]).UpdateValue(value).UpdateTTL(ttl)

element = c.evictionpolicy.Get(element)

c.storage[key] = element

} else {

if c.capacity <= len(c.storage) {

del := c.evictionpolicy.Evict()

if del != nil {

delete(c.storage, del.Value.(*models.Node[T, U]).GetKey())

}

}

c.storage[key] = c.evictionpolicy.Put(models.NewNode(key, value, ttl))

}

}

-

Deletemethod We delete the given key from both the eviction list and storage map.

func (c *Cache[T, U]) Delete(key T) {

c.mtx.Lock()

defer c.mtx.Unlock()

c.delete(key)

}

func (c *Cache[T, U]) delete(key T) {

if element, ok := c.storage[key]; ok {

c.evictionpolicy.Delete(element)

delete(c.storage, key)

}

}

Now, let's look at the eviction policy interface and LRU cache implementation.

type EvictionPolicy interface {

Get(element *list.Element) *list.Element

Evict() *list.Element

Put(node any) *list.Element

Delete(element *list.Element)

}

-

Getmethod is responsible for re-ordering the element to its correct position. -

Evictcontains logic for evicting the element if the size of cache reaches its limit. -

Putinserts the data into the list. -

DeleteI guess deletes the element, xD.

An example of LRU implementation looks like this,

type LRUCache struct {

list *list.List

}

func (c *LRUCache) Get(element *list.Element) *list.Element {

c.list.MoveToFront(element)

return element

}

func (c *LRUCache) Evict() *list.Element {

last := c.list.Back()

if last != nil {

c.list.Remove(last)

}

return last

}

func (c *LRUCache) Put(node any) *list.Element {

return c.list.PushFront(node)

}

func (c *LRUCache) Delete(element *list.Element) {

if element != nil {

c.list.Remove(element)

}

}

Further improvements

-

Optimizing the TTL based keys removal

- Currently we loop through all the keys in map and check their TTL which is

$O(n)time complexity and is not efficient.

func (c *Cache[T, U]) expire() { ticker := time.NewTicker(time.Duration(c.expireInterval) * time.Second) fmt.Printf("Cache expire interval started, interval: %v\n", c.expireInterval) for range ticker.C { c.mtx.Lock() for key, value := range c.storage { if value.Value.(*models.Node[T, U]).IsExpire() { c.delete(key) } } c.mtx.Unlock() } }- We might optimize this by maintaining a heap of (keys, TTL), this way we won't have to loop for all the keys, and only those keys will be removed which have expired, just need to periodically check the top of the heap, time complexity of heapify

$O(logn).

- Currently we loop through all the keys in map and check their TTL which is

Storage map is initialized in Cache struct itself which can be moved out as a separate interface.

Conclusion

Thanks for reading it, and if you've any suggestions or find any bugs in my code or want to share your own approach, feel free to open an Issue on my github repo with the format below:

Title: "Approach: <Problem Name>"

Example: "Approach: Design a Cache System"

Or you can leave any comments here.

Thanks for reading it :).

Top comments (2)

Thanks for the article. I enjoyed reading it.

Glad to hear that