It was a late afternoon, and six of us were sitting in the office, unwinding over a few beers. It had been a great day: one of my colleagues had just deployed a major feature, while my team had finally squashed a stubborn bug that had been haunting us for days.

As we chatted about the day’s wins, someone threw out an interesting question: which do we prefer in our daily work, building new features, refactoring, or fixing bugs?

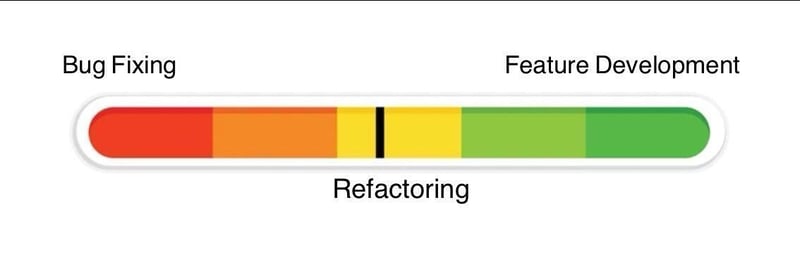

For some reason, refactoring didn’t spark much debate. But when it came to feature development versus bug fixing, the room was split right down the middle. That conversation left me picturing software engineering preferences as a spectrum: bug fixing at one end, feature development at the other, and refactoring somewhere in between.

When AI Meets DRY

Personally, I lean toward the bug-fixing side of that spectrum, so when I came across GitClear’s analysis on AI Copilot Code Quality, it immediately caught my attention.

The key takeaway? AI-powered coding assistants boost developer productivity, but at a cost. The analysis pointed out that these tools often suggest code that already exists somewhere in the codebase, and engineers accept these suggestions without checking whether the same logic has already been implemented elsewhere. In other words, AI is subtly encouraging code duplication, which flies in the face of the DRY (Don’t Repeat Yourself) principle.
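To make the duplication problem concrete, here is a hypothetical Python sketch; every function name is illustrative and not taken from GitClear’s analysis. The first two functions repeat the same email check, the kind of near-copy an assistant might suggest when an equivalent helper already exists. The DRY version keeps a single definition and also tightens it to reject an empty local part. Because there is only one copy, every caller picks up that fix at once, whereas each duplicated copy would need the same edit, and any copy that gets missed becomes a bug.

```python
# Hypothetical illustration of the DRY principle; all names are made up.

# --- Duplicated version: the same rule is copied into two call sites. ---
def can_register(email: str) -> bool:
    return "@" in email and "." in email.split("@")[-1]

def can_update_contact(email: str) -> bool:
    # Near-copy of the check above: a fix applied to one copy
    # does not reach the other unless someone remembers to repeat it.
    return "@" in email and "." in email.split("@")[-1]


# --- DRY version: one helper, reused by every call site. ---
def is_valid_email(email: str) -> bool:
    """Single (deliberately simplistic) definition of a valid email."""
    local, sep, domain = email.partition("@")
    # Require a non-empty local part, an "@", and a dot in the domain.
    return bool(local) and bool(sep) and "." in domain

def can_register_dry(email: str) -> bool:
    return is_valid_email(email)

def can_update_contact_dry(email: str) -> bool:
    return is_valid_email(email)
```

With the duplicated version, `can_register("@example.com")` still returns `True`; with the DRY version, both `can_register_dry` and `can_update_contact_dry` reject it, because the stricter rule lives in one place.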

This is driven mainly by the fact that engineering productivity is still largely measured by lines of code written, number of commits, or features delivered, metrics that completely overlook the long-term maintenance costs that new code introduces.

We already know that duplicated code leads to more bugs, and more bugs mean more time spent fixing issues instead of innovating. So while AI might make us feel more productive in the short term, it could be setting us up for a future where maintenance and defect remediation become our primary job.

The analysis even makes this prediction:

...in the coming years, we may find "defect remediation" as the leading day-to-day developer responsibility.

As someone who enjoys bug fixing and refactoring, I don’t find that prospect too bad, but from a software quality perspective, it’s a worrying trend.

Measuring What Matters

The analysis references a well-known quote from Peter Drucker:

What gets measured gets done.

It then continues:

There is a great hunger for more developer productivity. If "developer productivity" continues being measured by "commit count" or by "lines added," AI-driven maintainability decay will continue to expand.

As an Engineering Manager, I see our core software engineering principles (DRY, KISS, YAGNI) as fundamentally designed to keep long-term maintenance costs low.

To break this downward spiral, we need to rethink how we measure developer impact. Instead of focusing purely on velocity, we should define metrics that reflect an engineer’s contribution to long-term maintainability.

Ironically, our senior engineers have been telling us this for years. It turns out we needed a productivity multiplier like AI to fully understand the consequences of ignoring their advice.
