When users browse the web, they want to know that the content they are reading is reliable and trustworthy. In 2009, facebook launched the “share” button for publishers which showed analytics on articles for how many times the article has been shared on facebook. This was quickly followed by twitter with their own “tweet” button in 2010. And for the next few years, several more “share” buttons popped up like one from Pinterest for “pinning”. The key reason for publishers to adopt these “share” buttons is to provide a sense of trust back to the visitor on the site that others have read and found the content useful. It is the herd mentality that if many before you have read this then something must be right here.

deletemydata.io aims to offer a single reliable place on the web to find out how to delete anything. In order to increase reliability and the trust factor among visitors, I wanted to adopt the same growth strategy - show a live counter of users who have found the content valuable. And they tell content is useful by clicking a simple Yes/ No button for Was this helpful? At the bottom of the page.

In this article, I’m going to share how I implemented this live counter using my existing tech stack leveraging FaunaDB in a simple and efficient manner. To start with, it will help to understand deletemydata.io's tech stack first.

deletemydata.io tech stack

The three pillars of my stack are:

- Netlify

- React-Static

- Imgix - Hosting images

Netlify is the best platform I’ve seen to date to build websites if you are a developer. React-Static is a static site generator that adopts JAMStack principles. JAMStack is the terminology used for pre-rendering files and serving them via a CDN without the need for having a backend server. It has a lot of advantages to the traditional way of using servers to build and render the same page over and over again.

Options For Implementing A Counter

There are several ways to implement a live counter. Some of the common ways are:

- Using the facebook share toolbar i mentioned above.

- Using redis and update

With the fb share toolbar, it's extremely simple to implement. But you don’t have control over the design of the toolbar itself and you’d need to share data of your visitors with facebook. This opens my site up to support GDPR and CCPA legislations for a user.

With redis, you’ll have control over the design unlike the toolbar. When you are setting up a new system, it's a lot of time consuming operational work - evaluating between GCP and AWS., opening up the system to internet access, adding a security layer on top is not trivial so that it's not abused etc.

There was this third option I ran into that was more friendly to my existing stack - FaunaDB. Although it was something I wasn’t familiar with early, on reading about Netlify’s add-on support for FaunaDB and its support for temporality natively, I decided it was worth looking into.

- Using FaunaDB

What's temporality

Temporality is the concept of offering retention for a piece of data. FaunaDB offers this functionality by supporting ttl (time to live) for each document you create. So now the collection is simply a journal with time stamped entries leveraging FaunaDB’s native ability to enforce retention on it. Each entry would look similar to the one below:

| id | record | ttl |

|---|---|---|

| 1 | {pageid: xxxxxx} | 30 days |

The record above is the document that would be added. id and ttl are illustrated just to show how temporality would work.

I’d like to simply provide the ability to tell my users - how many before you have found the info reliable and have deleted this account in the last month. So, if for each page on the site, I have entries for users who find the page useful with an entry timestamp and combined that with a retention period of a month, I technically should be able to get # of users who have found this page useful in the last month.

This support was quite important for deletemydata.io. Any time you have content on a site, it’s important to keep it relevant. As a new user it gives me more confidence in the site when I know that the information is not stale. In this case, we share metrics showing that several others have deleted the same account you are looking for very recently.

With the options considered, FaunaDB had more to offer than the rest. It showed a lot of promise for me to quickly try out a POC to validate.

Getting Started with Netlify and Fauna

There are several resources out there for integrating FaunaDB with your netlify app. I’ll link the ones I used at the bottom.

Step 1: Install netlify cli

npm install netlify-cli -g

Step 2: Create fauna db instance for the site

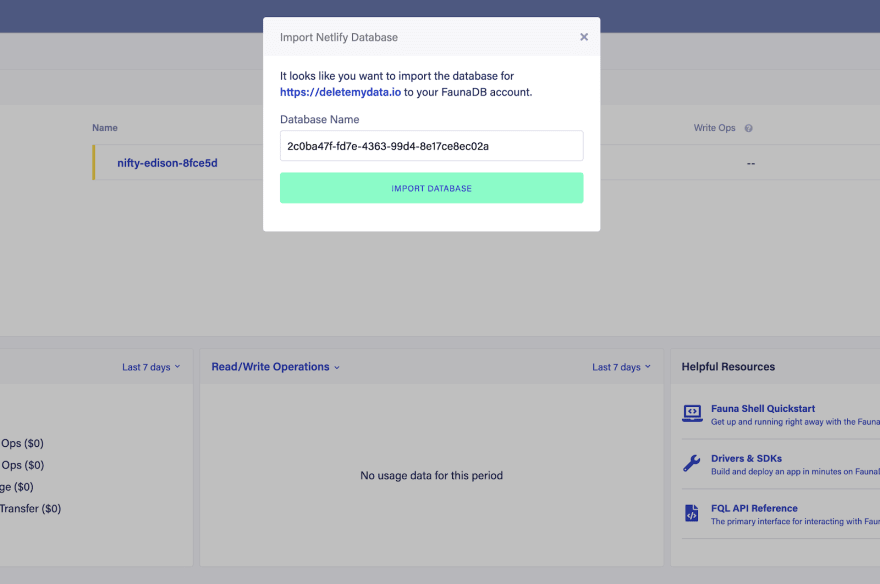

netlify addons:create fauna

Add-on "fauna" created for nifty-8fce5d

Step 3: Create account with fauna

netlify addons:auth fauna

Opening fauna add-on admin URL:

https://dashboard.fauna.com/#auth={auth_token}

Fauna has an Oauth integration with Netlify. This is nice since you don’t have to create another account and can just sign in with Netlify.

Once you authorize it, netlify will “import” a db for you into your fauna account.

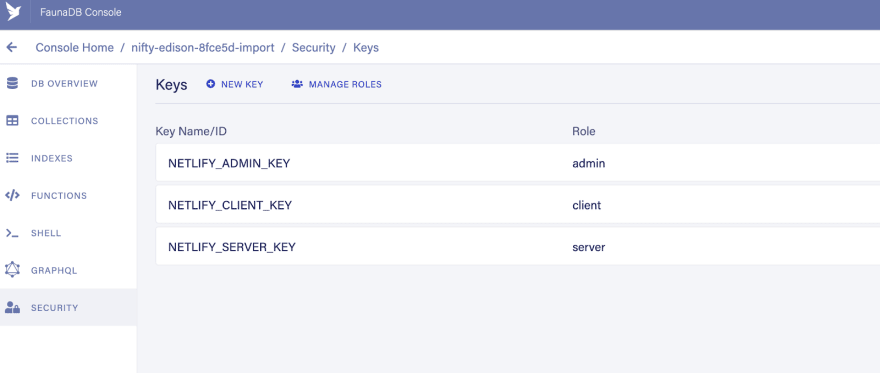

Although nothing is present in your db, you’ll have keys setup for access via the netlify app.

These keys will be injected automatically by netlify as an addon env variable. You can see this when you netlify dev command in your local environment.

netlify dev

◈ Netlify Dev ◈

◈ Injected addon env var: FAUNADB_ADMIN_SECRET

◈ Injected addon env var: FAUNADB_SERVER_SECRET

◈ Injected addon env var: FAUNADB_CLIENT_SECRET

Before diving too deep into how we’ll write code in netlify to talk to fauna, I wanted to get a feel for fauna as a language, semantics and how this would look like in fauna. Fauna shell was an awesome place for that. You can see the fauna shell in the dashboard on the web or have it run locally.

Architecture

Each page in deletemydata.io has a unique slug. For a live counter, we want to have a counter per page that will also take into account that each counter lasts only for 30 days. 30 days is arbitrary, not too short to keep the counter values low and not too long to give the user a bad impression for stale content.

While I share with you the structure of the db in fauna, I’ll also share how I used the fauna shell to create and test this at the same time.

Data Model

Single table with a single field - pageid containing a ttl of 30days for each entry.

We’ll call this collection (or table) deletes:

CreateCollection({ name: "deletes" }

We want the client to do two things:

- Create an entry

- Fetch the count

If you want to add a document into the collection, it's pretty straightforward. But we also want to have a ttl on this entry so that temporality can take effect

Create(Collection("deletes"), {

data: {

pageid: "test-1"

},

ttl: TimeAdd(Now(), 30, "days")

}

Each entry is a counter by itself. This will suffice for the first use case assuming FaunaDB adheres to its ttl for the document.

In order to support, fetching per pageid, we need to create an index for faster lookup.

CreateIndex({

name: "deletes_by_pageid",

source: Collection("deletes"),

unique: false,

terms: [{ field: ["data", "pageid"] }]

})

So now when you issue a count query for the pageid, we’ll get back the count of existing documents that match this value

Count(Match(Index("deletes_by_pageid"), "test-1"))

Note that using a count function is a risky proposition since if you have a large set of documents you could exceed the transaction limit of 30 seconds. It works a good starting point given that all documents are short lived to only be alive for 30 days.

After a few tests on documents with shortened ttls, there was enough confidence that this would work for this use case. One concern that might come with this is how fast it will count since we are creating a new document for each page id per feedback (this is how we know a user has found this valuable). But since we have an index on this document for the field, lookups were quite fast.

Netlify function

Now that we were able to test how things will look like with FaunaDB, I moved to implement the same with the app. In netlify, per JAMStack principles, although you don’t have a backend, you have access to run serverless lambda functions that your client can call.

Creation Flow

Here’s how the data flow for creation looked like

User ===> Clicks feedback-YES ===> Call deletes-create ===> Create a document

Client Code:

const faunadb = require('faunadb')

/* configure faunaDB Client with our secret */

const q = faunadb.query

const client = new faunadb.Client({

secret: process.env.FAUNADB_SERVER_SECRET

})

/* export our lambda function as named "handler" export */

exports.handler = (event, context, callback) => {

/* parse the string body into a useable JS object */

const data = JSON.parse(event.body)

console.log('Function `deletes-create` invoked', data)

const item = {

data: data,

ttl: q.TimeAdd(q.Now(), 30, "days")

}

/* construct the fauna query */

return client

.query(q.Create(q.Collection("deletes"), item))

.then(response => {

console.log('success', response)

/* Success! return the response with statusCode 200 */

return callback(null,{

statusCode: 200,

body: JSON.stringify(response)

})

})

.catch(error => {

console.log('error', error)

/* Error! return the error with statusCode 400 */

return callback(null,{

statusCode: 400,

body: JSON.stringify(error)

})

})

}

Counter Flow

During rendering, the page will make a call to fetch the count from fauna.

Client Code:

const faunadb = require('faunadb')

const q = faunadb.query

const client = new faunadb.Client({

secret: process.env.FAUNADB_SERVER_SECRET

})

/* export our lambda function as named "handler" export */

exports.handler = (event, context, callback) => {

/* parse the string body into a useable JS object */

console.log("Function `deletes-count` invoked")

if(event && event.queryStringParameters && event.queryStringParameters.pageid) {

/* construct the fauna query */

return client.query(q.Count(q.Match(q.Index("deletes_by_pageid"), event.queryStringParameters.pageid)))

.then((response) => {

console.log("success", response)

/* Success! return the response with statusCode 200 */

return callback(null, {

statusCode: 200,

body: JSON.stringify(response)

})

}).catch((error) => {

console.log("error", error)

/* Error! return the error with statusCode 400 */

return callback(null, {

statusCode: 400,

body: JSON.stringify(error)

})

})

}

return callback(null, {

statusCode: 400,

body: JSON.stringify("No query parameter pageid found")

})

}

Production

Since the launch of the two functions, the response times are under 20ms for both creation and count queries. Several pages have already been counted several hundred times as being relevant by users. Here is a video of this in production: https://www.youtube.com/watch?v=AdTN0KYNz4A

Conclusion

FaunaDB is incredibly easy to use with netlify and simple to integrate with. It just took little over half a day to get this to production with ease. I’m sure that this architecture will need to evolve as pages gain traction to keep meeting strict SLAs. One way to do that will be to pre-aggregate values and store them. I’m surprised that this is able to perform as well without a cache in front of it. It’s awesome to see databases support temporality natively out of the box. It is such a time saver that goes great with such an expressive, easy to read programming language.

Resources:

- Announcing the FaunaDB Add-on for Netlify

- netlify/netlify-faunadb-example: Using FaunaDB with netlify functions

- FaunaDB Shell

Top comments (0)