Table of Contents

- Preface

- Introduction (Tools | An idea)

- Docker-Compose & Dockerfiles

- Create test-docker-compose.yml

- Pytest Plugin

- Define conftest.py

- Create Tests Module

- Run tests

Preface

Hi! This is my first article on dev.to. Previously I wrote Tutorials on Medium (link must be somewhere in profile). Today I want to share a guide on how to set up integration tests for a bunch of services. Last month I worked on a similar task and for some members of the team the result looked like something new. I thought that maybe it would make sense to write a quick guide about it.

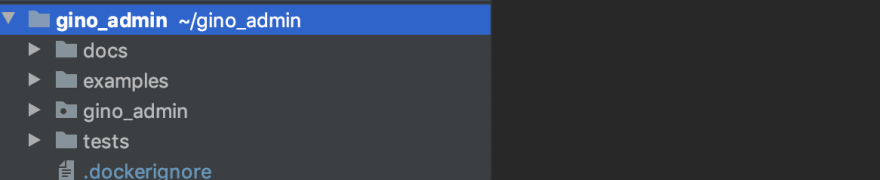

Besides, I really need to write tests for https://github.com/xnuinside/gino-admin. Of course, I need a set of different tests, but today I will prepare a set up for tests, that will check examples are run correctly with new changes in "Gino Admin" code.

Introduction

In this guide I will use:

- Python

- pytest

- pytest-docker-compose

- requests (we could easily use an asyncio client such as httpx or aiohttp, but there is no point here: I will not add any concurrency to the tests in this tutorial, so they run one by one)

- docker & docker compose

Main idea:

You have a project with several microservices (or services; it does not matter in this context) and a DB, and you need to run tests on this infrastructure to be sure that the components work together.

My Demo Project setup:

For the tutorial I will take fastapi_as_main_app from the Gino Admin examples, because it contains a web app on FastAPI, an admin panel and a PostgreSQL DB. The source code of this tiny project is here: https://github.com/xnuinside/gino-admin/tree/master/examples/fastapi_as_main_app

Tutorial

To begin with, we need two things:

- bring up & run our services

- make sure that they can see each other and connect

Docker-Compose

If in 2020 you still haven't tried docker-compose, just google some tutorials and give it a chance. It is really irreplaceable for backends with several services, at least in dev and test environments.

To bring everything up and run it, we will use Docker Compose, as you might have guessed.

Before starting work on the docker-compose.yml file, you need to prepare Dockerfiles for the services. First of all, let's take a look at my source structure:

It would be a bad idea to mix the 'take & play' examples with tests, so I will put all the files needed for tests inside the tests/ dir.

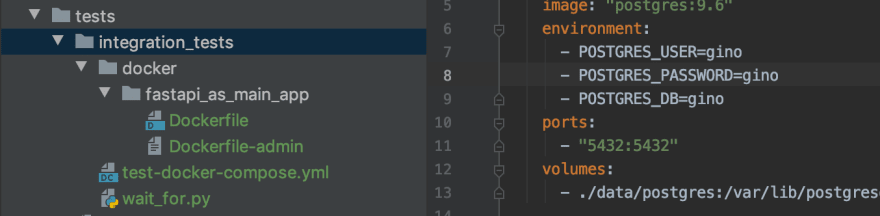

Let's create an integration_tests/ folder and, inside it, a docker/ folder where we will store all the Dockerfiles used for the integration tests. I will create two Dockerfiles, one for the Main App and one for the Admin Panel, which gives this structure in the tests folder:

Docker context

Now I need to pay attention to the Docker context. The Dockerfiles need access to the examples/ folder, and Docker does not allow access to any paths outside the build context, so my Docker context will be the main gino_admin/ folder that contains 'examples'. So when I write the Dockerfiles, I keep in mind that COPY paths are relative to the 'gino_admin' folder, not to the 'docker/' directory where the Dockerfiles are placed.

In my case there will be two Dockerfiles, one for the admin panel and one for the main app. PostgreSQL will run from the official image.

Dockerfiles

Pretty simple Dockerfiles: install the requirements, copy the source code, run.

For the admin panel I also need to copy the gino_admin sources and install from them inside the image, because this is what I test: the current code, not releases from PyPI:

Create test-docker-compose.yml

Let's create a test-docker-compose.yml file with all the services needed for the tests.

Go into tests/integration_tests/ and create test-docker-compose.yml. Pay attention to the context and dockerfile paths.

Our test-docker-compose.yml will be:

I need to set DB_HOST=postgres because the app code reads it to decide which PostgreSQL host to connect to: inside the docker-compose cluster the host is postgres, otherwise it is localhost.
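The app code that reads this variable isn't reproduced in this article, so here is only a hypothetical sketch of the idea: take the host from DB_HOST and fall back to localhost outside the cluster. The user, password and database defaults in build_dsn are placeholders, not the example's real settings.

```python
import os


def get_db_host(default: str = "localhost") -> str:
    """Return the PostgreSQL host.

    Inside the compose cluster DB_HOST=postgres (the service name) is set;
    on a developer machine the variable is absent and we fall back to localhost.
    """
    return os.environ.get("DB_HOST", default)


def build_dsn(user: str = "postgres", password: str = "postgres",
              database: str = "gino", port: int = 5432) -> str:
    # HYPOTHETICAL defaults: replace them with the credentials from your compose file.
    return f"postgresql://{user}:{password}@{get_db_host()}:{port}/{database}"
```

With DB_HOST=postgres in the compose environment, build_dsn() points at the postgres service; run locally without the variable, it points at localhost.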

Cool. Now it's time to run it:

$ docker-compose -f test-docker-compose.yml up --build

And we got:

Aha, we set depends_on but still see a Connection refused error in the services. This happens because the servers try to connect to the DB before it is really up: depends_on only waits for the container to start and knows nothing about whether the server process inside it is ready.

Wait-for script

We need to wait for the DB before running our services. This is what the wait-for script pattern is for. It can be written in bash, Python, or anything you like; the idea is simply to wait until some condition succeeds.

In our case, we need to wait until PostgreSQL is ready to accept connections.

Let's create wait_for.py and place it in the same folder as the compose .yml file.

The script will be very simple. To check the DB connection I will use libraries that are already dependencies of the app, but in your case you can use anything you want. The simplest and most popular way is psycopg2.

I will use Gino because, as I said, the app uses it anyway:

Not much logic: try to connect; on error, sleep 8 seconds and try again.
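For readers who don't use Gino, here is a minimal stand-in sketch of the same loop. It swaps the Gino connection check for a plain TCP connect from Python's standard library, which is weaker than opening a real DB session (PostgreSQL can accept TCP connections while it is still starting up); for a stricter check, replace postgres_is_reachable with a real connection attempt such as psycopg2.connect(...). DB_PORT is a hypothetical variable, and the defaults of 10 attempts with 8 seconds between them are my own choice.

```python
"""wait_for.py: wait until PostgreSQL accepts TCP connections (sketch)."""
import os
import socket
import sys
import time
from typing import Callable


def postgres_is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, DNS failure, timeout, ...
        return False


def wait_for(check: Callable[[], bool], attempts: int = 10, delay: float = 8.0) -> bool:
    """Call check() until it returns True or the attempts run out."""
    for attempt in range(1, attempts + 1):
        if check():
            return True
        if attempt < attempts:
            print(f"DB not ready (attempt {attempt}/{attempts}), retrying in {delay}s")
            time.sleep(delay)
    return False


def main() -> None:
    host = os.environ.get("DB_HOST", "localhost")
    port = int(os.environ.get("DB_PORT", "5432"))  # DB_PORT is a hypothetical variable
    ready = wait_for(lambda: postgres_is_reachable(host, port))
    # Exit code 0 lets the `&&` in the Dockerfile CMD start the server;
    # a non-zero code stops the container instead of starting a broken app.
    sys.exit(0 if ready else 1)
```

To use it as the file the Dockerfile runs with `python /wait_for.py`, end it with the usual `if __name__ == "__main__": main()` guard.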

Now we need to modify the Dockerfiles so that this script runs before the servers, and the servers start only when it exits with code 0.

Replace the last line of the main app Dockerfile with:

COPY tests/integration_tests/wait_for.py /wait_for.py

CMD python /wait_for.py && uvicorn main:app --host 0.0.0.0 --port 5050

And in the admin panel Dockerfile:

COPY tests/integration_tests/wait_for.py /wait_for.py

CMD python /wait_for.py && python admin.py

Note: the same way, you can run any setup step your service needs before it starts.

Now run the cluster again with:

$ docker-compose -f test-docker-compose.yml up --build

This time we get a successful result: all services are up and working.

Great. We have a test cluster; now we need something that lets us run tests inside this cluster.

Pytest plugin for Docker Compose

If you have never worked with pytest, it makes sense to look at the official documentation and some tutorials first. At the very least, you need to understand what fixtures are: https://docs.pytest.org/en/latest/fixture.html.

There are three different pytest plugins for running tests on docker-compose infrastructure:

- https://github.com/pytest-docker-compose/pytest-docker-compose

- https://github.com/avast/pytest-docker

- https://github.com/lovelysystems/lovely-pytest-docker

I tried all three, but in the end I settled on pytest-docker-compose. It worked better for me than the others in terms of speed and clarity of usage.

Let's start by installing the packages:

$ pip install pytest

$ pip install pytest-docker-compose

Define conftest.py

Now, let's create our conftest.py (https://docs.pytest.org/en/2.7.3/plugins.html) for pytest, where we will enable the plugin and define our custom path to the docker-compose file used to run the tests.

With that, our common infrastructure is ready.

Next, let's set up our first test module.

Create Tests Module

As you remember, I have several examples, and each of them is a set of services that work with the DB. For each example, I will have its own test module and its own fixtures.

Services Fixtures

Let's create our first test module. To keep naming consistent, I will call it test_fastapi_as_main_app_example.py.

Now our tests folder looks like this:

Inside test_fastapi_as_main_app_example.py, first of all we need to define fixtures for our services:

- To check that they are up and running

- To get their URIs, which we will use in tests
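A sketch of such fixtures, assuming the plugin's module_scoped_container_getter and the .get(...).network_info[0] call explained below. service_url reads the hostname and host_port fields of that entry, as in the pytest-docker-compose README example. The admin service name and the fixture names main_app and admin_panel are hypothetical, and HTTP checks use the standard library's urllib.request so the sketch needs nothing beyond pytest.

```python
import time
import urllib.request

import pytest

# Service names must match the keys under `services:` in test-docker-compose.yml.
# "fastapi_main_app_main" comes from the article; the admin name is HYPOTHETICAL.
MAIN_APP_SERVICE = "fastapi_main_app_main"
ADMIN_SERVICE = "fastapi_main_app_admin"


def service_url(container) -> str:
    """Build a base URL from the first published port of a compose container."""
    info = container.network_info[0]
    return f"http://{info.hostname}:{info.host_port}"


def wait_until_up(url: str, attempts: int = 10, delay: float = 2.0) -> bool:
    """Poll url until it answers 200; a container can be up before its app is."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:  # refused, reset, timeout or an HTTP error status
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


@pytest.fixture(scope="module")
def main_app(module_scoped_container_getter) -> str:
    url = service_url(module_scoped_container_getter.get(MAIN_APP_SERVICE))
    assert wait_until_up(url), f"{MAIN_APP_SERVICE} is not answering at {url}"
    return url


@pytest.fixture(scope="module")
def admin_panel(module_scoped_container_getter) -> str:
    url = service_url(module_scoped_container_getter.get(ADMIN_SERVICE))
    assert wait_until_up(url), f"{ADMIN_SERVICE} is not answering at {url}"
    return url
```

Tests can then simply request main_app or admin_panel as arguments and get a ready-to-use base URL.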

Things to pay attention to:

module_scoped_container_getter is a special fixture provided by the pytest-docker-compose plugin. I use module_scoped_container_getter because each of my examples is a separate group of apps with its own DB schema, so for each module I need to drop the DB tables and create them for the concrete example tested in that module.

The plugin provides four different fixtures that define how often the containers are brought up:

- function_scoped_container_getter

- class_scoped_container_getter

- module_scoped_container_getter

- session_scoped_container_getter

Next, pay attention to this line:

service = module_scoped_container_getter.get("fastapi_main_app_main").network_info[0]

In the .get(...) method you need to pass the service name exactly as it is defined in the docker-compose .yml file.

Now let's define two simple tests that request the '/' page of each service and assert that the status code is 200. Add them to the same test_fastapi_as_main_app_example.py file:

Time to run tests.

How to run tests

There are two ways to run the tests.

The first way: build, bring up and run everything with one command.

pytest . --docker-compose=test-docker-compose.yml -v

# will build and run docker compose & execute the tests

The second way saves time while you are writing or debugging tests, because the containers are not rebuilt on every run.

docker-compose -f test-docker-compose.yml up --build

# build & run test cluster

# then, in a new terminal window:

pytest . --docker-compose=test-docker-compose.yml --docker-compose-no-build --use-running-containers -v

Choose whichever you like and run the tests.

Great, everything works: the services are up and we can use them from the tests.

Auth fixtures

Now let’s add more fixtures. I want to test the REST API of the admin panel, but to make API calls I need to get an auth token first. So I need to add a fixture with an auth step.

Also, I need to upload a preset to the DB, i.e. an initial data set. Gino Admin has a Presets feature for exactly this: it lets you define a list of CSV files that will be uploaded to the DB. You can have multiple DB presets and load them either with a DB drop first or by just inserting into the existing tables, as you prefer.

So I will define two fixtures: one to authenticate and one to upload the preset.
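Below is a hedged sketch of the two fixtures. The article doesn't show the Gino Admin routes or payloads, so AUTH_PATH, PRESET_PATH, the credentials, the JSON keys and the token header format are all hypothetical placeholders to replace with what your Gino Admin version actually exposes. admin_panel is assumed to be a module-scoped fixture returning the admin panel's base URL.

```python
import json
import urllib.request
from typing import Optional

import pytest

# HYPOTHETICAL values: adapt them to your Gino Admin version and config.
AUTH_PATH = "/api/auth/"
PRESET_PATH = "/api/presets/"
ADMIN_CREDENTIALS = {"username": "admin", "password": "1234"}
PRESET_ID = "initial_data"


def post_json(url: str, payload: dict, headers: Optional[dict] = None) -> tuple:
    """POST payload as JSON; return (status code, decoded JSON body or {})."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json", **(headers or {})},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        body = response.read()
        return response.status, (json.loads(body) if body else {})


def auth_headers_from(body: dict) -> dict:
    # HYPOTHETICAL: assumes the token comes back under "access_token"
    # and is sent back verbatim in the Authorization header.
    return {"Authorization": body["access_token"]}


@pytest.fixture(scope="module")
def admin_auth_headers(admin_panel):
    status, body = post_json(admin_panel + AUTH_PATH, ADMIN_CREDENTIALS)
    assert status == 200, body
    return auth_headers_from(body)


@pytest.fixture(scope="module")
def initdb(admin_panel, admin_auth_headers):
    # A fixture can request other fixtures: pytest resolves admin_auth_headers first.
    status, body = post_json(
        admin_panel + PRESET_PATH,
        {"preset_id": PRESET_ID, "drop": True},  # HYPOTHETICAL payload
        admin_auth_headers,
    )
    assert status == 200, body
```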

As you can see, one fixture can use another.

Let's add two more tests: one that uses our new 'initdb' fixture and a second that uses only 'admin_auth_headers'.

The first test is for the Main App endpoint /users, which returns the number of users in the DB. Based on the data I load with the preset, /users must return {"count_users": 5}.

The second test is for the /api/drop_db endpoint of the Admin Panel.

Add tests:

Pay attention to the initdb fixture: we don't use its value inside the test, but we still need to request it, because this test needs data in the DB.

Because our initdb fixture has scope="module", it is executed only once per module. If you want to recreate the DB for each test, change it to scope="function".

Run tests

Awesome. That's it.

In my case, the next steps were adding more modules and more tests for each example. You can find the source code with test samples here: https://github.com/xnuinside/gino-admin/tree/master/tests/integration_tests

In your case, you may want scope="session" if you care about test speed and your code has no side effects, or, the opposite, you may need scope="function". Check the pytest docs for more details.

Such integration tests are very helpful when you have 4-5 services that call each other and you need to be sure they work together correctly.

I hope this will be useful for someone. If you see any errors, feel free to comment and I will try to fix them quickly.
