Natural Language Processing models have traditionally relied on social media for training corpuses, with Twitter being the biggest platform to source text-based data sets. Numerous companies have built their ML services based on those public data sets, and just as many researchers used them for academic articles.

One of the hottest topics in NLP for the past couple of years has been user geolocation - building models to identify one’s location by posted text. Numerous researchers have experimented with text-based geolocation for gun control policy automation, COVID spread predictive models, and marketing. To approach this enticing topic, our team joined hands with several other experts in the field, researched the biggest text-based geolocation projects, tested different methodologies suggested, and improved both the data set and the architecture to reach a Median Error of 30km in Haversine Distance.

That, of course, required jumping over the hurdles of data processing, GPU search, LLM/clustering algorithm selection, and the never-ending games of loss reduction. But now that we’ve done the hard work, we’re here to share our test results, our methodologies, and the decision-making process behind the project.

We’ll start small, focusing on the most common approaches to the task, telling you more about the publicly available data, and experimenting with different clustering algorithms.

Stay tuned for more articles and stories about how we’ve trained our models to distinguish messages like "I live in New York" from those like "New York is the best city in the world".

Previous publications

The two most common approaches in most cases trained on publicly available corpuses are classification and regression.

We reviewed previous papers and found several interesting publications. Here are a couple to start:

- In 2019, Huang и Carley published an article on a hierarchical model used to identify discrete locations (countries and cities) using a classification approach applied on clustered data.

- In 2023, Alsaqer published a paper on the use of a regressive approach to determine geolocation.

- In 2019, Tommaso Fornaciari и Dirk Hovy published a paper on a multi-task approach that combines several different methods.

We will try all of the above: we will filter and cluster the dataset, then test the results for both regression and classification. We’ll also test three different clustering algorithms to see which is the best fit for the task.

Training corpus

After reviewing existing publications and datasets, we opted to use TwWorld, the dataset used by Han, Cook, and Baldwin (2014) to train their models. The choice was primarily based on the size, availability, and number of previous scholarly uses so as to facilitate an easier comparison with previous results.

The two biggest challenges with the existing datasets in our case were:

- Many datasets were created for user-level geolocation, i.e., created with a goal of geolocating dozens of texts posted by the same user.

- Others performed specific steps for clustering on the corpus (in many cases, State or Province-based).

To address both issues, we have tested and performed several different algorithms on filtering, label smoothing, and clustering.

Clustering

Classification calls for data clustering. Let’s look at some of the popular clustering algorithms:

- DBSCAN is a density-based spatial clustering method. Unlike K-Means, DBSCAN doesn't require a pre-defined number of clusters. Instead, the algorithm creates a new cluster every time it finds a certain number of objects within a specific radius. Read the article to learn more.

- K-Means is an iterative clustering algorithm. It starts by randomly assigning K centers and grouping objects into a cluster based on their proximity to its center. The process is then iterated with the objective of minimizing the distance between objects and cluster centers.

- Hierarchical agglomeration begins by clustering at the minimum unit size and progressively merges smaller clusters until it reaches the defined number of clusters.

Model’s architecture

For our tests, we rely on a character-level CNN as used by Izbicki, Papalexakis, and Tsotras in 2019. Here’s what we did:

- Generated a dictionary of all tokens in the corpus.

- Added an embedding layer on the input

- On the output we set:

- For regression, a linear classifier for latitude and longitude

- For classification, a linear classifier with the number of outputs corresponding to the number of clusters.

The model was trained in two epochs: the first epoch with a learning rate of 1e(-3) and the second epoch with a learning rate of 1e(-4).

Test Results

We’ve trained and tested both a regression model and a classification model on the same publicly available corpus, replicating the algorithms and architectures mentioned in previous studies. This experiment setup allowed both:

- a clear comparison between the two approaches and three different clustering algorithms, and

- a comparison of our tests with previous studies.

It’s important to note that we performed all tests on different numbers of clusters. Having too many clusters implied having fewer texts posted from sparsely populated areas. Having too few clusters, on the other hand, created the problem of identifying densely populated locations.

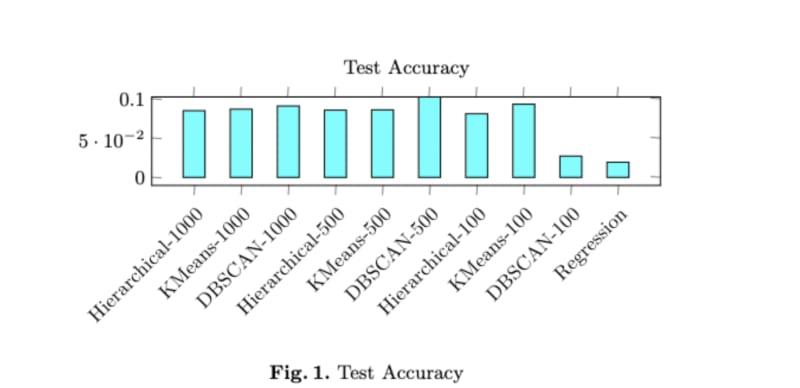

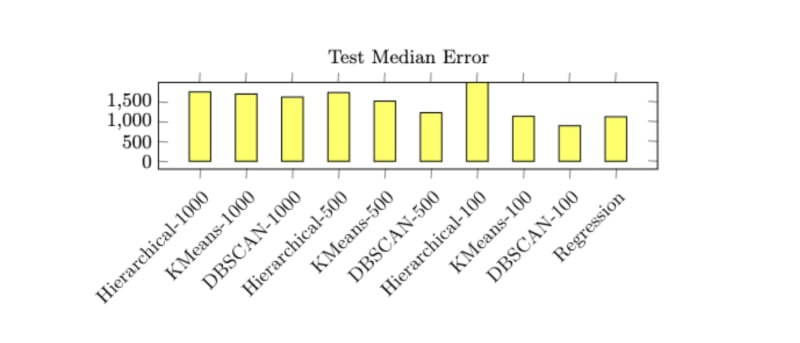

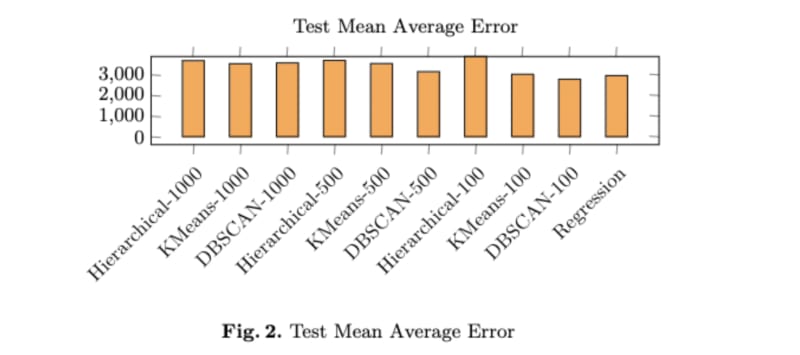

All the algorithms were tested on 100, 500, and 1000 clusters.

Considering the key metrics such as accuracy, mean average error, and median average error, the results are as follows:

- With a number of clusters between 100 and 500, DBSCAN outperforms other methods in terms of accuracy.

- Hierarchical clustering demonstrates the best results in terms of median and mean errors.

- Regression-based clustering performance is significantly lower in accuracy and median error.

Top comments (0)