How to retarget facial animations recorded with the Live Link Face iPhone app onto an Unreal MetaHuman character, using MotionBuilder and Python.

You can find the project files on my GitHub repo.

About MetaHumans

I recently started playing around with the mind-blowing MetaHuman (MH) feature from Unreal Engine. Through the Quixel Bridge editor, you can create and customize a highly realistic avatar in a few clicks and send it to your Unreal project. Everything is fine-tuned to get the best out of Unreal's shaders, and the result will leave you speechless 😍 I invite you to take a peek at it; there are plenty of resources out there.

About Live Link Face

As a mocap director, my interest quickly shifted to the live-retargeting capabilities, i.e. how to transfer an actor's facial performance onto a MetaHuman in real time. Epic offers an app for that, which takes advantage of the iPhone's TrueDepth camera: Live Link Face (LLF). It also only takes a few clicks to link your phone to your Unreal project and start driving your MetaHuman's face. Again, it works 'Epic-ly' fast and well!

But here's the snag... The app was clearly designed to work in real time with Unreal, where you can use the Sequencer to record facial data alongside body mocap, use Blueprint functions and so on. Used as a stand-alone app, however, it's another story.

For each take, LLF saves four files:

- A CSV file containing the animation

- A video file of the performance

- A JSON file containing some technical information

- A JPG thumbnail

No Maya scene, no Unreal asset, not even a simple FBX file that we could import to clean the motion in third-party software like MotionBuilder. Just a CSV file filled with obscure blendshape names and millions of values. Like that zucchini peeler you got from Aunt Edna for Xmas, it's a nice gesture, but what the heck am I gonna do with that!? 🤔

A quick look into the documentation confirmed the idea:

This data file is not currently used by Unreal Engine or by the Live Link Face app. However, the raw data in this file may be useful for developers who want to build additional tools around the facial capture.

So, I guess we are on our own now!

Let's dig in

It's not the most convenient format, but if you take a good look at this CSV file, it's all there: for each timecode frame (first column), you get the value of every shape defining the facial expression at that frame. These shapes come from Apple's ARKit framework, which uses the iPhone's TrueDepth camera to detect facial expressions and generate blendshape coefficients. All we have to do is extract those time/value pairs and store them in some handy container. This is where the Pandas library 🐼 and its DataFrames are gonna do wonders!
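Here is a minimal sketch of that extraction step. To stay dependency-free it uses Python's standard `csv` module instead of Pandas, and it assumes the layout described above: a header row, the timecode in the first column, and one ARKit shape per remaining column. The `BlendShapeCount` entry in `skip_columns` is an assumption about non-shape columns in the export; adjust that list to whatever your own files contain. The function name `read_llf_csv` is mine.

```python
import csv
import io
from collections import defaultdict


def read_llf_csv(text, skip_columns=("BlendShapeCount",)):
    """Return {shape_name: [(timecode, value), ...]} from Live Link Face CSV text.

    Assumes the first column holds the timecode and every other column
    (except those listed in skip_columns) holds one blendshape value per row.
    """
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    shape_names = header[1:]
    curves = defaultdict(list)
    for row in reader:
        if not row:
            continue  # ignore blank lines
        timecode = row[0]
        for name, raw in zip(shape_names, row[1:]):
            if name in skip_columns:
                continue  # assumed non-shape column
            curves[name].append((timecode, float(raw)))
    return dict(curves)


# Usage with a tiny, made-up sample (real files have many more columns and rows).
sample = (
    "Timecode,BlendShapeCount,EyeBlinkLeft,JawOpen\n"
    "10:00:00:00.000,61,0.10,0.50\n"
    "10:00:00:01.000,61,0.20,0.40\n"
)
curves = read_llf_csv(sample)
print(curves["JawOpen"])  # [('10:00:00:00.000', 0.5), ('10:00:00:01.000', 0.4)]
```

With a real file you would pass `Path(...).read_text()` instead of the sample string.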

Now what should I do with all this?

I need to copy the animation values onto a target model that Unreal can import and read. Let's have a look at the MetaHuman project, which you can find on the UE Marketplace.

Facial and body motions are handled separately. A blueprint is applied to a facial version of the skeleton, which contains only the root, spine, neck, head and numerous facial joints, as Unreal relies on joint-based motions.

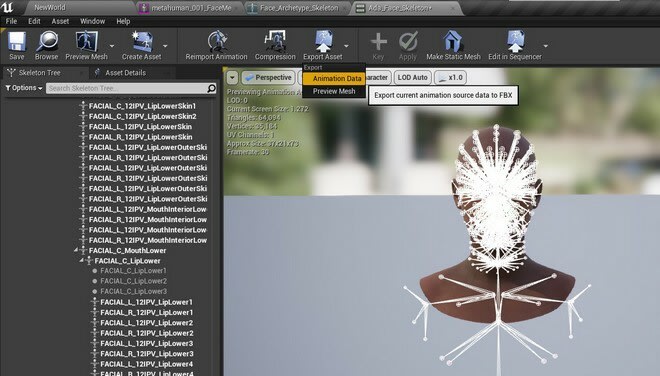

I can get this skeleton out of Unreal by creating an animation pose and exporting it as FBX. Now we can open it in MotionBuilder and... HOLY MOLY GUACAMOLE, what's all this!? 😵 I'm not talking about the Hellraiser facial rig but about the root joint's properties: it contains a huge number of custom properties!

I run a quick line of code and count about 1100 entries:

- 254 properties starting with CTRL_expressions_, which are clearly blendshapes, like CTRL_expressions_jawOpen

- 757 properties starting with head_, which seem to be additional correctives (fixing for instance a collision between two blendshapes activated at the same time).

- 35 properties starting with cartilage_, eyeLeft_, eyeRight_ or teeth_, which are probably more correctives?

- Last but not least: 53 properties whose names are identical to the ARKit shapes in the LLF CSV files, like EyeBlinkLeft or JawOpen.

We can therefore assume that these last 53 properties correspond to the ARKit blendshapes from the app and should receive our keys during retargeting.
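A rough sketch of that "quick line of code", grown into a small function: it counts property names per prefix group and matches the remaining names against a list of ARKit shape names. Collecting the names from the root joint happens inside MotionBuilder and isn't shown; `classify_properties`, the group labels and the sample property names are hypothetical.

```python
from collections import Counter

# Prefix groups as described above; the labels are my own.
PREFIX_GROUPS = {
    "CTRL_expressions_": "expressions",
    "head_": "head correctives",
    "cartilage_": "other correctives",
    "eyeLeft_": "other correctives",
    "eyeRight_": "other correctives",
    "teeth_": "other correctives",
}


def classify_properties(property_names, arkit_names):
    """Count custom property names per group; exact ARKit names go to 'arkit'."""
    arkit = set(arkit_names)
    counts = Counter()
    for name in property_names:
        if name in arkit:
            counts["arkit"] += 1
            continue
        for prefix, group in PREFIX_GROUPS.items():
            if name.startswith(prefix):
                counts[group] += 1
                break
        else:  # no prefix matched
            counts["unknown"] += 1
    return counts


# Usage with a handful of illustrative names.
names = ["CTRL_expressions_jawOpen", "head_lookUpL", "teeth_fwd",
         "JawOpen", "EyeBlinkLeft", "SomethingElse"]
print(classify_properties(names, ["JawOpen", "EyeBlinkLeft"]))
```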

How does Unreal compute animations?

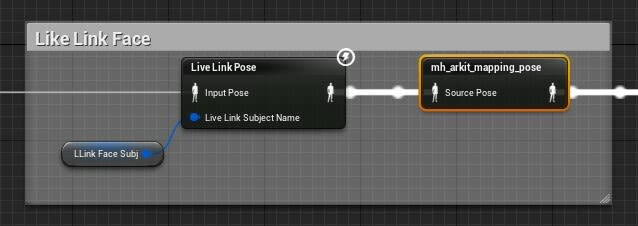

Before jumping into our favourite code editor, let's see how Unreal reads and applies an animation on the MetaHuman. I open the Face_AnimBP blueprint from the project, which handles the live retargeting of facial animations from the LLF app onto the rig.

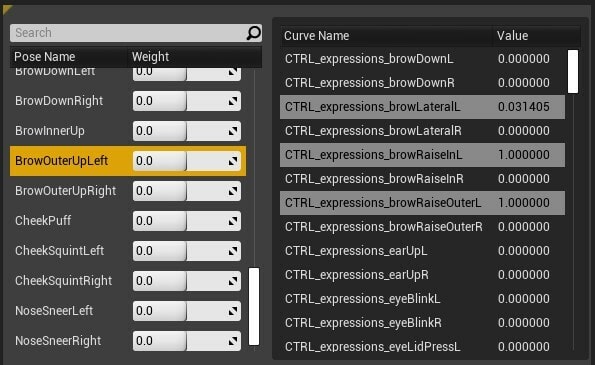

BINGO, there it is! Inside a Live Link Face box, I find what seems to be a mapping block that makes all the connections. For each ARKit blendshape used by LLF, it maps the corresponding CTRL expressions and head correctives on the MetaHuman, specifying an influence value between 0 (no influence) and 1 (full influence). For instance, BrowOuterUpLeft triggers the 3 expressions browLateralL, browRaiseInL and browRaiseOuterL with different weights, while having no effect on any other expression.

To confirm, I create random keys on the different properties in MotionBuilder and export the takes as FBX files to Unreal. It works like a charm!

- Keys on the ARKit custom properties are read and retargeted, but only if the animation goes through the mapping block first, so the animation blueprint is mandatory.

- However, if I put keys on the CTRL shapes and head correctives, I can apply my animation directly onto the MH, without the need for an animation blueprint.

As you can see, two solutions are taking shape here: the easy road, where we simply transfer animation values from the CSV file to the ARKit blendshape properties, or the long road, where we convert them into MH expression and corrective values. Why not try both?

Retargeting on ARKit blendshapes

As I said, this is the stroll in the park. Our CSV file and our root bone share the same ARKit blendshape information, so all we need to do is transfer the keys. Here are the main steps I follow:

- Use Pandas to read the CSV file and extract all the data. For this, I create a BlendShape class, which stores the ARKit shape name as well as the animation keys, as timecode/value pairs.

- For each BlendShape object, I search the skeleton root for the property with the same name and create keys for all its timecode/value pairs.

- The scene framerate is very important here and should match the framerate the app recorded at, or keys won't be created at the correct frames.
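To make the framerate point concrete, here is a sketch of the transfer step. It assumes non-drop-frame timecodes of the form `HH:MM:SS:FF`, optionally followed by a `.fraction` sub-frame part (dropped here), and it assumes the `FF` field counts frames at the same integer rate as `fps`. `set_key` is a hypothetical callback: in MotionBuilder it would look up the property on the root and add a key on its animation node, which is not shown. `offset_frames` illustrates one way of shifting the starting timecode.

```python
def timecode_to_frame(timecode, fps, offset_frames=0):
    """Convert 'HH:MM:SS:FF[.sub]' to an absolute frame number.

    Assumes non-drop-frame timecode at an integer fps; any sub-frame
    fraction after the dot is discarded.
    """
    hours, minutes, seconds, frames = timecode.split(":")
    whole_frames = int(frames.split(".")[0])  # drop the optional sub-frame part
    total_seconds = (int(hours) * 60 + int(minutes)) * 60 + int(seconds)
    return total_seconds * fps + whole_frames + offset_frames


def transfer_keys(curves, set_key, fps, offset_frames=0):
    """Call set_key(shape_name, frame, value) for every recorded key.

    curves: {arkit_shape_name: [(timecode, value), ...]}
    set_key: hypothetical callback writing one key on the root property
             with the same name (MotionBuilder-specific, not shown).
    """
    for shape_name, keys in curves.items():
        for timecode, value in keys:
            frame = timecode_to_frame(timecode, fps, offset_frames)
            set_key(shape_name, frame, value)


# Usage: collect keys in a list instead of writing them to a scene.
recorded = []
transfer_keys({"JawOpen": [("00:00:01:05.750", 0.5)]},
              lambda name, frame, value: recorded.append((name, frame, value)),
              fps=60)
print(recorded)  # [('JawOpen', 65, 0.5)]
```

Reading the same timecode at 30 fps would give frame 35 instead of 65, which is exactly why the scene framerate has to match the recording.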

Once done, adjust your timespan to frame the animation and have a look at the ARKit properties of the root. They should be animated.

Export your animation as FBX to Unreal and test it in the Face_AnimBP blueprint. Don't forget that it needs to go through that mapping block, so Unreal can convert ARKit blendshape values to MH property values, which are then turned into joint animation. Cool beans!

Retargeting on MetaHuman properties

Now let's get our hands dirty and explore that second solution! We want to be able to apply a retargeted file directly onto our MH character without going through all these mapping knick-knacks. For that, we need to find a way to recreate this mapping node in MotionBuilder. As a reminder, it links each ARKit shape to the corresponding MH CTRL and head properties, with weights ranging from 0 to 1. So we first need to extract these properties and weights from Unreal 🥵

After looking around for a while, I noticed that Unreal nodes can be exported as T3D ASCII files, which describe everything the node does. I tried it, and I must admit that it wasn't love at first sight. Fortunately, it's only 13 lines long!

But let's not judge a book by its cover. Again, if you take a closer look, everything we need is here. Time to see if my regex ninja training has paid off. After playing around with three patterns, I manage to extract from the T3D file:

- The ARKit blendshape names

- The MH property names

- The mapping weights for each property

I then add the target MH properties and their mapping weights to my BlendShape class (which characterises an ARKit shape). Done! I now have all the information I need for retargeting.
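The snippet below does not reproduce the real T3D syntax. It uses a made-up, simplified one-line-per-shape layout, only to illustrate the approach of pulling names and weights out with regular expressions and storing them on a `BlendShape` object. The class fields, both patterns and the sample weights are hypothetical; real patterns have to be written against your own exported file.

```python
import re
from dataclasses import dataclass, field


@dataclass
class BlendShape:
    """One ARKit shape: its keys and the MH properties it drives (hypothetical layout)."""
    name: str
    keys: list = field(default_factory=list)     # [(timecode, value), ...]
    targets: dict = field(default_factory=dict)  # {mh_property: weight}


# Patterns for a *made-up* simplified format, NOT the real T3D syntax:
#   Pose Name="<ARKit shape>" Curves=(<mh_property>=<weight>,...)
POSE_RE = re.compile(r'Pose Name="(\w+)" Curves=\(([^)]*)\)')
PAIR_RE = re.compile(r'(\w+)=([-+]?\d*\.?\d+)')


def parse_mapping(text):
    """Return {arkit_name: BlendShape} with targets filled from the text."""
    shapes = {}
    for arkit_name, body in POSE_RE.findall(text):
        shape = BlendShape(arkit_name)
        for prop, weight in PAIR_RE.findall(body):
            shape.targets[prop] = float(weight)
        shapes[arkit_name] = shape
    return shapes


# Usage with illustrative weights.
text = (
    'Pose Name="BrowOuterUpLeft" Curves=(CTRL_expressions_browLateralL=0.3,'
    'CTRL_expressions_browRaiseOuterL=1.0)\n'
    'Pose Name="JawOpen" Curves=(CTRL_expressions_jawOpen=1)\n'
)
mapping = parse_mapping(text)
print(mapping["JawOpen"].targets)  # {'CTRL_expressions_jawOpen': 1.0}
```

The `keys` field stays empty here; it would be filled from the CSV afterwards.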

Back to MotionBuilder, I can run my magic loops again. This time we have to be a bit more careful though:

- Read the T3D file and create BlendShape instances for all ARKit shapes.

- Use Pandas to read the CSV file and extract the animation keys for each ARKit shape. I store these timecode/value pairs in my BlendShape instances.

- MotionBuilder is not very fast at finding root properties, perhaps because there are so many of them. To avoid searching for the same ones multiple times, I loop through the MH properties first and then through the timecodes. For each frame, I check which ARKit blendshapes trigger the property and get their animation values weighted by my mapping information. For instance, if the ARKit value is 0.8 and the mapping weight for this property is 0.5, then my animation key will be 0.8 × 0.5 = 0.4. If multiple ARKit shapes trigger it, I take the max value, corresponding to the highest influence.

- Again, be careful to retarget at the same framerate you used when recording the takes.
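The weighting logic can be sketched in plain Python, independently of MotionBuilder. This is my reading of the description above, under a few assumptions: keys are already grouped per frame as `{frame: {arkit_name: value}}`, the mapping is a `{arkit_name: {mh_property: weight}}` dict, an ARKit shape missing at a frame counts as 0, and "take the max" means the largest weighted value. The mapping is inverted first so each MH property is visited once, mirroring the idea of not searching for the same root property repeatedly. The sample mapping and weights are made up.

```python
from collections import defaultdict


def invert_mapping(mapping):
    """{arkit: {mh_prop: weight}} -> {mh_prop: [(arkit, weight), ...]}."""
    drivers = defaultdict(list)
    for arkit_name, targets in mapping.items():
        for prop, weight in targets.items():
            drivers[prop].append((arkit_name, weight))
    return dict(drivers)


def retarget(frames, mapping):
    """Return {mh_prop: {frame: value}}.

    Each value is the max of arkit_value * weight over all ARKit shapes
    driving that property; shapes absent at a frame count as 0.
    """
    result = {}
    for prop, drivers in invert_mapping(mapping).items():  # one pass per MH property
        keys = {}
        for frame, values in sorted(frames.items()):
            keys[frame] = max(values.get(arkit, 0.0) * weight
                              for arkit, weight in drivers)
        result[prop] = keys
    return result


# Usage: two ARKit shapes driving the same MH property (illustrative weights).
mapping = {"BrowOuterUpLeft": {"CTRL_expressions_browRaiseOuterL": 0.5},
           "BrowInnerUp": {"CTRL_expressions_browRaiseOuterL": 0.25}}
frames = {0: {"BrowOuterUpLeft": 0.8, "BrowInnerUp": 1.0},
          1: {"BrowOuterUpLeft": 0.2, "BrowInnerUp": 1.0}}
print(retarget(frames, mapping))
# {'CTRL_expressions_browRaiseOuterL': {0: 0.4, 1: 0.25}}
```

At frame 0 the 0.8 × 0.5 = 0.4 contribution wins over 1.0 × 0.25; at frame 1 it drops to 0.1, so the other shape's 0.25 is kept.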

I see keys on my root's properties! Let's export it as FBX to Unreal and assign it to my Face component. WUNDERBAR! Unreal reads the animation and transfers it to the facial joints without going through that mapping node anymore.

Wrapping up

To finalise the script and make it more user-friendly, I added the following features:

- A batching option, so you can point the script at a folder containing multiple CSV files

- The possibility to offset the starting timecode, to synchronise the animation file with other sources

- A simple PySide UI for the user to enter the required file paths

- Logging and exception handling to make sure the batch runs properly
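For the batching and logging features, a minimal sketch could look like this. `process_file` is a hypothetical callback standing in for the per-take work (reading the CSV, keying, exporting); a failing take is logged with its traceback and skipped, so the rest of the batch still runs.

```python
import logging
from pathlib import Path

log = logging.getLogger("llf_batch")


def batch_process(folder, process_file):
    """Run process_file(path) on every *.csv in folder; log and skip failures.

    Returns (succeeded, failed) as lists of file names.
    """
    succeeded, failed = [], []
    for csv_path in sorted(Path(folder).glob("*.csv")):
        try:
            process_file(csv_path)
        except Exception:  # keep the batch going, but record the traceback
            log.exception("Failed to retarget %s", csv_path.name)
            failed.append(csv_path.name)
        else:
            log.info("Retargeted %s", csv_path.name)
            succeeded.append(csv_path.name)
    return succeeded, failed


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # Example: just print each path instead of retargeting it.
    print(batch_process(".", lambda path: print("processing", path)))
```

Catching the broad `Exception` is deliberate here: one malformed take should not abort a whole night of batch processing, and `log.exception` keeps the details for later.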

We are done, good job everyone! 😉

Conclusion

It only took patience, observation and some ancient regex voodoo to transfer the animation values recorded by the Live Link Face app as CSV files onto our brand new MetaHuman in Unreal. While not perfect, the result is good enough to move the animation on to a cleaning stage.

This is therefore a quick way to record facial animations from your actors, even when they cannot perform in real time. Being able to process the takes later in animation software like MotionBuilder, with a fairly simple Python script like this one, makes Live Link Face a cheap and reliable facial motion-capture solution for MetaHuman characters.

I hope this will help you write your own solution. Feel free to message me if you need any help or buy me a croissant and cappuccino next time you're in town 🥐☕ Cheers!
