Today I watched a short video about a JavaScript puzzle.

Video link: https://youtu.be/EtWgLQIlhOg

JavaScript puzzle: What will be the output of this JavaScript program:

var a;

а = 0;

a++;

alert(а);

If you think the answer is 1, WRONG!! Try to run it yourself and check!

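The video explains why the answer is not 1.

The reason is that the program uses two different Unicode characters that look identical, so it actually contains two different variables. The declaration and the increment use one letter, while the assignment and the alert use the other. The incremented variable was never assigned a number, and the variable shown by the alert is never incremented, so the alert shows 0.

One is “a”, Latin Small Letter A (U+0061), and the other is “а”, Cyrillic Small Letter A (U+0430).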
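A quick way to tell the two letters apart is to print their code points. Here is a minimal sketch; the names `latinA`, `cyrillicA`, and `toCodePoint` are my own, and I wrote the letters as escapes so the difference is visible in the source.

```javascript
// Two letters that look the same but are different characters.
const latinA = "\u0061";    // Latin Small Letter A
const cyrillicA = "\u0430"; // Cyrillic Small Letter A

// Format the first code point of a string in the usual U+XXXX notation.
function toCodePoint(ch) {
  return "U+" + ch.codePointAt(0).toString(16).toUpperCase().padStart(4, "0");
}

console.log(toCodePoint(latinA));    // U+0061
console.log(toCodePoint(cyrillicA)); // U+0430
console.log(latinA === cyrillicA);   // false
```

Pasting a suspicious identifier into a helper like this is an easy way to check whether it contains a look-alike character.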

Even after watching the video, I could not figure out what that meant, so I posted my question on the #javascript channel of our JODC Discord server.

github.com/daemon1024 and github.com/arvindpunk answered my question. They shared their knowledge on the subject, and I was finally able to tell the two characters apart.

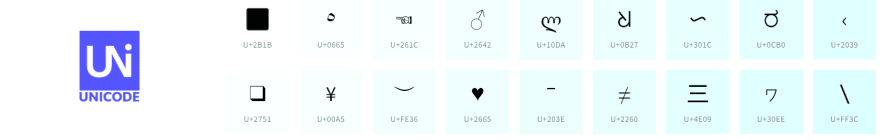

Let me tell you a little about Unicode.

What is Unicode?

Unicode, formally the Unicode Standard, is a universal character set that defines the characters needed to write most of the world's languages on computers.

It is designed as a superset of the major character sets that came before it.

Why was Unicode introduced?

Before Unicode, there were hundreds of different encoding systems in use all over the world. ASCII (American Standard Code for Information Interchange), ISO 8859-1 (Western Europe) and KOI8-R (Russian, described in RFC 1489) were some of them.

But no single encoding system could contain enough characters. For example, the European Union alone requires several different encodings to cover all its languages. Even for a single language like English, no single encoding was adequate for all the letters, punctuation, and technical symbols in common use.

The encoding systems also conflicted with one another. Two encodings could use the same number for different characters, or different numbers for the same character.
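To make the conflict concrete, here is a small sketch with hand-copied excerpts of three legacy code pages (one byte each, not the full tables). The `legacyTables` object and the `decodeByte` helper are hypothetical names I made up for illustration.

```javascript
// Tiny hand-copied excerpts of three legacy code pages (one byte each).
const legacyTables = {
  "ISO 8859-1": { 0xc1: "\u00C1" }, // Latin capital A with acute
  "KOI8-R": { 0xc1: "\u0430" },     // Cyrillic small a
  "ISO 8859-5": { 0xd0: "\u0430" }, // Cyrillic small a again, different byte
};

// Look up what a byte means under a given encoding.
function decodeByte(encoding, byte) {
  return legacyTables[encoding][byte];
}

// Same number, different characters:
console.log(decodeByte("ISO 8859-1", 0xc1)); // Á
console.log(decodeByte("KOI8-R", 0xc1));     // а (Cyrillic)

// Different numbers, same character:
console.log(decodeByte("ISO 8859-5", 0xd0)); // а (Cyrillic)
```

Without knowing which table a file was written with, the byte 0xC1 on its own is ambiguous.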

Benefits of Unicode encoding system:

Unicode 14.0 defines 144,697 characters, and its code space has room for 1,114,112 code points, far more than any earlier character set.

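Its UTF-8 encoding is space-efficient for text that is mostly ASCII, because those characters take one byte each.

It removes the need to switch between different encoding systems for different languages.

It supports mixed-script text, so a single document can combine several writing systems.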

It is kept in sync with the international standard ISO/IEC 10646, so both define the same characters and code points.

Unicode Encoding Schemes:

Unicode defines three encoding forms to represent characters: UTF-8, UTF-16, and UTF-32.

UTF-8 (Unicode Transformation Format, 8-bit)

UTF-8 is a variable-width encoding that can represent every character in the Unicode character set. The code unit of UTF-8 is 8 bits, called an octet. UTF-8 uses 1 to 4 octets per code point, depending on the code point's value.

UTF-8 is a multibyte encoding. Each code point is encoded in one of four lengths:

• 1 octet (8 bits): code points U+0000 to U+007F (the ASCII range)

• 2 octets (16 bits): code points U+0080 to U+07FF

• 3 octets (24 bits): code points U+0800 to U+FFFF

• 4 octets (32 bits): code points U+10000 to U+10FFFF
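The four lengths can be seen by encoding one sample character from each range. This is a minimal sketch using the standard `TextEncoder`, which always produces UTF-8; the `utf8Hex` helper and the sample characters are my own choices.

```javascript
// TextEncoder always produces UTF-8 bytes.
const encoder = new TextEncoder();

// Return the UTF-8 bytes of a string as hex, for example "d0 b0".
function utf8Hex(text) {
  return Array.from(encoder.encode(text), (b) => b.toString(16).padStart(2, "0")).join(" ");
}

console.log(utf8Hex("a"));         // 61          (1 octet, Latin a)
console.log(utf8Hex("\u0430"));    // d0 b0       (2 octets, Cyrillic а)
console.log(utf8Hex("\u20AC"));    // e2 82 ac    (3 octets, euro sign)
console.log(utf8Hex("\u{1F600}")); // f0 9f 98 80 (4 octets, grinning face)
```

Note that the two look-alike letters from the puzzle do not even take the same number of bytes in UTF-8.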

UTF-16 (Unicode Transformation Format, 16-bit)

UTF-16 encodes each code point as one or two 16-bit code units. Every code point in the Basic Multilingual Plane (BMP) is represented by a single code unit. Code points beyond U+FFFF are represented by surrogate pairs.
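JavaScript strings are sequences of UTF-16 code units, so a surrogate pair is easy to inspect. The sketch below compares what the engine stores with the pair computed by hand; `toSurrogates` is a helper name I introduced, and it assumes its input is above U+FFFF.

```javascript
const face = "\u{1F600}"; // one code point beyond U+FFFF

// Split a code point above U+FFFF into its high and low surrogates.
function toSurrogates(codePoint) {
  const offset = codePoint - 0x10000;    // 20 bits remain
  const high = 0xd800 + (offset >> 10);  // top 10 bits
  const low = 0xdc00 + (offset & 0x3ff); // bottom 10 bits
  return [high, low];
}

console.log(face.length);                     // 2 (code units, not characters)
console.log(face.charCodeAt(0).toString(16)); // d83d
console.log(face.charCodeAt(1).toString(16)); // de00
console.log(toSurrogates(0x1f600).map((u) => u.toString(16))); // [ 'd83d', 'de00' ]
```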

The interesting thing is that Java, Windows, and JavaScript (like other systems built on UTF-16) all operate at the code unit level, not the Unicode code point level.

UTF-32 (Unicode Transformation Format, 32-bit)

UTF-32 is a fixed-length encoding that uses exactly 4 bytes for every Unicode code point. It stores the numeric value of the code point directly in those 4 bytes.
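JavaScript has no built-in UTF-32 encoder, so the following is only a sketch of the idea, assuming big-endian byte order. The `utf32Bytes` helper is a hypothetical name of mine.

```javascript
// Encode a string as big-endian UTF-32: 4 bytes per code point.
function utf32Bytes(text) {
  // Array.from iterates a string by code point, not by UTF-16 code unit.
  const codePoints = Array.from(text, (ch) => ch.codePointAt(0));
  const view = new DataView(new ArrayBuffer(codePoints.length * 4));
  codePoints.forEach((cp, i) => view.setUint32(i * 4, cp)); // big-endian by default
  return Array.from(new Uint8Array(view.buffer));
}

console.log(utf32Bytes("a"));         // [ 0, 0, 0, 97 ]
console.log(utf32Bytes("\u{1F600}")); // [ 0, 1, 246, 0 ]
```

Every character costs 4 bytes here, even plain ASCII.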

Some important definitions:

Code point: a number from a code space that identifies a single character in a character set.

For example, 0x42 is the ASCII code point for the character ‘B’.

Code unit: the unit of storage (a fixed number of bits) that an encoding uses to build an encoded code point.

For example, UTF-8 uses 8-bit code units, but it is a variable-length scheme. Some characters take a single 8-bit unit, and others take two, three, or four.
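The difference between the two definitions shows up when you count the same string in both ways. A small sketch, with a sample string I picked (Latin a, Cyrillic а, and an emoji):

```javascript
const text = "a\u0430\u{1F600}"; // Latin a, Cyrillic а, grinning face

// Code points: iterate the string by character.
const codePoints = Array.from(text).length;

// Code units: depends on the encoding.
const utf8Units = new TextEncoder().encode(text).length; // 8-bit units
const utf16Units = text.length;                          // 16-bit units
const utf32Units = codePoints;                           // one 32-bit unit per code point

console.log(codePoints); // 3
console.log(utf8Units);  // 7 (1 + 2 + 4)
console.log(utf16Units); // 4 (1 + 1 + 2)
console.log(utf32Units); // 3
```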

To learn more about Unicode characters, check out:

https://home.unicode.org/

https://en.wikipedia.org/wiki/List_of_Unicode_characters

Also, don't forget to challenge your friends with this question :)

Image Credits

www.compart.com/en/unicode

https://en.wikipedia.org/wiki/Unicode

https://home.unicode.org/

https://convertcodes.com/utf32-encode-decode-convert-string/

Cover Image Credits

https://deliciousbrains.com/how-unicode-works/

References

Computer Science with Python, Sumita Arora (Data Representation)

https://en.wikipedia.org/wiki/List_of_Unicode_characters

https://stackoverflow.com/questions/2241348/what-is-unicode-utf-8-utf-16

https://docs.oracle.com/cd/E19455-01/806-5584/6jej8rb0j/index.html
