The Data Access Layer (DAL) is the most critical part of any application. This is where the code integrates with an external source of truth like a database.

In this take, we'll show you how to build a DAL that talks to Postgres via Node. Then we'll go into best practices like Clean Architecture and what this means in practical terms.

Ready? Let’s go!

First, a quick note about the code:

The code will have modern niceties like TypeScript, unit tests, and will tackle potential scalability issues. It will run on Node, with a local copy of Postgres running on a machine. The unit tests run in isolation, so they will work even when the database isn’t available, which is perfect for build servers.

Feel free to nab the working code from GitHub, or follow along!

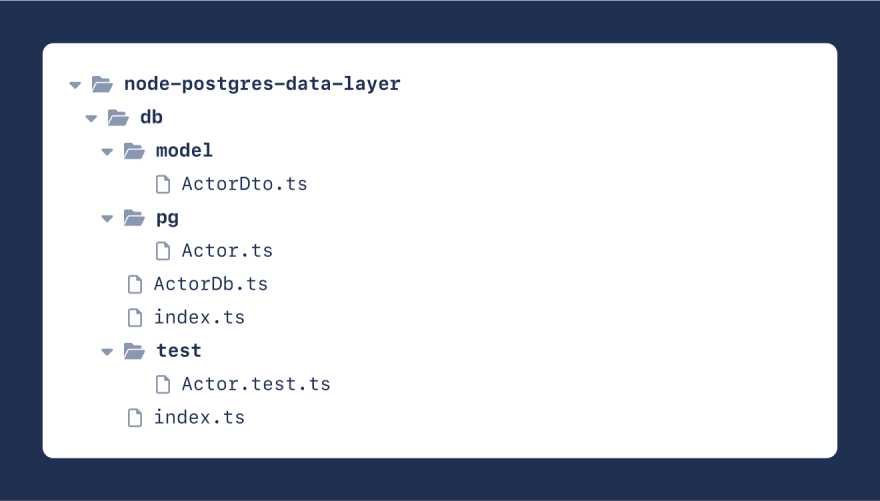

Project Scaffold to Build a Node and Postgres DAL

The main project has many sub-folders. So, fire up the console and type:

> mkdir node-postgres-data-layer

> cd node-postgres-data-layer

> mkdir db

> mkdir test

> mkdir db/model

> mkdir db/pg

Be sure to create the following files within the folder structure:

Here's what each file is for:

-

ActorDto- Entity type which defines the actor model -

pg/Actor- Postgres implementation to find and update actor data -

ActorDb- Interface that's useful outside the DAL -

Actor.test- Unit tests — no Postgres database necessary

The index file is mostly there to glue the code together. The index file under the root folder works as the entry point.

Fire up npm init to create a basic package.json file. Once complete, execute the following NPM commands:

> npm i @types/chai @types/chai-as-promised @types/mocha @types/pg @types/sinon chai chai-as-promised mocha sinon ts-node typescript --save-dev

> npm i pg --save

You can initialize the tsconfig.json file via tsc --init. Be sure to run this command at the project's root. The tsc command is available when you install TypeScript globally or in the node_modules/.bin folder. Enable "outDir": "./dist", and add "exclude": ["test"] to the default config file.

Most of these packages are developer dependencies that make our lives easier. The only real dependency is pg which is the node-postgres package that talks to Postgres from a Node codebase.

In the package.json under scripts, put the following commands:

{

"start": "tsc && node ./dist/index",

"type-check": "tsc",

"test": "mocha --require ts-node/register ./test/*.ts"

}

Lastly, be sure to have a working copy of Postgres running on your local machine. The database is a sample relational db called dvdrental.

Go ahead and download the zip file, unpack it, and restore the dvdrental database on your local machine. This DAL only focuses on the actor, film_actor, and film relational tables. It is also possible to explore the rest of the schema via this PDF download.

Set Up the Bare Necessities in TypeScript

Next, write the bare skeleton code to be fleshed out later. To make things easier, the file name goes on top of the implementation. To follow along, simply find the file in the project structure and copy-paste the code. All these files are in the db folder.

ActorDto:

export type ActorDto = {

// data contract

actorId: number;

firstName: string;

lastName: string;

movie: string;

rentalRate: number;

lastUpdate: string;

};

pg/Actor:

import { Pool, QueryResult } from "pg";

import { ActorDto } from "../model/ActorDto";

export class Actor {

#pool: Pool; // connection pool

constructor(pool: Pool) {

this.#pool = pool;

}

async findByYearAndLastName(

year: number,

lastName: string

): Promise<ActorDto[]> {

return [];

}

async updateLastNameByIds(lastName: string, ids: number[]): Promise<number> {

return 0;

}

async updateFirstNameByIds(

firstName: string,

ids: number[]

): Promise<number> {

return 0;

}

private static mapActorResult = (

res: QueryResult

): ActorDto[] => // projection

res.rows.map((r) => ({

actorId: r.actor_id,

firstName: r.first_name,

lastName: r.last_name,

movie: r.title,

rentalRate: r.rental_rate,

lastUpdate: r.last_update,

}));

}

ActorDb:

import { ActorDto } from "./model/ActorDto";

export interface ActorDb {

// external interface

findByYearAndLastName(year: number, lastName: string): Promise<ActorDto[]>;

updateLastNameByIds(lastName: string, ids: number[]): Promise<number>;

updateFirstNameByIds(firstName: string, ids: number[]): Promise<number>;

}

index:

import { Pool, types } from "pg";

import { ActorDb } from "./ActorDb";

import { Actor } from "./pg/Actor";

const connectionString =

"postgres://postgres:postgres@127.0.0.1:5432/dvdrental";

const pool = new Pool({

// single pool

connectionString,

});

export const actor: ActorDb = new Actor(pool); // strongly-typed

Be sure to include the correct connection string. It follows this format: <username>:<password>@<server>:<port>/<database>.

Actor.test:

import chai, { expect } from "chai";

import chaiAsPromised from "chai-as-promised";

import sinon, { SinonMock, SinonStub } from "sinon";

import { Pool } from "pg";

import { actor } from "../db"; // system under test

chai.use(chaiAsPromised);

class ClientMock {

query() {}

release() {}

}

describe("Actor", () => {

let query: SinonStub;

let connect: SinonStub;

let client: SinonMock;

beforeEach(() => {

query = sinon.stub(Pool.prototype, "query");

connect = sinon.stub(Pool.prototype, "connect");

client = sinon.mock(ClientMock.prototype);

});

afterEach(() => {

query.restore(); // reset stub/mock

connect.restore();

client.restore();

});

});

Clean Architecture Principles in Node

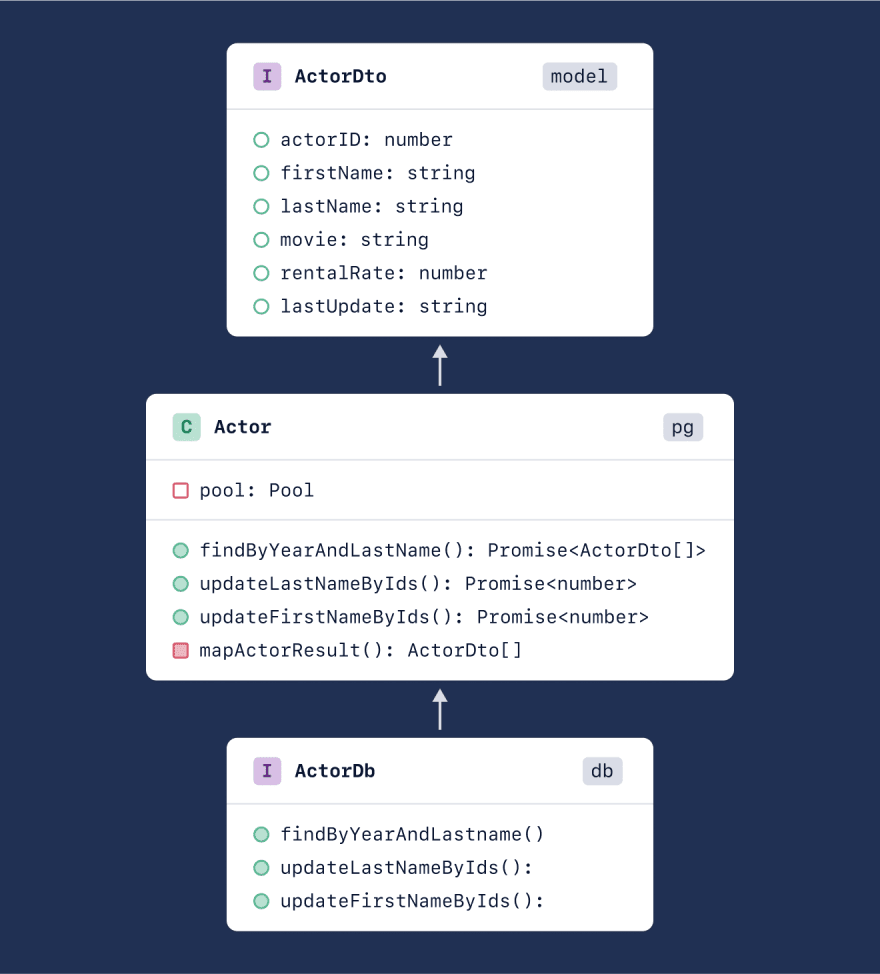

The basic skeleton above follows Clean Architecture as dependencies physically pull the entity away from implementation details.

In Node, dependencies should flow in a single direction, and ActorDto, for example, sits in the innermost layer. This is the Data Transfer Object (DTO) which declares the data contract that the business understands.

At the outermost layer is pg/Actor, which actually talks to the Postgres database. The projection in mapActorResult translates from table columns in the db into the DTO. The interface ActorDb declares the external interface, which is useful outside the DAL. This way, it is possible to swap out databases, from Postgres to Mongo for example, without risky invasive surgery. As long as the contracts hold, the changes will theoretically be minimal.

Since TypeScript strongly-typed pg/Actor, the code then becomes testable. In Actor.test, we bring in the index file in db to test the DAL. Stubs and mocks are put in place, so tests never actually talk to Postgres but just verify the code we wrote.

To illustrate, this is what the “onion” looks like in Clean Architecture:

Source for original diagram: Uncle Bob's blog - The Clean Architecture

In practical terms, this is what the implementation looks like in Node:

Dependencies are unidirectional, and the DTO is the business entity. Implementation details in pg/Actor adhere to a strict interface that acts much like a contract. This, in some way, follows the dependency of inversion principle because the contract declares what the code must do. The use cases outside the DAL can then take the contract and not care about how it finds or updates data.

Queries in TypeScript

To practice TDD, we simply write the test first, and look for a failure. Put this in Actor.test:

it("findByYearAndLastName", async () => {

query.resolves({

rows: [{}],

});

const result = await actor.findByYearAndLastName(0, "");

expect(result.length).to.equal(1);

});

Add in a passing implementation by replacing findByYearAndLastName in pg/Actor:

async findByYearAndLastName(

year: number, lastName: string): Promise<ActorDto[]> {

const res = await this.#pool.query(`

SELECT a.actor_id,

a.first_name,

a.last_name,

f.title,

f.rental_rate,

a.last_update

FROM actor AS a

INNER JOIN film_actor AS fa ON a.actor_id = fa.actor_id

INNER JOIN film AS f ON fa.film_id = f.film_id

WHERE f.release_year = $1 AND a.last_name = $2

`, [year, lastName]);

return Actor.mapActorResult(res);

}

Notice the call at the end that returns a Promise<ActorDto>. This is a projection that contains the DTO, an external entity, and is how people who are not engineers reason about the business domain.

The #pool object remains hidden, even during runtime, and abstracts implementation details away from use cases.

Transactions in TypeScript

For transactions, let's say there is a list of actor ids with a new last name.

it("updateLastNameByIds#commit", async () => {

client.expects("release").once(); // release back to the pool

client.expects("query").exactly(4).resolves({

rowCount: 1,

});

connect.resolves(new ClientMock());

const count = await actor.updateLastNameByIds("", [0, 0]);

client.verify();

expect(count).to.equal(2);

});

it("updateLastNameByIds#rollback", async () => {

client.expects("release").once();

client.expects("query").twice().rejects().onSecondCall().resolves();

connect.resolves(new ClientMock());

await expect(actor.updateLastNameByIds("", [0, 0])).to.eventually.be.rejected;

client.verify();

});

In the rollback test, the client mock expects the code to execute query exactly twice. Then, it rejects the promise to throw an exception and resolves the subsequent promise when the exception gets caught. This technique shows how sinon can really help to keep tests fluent and somewhat easy to follow.

There is the potential of a failure mid-changes, so this tests both the happy path and the rollback. Here are the implementation details:

async updateLastNameByIds(

lastName: string, ids: number[]): Promise<number> {

let count = 0;

const client = await this.#pool.connect();

try {

await client.query('BEGIN');

const result = await Promise.all(ids.map(id => // loops

client.query(`

UPDATE actor

SET last_name = $1

WHERE actor_id = $2

`, [lastName, id])));

await client.query('COMMIT');

count = result.map(r => r.rowCount).reduce((c, v) => c + v, count);

} catch (e) {

await client.query('ROLLBACK'); // query must resolve

throw e;

} finally {

client.release();

}

return count;

}

The map method iterates through the list of ids. A promise fires all requests in parallel, so the client waits less. Once all requests complete, it returns the result array used to gather a count via a reduce.

If there are any issues mid-changes, an exception is thrown that rollbacks the changes and rethrows this same exception to unwind the call stack. It is important not to swallow the error, or mask the error with a different exception.

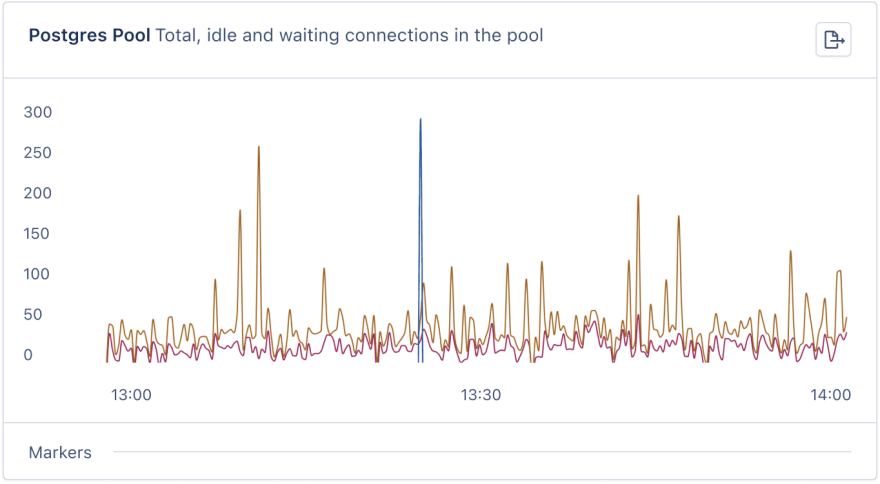

The finally block must release the client back to the pool otherwise the connection pool might run dry.

Scalability in Postgres

Too many queries in a single transaction can cause a performance bottleneck in Postgres. One technique to combat this is to shorten long-running connections by sending a single query instead.

async updateFirstNameByIds(

firstName: string, ids: number[]): Promise<number> {

const res = await this.#pool.query(`

UPDATE actor

SET first_name = $1

WHERE actor_id = ANY($2) -- int[]

`, [firstName, ids]);

return res.rowCount;

}

This time, the query updates the first name instead of the last name. But, this uses an array of integers as a parameter via ANY instead of a transaction. The unit test is similar to what has already been shown, so feel free to take a peek at the GitHub repo.

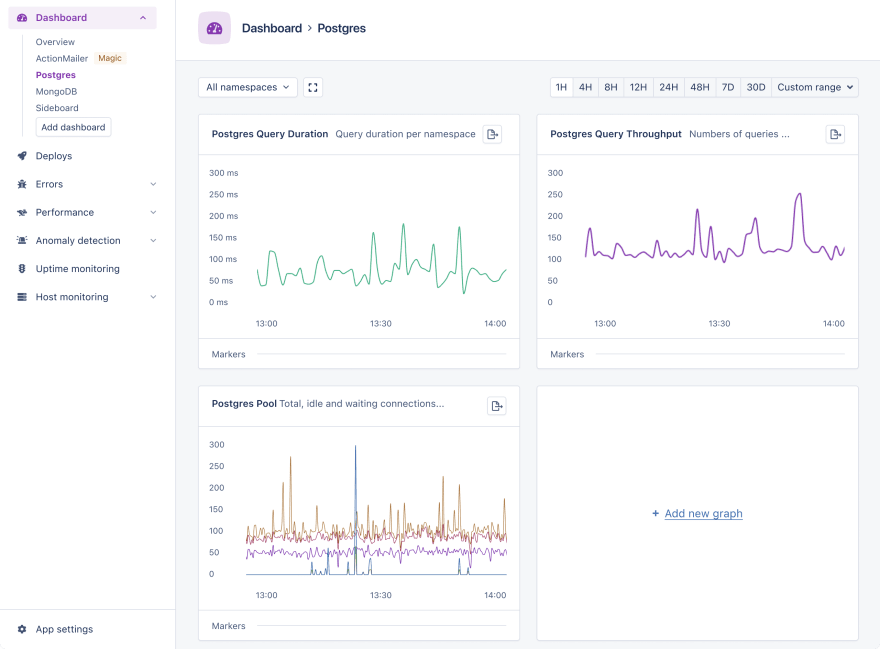

AppSignal offers instrumentation for Postgres, with a magic dashboard on top that visualizes your database performance metrics. Magic dashboards are automatically created for you when you integrate AppSignal into your app, so there is no need to configure the graphs manually.

You can check for starving pool connections via a Postgres magic dashboard.

The Postgres Pool metric captures idle and waiting connections in the pool. This is one effective way to monitor database performance and find ways to reduce SQL pressure.

Putting It All Together: Execute the DAL

Lastly, in the index file under the root folder, do:

import { actor } from "./db";

(async () => {

const actors = await actor.findByYearAndLastName(2006, "Goldberg");

console.log(actors);

let count: number;

count = await actor.updateLastNameByIds("Goldberg", [-1, 0, -1, -1, 0]);

console.log("Trans update: " + count);

count = await actor.updateFirstNameByIds("Parker", [-1, 0, -1, -1, 0]);

console.log("Array update: " + count);

})().then(() => console.log("DONE"));

With this in place, run npm start to execute the entire DAL. Notice we bring in the strongly-typed actor dependency. In Clean Architecture, the business use cases can use this same technique to interact with persisted data.

Data Types

You may come across a gotcha in the output after running the code:

The rentalRate is declared as a number, but the DAL actually returns a string. To tackle this problem, simply add this to db/index:

const NUMERIC_OID = 1700;

types.setTypeParser(NUMERIC_OID, (val) => parseFloat(val));

This tells node-postgres to parse from a numeric type in Postgres, based on an OID, into a number type in Node.

Wrap Up: Now Our DAL Talks to Postgres via Node

In this post, we built a DAL that communicates to Postgres through Node. We saw that connecting to Postgres from Node is possible via node-postgres. This package supports a connection pool, transactions, and parsing db types. We also looked at Clean Architecture principles and how they work in practice.

Until next time, happy coding!

P.S. If you liked this post, subscribe to our JavaScript Sorcery list for a monthly deep dive into more magical JavaScript tips and tricks.

P.P.S. If you need an APM for your Node.js app, go and check out the AppSignal APM for Node.js.

Top comments (0)