As a Platform Team, when you host a Kubernetes cluster for application teams to run their services on, there are a few things you expect those teams to follow to make everyone’s life easier. For instance, you expect every team to respect rules such as:

- CPU/Memory requirements are defined for their application pods [Governance Policy]

- The minimum set of labels required by the organization standard is present, such as application name and cost center [Governance Policy]

- The image repository is from the approved list and not just any public repository [Security Policy]

- In the Development environment, the replica count is always set to 1, for example to save on cost [Governance Policy]

- And the list goes on and on…

It is fair to ask teams to follow these best practices, but we all know from experience that until you enforce the rules, things will not fall into place. That is exactly what this blog is about:

"How to enforce Security and Governance policies to get fine-grained control over the services running in a Kubernetes Cluster"

Logically, here is what we need to achieve it:

Step #1: A component that understands the Governance and/or Security policies and applies them as needed.

Step #2: A way to define the policies we want to enforce.

Step #3: Evaluation of these policies whenever a Kubernetes resource is created, edited or deleted. If all looks good, the resource is created; otherwise an error is flagged.

Note that when a resource does not follow the policies, there are two options: fail the resource creation, or change the resource manifest at runtime based on the policy and then let the resource be created.

Let's take a deeper look at each of the steps.

For Step #1, we will use Open Policy Agent (referred to as OPA from here on), an open source policy engine that helps enforce policies across the stack.

For more details on OPA, check this link —

We will use Gatekeeper to run OPA inside the Kubernetes cluster.

What is Gatekeeper? OPA Gatekeeper is a specialised project that provides first-class integration between OPA and Kubernetes. It uses the OPA Constraint Framework to describe and enforce policy. The framework is built around two concepts, Constraint and ConstraintTemplate. Let’s understand what they mean with an example.

Assume we want to enforce a policy that requires a minimum set of labels on a Kubernetes resource.

For this to work, we first define a ConstraintTemplate, which describes:

- The constraint we want to enforce, written in Rego

- The schema of the constraint

    apiVersion: templates.gatekeeper.sh/v1
    kind: ConstraintTemplate
    metadata:
      name: requiredlabelsconstrainttemplate
      annotations:
        metadata.gatekeeper.sh/title: "Required Labels"
        metadata.gatekeeper.sh/version: 1.0.0
    spec:
      crd:
        spec:
          names:
            kind: RequiredLabelsConstraintTemplate
          validation:
            openAPIV3Schema:
              type: object
              properties:
                labels:
                  type: array
                  items:
                    type: string
      targets:
        - target: admission.k8s.gatekeeper.sh
          rego: |
            package requiredlabelsconstrainttemplate

            violation[{"msg": msg, "details": {"missing_labels": missing}}] {
              provided := {label | input.review.object.metadata.labels[label]}
              required := {label | label := input.parameters.labels[_]}
              missing := required - provided
              count(missing) > 0
              msg := sprintf("Kubernetes resource must have following labels: %v", [missing])
            }
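
To make the Rego rule easier to follow, here is a minimal Python sketch of the same set arithmetic: collect the label keys present on the object, subtract them from the required ones, and report a violation only when something is left over. The function names `missing_labels` and `violation` are made up for this illustration and are not part of OPA or Gatekeeper, which evaluates the Rego itself.

```python
from typing import Optional


def missing_labels(review_object: dict, parameters: dict) -> set:
    """Required label keys that are absent from the object's metadata.labels."""
    labels = (review_object.get("metadata") or {}).get("labels") or {}
    provided = set(labels)                       # label keys on the object
    required = set(parameters.get("labels", []))  # keys demanded by the Constraint
    return required - provided


def violation(review_object: dict, parameters: dict) -> Optional[dict]:
    """Return a violation record like the Rego rule, or None if nothing is missing."""
    missing = missing_labels(review_object, parameters)
    if not missing:
        return None
    return {
        "msg": f"Kubernetes resource must have following labels: {sorted(missing)}",
        "details": {"missing_labels": sorted(missing)},
    }


# Hypothetical Pod that carries only one of the three required labels.
pod = {"metadata": {"name": "nginx", "labels": {"teamName": "HR"}}}
params = {"labels": ["teamName", "serviceName", "costCenter"]}
print(violation(pod, params))
```

A resource with no `labels` field at all is treated as having an empty label set, so every required label is reported as missing.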

Next we define the actual Constraints, which Gatekeeper uses to enforce the policy. Each Constraint is based on the ConstraintTemplate defined above.

Example: the Constraint below enforces the costCenter, serviceName and teamName labels on Pod resources.

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: RequiredLabelsConstraintTemplate
    metadata:
      name: pod-required-labels
    spec:
      match:
        kinds:
          - apiGroups: [""]
            kinds: ["Pod"]
      parameters:
        labels: ["teamName","serviceName","costCenter"]

And the one below enforces the “purpose” label on Namespace resources.

    apiVersion: constraints.gatekeeper.sh/v1beta1
    kind: RequiredLabelsConstraintTemplate
    metadata:
      name: namespace-required-labels
    spec:
      match:
        kinds:
          - apiGroups: [""]
            kinds: ["Namespace"]
      parameters:
        labels: ["purpose"]

Both Constraints above use the same ConstraintTemplate, and their match fields tell Gatekeeper which Constraint applies to which Kubernetes resource.
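
As a rough illustration of how the match fields select a Constraint, the Python sketch below filters Constraints by API group and kind. It is a simplification: Gatekeeper's real matcher supports more criteria (namespaces, label selectors and so on), the helper names `kind_matches` and `applicable` are invented here, and treating a missing `kinds` list as "match everything" is an assumption of this sketch.

```python
def kind_matches(constraint: dict, api_group: str, kind: str) -> bool:
    """True if any spec.match.kinds entry covers the given API group and kind."""
    selectors = constraint.get("spec", {}).get("match", {}).get("kinds", [])
    if not selectors:
        return True  # assumption of this sketch: no kinds filter means match all
    return any(
        ("*" in sel.get("apiGroups", []) or api_group in sel.get("apiGroups", []))
        and ("*" in sel.get("kinds", []) or kind in sel.get("kinds", []))
        for sel in selectors
    )


def applicable(constraints: list, api_group: str, kind: str) -> list:
    """Constraints whose match block selects the resource being reviewed."""
    return [c for c in constraints if kind_matches(c, api_group, kind)]


# The two Constraints from this blog, reduced to the fields the sketch needs.
CONSTRAINTS = [
    {
        "metadata": {"name": "pod-required-labels"},
        "spec": {"match": {"kinds": [{"apiGroups": [""], "kinds": ["Pod"]}]}},
    },
    {
        "metadata": {"name": "namespace-required-labels"},
        "spec": {"match": {"kinds": [{"apiGroups": [""], "kinds": ["Namespace"]}]}},
    },
]

for c in applicable(CONSTRAINTS, "", "Pod"):
    print(c["metadata"]["name"])
```

The empty string in `apiGroups` stands for the core API group, which is where both Pod and Namespace live.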

For Step #2, we will use Rego, the purpose-built declarative policy language that OPA uses.

If we want to define a policy that ensures the expected labels are present on the Pods being created, this is what it would look like.

For more details on Rego, check this link —

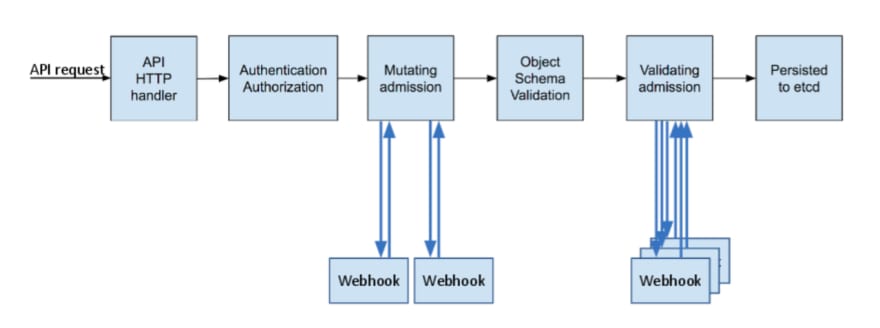

For Step #3, we will use the admission control construct of Kubernetes. An admission controller can be thought of as an interceptor that intercepts (authenticated) API requests and may change the request object or deny the request altogether. Dynamic admission controllers such as Gatekeeper are plugged in through the webhook mechanism.

Admission controllers come in two types, mutating and validating. A mutating admission controller can modify the resource definition, while a validating admission controller only validates the final resource definition before the object is saved to etcd.
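
To show what "mutating" means on the wire, here is a small Python sketch of a mutating webhook answer that pins a Deployment to a single replica, the Development-environment rule mentioned at the start. It uses the generic Kubernetes `AdmissionReview` response shape (a base64-encoded JSON Patch), not Gatekeeper's own mutation feature, and the helper names `force_single_replica` and `mutating_response` are hypothetical.

```python
import base64
import json


def force_single_replica(deployment: dict) -> list:
    """JSON Patch operations that set spec.replicas to 1 (empty if nothing to change)."""
    spec = deployment.get("spec", {})
    if "replicas" not in spec:
        return []  # Kubernetes defaults a missing replicas field to 1
    if spec["replicas"] == 1:
        return []
    return [{"op": "replace", "path": "/spec/replicas", "value": 1}]


def mutating_response(uid: str, patch_ops: list) -> dict:
    """Build an AdmissionReview response; attach a patch only when there are operations."""
    response = {"uid": uid, "allowed": True}
    if patch_ops:
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch_ops).encode()).decode()
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


# Hypothetical incoming object asking for three replicas.
ops = force_single_replica({"kind": "Deployment", "spec": {"replicas": 3}})
print(json.dumps(mutating_response("example-uid", ops), indent=2))
```

The validating webhooks run after all mutations, so a policy that checks the replica count would see the patched value.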

For more details on the Kubernetes Admission Controller, check this link —

So, our final flow looks like this:

- The Platform Team installs OPA Gatekeeper in the Kubernetes cluster.

- The Platform Team also creates policies as Constraints and ConstraintTemplates and applies them to the Kubernetes cluster.

- The Application Team triggers its service deployment pipeline to deploy a service to Kubernetes.

- At the time of pod creation, the Kubernetes admission controller intercepts the request and uses OPA Gatekeeper to validate it.

- OPA Gatekeeper checks the list of Constraints deployed in the cluster and, if one matches the Kubernetes resource being created, performs the validation by evaluating the policy written in Rego inside the corresponding ConstraintTemplate.

- Based on the evaluation, Gatekeeper responds with an Allow or Deny response to the Kubernetes API Server.
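
The last step of the flow, the Allow or Deny answer, can be sketched in Python as follows. The response uses the standard Kubernetes `AdmissionReview` shape that any validating webhook returns; the function name `validating_response` and the sample uid are assumptions made for this illustration, and Gatekeeper's actual implementation is not shown here.

```python
import json


def validating_response(uid: str, violations: list) -> dict:
    """Allow the request when there are no violations, otherwise deny it with a message."""
    response = {"uid": uid, "allowed": not violations}
    if violations:
        # 403 is what surfaces in kubectl as "Error from server (Forbidden)".
        response["status"] = {"code": 403, "message": "; ".join(violations)}
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


# Hypothetical evaluation result for a Pod that is missing labels.
deny = validating_response(
    "example-uid",
    ["[pod-required-labels] Kubernetes resource must have following labels: costCenter"],
)
print(json.dumps(deny, indent=2))
```

The `uid` must be copied from the incoming request so that the API Server can pair the answer with the request it sent.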

Let’s see things in action.

We will use the AWS EKS managed service to host our Kubernetes cluster and the eksctl tool to create it.

Step #0: Install the tools needed to create the Kubernetes infrastructure. The commands were tested on Linux.

    # Install eksctl tool
    curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
    sudo mv /tmp/eksctl /usr/local/bin

    # Install kubectl tool: download it, make it executable and put it on the PATH
    curl -O https://s3.us-west-2.amazonaws.com/amazon-eks/1.23.13/2022-10-31/bin/linux/amd64/kubectl
    chmod +x ./kubectl
    sudo mv ./kubectl /usr/local/bin

    # Install or update AWS CLI
    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    unzip awscliv2.zip
    sudo ./aws/install

Step #1: Create the EKS cluster using the eksctl tool and deploy the Kubernetes Metrics service inside it.

Clone the public GitHub repository https://github.com/waswani/kubernetes-opa, navigate to the kubernetes-opa folder and run the commands below.

    # Create EKS Cluster with version 1.24
    eksctl create cluster -f eks-cluster.yaml

    # Output like below shows cluster has been successfully created
    2023-01-24 18:25:50 [ℹ] kubectl command should work with "/home/ec2-user/.kube/config", try 'kubectl get nodes'
    2023-01-24 18:25:50 [✔] EKS cluster "opa-demo" in "us-west-2" region is ready

Step #2: Deploy OPA Gatekeeper

    kubectl apply -f gatekeeper.yaml

    # Check the pods deployed in the gatekeeper-system namespace
    kubectl get pods -n gatekeeper-system

    NAME                                             READY   STATUS    RESTARTS   AGE
    gatekeeper-audit-5cd987f4b7-b24qk                1/1     Running   0          10m
    gatekeeper-controller-manager-856954594c-2bbsc   1/1     Running   0          10m
    gatekeeper-controller-manager-856954594c-7qnft   1/1     Running   0          10m
    gatekeeper-controller-manager-856954594c-8szl9   1/1     Running   0          10m

Step #3: Define the ConstraintTemplate and deploy it into the Kubernetes cluster.

We will use the same template that we created earlier in the blog for enforcing labels.

    kubectl apply -f requiredLabelsConstraintTemplate.yaml

    #To check installed templates
    kubectl get constrainttemplate

    #Output
    NAME                               AGE
    requiredlabelsconstrainttemplate   40s

Step #4: Define the Constraints and deploy them into the Kubernetes cluster.

We will use the same Constraints that we created earlier in the blog for enforcing labels on Pod and Namespace objects.

    #For Pod
    kubectl apply -f podRequiredLabelsConstraint.yaml

    #For Namespace
    kubectl apply -f namespaceRequiredLabelsConstraint.yaml

    #To check installed constraints
    kubectl get constraints

    #Output
    NAME                        ENFORCEMENT-ACTION   TOTAL-VIOLATIONS
    namespace-required-labels
    pod-required-labels                              13
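
The TOTAL-VIOLATIONS column is filled in by Gatekeeper's audit, which periodically checks resources that already exist in the cluster against the Constraints; the 13 here are most likely Pods that were running before the Constraint was applied. The Python sketch below groups audit results by namespace. The sample object is hypothetical and trimmed down (the pod names are invented), and `violations_by_namespace` is a made-up helper name.

```python
from collections import Counter


def violations_by_namespace(constraint: dict) -> Counter:
    """Count audit violations per namespace from a Constraint's status block."""
    violations = constraint.get("status", {}).get("violations", [])
    return Counter(v.get("namespace", "<cluster-scoped>") for v in violations)


# Hypothetical excerpt of a Constraint as returned with "-o json".
SAMPLE = {
    "metadata": {"name": "pod-required-labels"},
    "status": {
        "totalViolations": 3,
        "violations": [
            {"kind": "Pod", "name": "coredns-example", "namespace": "kube-system",
             "enforcementAction": "deny", "message": "missing labels"},
            {"kind": "Pod", "name": "aws-node-example", "namespace": "kube-system",
             "enforcementAction": "deny", "message": "missing labels"},
            {"kind": "Pod", "name": "nginx", "namespace": "default",
             "enforcementAction": "deny", "message": "missing labels"},
        ],
    },
}

for namespace, count in violations_by_namespace(SAMPLE).most_common():
    print(f"{namespace}: {count}")
```

Gatekeeper caps how many entries it keeps in `status.violations`, so `totalViolations` can be larger than the length of the list. Audit only reports existing resources; it does not delete or block them.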

Step #5: It’s time to test by creating Pods and Namespaces with and without the required labels and see the outcome.

Create a Pod with no labels applied:

    kubectl run nginx --image=nginx

    #Output - Failed pod creation, as expected
    Error from server (Forbidden): admission webhook "validation.gatekeeper.sh" denied the request: [pod-required-labels] Kubernetes resource must have following labels: {"costCenter", "serviceName", "teamName"}

Create a Pod with the required labels applied:

    kubectl run nginx --image=nginx --labels=costCenter=Marketing,teamName=HR,serviceName=HRFrontend

    #Output - Pod created successfully
    pod/nginx created

Create a Namespace with no labels applied:

    kubectl create ns observability

    #Output, creation failed
    Error from server (Forbidden): admission webhook "validation.gatekeeper.sh" denied the request: [namespace-required-labels] Kubernetes resource must have following labels: {"purpose"}

Create a Namespace with the required labels applied:

    kubectl apply -f test-ns.yaml

    #Output, NS created as it has the label "purpose" applied to it.
    namespace/test created
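
The contents of test-ns.yaml are not shown in this blog. As a rough idea of what a compliant Namespace looks like, the Python sketch below builds an equivalent manifest and prints it as JSON, which `kubectl apply -f` also accepts. The label value `demo` and the helper name `namespace_manifest` are assumptions; only the namespace name `test` and the `purpose` label key come from the example above.

```python
import json


def namespace_manifest(name: str, labels: dict) -> dict:
    """Minimal Namespace manifest carrying the given labels."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": dict(labels)},
    }


# "demo" is a placeholder value; the Constraint only checks that the key exists.
manifest = namespace_manifest("test", {"purpose": "demo"})
print(json.dumps(manifest, indent=2))
```

If the script were saved as, say, make_ns.py (a hypothetical file name), its output could be piped straight into the cluster with `python make_ns.py | kubectl apply -f -`.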

To summarise, we created a single ConstraintTemplate to enforce mandatory labels and then, depending on the type of Kubernetes resource on which we wanted to enforce them, created Constraints that apply the template as needed. All of this was possible with the help of OPA Gatekeeper.

Hope you enjoyed reading this blog. Do share it with your friends if it has helped you in any way.

Happy Blogging…Cheers!!!
