Amazon Bedrock is a powerful AWS service that lets you build and scale generative AI applications using foundation models from top providers such as Mistral AI, Anthropic, Meta, Cohere, and Amazon (Titan), all without managing infrastructure.

In this post, we'll walk through how to invoke a foundation model on Amazon Bedrock using Python, with Mistral AI as our example. You’ll see the full code, the key setup steps, and how to avoid common errors.

🚧 Step 1: Enable Model Access in Amazon Bedrock

Before using any models on Bedrock, you must explicitly request access for each one.

Here's how:

- Go to the Amazon Bedrock Console.

- From the sidebar, click "Model access".

- Choose the providers you want access to (e.g., Mistral, Anthropic, Meta).

- Click "Request model access" for each.

🌍 Step 2: Set Your Region

Amazon Bedrock model availability depends on the AWS region.

For the broadest selection of models and features, use the us-west-2 region (Oregon), where most models, including those from Mistral, Meta, and Anthropic (Claude), are available.

For this walkthrough, however, I’m using the eu-north-1 region (Stockholm), which currently supports a smaller set of models that suits my needs.

If you're trying to use a specific model and it's not available in your current region, try switching to us-west-2, eu-north-1, or even eu-central-1 depending on availability.
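One way to check availability before switching regions is to ask the Bedrock control-plane API which models a region offers. The following is a minimal sketch rather than part of the original walkthrough: it assumes the `list_foundation_models` call on the boto3 `bedrock` client, and the helper name `models_by_provider` is hypothetical.

```python
def models_by_provider(bedrock_client, provider):
    """Return the sorted model IDs that the client's region lists for one provider."""
    response = bedrock_client.list_foundation_models()
    return sorted(
        summary["modelId"]
        for summary in response["modelSummaries"]
        # Case-insensitive match, so "mistral" also matches "Mistral AI"
        if provider.lower() in summary["providerName"].lower()
    )

# Usage (needs boto3 and valid AWS credentials):
#   import boto3
#   for region in ("us-west-2", "eu-north-1", "eu-central-1"):
#       # Note: the control-plane client is "bedrock", not "bedrock-runtime"
#       client = boto3.client("bedrock", region_name=region)
#       print(region, models_by_provider(client, "mistral"))
```

The listing returns base model IDs. Some models can only be invoked through an inference profile ID (such as the `eu.`-prefixed ID used later in this post), so treat the output as a starting point and confirm the exact ID in the console.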

Update your AWS configuration:

aws configure

Or set environment variables:

export AWS_ACCESS_KEY_ID=your-access-key-id

export AWS_SECRET_ACCESS_KEY=your-secret-access-key

export AWS_DEFAULT_REGION=eu-north-1 # boto3 reads AWS_DEFAULT_REGION; change this to your desired region
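Before going further, it can help to confirm that the credentials and region are actually being picked up. Here is a small sketch, assuming the STS `get_caller_identity` call; the helper name `describe_identity` is hypothetical and only formats the result.

```python
def describe_identity(sts_client):
    """Return the account ID and ARN for the credentials the client resolved."""
    identity = sts_client.get_caller_identity()
    return f"account={identity['Account']} arn={identity['Arn']}"

# Usage (needs boto3 and valid AWS credentials):
#   import boto3
#   session = boto3.session.Session()
#   print("region:", session.region_name)  # None means no region was resolved
#   print(describe_identity(session.client("sts")))
```

If the call fails with a credentials error, revisit the configuration step above before debugging anything Bedrock-specific.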

🐍 Step 3: Install Dependencies

Make sure a recent Python 3 is installed (current boto3 releases require Python 3.9 or later), and install boto3:

pip install boto3

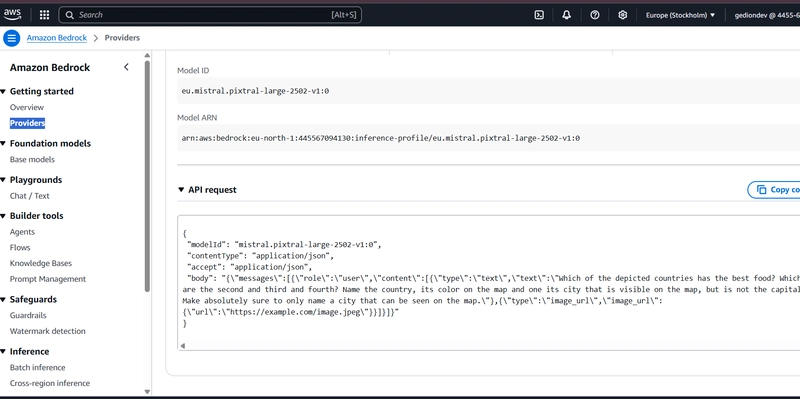

🔑 Step 4: Get the API Request Format for Any Model

To find the exact kwargs payload structure for invoking a model via API:

- Go to the Amazon Bedrock Console.

- Choose "providers" from the sidebar.

- Select a model (e.g., Mistral, Claude, Titan).

- Scroll down to the "API Requests" section.

- You’ll see ready-to-use API request samples.

You can copy and adapt this request for any model.

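For example, if you're switching from Mistral to Claude or Llama, update the modelId and check that model's sample request: the body format differs between providers, so copy the new sample rather than reusing the Mistral one unchanged.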

In my example, I removed the `type=image` content block; I’m only using `"type": "text"` in my prompt.

If you want to include images, just keep the image type block and provide a valid URL or upload method.
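If you expect to switch models often, the Converse API is an alternative to copying a per-model body: it accepts the same message shape for every model that supports it. This is a different call from the `invoke_model` approach used in this post, shown here only as a hedged sketch. The helper name `ask` and the `max_tokens` default are assumptions, and you should confirm that your chosen model supports Converse.

```python
def ask(runtime_client, model_id, prompt, max_tokens=256):
    """Send one user message through the Converse API and return the reply text."""
    response = runtime_client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The reply is a list of content blocks; join the text ones
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)

# Usage (needs boto3, valid credentials, and model access):
#   import boto3
#   client = boto3.client("bedrock-runtime", region_name="eu-north-1")
#   print(ask(client, "eu.mistral.pixtral-large-2502-v1:0",
#             "What is the capital city of Italy?"))
```

With this shape, switching models should only require changing `model_id`, which is the convenience the per-model samples cannot offer.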

💻 Step 5: Write Python Code to Invoke a Bedrock Model

We’ll now write a Python script that:

- Connects to Bedrock via `boto3`

- Sends a prompt to a model

- Prints the response

Create a file called invoke_bedrock.py:

import json

import boto3

# Initialize the Bedrock runtime client for the correct region
bedrock_runtime = boto3.client("bedrock-runtime", region_name="eu-north-1")

# Your question to the model
prompt = "What is the capital city of Italy?"

# Request arguments: model ID, content types, and the JSON-encoded message body
kwargs = {
    "modelId": "eu.mistral.pixtral-large-2502-v1:0",
    "contentType": "application/json",
    "accept": "application/json",
    "body": json.dumps({
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": prompt
                    }
                ]
            }
        ]
    })
}

# Call Bedrock
response = bedrock_runtime.invoke_model(**kwargs)

# Parse the response (the body is a stream, so read it before decoding the JSON)
response_body = response['body'].read()
output = json.loads(response_body)

# Print the assistant's reply
assistant_message = output['choices'][0]['message']['content']
print("Model response:")
print(assistant_message)

▶️ Step 6: Run the Script

In your terminal or command prompt:

python invoke_bedrock.py

Output:

Model response:

The capital city of Italy is Rome (in Italian, "Roma"). Rome is ...
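Once the script works, one possible refactor is to split it into a function that builds the request and one that parses the reply, so both can be checked without calling AWS. This is a sketch under the assumption that the response keeps the `choices[0].message.content` shape shown above; the names `build_request` and `parse_reply` are hypothetical.

```python
import io
import json

def build_request(model_id, prompt):
    """Build the invoke_model keyword arguments for a single text prompt."""
    body = {"messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}]}
    return {
        "modelId": model_id,
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }

def parse_reply(response):
    """Extract the assistant text from an invoke_model response."""
    output = json.loads(response["body"].read())
    return output["choices"][0]["message"]["content"]

# Offline check with a stand-in response (no AWS call is made here)
fake_response = {
    "body": io.BytesIO(json.dumps({"choices": [{"message": {"content": "Rome"}}]}).encode())
}
print(parse_reply(fake_response))  # prints: Rome
```

In the real script, `bedrock_runtime.invoke_model(**build_request(model_id, prompt))` would replace the hand-written `kwargs`, and `parse_reply(response)` would replace the three parsing lines.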

⚠️ Common Errors and Fixes

| Error | Solution |
|---|---|
| NoCredentialsError | Run aws configure or set environment variables. |
| ValidationException or "inference profile required" | Use the model ID or inference profile ID that is valid for your region (for example, the eu.-prefixed ID used in the script above). For general use, prefer us-west-2. |
| modelId not found | Use a model ID valid for your region (e.g., mistral.mixtral-8x7b-instruct-v0:1 in us-west-2). |
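The errors above can also be turned into hints inside the script itself. The sketch below is one way to do that, not the only one: the `HINTS` mapping and the helpers `error_code` and `hint_for` are hypothetical, and the `AccessDeniedException` entry is an assumption about what a missing model-access grant looks like.

```python
HINTS = {
    "NoCredentialsError": "Run aws configure or set the AWS credential environment variables.",
    "ValidationException": "Check that the model or inference profile ID is valid for your region.",
    # Assumption: a model you have not been granted access to surfaces as this code
    "AccessDeniedException": "Request access to the model in the Bedrock console.",
}

def error_code(exc):
    """Return the AWS error code if the exception carries one, else its class name."""
    response = getattr(exc, "response", None) or {}
    return response.get("Error", {}).get("Code", type(exc).__name__)

def hint_for(exc):
    """Map an exception to a short troubleshooting hint."""
    code = error_code(exc)
    return HINTS.get(code, f"Unrecognized error: {code}")

# Usage inside invoke_bedrock.py (needs boto3/botocore):
#   from botocore.exceptions import BotoCoreError, ClientError
#   try:
#       response = bedrock_runtime.invoke_model(**kwargs)
#   except (BotoCoreError, ClientError) as exc:
#       print(hint_for(exc))
```

`NoCredentialsError` derives from `BotoCoreError` and carries no error code, which is why the helper falls back to the class name.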

✅ Summary

You’ve now successfully:

✅ Set up AWS credentials

✅ Requested model access

✅ Written a Python script to invoke Mistral via Bedrock

✅ Printed a model response

This foundation lets you build anything from chatbots to smart assistants powered by Amazon Bedrock.
