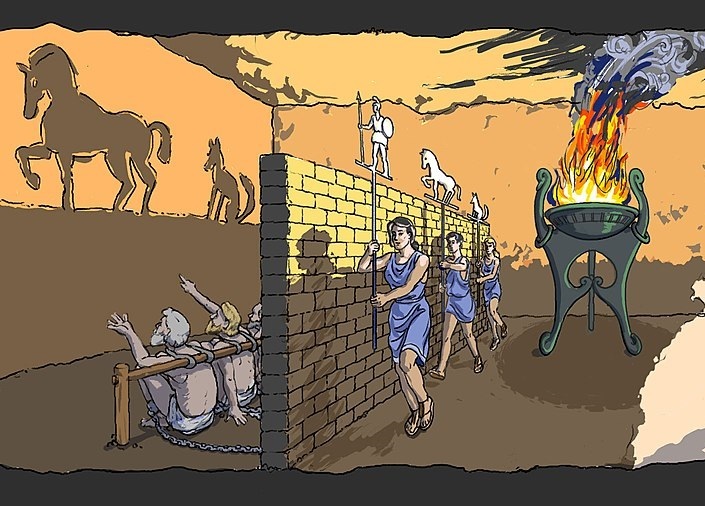

Imagine prisoners chained together in a cave, with all they can see being a wall in front of them. Behind the prisoners is a fire, and between the fire and the prisoners are people carrying sculptures that cast shadows on the wall. The prisoners watch these shadows, believing them to be real.

If an object (a book, let us say) is carried past behind them and casts a shadow on the wall, and one of them says “I see a book”, he thinks that the word “book” refers to the very thing he is looking at. But he would be wrong. He is only looking at a shadow. He cannot see the real referent of the word “book”. To see it, he would have to turn his head around.

In many computer vision tasks, images are to neural networks what shadows are to the prisoners. To understand an image's contents, a network needs to find and use variables that it cannot observe directly but that are the true explanatory factors behind the image. These factors are known as latent variables.

What is a Latent Space?

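A latent space is the space of values that the latent variables above can take. A latent vector is a single point in that space: the values of the latent variables for one particular image. It is also commonly referred to as a feature representation.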

Think of a latent vector as a collection of an image's 'features', i.e. variables that describe what is going on in an image, such as the setting (medieval or modern), the time of day, and so on. This is not exactly how it works - it is just an intuition. The idea is that the latent variables represent high-level attributes, rather than raw pixels with little meaning.

Where are Latent Variables Used?

Latent variables can be used when connecting computer vision models to models from other domains that do not deal with image data. For example, image captioning combines computer vision with natural language processing. To generate a caption for an image, an NLP model requires the image's latent variables. That is where it gets the understanding necessary to describe what is going on in the image.

Latent variables are also used in image manipulation. When learned well, these variables can be used to adjust higher-level properties of an image. A common application of this is the creation of deepfakes.

Face recognition is a problem that heavily leverages latent variables. A model can be trained on face recognition data and used to obtain a latent vector for every face it encounters. That latent vector can then be compared to the latent vector of another image to see if the two faces match.
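The comparison step can be sketched in a few lines. This is only an illustration: it assumes cosine similarity as the matching score, and the vectors, the `same_identity` helper and the `0.8` threshold are all made up for the example. In practice the vectors would come from a trained face model and the threshold would be tuned on validation data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two latent vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_identity(a, b, threshold=0.8):
    """Hypothetical matching rule: faces match if their vectors are close enough."""
    return cosine_similarity(a, b) >= threshold


# Made-up 3-D latent vectors; real face embeddings have far more dimensions.
face_a = [0.9, 0.1, 0.4]
face_a_again = [0.85, 0.15, 0.42]  # same person, slightly different photo
face_b = [-0.2, 0.9, 0.1]          # a different person

print(same_identity(face_a, face_a_again))
print(same_identity(face_a, face_b))
```

Comparing latent vectors rather than raw pixels is what makes the match robust to small changes in lighting or pose, provided the model has learned features that capture identity.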

Another application of latent vectors is the debiasing of computer vision systems. By learning a latent space, a computer vision model gains a high-level understanding of the data and can be used to tell which features are underrepresented. In the context of face recognition, for instance, these could be faces of a certain race or skin complexion.
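One way to act on this, sketched below, is to bin the training examples by the value of a latent variable and sample rare bins more often. This is a simplified illustration, not a complete debiasing method: the `resampling_weights` function, the bin count, the smoothing constant and the example values are all assumptions made for the sketch.

```python
def resampling_weights(latent_values, num_bins=4, smoothing=1.0):
    """Give examples from sparsely populated latent regions a higher sampling weight."""
    lo, hi = min(latent_values), max(latent_values)
    width = (hi - lo) / num_bins
    if width == 0:
        width = 1.0  # all values identical: everything falls into bin 0

    def bin_of(value):
        # Clamp so that the maximum value lands in the last bin.
        return min(int((value - lo) / width), num_bins - 1)

    # Histogram of how many examples fall into each latent bin.
    counts = [0] * num_bins
    for value in latent_values:
        counts[bin_of(value)] += 1

    # Weight is inversely proportional to how crowded the example's bin is.
    raw = [1.0 / (counts[bin_of(value)] + smoothing) for value in latent_values]
    total = sum(raw)
    return [w / total for w in raw]


# Made-up values of one latent variable: five similar examples and one rare one.
latents = [0.1, 0.12, 0.15, 0.2, 0.22, 0.9]
weights = resampling_weights(latents)
print(weights)
```

Drawing training batches according to these weights shows the model the rare example more often than uniform sampling would.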

How is a Latent Space Learned?

A popular model used to learn a latent space for images of a given distribution is the autoencoder, which consists of two parts - an encoder and a decoder. The encoder portion of the network maps an image to a latent vector, and the decoder maps that latent vector back to a reconstruction of the original image. The latent vector is usually much smaller than the image, so the network is forced to keep only the most informative factors. By training the model end to end until the reconstruction is as close as possible to the original image, the latent space is learned.
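The training loop can be sketched with a toy example. The code below is a deliberately tiny linear autoencoder written in plain Python, not a realistic image model: the "images" are 2-D points, the latent code is a single number, and the data, initial weights, step count and learning rate are all assumptions chosen for illustration. Real autoencoders use deep networks and a framework with automatic differentiation.

```python
# Toy "images": 2-D points that all lie on the line x2 = 2 * x1, so a single
# latent variable (position along the line) is enough to explain them.
DATA = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(x, w_enc):
    """Encoder: map a 2-D input to its 1-D latent code."""
    return w_enc[0] * x[0] + w_enc[1] * x[1]


def decode(z, w_dec):
    """Decoder: map a 1-D latent code back to a 2-D reconstruction."""
    return (w_dec[0] * z, w_dec[1] * z)


def reconstruction_loss(w_enc, w_dec):
    """Mean squared reconstruction error over the toy dataset."""
    total = 0.0
    for x in DATA:
        x_hat = decode(encode(x, w_enc), w_dec)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(DATA)


def train(steps=500, lr=0.05):
    """Train encoder and decoder end to end with plain gradient descent."""
    w_enc = [0.1, 0.1]
    w_dec = [0.1, 0.1]
    n = len(DATA)
    for _ in range(steps):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in DATA:
            z = encode(x, w_enc)
            x_hat = decode(z, w_dec)
            err = (x_hat[0] - x[0], x_hat[1] - x[1])
            # Gradient of the squared error with respect to the latent code.
            dz = 2.0 * (err[0] * w_dec[0] + err[1] * w_dec[1])
            for i in range(2):
                g_dec[i] += 2.0 * err[i] * z / n
                g_enc[i] += dz * x[i] / n
        for i in range(2):
            w_enc[i] -= lr * g_enc[i]
            w_dec[i] -= lr * g_dec[i]
    return w_enc, w_dec


initial_loss = reconstruction_loss([0.1, 0.1], [0.1, 0.1])
w_enc, w_dec = train()
final_loss = reconstruction_loss(w_enc, w_dec)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Because every point is squeezed through one number and then reconstructed, the only way to reduce the loss is for that number to track the point's position along the line, which is exactly the hidden factor that generated the data.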

Feature Disentanglement

Ideally, we want the learned latent variables to be as independent of each other as possible, so that when we vary a given latent variable, only the aspect of the image that the variable represents changes. There are many ways to enforce this - most of them are beyond the scope of this article.
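A rough first check for entanglement, sketched below, is to measure the correlation between pairs of latent dimensions across many encoded images. The `pearson` helper and the example codes are made up for illustration. Note that this is only a diagnostic under a simplifying assumption: a correlation near zero is necessary for independence but does not guarantee it.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two latent dimensions across a batch."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up 2-D latent codes for four images each.
entangled = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
disentangled = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]

for name, codes in (("entangled", entangled), ("disentangled", disentangled)):
    dim0 = [c[0] for c in codes]
    dim1 = [c[1] for c in codes]
    print(name, pearson(dim0, dim1))
```

In the entangled codes the second dimension is just a multiple of the first, so changing one necessarily changes the other. In the disentangled codes each dimension varies freely of the other.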
