In my previous article, I explained Amazon Bedrock's Intelligent Prompt Routing and how it selects the most suitable model based on the prompt. I demonstrated this feature using the Amazon Bedrock Playground.

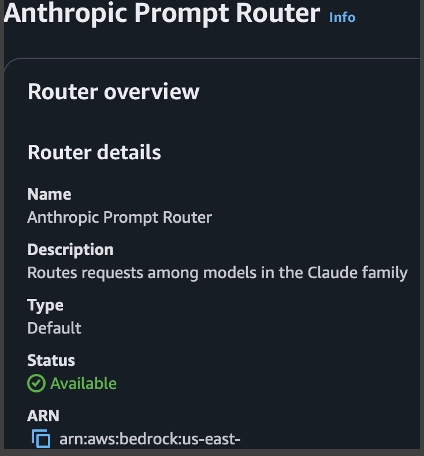

For example, when specifying the Anthropic model family ARN, Amazon Bedrock automatically routes the request to either Anthropic Haiku or Anthropic Sonnet, depending on the prompt's complexity.

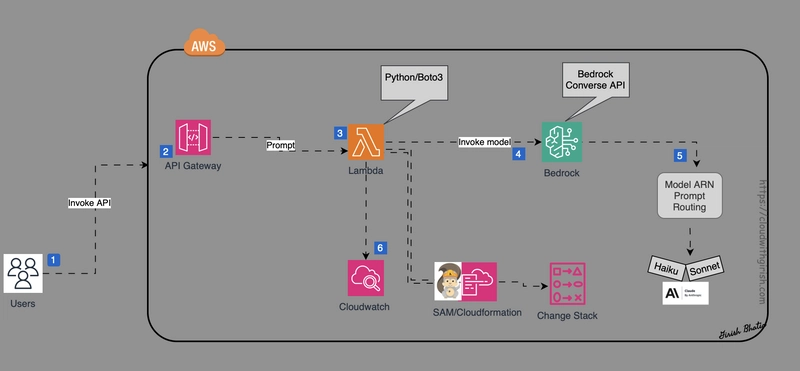

In this article, I will demonstrate the same feature programmatically using the AWS SDK for Python (Boto3). I will invoke this functionality through AWS Lambda, API Gateway, and the Amazon Bedrock API.

Please keep in mind that this feature is not yet generally available (GA). It is in preview and supports routing between Claude 3.5 Sonnet and Claude Haiku, or between Llama 3.1 8B and Llama 3.1 70B.

Let's look at the architecture diagram!

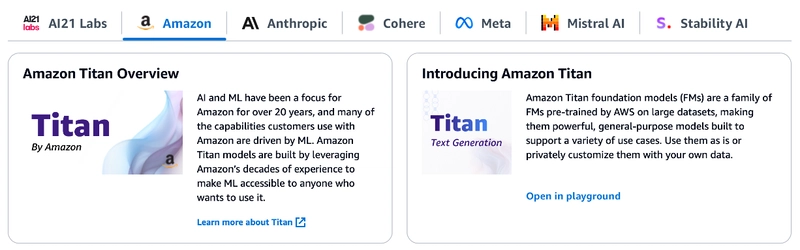

Introduction to Amazon Bedrock

Amazon Bedrock is a fully managed service that provides access to a variety of foundation models, including Anthropic Claude, AI21 Jurassic-2, Stability AI, Amazon Titan, and others.

As a serverless offering from Amazon, Bedrock enables seamless integration with popular foundation models (FMs). It also allows you to privately customize these models with your own data using AWS tools, eliminating the need to manage any infrastructure.

Additionally, Bedrock supports the import of custom models.

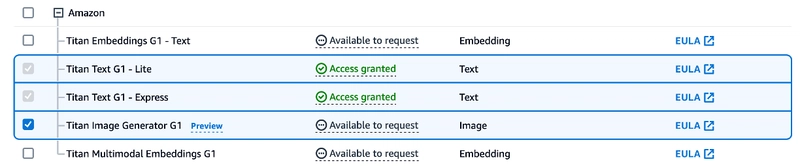

With Bedrock, you have a choice of foundation models.

Before a model can be used, you need to request access to it.

For Amazon models, it typically takes a few minutes to get access.

Introduction to Amazon Bedrock Intelligent Prompt Routing

At AWS re:Invent 2024, AWS introduced an exciting new feature: Intelligent Prompt Routing.

This Bedrock feature automates model selection within a model family. Instead of manually choosing specific models like Anthropic Haiku or Anthropic Sonnet, you simply provide a model family ARN. Bedrock then analyzes your prompt and routes it to the most appropriate model.

For instance, with the Anthropic model family ARN, Bedrock automatically directs:

- Simple queries to Anthropic Haiku for fast, efficient responses

- Complex requests to Anthropic Sonnet for detailed, comprehensive answers

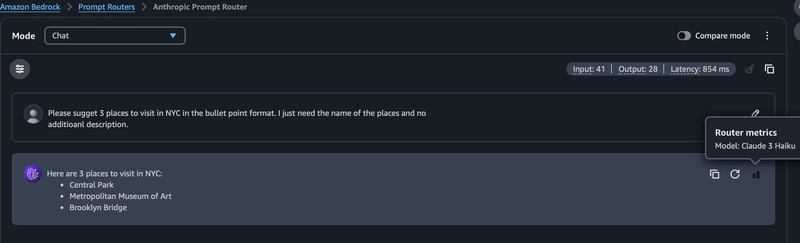
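The routing described above can be sketched with the Boto3 Converse API. This is a minimal sketch under stated assumptions, not the Lambda code used later in this article: it assumes the prompt router ARN is passed as `modelId`, and that the response reports the chosen model under `trace.promptRouter.invokedModelId`. The helper name `ask_router` is hypothetical.

```python
from typing import Any, Dict, Tuple


def ask_router(client: Any, router_arn: str, prompt: str) -> Tuple[str, str]:
    """Send one user prompt through a Bedrock prompt router.

    `client` is expected to be a Boto3 "bedrock-runtime" client (or any
    object with a compatible `converse` method). Returns the answer text
    and the ID of the model the router picked ("unknown" if not reported).
    """
    response: Dict[str, Any] = client.converse(
        modelId=router_arn,  # a prompt router ARN instead of a fixed model ID
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    # Join all text blocks of the assistant message.
    blocks = response["output"]["message"]["content"]
    text = "".join(block.get("text", "") for block in blocks)
    # Assumption: the routing decision is reported in the response trace.
    trace = response.get("trace", {}).get("promptRouter", {})
    return text, trace.get("invokedModelId", "unknown")
```

With a real client, this might be called as `ask_router(boto3.client("bedrock-runtime"), router_arn, "Suggest 3 places to visit in NYC")`, where `router_arn` is the ARN copied from the prompt router's page in the Bedrock console.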

Using Intelligent Prompt Routing in Amazon Bedrock Playground

In this section, I will use the Amazon Bedrock Playground to try out the Intelligent Prompt Routing feature.

Let's sign in to the AWS Management Console and navigate to the Amazon Bedrock service.

You will see prompt routers for Anthropic and Meta LLMs.
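The same list of routers can likely be retrieved in code. The sketch below assumes the Boto3 `bedrock` control-plane client exposes `list_prompt_routers` and returns summaries under `promptRouterSummaries` with `promptRouterName` and `promptRouterArn` fields. Treat those names as assumptions to verify against the Boto3 documentation for your SDK version. The helper `list_router_arns` is hypothetical and reads only the first page of results.

```python
from typing import Any, Dict


def list_router_arns(bedrock_client: Any) -> Dict[str, str]:
    """Map each prompt router name to its ARN (first page of results only).

    `bedrock_client` is expected to be a Boto3 "bedrock" (control-plane)
    client, not the "bedrock-runtime" client used for inference.
    """
    response = bedrock_client.list_prompt_routers()
    # Assumption: summaries are returned under "promptRouterSummaries".
    summaries = response.get("promptRouterSummaries", [])
    return {s["promptRouterName"]: s["promptRouterArn"] for s in summaries}
```

A call such as `list_router_arns(boto3.client("bedrock"))` would then give you the ARN to pass to the runtime API instead of copying it from the console.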

Select Anthropic Prompt Router.

I will select Playground from the navigation panel.

Validate the feature using a few simple and complex prompts, and observe which LLM is selected for each response.

Prompt:

Please suggest 3 places to visit in NYC in the bullet point format. I just need the name of the places and no additional description.

Result:

Here are 3 places to visit in NYC:

- Central Park

- Metropolitan Museum of Art

- Brooklyn Bridge

Model Selected: Anthropic Haiku

Prompt:

Write a python function that takes a word and print it in reverse.

Result:

Here's a Python function that takes a word as input and prints it in reverse:

    def print_reverse(word):
        reversed_word = word[::-1]
        print(reversed_word)

Model Selected: Anthropic Sonnet 3.5

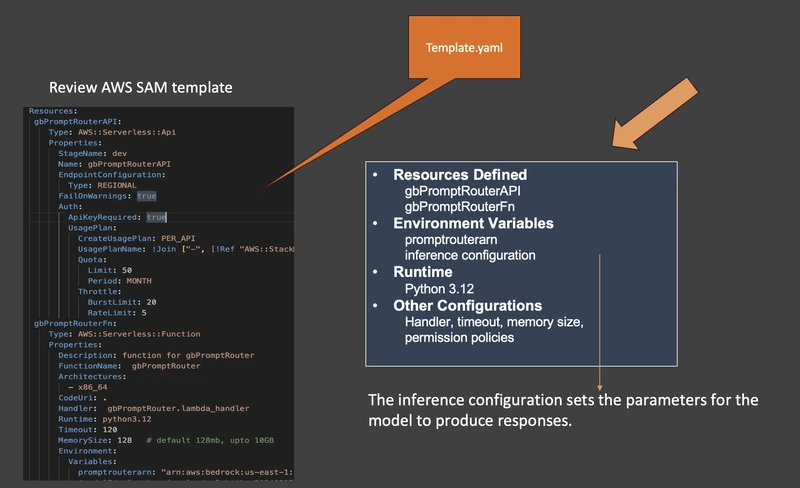

Review AWS SAM template

In this section, I will use AWS Lambda, API Gateway, and AWS SAM to demonstrate how this feature can be invoked from code, so that it can be integrated with a chatbot UI through a REST API.

The AWS Serverless Application Model (SAM) template defines the infrastructure required for our Intelligent Prompt Routing implementation.

Here’s what the SAM template for this solution looks like:

Review AWS Lambda function (Python/Boto3 Library)

The Lambda function is written in Python and uses the Boto3 library to interact with Amazon Bedrock and implement Intelligent Prompt Routing:

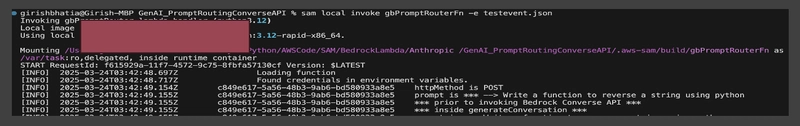
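For readers who cannot view the screenshot, here is a minimal sketch of how such a handler could be structured. It is not the exact code from this article. It assumes an API Gateway proxy integration, a JSON request body with a hypothetical `prompt` field, and a caller-supplied `ask` function that performs the Bedrock call and returns the answer text together with the invoked model ID.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical type: a function that sends a prompt to Bedrock and returns
# (answer_text, invoked_model_id).
AskFn = Callable[[str], Tuple[str, str]]


def _reply(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Build a response in the shape an API Gateway proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def make_handler(ask: AskFn) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Create a Lambda handler that forwards the request's prompt to `ask`."""

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        try:
            body = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return _reply(400, {"error": "Request body must be valid JSON"})
        # Assumption: the client sends {"prompt": "..."} in the body.
        prompt = body.get("prompt") if isinstance(body, dict) else None
        if not isinstance(prompt, str) or not prompt.strip():
            return _reply(400, {"error": "Field 'prompt' is required"})
        answer, model_id = ask(prompt)
        return _reply(200, {"response": answer, "model": model_id})

    return handler
```

Keeping the Bedrock call behind `ask` means the request parsing and response shaping can be exercised locally without AWS credentials. In the deployed function, `ask` would wrap the Boto3 `bedrock-runtime` call that uses the prompt router ARN.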

Build function locally using AWS SAM

The command to build the function locally is sam build.

Once the code is successfully built, it is ready to be invoked.

Invoke function locally using AWS SAM

The command to invoke the function is sam local invoke -e, followed by the path to a JSON event file.

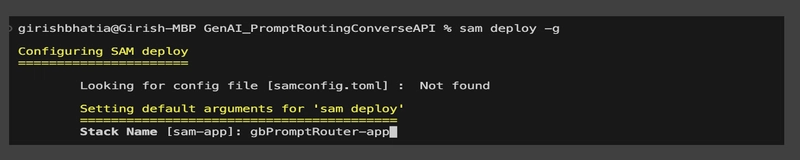
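The event file passed with `-e` is plain JSON. As a rough sketch, the snippet below generates one that mimics an API Gateway proxy request. The `prompt` field name and the file name in the usage note are assumptions for illustration; use whatever shape your own handler expects.

```python
import json
from pathlib import Path
from typing import Any, Dict


def build_event(prompt: str) -> Dict[str, Any]:
    """Build a minimal API Gateway proxy-style event carrying a prompt."""
    return {
        "httpMethod": "POST",
        "headers": {"Content-Type": "application/json"},
        # Proxy integrations deliver the request body as a JSON string.
        "body": json.dumps({"prompt": prompt}),
    }


def write_event(path: str, prompt: str) -> Path:
    """Write the event to `path` for use with `sam local invoke -e <path>`."""
    target = Path(path)
    target.write_text(json.dumps(build_event(prompt), indent=2), encoding="utf-8")
    return target
```

For example, `write_event("event.json", "Suggest 3 places to visit in NYC")` produces a file that can be passed as `sam local invoke -e event.json`.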

Deploy function using AWS SAM

Once you have validated the function locally, you can deploy it using the sam deploy command.

Validate the GenAI Model response using a prompt

I am using Postman to pass the prompt and review the responses.

I will pass different prompts to try multiple scenarios. A relatively simple prompt should invoke the Anthropic Claude Haiku model, while a complex prompt should be routed to the Anthropic Claude Sonnet model.

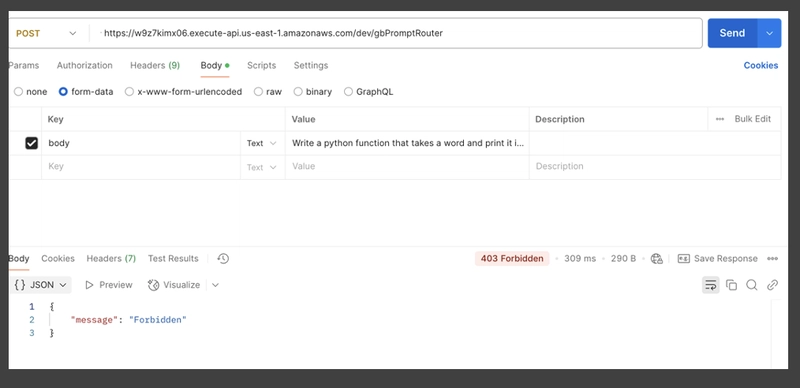

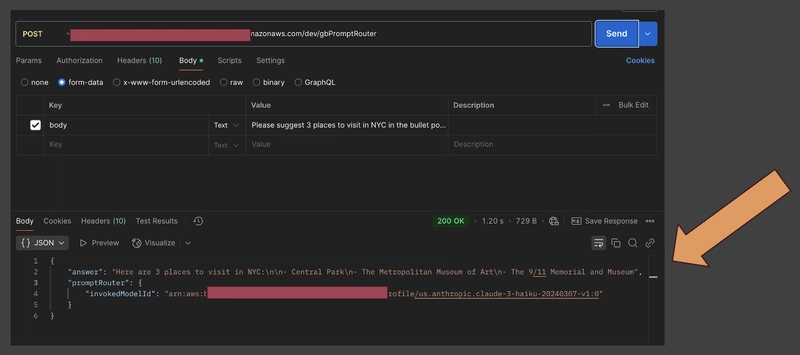

Prompt:

Please suggest 3 places to visit in NYC in the bullet point format. I just need the name of the places and no additional description.

Review the response

I have added an API key to secure the REST API. If I don't provide the API key, I get a 403 Forbidden response, as shown below:

Once I add the API key to the request header, I should get a response similar to the one below:

Please note that the Anthropic Haiku model was used to respond to this prompt.

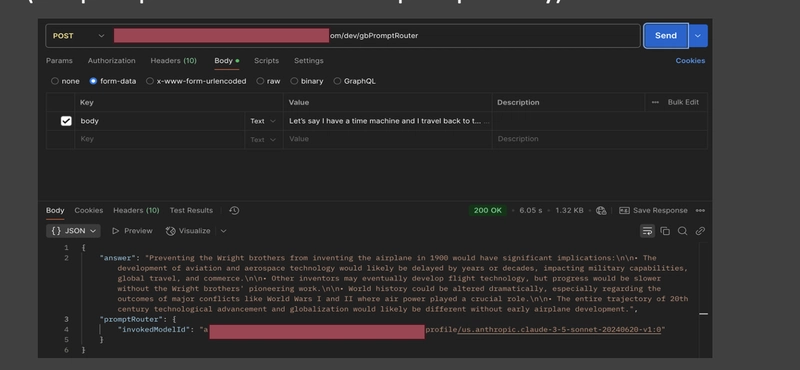
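The same request can be made from Python instead of Postman. This is a sketch using only the standard library; the endpoint URL and the `prompt` body field are placeholders, and it assumes the API key is sent in the `x-api-key` header, which is what API Gateway expects for API-key-protected methods.

```python
import json
import urllib.request


def build_prompt_request(api_url: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the prompt and API key."""
    payload = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        api_url,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # API Gateway reads the key from this header; without it the
            # secured endpoint answers 403 Forbidden.
            "x-api-key": api_key,
        },
    )
```

Sending the request with `urllib.request.urlopen(request)` returns the response on success. A missing or invalid key surfaces as `urllib.error.HTTPError` with status code 403.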

Prompt:

Let’s say I have a time machine and I travel back to the year 1900. While there, I accidentally prevent the invention of the airplane by the Wright brothers. What would be the potential implications of this action?

(This prompt is taken from the Claude prompt library.)

Review the response

Please note that we have a 200 OK response, indicating that the API call was successful and a response has been provided.

Please note that the Anthropic Sonnet model was used to respond to this prompt.

Review CloudWatch logs

The Lambda function is integrated with CloudWatch, and a log group is created for it.

Logs can be viewed by navigating to the function's CloudWatch log group.

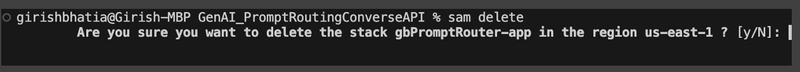
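To make routing decisions easy to find in those logs, the function can emit one structured line per request. The sketch below is one possible approach, not the logging used in this article; the logger name and field names are hypothetical. It relies on the fact that output written through Python's `logging` module in Lambda ends up in the function's CloudWatch log group.

```python
import json
import logging

logger = logging.getLogger("prompt_router")
logger.setLevel(logging.INFO)


def routing_log_line(prompt: str, model_id: str) -> str:
    """Format one routing decision as a JSON string."""
    return json.dumps({
        "event": "prompt_routed",
        # Log the prompt size rather than its text to keep logs small.
        "prompt_chars": len(prompt),
        "invoked_model": model_id,
    })


def log_routing(prompt: str, model_id: str) -> None:
    """Emit the routing decision; in Lambda this ends up in CloudWatch Logs."""
    logger.info(routing_log_line(prompt, model_id))
```

Because each line is JSON, the entries can be filtered by the `invoked_model` field to compare how often simple and complex prompts reach each model.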

Cleanup - Delete resources

Once you are done with this exercise, make sure to delete all the resources you created so that they do not incur further charges.

Since these resources were built and deployed using AWS SAM, they can be deleted using an AWS SAM command.

Command: sam delete

You can also delete the resources via the AWS Management Console.

Conclusion

In this article, I demonstrated Amazon Bedrock’s Intelligent Prompt Routing using the Anthropic model family. Instead of specifying a fixed model, I leveraged Bedrock’s intelligence to dynamically select the best model based on the prompt’s complexity.

For this solution, I used Python/Boto3 to create an API integrated with Bedrock via a Lambda function.

I hope you found this article both helpful and informative!

Thank you for reading!

Watch the video here:

https://www.youtube.com/watch?v=fN_c2S1TIqs

𝒢𝒾𝓇𝒾𝓈𝒽 ℬ𝒽𝒶𝓉𝒾𝒶

𝘈𝘞𝘚 𝘊𝘦𝘳𝘵𝘪𝘧𝘪𝘦𝘥 𝘚𝘰𝘭𝘶𝘵𝘪𝘰𝘯 𝘈𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵 & 𝘋𝘦𝘷𝘦𝘭𝘰𝘱𝘦𝘳 𝘈𝘴𝘴𝘰𝘤𝘪𝘢𝘵𝘦

𝘊𝘭𝘰𝘶𝘥 𝘛𝘦𝘤𝘩𝘯𝘰𝘭𝘰𝘨𝘺 𝘌𝘯𝘵𝘩𝘶𝘴𝘪𝘢𝘴𝘵
