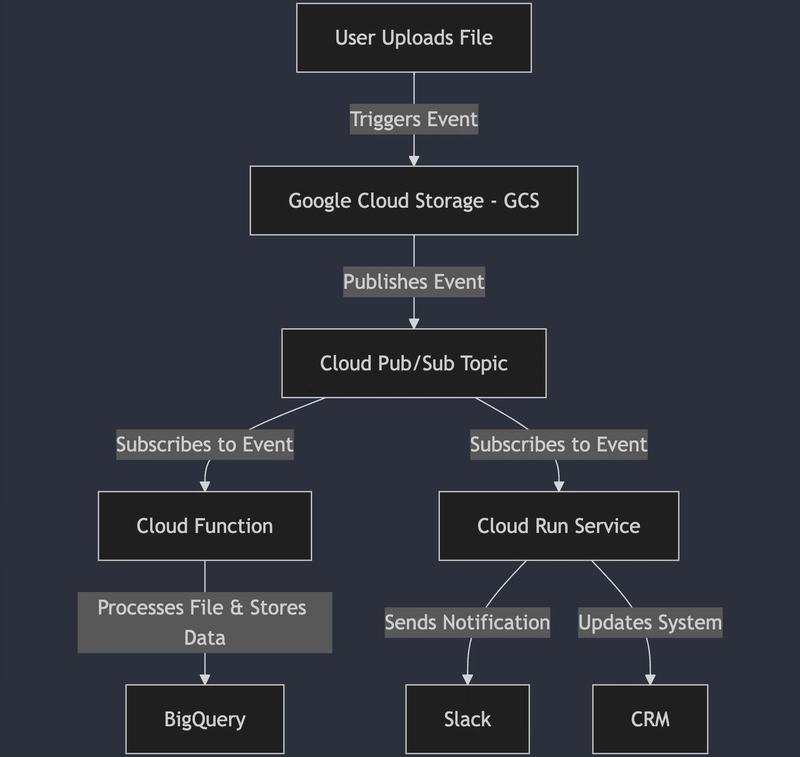

Recently, I was working on a project where the goal was to build a system that could react to events in real time. The scenario was simple yet powerful: an event would start with a file being uploaded to a Google Cloud Storage bucket, and from there, the system needed to process the file, trigger downstream workflows, and update other systems. This got me thinking about how to architect an event-driven system on Google Cloud that was scalable, reliable, and easy to maintain.

If you’ve ever wondered how to design an event-driven architecture (EDA) on Google Cloud, you’re in the right place. Let’s break it down step by step, using real-world examples and a human touch to make it relatable.

What is Event-Driven Architecture?

At its core, event-driven architecture is about designing systems that respond to events (changes in state or specific occurrences) such as a file upload, a database update, or a user action. Instead of polling for work on a schedule, the system reacts as soon as an event arrives, which avoids wasted polling cycles and lets each component scale with the volume of events it receives.

In my project, the event was a file upload to a Google Cloud Storage bucket. But how do we take that event and turn it into actionable workflows? Let’s dive in.

Step 1: The Trigger – Google Cloud Storage Event

The journey begins with the file upload. Google Cloud Storage (GCS) is a fantastic service for storing files, but it also has a neat feature: it can emit events whenever something happens, like a new file being uploaded.

Here’s how it worked in my project:

- A user uploads a file to a specific GCS bucket.

- GCS emits an event, saying, “Hey, a new file has arrived!”

- This event is sent to Cloud Pub/Sub, Google’s messaging service, through a Pub/Sub notification configured on the bucket. Pub/Sub acts as the central nervous system of our event-driven architecture.
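To make the shape of that event concrete, here is a minimal sketch of reading a Cloud Storage notification once it arrives as a Pub/Sub message. It assumes the notification uses the `JSON_API_V1` payload format, where the message attributes carry `eventType`, `bucketId`, `objectId`, and `objectGeneration`, and the message body is the object's metadata as JSON. The `UploadedObject` class, the function name, and the sample bucket and file names are hypothetical and only there for illustration.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UploadedObject:
    """Hypothetical container for the fields this pipeline cares about."""
    bucket: str
    name: str
    generation: str
    size_bytes: int


def parse_gcs_notification(attributes: dict, data: bytes) -> Optional[UploadedObject]:
    """Return the uploaded object, or None for events that are not new uploads."""
    # OBJECT_FINALIZE is the event type for a newly created (or overwritten)
    # object; deletes and metadata updates are ignored in this sketch.
    if attributes.get("eventType") != "OBJECT_FINALIZE":
        return None
    # The body is the object's metadata as JSON; numeric fields such as
    # "size" arrive as strings, so convert explicitly.
    metadata = json.loads(data.decode("utf-8"))
    return UploadedObject(
        bucket=attributes["bucketId"],
        name=attributes["objectId"],
        generation=attributes.get("objectGeneration", ""),
        size_bytes=int(metadata.get("size", 0)),
    )


# Sample message with made-up values, shaped like a GCS notification.
sample_attributes = {
    "eventType": "OBJECT_FINALIZE",
    "bucketId": "example-uploads",
    "objectId": "reports/2024-01.csv",
    "objectGeneration": "1700000000000000",
    "payloadFormat": "JSON_API_V1",
}
sample_data = json.dumps(
    {"bucket": "example-uploads", "name": "reports/2024-01.csv", "size": "2048"}
).encode("utf-8")

uploaded = parse_gcs_notification(sample_attributes, sample_data)
print(uploaded)
```

Filtering on `eventType` early keeps the rest of the pipeline from reacting to deletes or metadata changes on the same bucket.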

Step 2: The Nervous System – Cloud Pub/Sub

Cloud Pub/Sub is the backbone of our event-driven system. It’s a messaging service that decouples services producing events from those processing them. In our case:

- The GCS bucket publishes an event to a Pub/Sub topic.

- Any service interested in this event can subscribe to the topic and react accordingly.

For example, in my project, we had two subscribers:

- A Cloud Function to process the file and extract data.

- A Cloud Run service to trigger a downstream workflow, like updating a database or sending notifications.

This decoupling is what makes event-driven architecture so powerful. You can add new subscribers without disrupting existing ones, making the system highly extensible.
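The fan-out behaviour is easier to see in a toy model. The sketch below is not the Pub/Sub client library; `InMemoryTopic` and the subscriber names are hypothetical stand-ins that only mimic one property of a topic: every subscription receives its own copy of each published message, and the publisher does not know who is listening.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class InMemoryTopic:
    """Toy stand-in for a Pub/Sub topic, for illustration only."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, Handler] = {}

    def subscribe(self, name: str, handler: Handler) -> None:
        self._subscribers[name] = handler

    def publish(self, message: dict) -> int:
        # Each subscription gets its own copy, so one subscriber cannot
        # affect what another one sees.
        for handler in self._subscribers.values():
            handler(dict(message))
        return len(self._subscribers)


file_processor_inbox: List[dict] = []
workflow_inbox: List[dict] = []
audit_inbox: List[dict] = []

topic = InMemoryTopic()
topic.subscribe("file-processor", file_processor_inbox.append)
topic.subscribe("workflow-trigger", workflow_inbox.append)

event = {"bucket": "example-uploads", "name": "reports/2024-01.csv"}
first_delivery_count = topic.publish(event)

# Adding a third subscriber later requires no change to the publisher
# or to the two existing subscribers.
topic.subscribe("audit-log", audit_inbox.append)
second_delivery_count = topic.publish(event)
```

In the real service the same idea applies at the subscription level: each subscription attached to the topic tracks its own deliveries and acknowledgements.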

Step 3: The Muscle – Cloud Functions and Cloud Run

Now that the event is in Pub/Sub, it’s time to act on it.

Cloud Functions are serverless functions that run in response to events. In my project, we used a Cloud Function to:

- Read the uploaded file from GCS.

- Parse its contents (e.g., a CSV file).

- Store the parsed data in BigQuery for analytics.
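The parsing step in the middle can be sketched with the standard library alone. This is an illustration under assumptions, not the code from the project: it assumes the CSV has a header row and UTF-8 encoding, and the column names in the sample are made up. In a deployed function, the bytes would typically come from the `google-cloud-storage` client (for example `blob.download_as_bytes()`) and the resulting rows could be streamed to BigQuery with `Client.insert_rows_json(table, rows)`; both calls are left out here so the sketch runs without cloud credentials.

```python
import csv
import io
from typing import Dict, List


def csv_to_rows(raw: bytes) -> List[Dict[str, str]]:
    """Parse CSV bytes (header row required) into one dict per data row."""
    # utf-8-sig strips a byte-order mark if a spreadsheet export added one.
    text = raw.decode("utf-8-sig")
    reader = csv.DictReader(io.StringIO(text))
    rows = []
    for row in reader:
        # Trim whitespace around headers and values. Missing values become
        # empty strings; extra unnamed columns (key None) are dropped.
        cleaned = {k.strip(): (v or "").strip() for k, v in row.items() if k}
        # Skip rows where every field is empty, e.g. a stray ",," line.
        if any(cleaned.values()):
            rows.append(cleaned)
    return rows


# Made-up file contents standing in for the uploaded object.
sample_file = b"order_id,amount\n1001, 19.99\n,\n1002,5.00\n"
parsed_rows = csv_to_rows(sample_file)
print(parsed_rows)
```

Every value stays a string here; converting types (and rejecting rows that fail conversion) is usually worth doing before loading into a typed BigQuery table.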

But wait, there’s more! We also used Cloud Run for more complex processing. Cloud Run is a managed platform for running containerized applications. It’s perfect for tasks that require more compute power or longer execution times than Cloud Functions.

For example, after the Cloud Function processed the file, it published another event to Pub/Sub, which triggered a Cloud Run service to:

- Send a notification to a Slack channel.

- Update a CRM system via an API call.
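As a rough sketch of what the Cloud Run side has to do, assuming the service is wired to a Pub/Sub push subscription: Pub/Sub sends an HTTP POST whose JSON body wraps the message, with the message data base64-encoded. The code below decodes that envelope and builds the JSON body for a Slack incoming webhook (a simple `{"text": ...}` object). The event fields (`bucket`, `name`, `rows`), the subscription path, and the function names are hypothetical; the HTTP server and the outgoing webhook call are omitted so the example stays self-contained.

```python
import base64
import json


def decode_push_envelope(envelope: dict) -> dict:
    """Extract the JSON payload from a Pub/Sub push request body."""
    message = envelope["message"]
    # Push delivery base64-encodes the message data.
    raw = base64.b64decode(message["data"])
    return json.loads(raw)


def build_slack_payload(event: dict) -> dict:
    """Build the JSON body for a Slack incoming webhook."""
    text = (
        f"Processed {event['rows']} rows from "
        f"gs://{event['bucket']}/{event['name']}"
    )
    return {"text": text}


# A made-up push request body, shaped like what Pub/Sub would POST.
processed_event = {"bucket": "example-uploads", "name": "reports/2024-01.csv", "rows": 2}
sample_envelope = {
    "message": {
        "data": base64.b64encode(json.dumps(processed_event).encode("utf-8")).decode("ascii"),
        "attributes": {},
        "messageId": "1234567890",
    },
    "subscription": "projects/example-project/subscriptions/notify-slack",
}

slack_payload = build_slack_payload(decode_push_envelope(sample_envelope))
print(json.dumps(slack_payload))
```

With push delivery, the service acknowledges a message by returning a success status such as 204; any error status tells Pub/Sub to deliver the message again later.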

Step 4: The Brain – Monitoring and Error Handling

No system is complete without monitoring and error handling. In an event-driven architecture, things can go wrong—like a malformed file or a failed API call.

To handle this, we used Cloud Logging and Cloud Monitoring to track events, errors, and performance metrics. We also attached dead-letter topics to our Pub/Sub subscriptions: if a message still couldn’t be processed after the configured maximum number of delivery attempts, Pub/Sub forwarded it to the dead-letter topic for later investigation instead of retrying it indefinitely.
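The dead-letter behaviour can be simulated locally to see how it separates transient failures from messages that will never succeed. This is a simplified model, not the Pub/Sub API: the function, handlers, and in-memory `dead_letter_queue` list are hypothetical, and real Pub/Sub waits between redeliveries rather than retrying in a tight loop. The default of 5 attempts mirrors the lowest value Pub/Sub accepts for a subscription's maximum delivery attempts.

```python
from typing import Callable, List


def deliver_with_dead_letter(
    message: dict,
    handler: Callable[[dict], None],
    dead_letter: List[dict],
    max_delivery_attempts: int = 5,
) -> str:
    """Simulate redelivery: retry until success or the attempt limit is hit."""
    last_error = ""
    for attempt in range(1, max_delivery_attempts + 1):
        try:
            handler(message)
            return f"acked on attempt {attempt}"
        except Exception as exc:  # a real handler would also log this error
            last_error = repr(exc)
    # Attempts exhausted: park the message with context for investigation.
    dead_letter.append(
        {"message": message, "attempts": max_delivery_attempts, "last_error": last_error}
    )
    return "dead-lettered"


def reject_malformed(message: dict) -> None:
    # A permanent failure: retrying a malformed message never helps.
    if "name" not in message:
        raise ValueError("missing object name")


call_counter = {"count": 0}


def flaky_crm_update(message: dict) -> None:
    # A transient failure: the first two calls fail, the third succeeds.
    call_counter["count"] += 1
    if call_counter["count"] < 3:
        raise ConnectionError("CRM API unavailable")


dead_letter_queue: List[dict] = []
transient_result = deliver_with_dead_letter({"name": "a.csv"}, flaky_crm_update, dead_letter_queue)
permanent_result = deliver_with_dead_letter({"size": "10"}, reject_malformed, dead_letter_queue)
print(transient_result, permanent_result, len(dead_letter_queue))
```

The transient failure recovers through retries alone, while the malformed message ends up in the dead-letter list, where it can be inspected without blocking the rest of the stream.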

Step 5: The Big Picture – Bringing It All Together

Let’s recap the flow:

- A file is uploaded to GCS.

- GCS emits an event to Pub/Sub.

- A Cloud Function processes the file and stores data in BigQuery.

- Another Pub/Sub event triggers a Cloud Run service to update external systems.

- Monitoring tools keep an eye on everything, ensuring reliability.

This architecture scales with event volume, keeps costs tied to actual usage, and stays easy to maintain because each piece does one job. Plus, every component is serverless, so you don’t have to worry about managing infrastructure.

Lessons Learned

While designing this system, I learned a few valuable lessons:

- Start small: Begin with a single event and a simple workflow, then expand as needed.

- Embrace decoupling: Use Pub/Sub to keep services independent and scalable.

- Monitor everything: In an event-driven system, visibility is key to debugging and optimization.

- Plan for failure: Always have a strategy for handling errors and retries.
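One practical consequence of planning for retries: Pub/Sub delivers messages at least once, so a handler can see the same event twice. A common way to cope is to make processing idempotent. The sketch below is an assumption-laden illustration rather than anything from the project: `IdempotentProcessor` is a hypothetical helper, it keys each event on bucket, object name, and generation, and it remembers keys in an in-memory set, whereas a real deployment would need a durable store shared across instances.

```python
from typing import Callable, List, Set, Tuple


class IdempotentProcessor:
    """Skip events that were already processed successfully."""

    def __init__(self, process: Callable[[dict], None]) -> None:
        self._process = process
        self._seen: Set[Tuple[str, str, str]] = set()

    def handle(self, event: dict) -> bool:
        """Return True if the event was processed, False if it was a duplicate."""
        key = (event["bucket"], event["name"], event["generation"])
        if key in self._seen:
            return False
        self._process(event)
        # Record the key only after success, so a failed attempt is retried.
        self._seen.add(key)
        return True


processed_names: List[str] = []
processor = IdempotentProcessor(lambda e: processed_names.append(e["name"]))

upload = {"bucket": "example-uploads", "name": "reports/2024-01.csv", "generation": "1"}
first_result = processor.handle(upload)
duplicate_result = processor.handle(dict(upload))  # redelivery of the same event
overwrite_result = processor.handle({**upload, "generation": "2"})  # file re-uploaded
```

Including the generation in the key means a redelivered message is ignored, while a genuine re-upload of the same file name is still processed.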

Why Event-Driven Architecture on Google Cloud?

Google Cloud provides a robust set of tools for building event-driven systems. From Cloud Storage and Pub/Sub to Cloud Functions and Cloud Run, everything integrates seamlessly. Plus, the serverless nature of these services means you can focus on writing code rather than managing servers.

Whether you’re building a file processing pipeline, a real-time analytics system, or a notification service, event-driven architecture on Google Cloud is a powerful approach.

Final Thoughts

Architecting an event-driven system can feel overwhelming at first, but breaking it down into smaller steps makes it manageable. In my project, starting with a simple file upload event led to a scalable, reliable system that could handle complex workflows with ease.

If you’re considering event-driven architecture for your next project, I encourage you to give Google Cloud a try. The tools are there, the documentation is excellent, and the possibilities are endless.

Have you built an event-driven system on Google Cloud? I’d love to hear about your experiences and challenges in the comments below!

Happy architecting! 🚀
