What was the Web 3.0 supposed to mean again?

The Semantic Web

The definition of "Web 3.0" has assumed different incarnations over the past few years, depending on whom you asked and when. We initially thought it would be some kind of semantic web, with shared ontologies and taxonomies that described all the domains relevant to the published content. The Internet, according to this vision, would be a collective semantic engine driven by a shared language to describe entities and the way they connect and interact with one another. It would be easy for a machine to extract the "meaning" and the main entities of another page, so the Internet would be a network of connected concepts rather than a network of connected pages.

Looking at things in 2022, we can arguably say that this vision hasn't (yet?) been realized. Although it has been envisioned for at least the past 20 years, today only approximately 1-2% of Internet domains contain or support the semantic markup extensions proposed by RDF / OWL. I still believe that there's plenty of untapped potential in that area but, since there hasn't been a compelling commercial use-case to invest tons of resources in building a shared Internet of meaning instead of an Internet of links, the discussion has gotten stranded between academia and W3C standards (see also the curse of protocols and shared standards).

The Web-of-Things

More recently, we envisioned the Web 3.0 as the Internet of Things. According to this vision, IPv6 would take over within a few years, every device would be directly connected to the Internet with no need for workarounds such as NAT (the 128-bit IPv6 address space provides about 3.4 × 10^38 addresses, far more than the number of devices we could ever plug in), and all the devices would ideally talk directly to one another over some extension of HTTP.

Looking at things in 2022, we can say that this vision hasn't (yet) become a reality either. Many years down the line, only one third of the traffic on the Internet is IPv6. In countries like Italy and Spain, the adoption rate is less than 4-5%. So NAT-ting is still a thing, with the difference that it has now been largely delegated to a few centralized networks (mainly Google, Apple and Amazon and their ecosystems): so much for the dream of a decentralized Internet of things. And the situation on the protocols side isn't that rosy either. The problem of fragmentation in the IoT ecosystem is well documented. The main actors in this space have built their own ecosystems, walled gardens and protocols. The Matter standard should solve some of these problems, but after a lot of hype there isn't much usable code on it yet, nor usable specifications (2022 should be the year of Matter though, that's what everybody says). Regardless of how successful the Matter project will be in solving the fragmentation that prevents the Internet of things from being an actual thing, it's sobering to look back at how far we've drifted from the original vision. We initially envisioned a decentralized web of devices, each with its own address, all talking the same protocols, all adhering to the same standards. We have instead ended up with an IoT run over a few very centralized platforms, with limited capabilities when it comes to talking to one another, and the most likely solution to this problem will be a new standard which hasn't been established by academic experts, ISO or IEEE, but by the same large companies that run the current oligopoly. Again, so much for the dream of a decentralized Internet of things.

Crypto to the rescue?

Coming to the definition of Web 3.0 most popular in the last couple of years, we've got the Internet-over-Blockchain, a.k.a. crypto-web, a.k.a. dApps. The idea is pretty straightforward: if Blockchain can store financial transactions in a decentralized way, and it can also run some code that transparently manipulates its data in the form of smart contracts, then we've got the foundations to build both data and code in a decentralized way.

To understand how we've got here, let's take a step back and let's ask ourselves a simple question.

What problems is the Web 3.0 actually trying to solve?

What is wrong with the Web 2.0 that actually makes us want to move towards a Web 3.0, with all the costs involved in changing the way we organize software, networks, companies and whole economies around them?

Even if they envision different futures, the Semantic Web, the Web-of-Things and the Crypto-Web have several elements in common. And the common trait to all those elements can be summarized by a single word: decentralization.

So why is the Web 3.0, in all of its incarnations, so much into decentralization?

An obvious part of the answer is, of course, that the Web 2.0 has become too much about centralization (of data, code, services, and infrastructure) in the hands of a few corporations.

The other part of the answer to the question is the fact that software engineering, in all of its forms, is a science that studies a pendulum that swings back and forth between centralization and decentralization (the most cynical would argue that this is the reason why information engineers will never be jobless). This common metaphor, however, provides only a low-resolution picture of reality. I believe that information technology has a strong center of mass that pulls towards centralization. When the system has enough energy (for various reasons that we'll investigate shortly) it flips towards decentralization, just to flip back to a centralized approach when the cost of running a system with an increasingly high number of moving parts becomes too high. At that point, most people are happy to step back and accept somebody providing them with infrastructure/platform/software-as-a-service®, so they can just focus on whatever their core business is. The costs and risks of running the technology themselves are too high compared to simply using it as a service provided by someone who has invested enough resources in commoditising that technology by leveraging an economy of scale.

The few companies that happen to be in that sweet spot in that precise moment during the evolution of the industry (an economist would call it the moment where the center of mass between demand and supply naturally shifts) are blessed with the privilege of monopoly or oligopoly in the industry for at least the next decade to come - which, when it comes to the IT industry, is the equivalent of a geologic eon. How long it will take until the next brief "decentralized revolution" (whose only possible outcome is the rising of a new centralized ruling oligarchy) depends on how much energy the system accumulates during their reign.

There is a simple reason why technology inertially moves towards centralization unless a sufficient external force is applied, and I think that this reason often goes under-mentioned:

Most of the folks don't want to run and maintain their own servers.

As an engineer and a hobbyist maker that has been running his own servers for almost two decades (starting with a repurposed Pentium computer in my cabinet a long time ago), as well as a long-time advocate of decentralized solutions, this is a truth that hurts. But the earlier we accept this truth, the better it is for our sanity - and the sanity of the entire industry.

The average Joe doesn't want to learn how to install, run and maintain a web server and a database to manage their home automation, their communication with family and friends or their news feed unless they really have to.

Just like most of the folks out there don't want to run and maintain their own power generators, their own water supply and sewage systems, their own telephone cables or their own gasoline refineries. When a product or a technology becomes so pervasive that most of the people either want it or expect it, then they'll expect somebody else to provide it to them as a service.

However, people may still run their own decentralized solutions if it's required. Usually this happens when at least one of the following conditions applies:

- A shortage occurs: think of an authoritarian government cutting its population out of the most popular platforms for messaging or social network, prompting people to flock to smaller, often decentralized or federated, solutions.

- The technology for a viable centralized solution isn't out there yet: think of the boom of self-administered forums and online communities that preceded the advent of social networks and the Web 2.0.

- Some new technology provides many more benefits compared to those provided by the centralized solution: think of the advantage of using a personal computer compared to using an ad-hoc, time-slotted connection to a mainframe.

- People don't trust the centralized solutions out there: this has definitely been the case after the Cambridge Analytica scandal brought into the spotlight the fact that free online platforms aren't really free, and that giving lots of your data to a small set of companies can have huge unintended consequences.

When one of these conditions applies, the industry behaves like a physical system that has collected enough energy, and it briefly jumps to its next excited state. Enough momentum may build up to lead to the next brief decentralized revolution, which may or may not topple the current ruling class. However, once the initial momentum settles, new players and standards emerge from the initial excitement, and most people are just happy to use the new solutions as on-demand services.

Unlike an electron temporarily excited by a photon, however, the system rarely goes back to its exact previous state. The new technologies are usually there to stay, but they are either swallowed by new large centralized entities, packaged as commodified services, or they are applied in much more limited scopes than those initially envisioned.

How is the Web 3.0 going to achieve decentralization?

The different incarnations of the Web 3.0 propose different solutions to implement the vision of a more decentralized Web.

The Semantic Web proposes decentralization through presentation protocols. If we have defined a shared ontology that describes what an apple is, what it looks, smells and tastes like, how it can be described in words, what its attributes are, and we have also agreed on a universal identifier for the concept of "apple", then we can build a network of content where we may tag this concept as an ID in a shared ontology in our web pages (that may reference it as an image, as a word, as a description in the form of text or audio), and any piece of code out there should be able to understand that some parts of that web page talk about apples. In this ideal world the role of a centralized search engine becomes more marginal: the network itself is designed to be a network of servers that can understand content and context, and they can render both requests and responses from/to human/machine-friendly formats with no middlemen required. Web crawling is not only tolerated and advised: it's the way the Web itself is built. Machines can talk to one another and exchange actual content and context, instead of HTML pages that are only rendered for human eyes.
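To make the idea a bit more concrete, here is a minimal sketch in plain Python, with no RDF library involved. The concept identifier, the page URLs and the predicate names are all made up for the example: the only point is that, once pages are tagged with a shared identifier, any piece of code can find the content about a concept without going through a search engine.

```python
# Hypothetical shared identifier for the concept "apple" (not a real ontology IRI).
APPLE = "https://ontology.example/concepts/apple"

# Each statement is a (subject, predicate, object) triple, in the spirit of RDF.
# Pages and predicates are invented for illustration.
statements = [
    ("https://blog.example/apple-pie", "mentions", APPLE),
    ("https://shop.example/fruit/42", "depicts", APPLE),
    ("https://news.example/weather", "mentions", "https://ontology.example/concepts/rain"),
]


def pages_about(concept, triples):
    """Return the pages that reference a concept, whatever the predicate."""
    return sorted({subject for subject, _predicate, obj in triples if obj == concept})


print(pages_about(APPLE, statements))
```

A real deployment would rely on published vocabularies rather than ad-hoc strings, but the lookup logic would stay this simple.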

The Web-of-Things proposes decentralization through network protocols, as well as scaling up the number of devices. If instead of having a few big computers that do a lot of things we break them up into smaller devices with lower power requirements, each doing a specific task, all part of the same shared global network, all with their own unique address, all communicating with one another through shared protocols, then the issues of fragmentation and lock-in effects of the economies of platforms can be solved by the natural benefits of the economies of scale. We wouldn't even need a traditional client/server paradigm in such a solution: each device can be both client and server depending on the context.

The Crypto-Web proposes decentralization through distributed data and code storage. If Blockchains can store something as sensitive as an individual's financial transactions on a publicly accessible decentralized network, and in a way that is secure, transparent and anonymous at the same time, and they can even run smart contracts to manipulate these transactions, then they are surely the tool we need to build a decentralized Web.

Why decentralization hasn't happened before

We have briefly touched in the previous paragraphs on why decentralization usually doesn't work in the long run. Let's formalize those reasons.

People don't like to run and maintain their own servers

Let's repeat this concept, even if it hurts many of us. Decentralization often involves having many people running their own services, and people usually don't like to run and maintain their own services for too long, unless they really have to. Especially if there are centralized solutions out there that can easily take off that burden.

Decentralized protocols move much slower than centralized platforms

Decentralization involves coming up with shared protocols that everybody agrees on. This is a slow process that usually requires reaching consensus between competing businesses, academia, standards organizations, domain experts and other relevant stakeholders.

Once those protocols are set in stone, it's very hard to change them or extend them, because a lot of hardware and software has been built on those standards. You can come up with extensions, plugins and patch versions, or introduce a breaking major version at some point, but all these solutions are usually painful and bring fragmentation issues. The greater the success of your distributed protocol, the harder it is to change, because many nodes will be required to keep up with the changes.

The HTTP 1.1 protocol (a.k.a. RFC 2068 standard) that still largely runs the Web today was released in 1997, long before many of today's TikTok celebrities were even born. On the other hand, Google developed and released gRPC within a couple of years. The IMAP and SMTP protocols that have been delivering emails for decades will probably never manage to implement official support for end-to-end encryption, while WhatsApp managed to roll out support for end-to-end encryption to all of its users with a single app update. IRC is unlikely to ever find a standard way to support video chat, and its logic for sending files still relies on non-standard extensions built over DCC/XDCC, while a platform like Zoom managed to go from zero to becoming the new de facto standard for video chat within a couple of years.

The same bitter truth applies to the protocols proposed both by the semantic web and the IoT web. The push towards IPv6 has moved with the pace of a snail over the past 25 years, both for political reasons (the push, at least in the past, often came from the developing world, eager to get more IP addresses for their new networks, while the US and Europe, which hoarded most of the IPv4 subnets, often preferred to kick the can down the road), but, most importantly, for practical reasons. Millions of lines of code have been written over decades assuming that an IP address has a certain format, and that it can be operated by a certain set of functions. All that code out there needs to be updated and tested, and all the network devices that run that code, from your humble smoke sensor all the way up to the BGP routers that run the backbone of the Internet, need to be updated. Even though most of the modern operating systems have been supporting IPv6 for a long time by now, there is a very long tail of user-space software that needs to be updated.
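As a small illustration of that long tail, here is a sketch of the kind of assumption that is buried in a lot of old code. The `is_ipv4_naive` helper is a hypothetical example of a legacy check, not taken from any specific project, while `ipaddress` is the module from the Python standard library that handles both address families.

```python
import ipaddress


def is_ipv4_naive(text):
    """Legacy-style check that assumes four dot-separated decimal octets."""
    parts = text.split(".")
    return len(parts) == 4 and all(p.isdecimal() and int(p) <= 255 for p in parts)


def ip_version(text):
    """Version-agnostic parsing through the standard library."""
    return ipaddress.ip_address(text).version


# 192.0.2.10 and 2001:db8::1 are addresses reserved for documentation.
for addr in ("192.0.2.10", "2001:db8::1"):
    print(addr, "naive check:", is_ipv4_naive(addr), "version:", ip_version(addr))
```

The naive check silently rejects a perfectly valid IPv6 address. Multiply that by every validation routine, database column and log parser that makes the same assumption, and the slow pace of the migration becomes easier to understand.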

While the Internet slowly embraces a new version that allows it to allocate more addresses, companies like Google and Amazon have come in to fill that gap. We still need many devices to be able to transfer messages, but instead of communicating directly to one another, they now communicate with Google's or Amazon's clouds, which basically manage them remotely, and allow communication between two different devices only if both of them support their protocols. So yes, an Internet-of-Things revolution is probably happening already, but it's unlikely to be decentralized, and it smells more like some cloud-based form of NAT.

A shared standard may also be on the way (after many years) in the form of Matter, but it's a different standard than the open standards we are used to. It's a standard agreed mainly by the largest platforms on the market, with little to no involvement from the other traditional actors (academia, standards organizations, other developers).

Money runs the world, not protocols and technologies

Things at these scales often move only when loads of money and human resources are poured into a field. No matter how good the new way of doing things is, or how bad the current way of doing things is. The semantic web and its open protocols haven't really taken off because they were pursued mostly by academia, standards organizations and Tim Berners-Lee. It didn't really pick up momentum in the rest of the industry because at some point solutions like Google came up. Why would you radically change the way you build, present and distribute your web pages, when Google already provides some black magic to infer content and context and search for anything you want on the Web? When Google already fills that gap somehow, it doesn't matter anymore whether the solution is centralized or not: most folks only care that it works well enough for their daily use. Moreover, the vision of a semantic web where content is exposed in a form that is both human-readable and machine-readable, where web crawling becomes a structural part of the new experience, would jeopardise the very reason why the world needs services like the Google search engine. The idea of a semantic web, at least as it was proposed by the W3C, is amazing on paper, but it's hard to monetize. Why would I make my website easier for another machine to scrape and extract content from? We can arguably say that the Web has gone in the opposite direction in the meantime. Many sites have paywalls to protect their precious content from unpaid access. Many have built barriers against robots and scrapers. Many even sue companies like Google for depriving them (through Google News) of the precious profits that come from people dropping their eyeballs on the ads running on their websites.
Why would a for-profit website want to invest time to rewrite its code in a way that makes its content easier to access, embed and reuse on other websites, when the whole Internet economy basically runs on ads that people can see only if they come to your website? No matter how good the technological alternatives are, they will never work if the current economic model rewards walled gardens or those that run ads that are seen by the highest number of people, especially if the system is already structurally biased towards centralization.

Will the crypto-web succeed where others have failed?

The crypto-web tries to solve some of the hyper-centralization issues of the Web 2.0 by leveraging the features of Blockchain. Here are some of the proposed solutions:

Monetization problem: the issue is addressed by simply making money a structural part of the architecture. Your digital wallet is also the holder of your identity. You can pay and get paid for content on the platform by using one of the virtual currencies it supports. The currency, the ledger of transactions and the platform are, basically, one single entity. So we have a digital currency at the core, and enough people who agree that it has some form of value, but very few ways to make use of that value - unless you live in El Salvador, you probably don't use Bitcoins to pay for your groceries. For that reason, most of the cryptocurrencies have been, over the past years, mainly instruments for investment and speculation, rather than actual ways to purchase goods and services. The new vision of the Web 3.0 tries to close this loop, by creating a whole IT ecosystem and a whole digital economy around the cryptocurrencies (the most cynical would argue that it's been designed so those who hoarded cryptocurrencies over the past years can have a way of cashing their assets, by artificially creating demand around a scarcely fungible asset). If cryptocurrencies were a solution looking for a problem, then we've now got a problem they can finally solve.

Identity: a traditional problem in any network of computers is how to authenticate remote users. The Web 2.0 showed the power of centralization when it comes to identity management: if a 3rd-party like Google, Facebook or Apple can guarantee (through whatever identification mechanism they have implemented) that somebody is really a certain person, then we don't have to reinvent the authentication/authorization wheel from scratch on every different system. We delegate these functionalities to a centralized 3rd-party that owns your digital identity through mechanisms like OAuth2. By now, we also all know the obvious downsides of this approach - loss of privacy, single-point of failure (if your Google/Facebook account gets blocked/deleted/compromised then you won't be able to access most of your online services), your digital identity is at the mercy of a commercial business that doesn't really care a lot about you, Big Button Brother effect (no matter what you visit on the Internet, you'll always have some Google/Facebook button/tracker/hidden pixel somewhere that sees what you're doing). The crypto-web proposes a solution that maintains the nice features of a federated authentication, while being as anonymous as you can get. Your digital wallet becomes a proxy to your identity. If you can prove that you are the owner of your wallet (usually by proving that you can sign data with its private key), then that counts as authentication. However, since your wallet is just a pair of keys registered on the Blockchain with no metadata linked to you as a physical person, this can be a way to authenticate you without identifying you as a physical person. An interesting solution is also provided for the problem of "what if a user loses their password/private key/the account gets compromised?" in the form of social recovery. In the world of Web 2.0, identity recovery isn't usually a big deal. 
Your digital identity is owned by a private company, which also happens to know your email address or phone number, or it also happens to run the OS on your phone, and they have other ways to reach out to you and confirm your identity. In the world of crypto, where an account is just a pair of cryptographic keys with no connection to a person, this gets tricky. However, you can select a small circle of family members or friends that have the power to confirm your identity and migrate your data to a new keypair.

Protocols and standards: they are replaced by the Blockchains. Blockchains are ledgers of transactions, and the largest ones (such as Bitcoin and Ethereum) are unlikely to be going anywhere because a lot of people have invested a lot of money into them. Since Blockchains can be used as a general-purpose storage for data and code, we've got the foundations for a new technology that doesn't require us to enforce new protocols that may or may not be adopted.

Scalability: decentralized systems are notoriously difficult to scale, but that's simpler for Blockchains. Everybody can run a node, so you just add more nodes to the network. If people don't want to run their nodes, then they can use a paid service (like Infura, Alchemy) to interact with the Blockchain.

So far so good, right? Well, let's explore why these promises may be based on a quite naive interpretation of how things work in reality.

Blockchains are scalability nightmares

Let's first address the elephant in the room. From an engineering perspective, a Blockchain is a scalability nightmare. And, if implemented in its most decentralized way (with blocks added by nodes through proof-of-work), it is an environmental and geopolitical disaster waiting to happen. This is because the whole assumption that anybody can run their own node on a large Blockchain is nowadays false.

Running a Blockchain is a dirty business

In the early days of the crypto-mania, anybody could indeed run a small cluster of spare Raspberry Pis, mine Bitcoins and make some money on the side. Nowadays, big mining companies have installed server farms in the places where energy is the cheapest, such as near Inner Mongolia's hydroelectric dams, near Xinjiang's coal mines or leveraging Kazakhstan's cheap natural gas.

The author of the post on Tom's Hardware mentions that in February 2021 he made $0.00015569 after running mining software on his Raspberry Pi 4 for 8 hours. His hash rate (the number of candidate hashes computed per unit of time) varied from 1.6 H/s to 33.3 H/s. For comparison, the average hash rate of the pool was 10.27 MH/s. That's 3-4 million times more than the average hash rate of a Raspberry Pi.

The examples I mentioned (Inner Mongolia, Xinjiang and Kazakhstan) are not accidental. At its peak (around February 2020) Chinese miners contributed more than 72% of the Bitcoin hashes. The share hovered between 55% and 67% until July 2021, when the government imposed a nationwide crackdown on private crypto-mining. The motivations, as usual, are complex, and they obviously include the government's efforts to create a digital yuan. However, the fact that private miners scoop up a lot of cheap energy before it can get downstream to the rest of the grid, especially during times of energy supply shortages and inflationary pushes on the economy, has surely played a major role in Beijing's decisions.

The fall of Beijing in the crypto market left a void that other players have rushed to fill in. The share of Bitcoin hashes contributed by nodes in Kazakhstan has gone from 1.42% in September 2019 up to 8.8% in June 2021, when China announced its crackdown on crypto. Since then, it climbed all the way up to 18% in August 2021 - and that's the latest available data point on the Cambridge Bitcoin Electricity Consumption Index. From more recent news, higher demand for Kazakh gas, mixed with the high inflation, pushed energy prices up, triggering mass protests and the resignation of the government.

The curse of the proof-of-work

Why do decentralized Blockchains consume so much energy that they can impact the energy demand and production of whole countries, as well as destabilise whole geopolitical landscapes?

The Bitcoin Blockchain processes less than 300 thousand transactions per day. For comparison, as of 2019 the credit card circuits processed about 108.6 million transactions per day in the US alone.

We therefore have a proposed new approach whose underlying infrastructure currently processes less than 0.28% of the transactions processed in the US alone. Interestingly, the volumes of transactions processed by Visa and Mastercard have never caused geopolitical instabilities nor drained the energy supply of whole countries. How come?

To answer this question, we have to understand how Blockchains (or, at least, the most popular decentralized flavour initially proposed by Satoshi Nakamoto's paper) work under the hood.

We have often heard that a Blockchain is secure by design, and it allows the distributed creation of a ledger of transactions among peers that don't necessarily trust one another. This is possible through what is commonly known as proof-of-work. Basically, any node can add new blocks to the distributed chain, provided that the operation satisfies two requirements:

1. The new blocks are cryptographically linked to one another and consistent with the hash of the latest block. A Blockchain is, as the name suggests, a chain of blocks. Each of these blocks contains one or more transactions (e.g. the user associated to the wallet ABC has paid 0.0001 Bitcoins to the user associated to the wallet XYZ at time t), and a pointer with the hash of the latest block in the chain at time t. Any newly added block must satisfy this constraint: basically, blocks are always added in consecutive order to the chain, they cannot be removed (that would require all the hashes of the following blocks to be updated), and this is usually a good way of ensuring temporal consistency of events on a distributed network.

2. In order to add new blocks, the miner is supposed to solve a numeric puzzle to prove that they have spent a certain amount of computational resources before requesting to add new blocks. This puzzle is defined in such a way that:

2.1. It's hard to find a solution to it (or, to be more precise, a solution can only be found through CPU-intensive brute force);

2.2. It's easy to verify if a solution is valid (it usually involves calculating a simple hash of a string through an algorithm like SHA-1).
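The first requirement, i.e. blocks linked through the hash of their predecessor, can be sketched in a few lines of Python. This is a toy model under my own assumptions (JSON-encoded blocks, SHA-256, made-up field names), not the format used by any real Blockchain, but it shows why changing an old block breaks every link that follows it.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 over a canonical JSON encoding of the block (an illustrative choice)."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def append_block(chain, payload):
    """Append a block that points at the hash of the current last block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev_hash": prev, "payload": payload})


def is_consistent(chain):
    """Check that every block still points at the hash of its predecessor."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain))
    )


chain = []
for payload in ("ABC pays XYZ 0.0001", "XYZ pays DEF 0.00005", "DEF pays ABC 0.00002"):
    append_block(chain, payload)

print(is_consistent(chain))               # the chain as it was built
chain[1]["payload"] = "ABC pays XYZ 100"  # tamper with a block in the middle
print(is_consistent(chain))               # the next block's pointer no longer matches
```

To hide the tampering, an attacker would have to recompute the pointer of every later block, which is exactly where the second requirement comes into play.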

The most popular approach (initially proposed in 1997 by Hashcash to prevent spam/denial-of-service, and later adopted by Bitcoin) uses partial hash inversions to verify that enough work has been done by a node before accepting new data from it. The network periodically calibrates the difficulty of the task by establishing the number of hash combinations in the cryptographic proofs provided by the miners (on Bitcoin, this usually means that the target hash should start with a certain number of zeros). This is tuned in such a way that the block time (i.e. the average time required to add a new block to the network) remains constant. If the only way to find a solution to the riddle is by brute-forcing all the possible combinations, then you can tune the time required to solve the riddle by changing the total number of combinations that a node should explore. If a lot more powerful mining machines are added to the pool, then it'll likely push down the block hashing time, and therefore at some point the network may increase the difficulty of the task by increasing the number of combinations a CPU is supposed to go through.
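A rough way to picture the tuning knob: each additional leading zero bit required in the hash halves the chance that a random attempt succeeds, so it doubles the average number of attempts. The sketch below uses a deliberately simplified retargeting rule of my own (it is not the exact algorithm of Bitcoin or of any other network), with a target of 600 seconds, which is roughly Bitcoin's block time.

```python
import math


def expected_attempts(zero_bits):
    """Average number of hashes tried before one has `zero_bits` leading zero bits."""
    return 2 ** zero_bits


def retarget(zero_bits, observed_block_time, target_block_time=600.0):
    """Simplified rule (an assumption, not any network's exact algorithm):
    shift the difficulty by log2 of how much faster or slower blocks arrived."""
    adjustment = math.log2(target_block_time / observed_block_time)
    return max(1, round(zero_bits + adjustment))


print(expected_attempts(20))   # 1048576
print(retarget(52, 300.0))     # blocks arrive twice as fast: 53 bits
print(retarget(52, 1200.0))    # blocks arrive twice as slow: 51 bits
```

Real networks adjust a numeric target with finer granularity than whole bits, but the feedback loop is the same: more hashing power in, higher difficulty out.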

For example, let's suppose that at some point the network requires the target hashes to start with 52 binary zeros (i.e. 13 hexadecimal zeros). On the original Hashcash network, an email could be sent on January 19, 2038 to calvin@comics.net if it had a header structured like this:

X-Hashcash: 1:52:380119:calvin@comics.net:::9B760005E92F0DAE

Where:

- X-Hashcash is the name of the header that carries the string used as a cryptographic challenge;

- 52 is the number of leading binary zeros that the target hash is supposed to have;

- 380119 represents the timestamp of the transaction;

- 9B760005E92F0DAE is the hexadecimal value found by the miner that, appended to the metadata of the block, satisfies the hashing requirements.

If we take the string above (except for the X-Hashcash: header title and any leading whitespace) and we calculate its SHA-1 hash we get:

0000000000000756af69e2ffbdb930261873cd71

This hash has 13 leading hexadecimal zeros (i.e. 52 binary zeroes), and it therefore satisfies the network's hashing requirements: the node is allowed to process the requested information.

Here you go: you have an algorithm that makes it computationally expensive to add new data (a miner has to iterate through zillions of combinations to find a string whose hash satisfies a certain condition), but computationally easy to verify that the hash of a block is correct (you just have to calculate a SHA hash and verify that it meets the condition).
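This asymmetry is easy to reproduce. Below is a minimal Hashcash-style sketch in Python: the stamp layout mimics the header shown earlier, but the function names are mine, the counter encoding is a simplification, and the difficulty is lowered to 12 bits so that the demo finishes in a blink instead of requiring 2^52 attempts on average.

```python
import hashlib
from itertools import count


def leading_zero_bits(digest):
    """Count the leading zero bits of a bytes digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def verify(stamp, bits):
    """Cheap check: a single SHA-1 computation."""
    return leading_zero_bits(hashlib.sha1(stamp.encode()).digest()) >= bits


def mint(resource, bits, date="380119"):
    """Expensive part: try counters until the stamp's hash has enough zero bits."""
    for counter in count():
        stamp = f"1:{bits}:{date}:{resource}:::{counter:X}"
        if verify(stamp, bits):
            return stamp, counter + 1


stamp, attempts = mint("calvin@comics.net", 12)  # 12 bits keeps the demo fast
print(stamp, "found after", attempts, "attempts")
print(verify(stamp, 12))
```

The same `verify` function can be pointed at the 52-bit stamp quoted above: checking it takes one hash, while finding it took the sender a brute-force search.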

Miners are usually rewarded for their work with newly minted coins and with a fee on the processed transactions. It's easy to see how early miners who invested the unused power of a laptop or a Raspberry Pi to mine Bitcoins managed to make quite some money on the side, while making a profit out of mining nowadays, given how high the bar has moved over the past years, is nearly impossible unless you invest in some specialised boards that suck up a lot of power.

The proof-of-work provides a secure mechanism to process information among untrusted parties. A malicious actor who wants to add invalid data to the chain (let's say, move one Bitcoin to their wallet from another wallet that hasn't really authorised the transfer) will first have to crack the private key of the other wallet in order to sign a valid transaction, then invest enough computational power to add the block - and this usually means being faster on average than all the nodes on the Blockchain, which already sets quite a high bar on large Blockchains. The new block needs to be accepted through shared consensus mechanisms by a qualified majority of the nodes on the network, and usually long chains are more likely to be accepted than short ones - meaning that you have to either control a large share of the nodes on the network (which is usually unlikely unless you have invested the whole GDP of a country in it), or build a very long chain, therefore spending more power on average than all the other nodes on the network, to increase the chances that your rogue block gets included. On top of that, the new rogue block needs to be cryptographically linked to all the previous and next transactions in the ledger. So the rogue actor will basically have to forever run a system that is faster than all the other nodes in order to "keep up" with the lie. This is, in practice, impossible. In some very rare cases, rogue blocks may eventually be added to the Blockchain. But, when that happens, the network can usually spot the inconsistency within a short time and fork the chain (i.e. cut a new chain that excludes the rogue block and any successive blocks).

This also means that rogue nodes are implicitly punished by the network itself. An actor who tries to insert invalid blocks will eventually be unable to keep up their lie, but they will have spent the energy required to calculate the hashes necessary to add those blocks. In other words, they'll have wasted a lot of energy without being rewarded in any way.

A problem that wasn't originally addressed by the Hashcash proposal (and wasn't addressed by earlier cryptocurrencies either) was that of double-spending. Bitcoin addresses it by basically implementing a P2P validation where a distributed set of nodes needs to reach consensus before a new block is added to the chain, and temporal consistency of transactions is enforced by design with a hash-linked chain of blocks.
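The way blocks are tied to one another can be sketched as follows. This is a toy model, not Bitcoin's actual block format: the field names and the use of JSON are assumptions made for illustration. Each block stores the hash of the block before it, so changing an old block breaks the link with every block that follows.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    """Hash a block through a deterministic serialization of its content."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def append_block(chain: list, transactions: list) -> None:
    """Add a block that references the hash of the current last block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"prev_hash": prev, "transactions": transactions})

def is_consistent(chain: list) -> bool:
    """Check that every block points to the hash of its predecessor."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )
```

An attacker who rewrites a transaction in an old block would have to recompute that block and all the later ones, which under proof-of-work means redoing all the associated hashing work.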

The proof-of-work is a proof-of-concept that was never supposed to scale

Now that we know how a Blockchain manages to store a distributed ledger of transactions among untrusted parties, it's easy to see why it's such a scalability nightmare. Basically, the whole system is designed around the idea that its energy consumption increases with the number of transactions processed by the network. What's more, since the whole algorithm for adding new blocks relies on a massively CPU-intensive brute-force logic to calculate a hash that starts with a certain number of leading zeros, the amount of CPU work (and, therefore, electricity usage) is basically the underlying currency of the network. If the average block hashing time decreases too much, then the likelihood of success increases for rogue actors who want to add invalid transactions and invest enough in hardware resources. We therefore need to keep the cost of mining high so that it's technically unfeasible for malicious actors to spoof transactions.

As engineers, we should be horrified by a technical solution whose energy requirements increase linearly (or on even steeper curves) with the amount of data it processes per unit of time. Managing the scale of a system so that the requirements to run it don't increase at the same pace as the volumes of data that it processes is a cornerstone of engineering, and Blockchains basically throw this principle out of the window while trying to convince us that their solution is better. Had the "Blockchain revolution" happened under other circumstances, we'd be quick to dismiss its proposals as hype in the best case, and an outright scam in the worst case. In an economy that has to increasingly deal with a limited supply of resources and increasingly tighter energy constraints, a solution that basically requires wasting CPU power just to add a new block (and, worse, a system where the amount of electric energy spent is the base currency used to reward nodes) is not only unscalable: it's immoral.

On top of that, the requirement to keep the block mining time constant means that, by design, the network can only handle a limited number of transactions. In other words, we are capping the throughput of the system by design in order to keep the system secure and consistent. I'm not sure how anybody with any foundation of logical reasoning can believe that such a system can scale to the point that it replaces the way we do things today.

Proof-of-stake to the rescue?

The scalability issues of the proof-of-work mechanism are well-known to anybody who has any level of technical clue. Those who deny it are simply lying.

In fact, Ethereum is supposed to migrate during 2022 away from proof-of-work and towards a proof-of-stake mechanism.

Under such a mechanism for distributed consensus, miners don't compete with one another to add new blocks, and they aren't rewarded on the basis of the electric energy consumed. Instead, they have a certain amount of tokens that represent votes that they can use to validate transactions added to the network. Under the newly proposed approach, for example, a node would have to deposit 32 ETH in order to become a validator on the network (invested stake). Whenever a node tries to add a new transaction to the network, a pool of validating nodes is chosen at random. The behaviour of a validator determines the value of its stake - in other words, rogue nodes would be punished by karma:

- Nodes are rewarded (their stake is increased) when they add/validate new valid transactions.

- Nodes can be punished (their stake is decreased), for example, when they are offline (as it is seen as a failure to validate blocks), or when they add/validate invalid transactions. Under the worst scenario (collusion), a node could lose its entire stake.
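The reward and punishment rules above can be modelled with a tiny bookkeeping class. This is only a sketch of the incentive logic: the class, the method names and all the amounts other than the 32 ETH deposit are hypothetical, and they are not taken from the Ethereum specification.

```python
from dataclasses import dataclass

# Amounts are expressed in thousandths of an ETH to avoid rounding issues.
INITIAL_STAKE = 32_000   # the 32 ETH deposit mentioned above
REWARD = 10              # hypothetical reward for a valid validation
OFFLINE_PENALTY = 5      # hypothetical penalty for a missed validation

@dataclass
class Validator:
    stake: int = INITIAL_STAKE

    def reward(self) -> None:
        """The validator added or validated a valid transaction."""
        self.stake += REWARD

    def penalise_offline(self) -> None:
        """The validator was offline and failed to validate a block."""
        self.stake = max(0, self.stake - OFFLINE_PENALTY)

    def slash(self, fraction: float = 1.0) -> None:
        """The validator signed invalid data: burn a fraction of the stake
        (the whole stake by default, as in the collusion scenario)."""
        self.stake -= int(self.stake * fraction)
```

The security argument relies on `slash` costing a rogue validator more than what they could gain by cheating.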

On paper, this approach should guarantee the same goal as proof-of-work (reaching a shared consensus among a distributed network of untrusted parties), without burning up the whole energy budget of the planet in the process. Why don't we just go all-in on the proof-of-stake then?

Well, one reason is that it's a system that is definitely less tested on wide networks compared to proof-of-work. PoW works because it leverages physical constraints (like the amount of consumed energy) to add new blocks. PoS removes those constraints and proposes instead a stake-based system that leverages some well-documented mechanisms of reward and punishment from game theory, but once the physical constraint is removed there are simply too many things to take into account, and different ways a malicious actor may try and trick the system.

A common problem of PoS systems is that of a 51% attack. An attacker who owns 50%+1 of the share of stakes of a currency can easily push all the transactions that they want - consensus through qualified majority is basically guaranteed. The risk for this kind of attack is indeed very low in the case of Ethereum - one Ethereum coin is expensive, buying such a large share of them is even more expensive, and anyone who invests such a large amount has no interest in fooling the Blockchain, causing a loss of trust and therefore eroding the value of their own shares. However, this risk is still very present in smaller Blockchains. PoS is a mechanism that probably only works in the case of large Blockchains where it's expensive to buy such a large share of tokens. On smaller Blockchains with a lower barrier to buy a majority stake it can still be a real issue.

There is another issue, commonly known as nothing-at-stake. This kind of issue can occur when a Blockchain forks - either because of malicious action or because two nodes actually propose two blocks at the exact same time, therefore making temporal causality tricky.

When this happens, it's in the interest of the miners to keep mining both the chains. Suppose that they keep mining only one chain, and the other one is eventually the one that picks up steam: the miner's revenue will sink down. In other words, mining all the forks of a chain maximises the revenue of a miner.

Suppose now that an attacker wants to attempt a double-spending attack. They may do so by attempting to create a fork in the Blockchain (for example, by submitting two blocks simultaneously from two nodes that they own) just before spending some coins. If the attacker only keeps mining their fork, while all the other miners act in their self-interest and keep mining both the forks, then the attacker's fork eventually becomes the longest chain, even if the attacker only had a small initial stake.

A simpler version of this attack is the one where a validator cashes out their whole stake but the network does not revoke their keys. In this case, they may still be able to sign whatever transactions that they wish, but the system may not be able to punish them - the node has nothing at stake, therefore it can't be punished by slashing their stake.

Ethereum proposes to mitigate these issues by linking the stake on the network to an actual financial deposit. If an attacker tries to trick the system, they may lose real money that they have invested.

There is, however, a problem with both the mitigation policies proposed by Ethereum. Their idea is basically to disincentivise malicious actors by increasing the initial stake required to become a validator. The idea is that, if the stake you lose is higher than the money you can make by tricking the system, you'll think twice before doing it. What this means is that, in order for the system to work, you need to increase the initial barriers as the value of your currency (and the number of transactions it processes) increases. So, if it works, it'll basically turn the Blockchain into a more centralized system where only a limited number of nodes can afford to pay the high cost required to validate new blocks (so much for the decentralization dream). As of now, the entry fee to become a validating node is already as high as 32 ETH - more than $100k. As the system needs to ensure that the stake a node loses for rogue behaviour is higher than the average gain they can make from tricking the system, this cost is only likely to go up, making the business of becoming a validating node on the Blockchain very expensive. If it doesn't work, if the value of the cryptocurrency plunges and the perceived cost of tricking the system gets lower, then there's nothing protecting the system from malicious actors.

In other words, proof-of-stake is a system that works well under some very well defined assumptions - and, when it works, it's probably doomed to swing towards a centralized oligarchy of people loaded with money anyways.

How about data storage scalability?

Another scalability pain point of Blockchains is on the data storage side. Remember that any mining/validating node on the network needs to be able to validate any transaction submitted to the network. In order to perform this task, they need a complete synchronized snapshot of the whole Blockchain, starting with its first block all the way up to the latest one.

If 300k blocks per day are submitted, and supposing that the average size of a block is 1 KB, this translates into a data storage requirement of about 100 GB per year just to store the ledger of transactions.
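The estimate above is simple arithmetic, spelled out here with the figures used in this article (300k blocks per day, 1 KB per block). The function name is made up for this example.

```python
BLOCKS_PER_DAY = 300_000
BLOCK_SIZE_BYTES = 1_000   # 1 KB, using decimal units
DAYS_PER_YEAR = 365

def yearly_storage_gb(blocks_per_day: int = BLOCKS_PER_DAY,
                      block_size_bytes: int = BLOCK_SIZE_BYTES) -> float:
    """Storage needed by a single node to keep one year of blocks, in GB."""
    return blocks_per_day * block_size_bytes * DAYS_PER_YEAR / 1e9

print(yearly_storage_gb())  # 109.5
```

Note that this is the cost per node: every full node on the network has to pay it, and it accumulates year after year.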

As an engineer, suppose that you have a technological system that requires thousands of nodes on the network to process about 0.2% of the volumes of transactions currently processed by Visa/Mastercard/Amex in the US alone. The number of nodes required on the network increases proportionally with the number of transactions that the network is able to process, because we want the process of adding new blocks to be artificially CPU-bound. Moreover, each of these nodes has to keep an up-to-date snapshot of the whole history of the transactions, and it requires about 100 GB for each node to store a year of transactions. Since every node has to keep an up-to-date snapshot of the whole ledger, you can't rely on popular scalability solutions such as data sharding or streaming pipelines. On top of that, adding a new block to this "decentralized filesystem" requires that you run some code that wastes CPU cycles in order to solve a stupid numeric puzzle with SHA hashes. How would you scale up such a system not only to manage the amount of financial transactions currently handled by the existing solutions, but also to run a whole web of decentralized apps on it? I think that most of the experienced engineers out there would feel either uncomfortable or powerless if faced with such a scalability challenge.

Castles built on sand

The Blockchain has promised us a system where any node could join the network, add and validate transactions (distributed promise) in a scalable and secure way, and that system could soon disrupt not only the way we manage financial transactions, but the way we run the entire Web. This whole promise was based around mechanisms to reach shared consensus on distributed networks.

It turns out that both those mechanisms (proof-of-work and proof-of-stake) aren't ready to fulfil those promises.

Proof-of-work is an environmental and scalability disaster by design, with capped throughput, ability to process only a tiny fraction of the transactions we process nowadays on traditional circuits, and the amount of energy wasted to add new blocks being the fundamental currency of the network.

Proof-of-stake is more promising, but it hasn't yet fully addressed its underlying security issues - it has proposed mitigation measures, not real structural solutions - and it's not been tested on large scales yet.

Moreover, both solutions are doomed, by design, to break their promises about decentralization.

As the example of Bitcoin clearly shows, when the amount of resources invested by miners increases (because the value of the currency itself increases, therefore mining blocks becomes more profitable), the entry barriers for mining also increase. Unless you have a dedicated server farm located in some of the places on the planet where energy is cheapest, you probably won't make much money out of it. So mining basically becomes a game played by a limited amount of players, with a long tail left to eat breadcrumbs.

When it comes to proof-of-stake, instead, the security of the network, as the number of nodes or the value of the currency increases, can only be guaranteed by increasing the initial deposit required by nodes who want to join as validators. As the margin of revenue increases, you can bet that more affluent players will join the club, eventually pushing up the initial stake and therefore increasing the entry barriers - it's basically a network where the actors with more power are the ones who can afford to pay more money. So much for the idea of a decentralized and more equitable network.

In other words, it can be proven that a Blockchain can't scale to the demands of throughput required by modern networks without either giving up on one of its promises (either of security or of true decentralization), or causing unacceptable side effects. So why are we even talking about it as if it actually was a serious option to consider?

We are not in the initial days of the technology either - some rough corners would be a bit more tolerable if that was the case. Nakamoto's paper was published more than 12 years ago - an eternity when it comes to technology. In the meantime, Bitcoin's unsustainability has been proved again and again, to the point where it currently takes the whole yearly energy output of a country like Argentina to process a volume of transactions equal to only 0.2% of the credit card transactions currently processed in the US alone. Its high energy cost has already had consequences on countries like China and Kazakhstan. 12 years down the line, we don't yet have a proof-of-work 2.0 that could address or at least mitigate its own unsustainability issues. And we have another proposed solution (proof-of-stake) that is either doomed to make Blockchains more centralized than the traditional circuits, or doomed to dilute its security constraints with "mitigation actions" for commonly known issues. Would we accept Visa or Mastercard building their own security around "structural assumptions" and "mitigation policies"? Would we feel confident about such an infrastructure being in charge of our money or personal data? Then, again, we should stop talking of Blockchains as if they were a serious solution.

The Web 3.0 is already much more centralized than we think

This is an obvious corollary of the conclusions we have reached in the previous paragraph.

In order to build any kind of web, you need to be able to build software on it. In the crypto world, software takes the shape of dApps (distributed apps).

dApps basically leverage one of the core features of Ethereum (smart contracts) to run software that can read or manipulate the Blockchain.

Some standard example use-cases for smart contracts are:

- You want to create a logic that moves 10% of a certain sum from your wallet to another wallet every month until the original sum is exhausted (payments in installments).

- You want to create a logic that moves a certain sum to another wallet if the value of an underlying asset (e.g. a stock, a bond or another financial instrument) goes below/above a certain value (a derivative).

The standard use-case for a smart contract, in other words, is to model a financial instrument that manipulates the ledger and moves money across wallets if/when a certain set of conditions is met.
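The first use-case (payments in installments) can be modelled in plain Python to show the kind of logic that a smart contract encodes. This is only a simulation under simplifying assumptions: real Ethereum contracts are usually written in languages like Solidity and run on the network's virtual machine, while the `InstallmentPlan` class and the dictionary used as a ledger below are hypothetical stand-ins.

```python
class InstallmentPlan:
    """Moves a fixed share of the original sum every month until it's exhausted."""

    def __init__(self, ledger: dict, payer: str, payee: str,
                 total: int, percent: int = 10):
        self.ledger = ledger          # wallet name -> balance (toy ledger)
        self.payer = payer
        self.payee = payee
        self.remaining = total
        self.installment = total * percent // 100

    def run_month(self) -> int:
        """Execute one monthly installment and return the amount moved."""
        amount = min(self.installment, self.remaining)
        # Nothing left to pay, or the payer can't cover the installment
        if amount <= 0 or self.ledger[self.payer] < amount:
            return 0
        self.ledger[self.payer] -= amount
        self.ledger[self.payee] += amount
        self.remaining -= amount
        return amount
```

With a total of 1000 and the default 10% share, ten calls to `run_month` move the whole sum, and any further call moves nothing.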

The whole idea behind the Web 3.0 is to leverage these technological instruments to build all kinds of software. In theory, this solution solves the problem of authentication by design (the identity of users is mapped to their digital wallets, which is something recognized by everyone on the Blockchain, yet they mask the user's real identity unless they want to reveal it), while providing a stable, secure and immutable storage by design under the form of a Blockchain. On top of that, financial transactions between users can happen on the fly, without having to manage complex integrations for payments, and without having to give up the control of the money flow to a handful of major players. This would ideally open a whole world of decentralized micro-payments that don't rely on intermediary banks with their fees.

On top of that, the Blockchain allows you to mint Non-Fungible Tokens (NFTs). Unlike other tokens, which are equally fungible (i.e. you can swap any token A for a token B, and everybody agrees that they have the same value, just like a $1 bill has the same value as another $1 bill), NFTs are minted only once, and they can be uniquely linked to a digital asset (such as a media file or game mod). A user who buys that token basically buys the underlying asset, and since the transaction can be easily verified by anyone on the Blockchain, everybody can agree that the user owns that asset. This would open a whole new set of revenue streams for artists and digital creators.

So far so good, right? Well, as you may have guessed, there are a few problems with this vision.

Real dApps come with real gatekeepers

We have extensively analysed why Blockchains are no longer that small niche world where everyone can run their server on a Raspberry Pi and seriously be a part of a distributed network. The entry barriers to run a node that is seriously able to push blocks to the network are very high - either because of high costs in terms of energy/hardware requirements (PoW), or high financial costs (PoS, realistic forecast).

So, if the cost of running a node on a Blockchain is high, then how do we interact with it in our software? How do we actually run these marvelous smart contracts? Well, by delegating access to the Blockchain to a few major players that provide API access to interact with it. Right now, the market is rather consolidating around Infura and Alchemy. Even when it comes to authentication-over-wallet, most of the distributed applications nowadays delegate the task to MetaMask, which already provides integrations with most of the popular wallets out there. MetaMask itself, under the hood, simply routes API calls to Infura.

But wait a minute - the whole premise of this crypto-web was that we wanted more decentralization. We were tired of delegating tasks such as user authentication, software execution and financial transactions to a handful of major Web 2.0 technological providers. So we decided to invest tons of resources and efforts to design a decentralized system with shared trust management. How come we have then decided to replace the Google/Facebook login button with a MetaMask login button, and running software on Google/Amazon/Microsoft's clouds with running it over the APIs provided by Infura/Alchemy?

If you have come so far in the article, you probably already know the answer to this question: it was inevitable. Technological systems already have a built-in bias towards centralization. If you model a system in such a way that its entry barriers also scale up with its size, then such a bias becomes an inevitability. The reasons, again, are a corollary of the previous paragraphs:

- On average, people are lazy. They don't want to run servers unless they really have to. So, if somebody provides them with easy access to some services, and the subscription/on-demand cost of these services is lower than the cost of running their own hardware and software, people will inevitably tend to favour that option. Especially when the system is designed in such a way that being an element of the distributed network requires you to run a small data center and add at least 100 GB of physical storage every year.

- On average, developers are lazy (corollary of the previous point). Sure, they may initially want to experiment with new technologies and frameworks. But once the cost to competitively run production-ready hardware and software increases because the complexity of the system itself increases (because there are many cryptocurrencies and types of wallets to support, or because there are many protocols and APIs to support, or because the cost of running a node or investing an initial stake is too high), then they may just opt for someone who offers themselves as an intermediary. If somebody can solve the problems of user authentication, data validation and data integration for us, then we're likely to use their services, APIs or SDKs, instead of reinventing the wheel.

Not only was this flavour of Web 3.0 doomed to swing back towards centralization, but its proposed approach is even much more centralized than today's Web.

Nowadays, nobody prevents you from buying your own domain, running your own server, installing Apache/nginx (or just using an off-the-shelf solution like WordPress), maybe an integration with PayPal/Stripe/Adyen, and having your own website ready to accept users and payments. You can even store your own database of users, so you don't need to rely on Google/Facebook login buttons and trackers. No fancy clouds involved, no centralization. Sure, the average Joe that isn't that fluent with HTML, JavaScript or DNS management may still prefer an off-the-shelf centralized solution, but nothing prevents anybody with the right tools and skills from running a website without relying on the Web 2.0 mafia.

In the case of the Web 3.0, instead, dApps can run their logic only if they run on a node that is part of the network. Running a node on the network while being profitable, as we have extensively explored, is practically an out-of-reach task even for the most experienced and affluent engineer. So basically, by design, you have no way of running software on the Web 3.0 other than delegating the task to a handful of gatekeepers. What's more, since these gatekeepers manage all the transactions between you and the Blockchain, you have no choice but to trust what they say - their API responses are basically the only source of truth. And you have no options to test your software locally either - either you run your own local Blockchain (which is highly impractical), or you run your software in their sandboxes. Imagine a world where all the interactions with any website out there require all the HTTP requests and responses to be proxied through Google's servers, and you have no options outside of Google's cloud even to run/test your software. Those who dream of the Web 3.0 as a place to escape the current technological oligopoly should probably ponder the fact that they are just shifting power to another oligopoly with even higher entry barriers and concentration of power.

Transaction fees are actually higher than most of the credit card circuits

How about micro-payments and escaping the jail of credit card circuits with their transaction fees?

Well, one should keep in mind that Blockchains are systems made of miners that consume energy in order to add transactions. In order to incentivise miners to run nodes on the Blockchain, we need to provide them with a reward. This reward takes the shape of a fee, which can be either fixed or proportional to either the transacted amount or the number of consecutive blocks that are mined.

Also, remember that the network has to ensure that it's always profitable enough for miners to run their nodes. If that's no longer the case, the Blockchain may die off - nobody likes to waste electric power with no prospect of making a profit. This means that the fees on a Blockchain are subject to the demand/supply mechanisms that rule any other market. A shortage of miners compared to the demand for transactions (either because mining a block isn't profitable when weighed against its cost, or because the network has to handle a spike in the number of transactions, or because a whole country decides to crack down on cryptocurrencies and take supply out of the pool) means that the average fee needs to increase as well - you need to pay miners better if you want to attract more of them. This cost is simply offloaded to the party that initiated the transaction.

Nowadays, the average transaction fee for Bitcoin is around $1.7 (remember, to process only a tiny fraction of the transactions processed by today's circuits). But this can vary widely depending on the supply of miners on the network. Shortage of miners equals higher fees: the few miners on the network will process the transactions that offer higher gains. In April 2021, for example, the average transaction fee to process Bitcoins spiked at nearly $63. How can you build any solution to handle micro-payments around a system whose transaction fees can go from a few cents to $60 depending on the supply of miners on the network? How is it even financially feasible to move $0.05 from my wallet to a band's digital wallet after streaming an mp3 on their website, if the fee to process such a transaction can go all the way from 20 to 1000 times the value of the transacted amount?
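To put the figures quoted above side by side, here is the ratio between the fee and a $0.05 micro-payment, computed for the average fee ($1.7) and for the April 2021 peak ($63). The names are made up for this example, and the numbers are the ones reported in this article.

```python
MICRO_PAYMENT_USD = 0.05   # the streaming payment used as an example above
AVERAGE_FEE_USD = 1.7      # average Bitcoin transaction fee quoted above
PEAK_FEE_USD = 63.0        # April 2021 peak quoted above

def fee_multiple(fee_usd: float, payment_usd: float = MICRO_PAYMENT_USD) -> float:
    """How many times larger the fee is than the payment itself."""
    return fee_usd / payment_usd

print(round(fee_multiple(AVERAGE_FEE_USD)))  # 34
print(round(fee_multiple(PEAK_FEE_USD)))     # 1260
```

Even with the average fee, moving five cents would cost dozens of times the amount being moved.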

Yes, micro-payments are a real technological problem that deserves to be solved. Yes, today's solutions to handle online payments aren't perfect. But Blockchains only seem to make the problem worse. What we may need is a shared protocol to handle payments - some parts of it have already been envisioned, like the HTTP 402 code, or the proposed W3C standard for handling payments. Yes, the Web 3.0 proposes similar solutions through shared protocols, but I'd rather extend what we already have (HTTP and HTML) instead of relying on something completely new, especially if that new solution comes with so many drawbacks and shortcomings.

Let's come to another promise that the Web 3.0 can't fulfil: data storage.

A Blockchain does not really store data

Anybody who tells you that your data will be safely stored on a Blockchain is a scammer.

A Blockchain was never supposed to store general-purpose data. Hell, with a throughput of 300k blocks per day, a cost per block that equals the daily energy demand of a small apartment, the requirement for all the nodes to hold an up-to-date snapshot of the whole database and no margins for data sharding, it'd be the worst and most unscalable data storage that I can think of. The only thing that could outcompete it would be storing data through butterflies.

Blockchains were designed to store financial transactions on a network of untrusted nodes. Such transactions are usually small blocks of text that say "wallet A moved X Bitcoins to wallet B at time T, and the latest transaction in the chain at time T was Z". Forget storing your GIFs on such an architecture, let alone your whole website or your self-made movie - it'd be like storing a Blu-ray disc on floppy disks. Remember that it takes the average node on the network about 100 GB of storage investment to store one year of transactions, given the current volumes of Bitcoin transactions and assuming an average block size of 1 KB. Storing all kinds of media data from every user on top of this would simply be unacceptable.

So, if the data isn't stored on the Blockchain directly (outside of a wallet's metadata and its transactions), where is it stored?

Well, once you scratch the crypto-facade, the dear ol' web appears. Take the example of an NFT. The minted token contains a metadata section that references a URL - the linked digital asset. By buying the token, in theory you buy the ownership of the underlying asset. And, since this transaction occurs on the Blockchain, everybody can confirm that you are the actual owner of the asset.

But wait - the digital asset is actually referenced by URL, it's not actually stored on the Blockchain. What this means, in reality, is that most of the NFTs currently on sale point to web servers that run an Apache instance and expose static files.

What happens if the owner of the domain stops paying for the domain name? Or for the web hosting? Or for the SSL certificate? What if the machine goes down? What if the web server is compromised and all the static assets are either scrambled by ransomware or replaced with shit emojis, or the file is simply removed from the server? If instead it's stored in a Dropbox/Google Drive folder, what happens if that account gets deleted/blocked? If the URL is publicly accessible, what prevents me from simply copying the file to my own web server, putting it on sale for $0.01, buying it and saying that I'm the actual owner? If the underlying physical file has the same content, and URLs are used as identifiers of digital assets, who is supposed to establish which URL is valid if two of them point to files with the same content?

Another guy had similar concerns recently, so he created a piece of digital art and he put it on sale via NFT.

Remember: an NFT simply points to a URL. A URL simply points to a web server. It's quite easy to embed some pieces of code into your images (for example, using GD in PHP) so that the image is rendered differently depending on the context of the HTTP request. For example, the underlying image would render in different ways on different NFT marketplaces, and, if visualized within the context of a wallet (i.e. a user that purchased the underlying asset), it would simply render a "shit" emoji. His point was quite simple: if an NFT simply points to a URL that points to a web server that any dude can run, what prevents all the digital assets purchased via NFTs from turning into a 404 error page (or "shit" emojis) at the moment of purchase or any later moment?

Again, the Web 3.0 tries to address a real problem here: how to compensate digital creators for their work, while cutting away all the middlemen that may eat large shares of the profits. I've been very sensitive to this topic myself for a long time. But, again, it proposes the wrong solution. Middlemen aren't really cut out (again, a Blockchain isn't really decentralized), fees aren't really slashed (again, it depends on the demand/supply mechanisms that regulate the mining industry), and having digital content bought for thousands of dollars living on some dude's Apache server or WordPress blog wasn't exactly the solution to the problem of "digital ownership" that I had in mind. I mean, come on, even a naive solution like referencing the SHA-1 hash of the underlying file would have been better than this grotesque sham.
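The naive hash-based alternative can be sketched as follows. This is an illustration of the idea, not a description of how any NFT standard or marketplace works: the `mint` and `matches_token` functions and the metadata layout are hypothetical. SHA-256 is used here instead of SHA-1, since SHA-1 is no longer considered collision-resistant.

```python
import hashlib

def fingerprint(content: bytes) -> str:
    """Identify a file by its content rather than by its location."""
    return hashlib.sha256(content).hexdigest()

def mint(url: str, content: bytes) -> dict:
    """Build hypothetical token metadata that pins the content of the asset."""
    return {"url": url, "content_hash": fingerprint(content)}

def matches_token(token: dict, served_content: bytes) -> bool:
    """Check that what the web server returns is what was originally sold."""
    return fingerprint(served_content) == token["content_hash"]
```

With the hash stored in the token, a server that swaps the image after the sale can at least be detected, because `matches_token` returns `False` for the new content. It wouldn't solve the availability problem, though: if the file disappears, the hash alone can't bring it back.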

Nobody can touch your digital wallets, transactions and assets - unless they can?

Another argument often proposed by the crypto-web enthusiasts is that, once something is on the Blockchain, it's forever. There's no censorship, no risk of later data manipulation, nobody can "cancel" you.

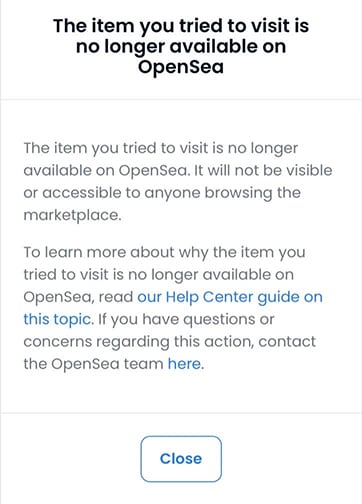

Remember the "shit emoji" dude from the previous paragraph? Well, his digital asset was later removed from OpenSea (one of the main NFT marketplaces). It is quite likely that somebody complained about the "polymorphic" nature of the image:

Not only that: it also disappeared from the wallets of those who had legitimately purchased it.

How is this possible? Well, it's quite easy to understand once we keep the previous observations in mind:

- An NFT is simply a token that uniquely points to a URL;

- Access to the Ethereum Blockchain is actually already quite centralized.

And, since wallets and transactions on the Blockchain are actually linked to users and accounts hosted on a normal website, nothing prevents the owner of the website from doing what the owner of any website can do - including blocking/banning users, modifying database records, and so on.

Not only that: if Blockchains are really so secure, how come we read so much about thefts of tokens or whole wallets? Well, while the underlying Blockchains are often quite secure (rogue nodes may occasionally still be able to push illegitimate transactions, but such events are very rare), remember that digital wallets are still associated with a user on a normal website who logs in like any other user would log into a normal website - with username and password. Remember that the strength of a chain always equals the strength of its weakest link. Having a standard web layer wrapped around a Blockchain doesn't really improve security.

Not only that: another promise of Blockchains is that of anonymity. You are identified on the Blockchain by your wallet, and your wallet is just a pair of randomly generated cryptographic keys. But if access to the Blockchain is centralized, then you'll probably end up managing multiple wallets with a small set of service providers. These providers can easily link multiple wallets that you manage on their website to your account, therefore enabling all kinds of user analytics already performed by today's platforms.

A Blockchain is a distributed ledger, but there's no point in investing the effort in building a distributed ledger if it's wrapped by a centralized layer that doesn't offer significant gains in terms of data control, security and anonymity.

Conclusions

The vision of a crypto-based Web 3.0 revolves around promises of greater decentralization, scalability, privacy, security, data control, transparency, and payments that cut out the middlemen. It turns out that, in its current state, it cannot deliver on any of these promises:

Decentralization: if the cost to join the network as a node is artificially high, either in terms of energy/hardware requirements (PoW) or initial financial investment (PoS), then we are in a situation where the entry barriers are higher, not lower, than running your own website on your own web server today.

Scalability: if the entry barriers are high in the first place, and they are designed to structurally increase as the network grows, then the network can hardly scale up. If we are talking about a system that consumes the whole energy demand of a country to handle 300k transactions per day, and where all the nodes are supposed to keep a synchronized copy of the whole dataset, then we are talking about a system that performs much worse, not better, than the systems that we currently have.

Security: security is only as strong as the weakest link in the chain. If you have an underlying data structure that is hard to tamper with, but access to that data structure is managed through a username/password login on a normal website or app, then you can't expect the overall security level to go up.

Privacy, transparency and data control: all the interactions with the major Blockchain-based web solutions are processed by a small number of players in the industry. Consolidation in this space is already strong. Privacy, transparency and data control are only as good as when you delegate complete control of your data to a third party on the Web. You are trusting third parties to authenticate you, to process your online payments or to keep track of the assets that you own. What they return in their API responses is also the ultimate source of truth. The Web 3.0 is basically attempting to shift power from one tech oligarchy to another - presumably composed of those who have invested a lot into cryptocurrencies and are just searching for problems that Blockchains can solve while making them rich.

Transaction fees: fees are actually linked by design to the demand/supply balance of mining resources. This makes them much more volatile than the fees you would pay for a normal credit card transaction. Not only that: they are also higher on average, since the cost of adding a new block to a distributed ledger through shared consensus is higher than the cost of handling the same transaction on a centralized ledger. This makes the solutions proposed by the Web 3.0 unsuitable for handling problems such as micro-payments or royalties.
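To put the figure of 300k transactions per day from the scalability point into perspective, a quick back-of-the-envelope conversion into transactions per second helps. The snippet below only does the unit conversion; `per_second` is a hypothetical helper name, and it says nothing about how the daily figure itself was measured.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def per_second(transactions_per_day: float) -> float:
    """Convert a daily transaction count into an average per-second rate."""
    return transactions_per_day / SECONDS_PER_DAY


# 300k transactions per day, as quoted in the scalability point above
print(f"{per_second(300_000):.2f} transactions per second")  # → 3.47 transactions per second
```

That is an average of fewer than four transactions per second for the whole network.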

I still believe that the Blockchain can be a promising technology, but, after this long hype phase, I believe that expectations about what it can actually deliver will be downsized. I don't believe that it can be used as a system for shared consensus in the first place. The original approach, the proof-of-work, is a scalability disaster by design - it was a good proof-of-concept that recycled an idea from some late-90s spam mitigation protocol, nothing more. The newly proposed approach, the proof-of-stake, is more promising, but it hasn't yet been tested enough at a large scale and it hasn't yet addressed some security and consistency issues. And, even if it's successful, it's probably doomed to converge towards centralization, since the initial stakes for being a part of the network are likely to increase either with the size of the network or with the value of the underlying cryptocurrency. In short, the Blockchain proposed itself as a solution to the problem of distributed consensus, but, 12 years down the line, it hasn't yet figured out how to achieve consensus in a way that is scalable, secure and distributed at the same time.

I still believe that Blockchains will have a place in cutting out the middlemen when it comes to B2B financial transactions, or creating financial instruments that currently require fat contracts signed by fat notaries with fat fees. There is quite an ironic twist here: Bitcoin was initially proposed in the aftermath of the 2008-2009 financial crisis. It was supposed to punish the financial system that had caused the crisis (and, in particular, the underlying level of unregulated financial speculation) by creating a new distributed financial system. Instead, smart contracts may end up powering a revolution in the very financial instruments that cryptocurrencies were supposed to destroy.

Blockchains may also revolutionise logistics: shipping an item from one side of the world to the other currently requires integrating data from many different sources, spanning different countries and subject to different regulations. If everybody agreed to write both their transactions and their business logic to the same distributed ledger, it would solve a lot of issues in a structural way.

But these use-cases probably depict very different scenarios from the ones originally envisioned. This will be a distributed ledger managed through some proof-of-stake-like mechanism between businesses or financial institutions that already have some ways of verifying each other's identities - once you relax the constraints of trust enforcement and decentralization, Blockchains can actually be interesting technological tools.

They might also be used to run whole national currencies. China is already working on it. This is another use-case that makes total sense: if you own a digital wallet directly with your own central bank, then the central bank has much more direct visibility and control over its monetary policies, and it doesn't have to rely on the middlemen (e.g. retail banks) to manage the money of citizens. However, this is, again, a far cry from the Blockchain's original idea. It was initially conceived as a system to let citizens take back control of their money. It may well end up cutting retail banks out, but only to further centralize control in the hands of the government or the central bank.

However, I also believe that the crypto-based Web 3.0 addresses real problems that are still searching for solutions. Centralization of identity management and data management, and in general an increasing consolidation of power in the hands of half a dozen companies, are still real problems. Affordable, easy-to-setup cross-border micro-payments are still looking for a solution. The problem of handling and enforcing ownership of digital assets, and rewarding digital creators for their work, is still looking for a solution. I just believe that the Blockchain is the wrong solution for these problems, because - as many have already said - it's a solution still looking for a problem to solve.

In order to address the issues that caused the consolidation of technological power that plagues the Web 2.0, we must first analyse what kind of phenomena lead to technological consolidation in the first place. Once we acknowledge that the level of consolidation is proportional to the entry barriers required to compete in the market, then we may want to design systems that lower those barriers, not systems that increase them.

I also believe that there is a lot of untapped potential in the previous incarnations of the Web 3.0 - both the semantic web and the web-of-things. Sure, they had to face a reality where it's easier to build a platform with your own set of rules rather than getting everybody to agree on the same shared set of protocols and standards, and with an industry that has a strong bias towards centralization and de-facto standards anyways. But at least they proposed incremental solutions built on top of existing standards that address real issues in building a distributed Web - data and protocols fragmentation in the first place.
