I was interested in trying out a hybrid Kubernetes setup and exploring some use cases.

This setup involves creating three control plane (master) nodes in the cloud (AWS) and booting Talos worker nodes on-premises, then connecting them to the master nodes in the cloud.

When it comes to on-premises worker nodes, you have the flexibility to choose any solution that fits your needs. Whether you're using a hypervisor or even local QEMU virtual machines, the choice is entirely up to you. In this guide, I will be using Proxmox because it makes things easier for me.

Repository: https://github.com/kubebn/aws-talos-terraform-hybrid

AWS master nodes provisioning

- Set up variables in vars/dev.tfvars

- Run Terraform

terraform apply -var-file=vars/dev.tfvars -auto-approve

Output:

local_file.kubeconfig: Creating...

local_file.kubeconfig: Creation complete after 0s [id=d3443f0dfed1dbbf0e71f99dfbf0684dc1ca8b95]

Apply complete! Resources: 25 added, 0 changed, 0 destroyed.

Outputs:

control_plane_private_ips = tolist([

"192.168.1.135",

"192.168.2.122",

"192.168.0.157",

])

control_plane_public_ips = tolist([

"18.184.164.166",

"3.122.238.249",

"3.77.57.73",

])

Install talosctl and kubectl:

curl -sL https://talos.dev/install | sh

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

Point kubectl and talosctl at the generated kubeconfig and talosconfig files. Terraform writes them to the folder where the terraform apply command was executed.

export TALOSCONFIG="${PWD}/talosconfig"

export KUBECONFIG=${PWD}/kubeconfig

After that, you will see that the master nodes are ready, Kubespan is up, and Cilium is fully installed:

kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

aws-controlplane-1 Ready control-plane 2m25s v1.32.3 192.168.1.135 18.184.164.166 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-2 Ready control-plane 2m36s v1.32.3 192.168.2.122 3.122.238.249 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-3 Ready control-plane 2m24s v1.32.3 192.168.0.157 3.77.57.73 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

kubectl get po -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-599jz 1/1 Running 0 80s

kube-system cilium-5j6wl 1/1 Running 0 80s

kube-system cilium-fkkwv 1/1 Running 0 80s

kube-system cilium-install-tkfrf 0/1 Completed 0 111s

kube-system cilium-operator-657bdd678b-lxblc 1/1 Running 0 80s

kube-system coredns-578d4f8ffc-5lqfm 1/1 Running 0 111s

kube-system coredns-578d4f8ffc-n4hwz 1/1 Running 0 111s

kube-system kube-apiserver-aws-controlplane-1 1/1 Running 0 79s

kube-system kube-apiserver-aws-controlplane-2 1/1 Running 0 107s

kube-system kube-apiserver-aws-controlplane-3 1/1 Running 0 80s

kube-system kube-controller-manager-aws-controlplane-1 1/1 Running 2 (2m11s ago) 79s

kube-system kube-controller-manager-aws-controlplane-2 1/1 Running 0 107s

kube-system kube-controller-manager-aws-controlplane-3 1/1 Running 0 80s

kube-system kube-scheduler-aws-controlplane-1 1/1 Running 2 (2m11s ago) 79s

kube-system kube-scheduler-aws-controlplane-2 1/1 Running 0 107s

kube-system kube-scheduler-aws-controlplane-3 1/1 Running 0 80s

kube-system talos-cloud-controller-manager-599fddb46d-9mmdk 1/1 Running 0 111s

Important Notes for Talos Machine Configuration on Master Nodes

We want to filter out the AWS private VPC from Kubespan, as on-premise workers won't be aware of it anyway.

kubespan:
  enabled: true
  filters:
    endpoints:
      - 0.0.0.0/0
      - '!${vpc_subnet}'
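To make the effect of this filter easier to see, here is a minimal shell sketch of include/exclude matching for IPv4 addresses. It is not Talos code: the function names are made up for illustration, and I am assuming the VPC subnet is 192.168.0.0/16 (the control plane private IPs above fall inside it) and that an address is kept when it matches at least one plain CIDR and no CIDR prefixed with "!".

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 lies inside CIDR $2 (for example 192.168.0.0/16).
in_cidr() {
  local ip_int net_int prefix mask
  ip_int=$(ip_to_int "$1")
  net_int=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  if [ "$prefix" -eq 0 ]; then
    mask=0
  else
    mask=$(( (4294967295 << (32 - $prefix)) & 4294967295 ))
  fi
  [ $(( $ip_int & $mask )) -eq $(( $net_int & $mask )) ]
}

# endpoint_allowed ADDRESS FILTER...
# Assumed semantics: keep the address if it matches at least one plain
# CIDR and no CIDR that starts with "!".
endpoint_allowed() {
  local addr included f
  addr=$1
  shift
  included=1
  for f in "$@"; do
    case $f in
      '!'*) if in_cidr "$addr" "${f#?}"; then return 1; fi ;;
      *)    if in_cidr "$addr" "$f"; then included=0; fi ;;
    esac
  done
  return "$included"
}

# Public IP of aws-controlplane-1: kept as a Kubespan endpoint.
endpoint_allowed 18.184.164.166 0.0.0.0/0 '!192.168.0.0/16' && echo "public: advertised"
# Private VPC IP of the same node: filtered out.
endpoint_allowed 192.168.1.135 0.0.0.0/0 '!192.168.0.0/16' || echo "private: filtered"
```

With these assumptions, only the public addresses are offered to peers, which is what the on-premises workers need.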

However, both kubelet and etcd are still configured to use the internal subnet.

kubelet:
  nodeIP:
    validSubnets:
      - ${vpc_subnet}
etcd:
  extraArgs:
    election-timeout: "5000"
    heartbeat-interval: "1000"
  advertisedSubnets:
    - ${vpc_subnet}

We have added kubelet extraArgs for server certificate rotation (rotate-server-certificates), and Talos CCM will handle approving the resulting certificate requests. You can find more details here.

kubelet:
  defaultRuntimeSeccompProfileEnabled: true
  registerWithFQDN: true
  extraArgs:
    cloud-provider: external
    rotate-server-certificates: true
...
externalCloudProvider:
  enabled: true
  manifests:
    - https://raw.githubusercontent.com/siderolabs/talos-cloud-controller-manager/main/docs/deploy/cloud-controller-manager.yml

DHCP/TFTP configuration in Proxmox

We have set up networking for virtual machines and LXC containers:

- LAN - 10.1.1.0/24

- Proxmox node - 10.1.1.1

- DHCP/TFTP LXC container - 10.1.1.2

Steps for the DHCP/TFTP container:

- Install curl and Docker

- Download vmlinuz and initramfs.xz from the Talos release repository

- Copy the matchbox contents into the LXC container

pct create 1000 local:vztmpl/ubuntu-24.10-standard_24.10-1_amd64.tar.zst \
  --hostname net-lxc \
  --net0 name=eth0,bridge=vmbr0,ip=10.1.1.2/24,gw=10.1.1.1 \
  --nameserver 1.1.1.1 \
  --features keyctl=1,nesting=1 \
  --storage local-lvm \
  --rootfs local:8 \
  --ssh-public-keys .ssh/id_rsa.pub \
  --unprivileged=true

pct start 1000

ssh root@10.1.1.2

apt update && apt install curl -y

curl -s https://get.docker.com | sudo bash

curl -L https://github.com/siderolabs/talos/releases/download/v1.9.5/initramfs-amd64.xz -o initramfs-amd64.xz

curl -L https://github.com/siderolabs/talos/releases/download/v1.9.5/vmlinuz-amd64 -o vmlinuz-amd64

Configure the DHCP range, gateway, and matchbox endpoint IP address in the matchbox/docker-compose.yaml file.

Start DHCP/TFTP server via docker compose:

docker compose up -d

---

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9f04e46194bf quay.io/poseidon/dnsmasq:v0.5.0-32-g4327d60-amd64 "/usr/sbin/dnsmasq -…" 2 hours ago Up 2 hours dnsmasq

5db45718aa0a root-matchbox "/matchbox -address=…" 2 hours ago Up 2 hours matchbox

Connecting on-premise workers

The Talos machine configuration for workers is generated by Terraform; look for worker.yaml. We are going to apply it to each worker in Proxmox.

Create VMs

for id in {1001..1003}; do

qm create $id --name vm$id --memory 12088 --cores 3 --net0 virtio,bridge=vmbr0 --ostype l26 --scsihw virtio-scsi-pci --sata0 lvm1:32 --cpu host && qm start $id

done

Scan for Talos API open ports and extract IP addresses

WORKER_IPS=$(nmap -Pn -n -p 50000 10.1.1.0/24 -vv | grep 'Discovered' | awk '{print $6}')

Apply configuration to each discovered IP

echo "$WORKER_IPS" | while read -r WORKER_IP; do

talosctl apply-config --insecure --nodes $WORKER_IP --file worker.yaml

done
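The grep above depends on the wording of nmap's verbose "Discovered" lines. As a sketch of an alternative, the helper below parses nmap's grepable (-oG) output instead. The name talos_api_hosts is made up for this example, and the two sample lines are illustrative, not captured from this network.

```shell
# Print the IP of every host whose Talos API port (50000/tcp) is open,
# reading nmap grepable (-oG) output on stdin.
talos_api_hosts() {
  awk '/^Host:/ && /50000\/open\/tcp/ { print $2 }'
}

# Demo on two sample lines in -oG format (no scan is run here).
printf 'Host: 10.1.1.24 ()\tPorts: 50000/open/tcp//unknown///\nHost: 10.1.1.1 ()\tPorts: 50000/closed/tcp//unknown///\n' |
  talos_api_hosts

# Against the real LAN, the call might look like this:
#   WORKER_IPS=$(nmap -Pn -n -p 50000 --open -oG - 10.1.1.0/24 | talos_api_hosts)
```

The result has one IP per line, so it can feed the same while read loop as before.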

Let’s take a look at the result

kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

aws-controlplane-1 Ready control-plane 2m25s v1.32.3 192.168.1.135 18.184.164.166 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-2 Ready control-plane 2m36s v1.32.3 192.168.2.122 3.122.238.249 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-3 Ready control-plane 2m24s v1.32.3 192.168.0.157 3.77.57.73 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-9mf-ujc Ready <none> 29s v1.32.3 10.1.1.24 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-lv8-bc7 Ready <none> 29s v1.32.3 10.1.1.10 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-ohr-1c3 Ready <none> 29s v1.32.3 10.1.1.23 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talosctl get kubespanpeerstatuses -n 192.168.1.135,192.168.2.122,192.168.0.157,10.1.1.24,10.1.1.19,10.1.1.12

NODE NAMESPACE TYPE ID VERSION LABEL ENDPOINT STATE RX TX

192.168.1.135 kubespan KubeSpanPeerStatus Hh2ldeBX7kuct6Bynehjdgo6xkcOlZ4yUWWQVBqHLWQ= 11 talos-fgw-qpv proxmo-publicip:53639 up 84312 69860

192.168.1.135 kubespan KubeSpanPeerStatus If8ZqCQ1jp0mFV8igLGpfXpYycQSVBTSlT88YUr7eEM= 11 talos-4re-kxo proxmo-publicip:53923 up 82160 99412

192.168.1.135 kubespan KubeSpanPeerStatus iKXIODHF3Tx2b4JsA433j8ey9+CWTKrx5To+4lsofTg= 11 talos-7ye-8j0 proxmo-publicip:51820 up 43608 58280

192.168.1.135 kubespan KubeSpanPeerStatus oxU0e9yTFvN+lCGcIO4s13erkWjtIKrVzb8dX+GYLxE= 26 aws-controlplane-3 3.77.57.73:51820 up 7484600 16097980

192.168.1.135 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 26 aws-controlplane-2 3.122.238.249:51820 up 6742536 15472144

192.168.2.122 kubespan KubeSpanPeerStatus Hh2ldeBX7kuct6Bynehjdgo6xkcOlZ4yUWWQVBqHLWQ= 11 talos-fgw-qpv proxmo-publicip:53639 up 178960 354776

192.168.2.122 kubespan KubeSpanPeerStatus If8ZqCQ1jp0mFV8igLGpfXpYycQSVBTSlT88YUr7eEM= 11 talos-4re-kxo proxmo-publicip:53923 up 232064 897928

192.168.2.122 kubespan KubeSpanPeerStatus iKXIODHF3Tx2b4JsA433j8ey9+CWTKrx5To+4lsofTg= 11 talos-7ye-8j0 proxmo-publicip:51820 up 92856 254520

192.168.2.122 kubespan KubeSpanPeerStatus oxU0e9yTFvN+lCGcIO4s13erkWjtIKrVzb8dX+GYLxE= 23 aws-controlplane-3 3.77.57.73:51820 up 1557896 1954328

192.168.2.122 kubespan KubeSpanPeerStatus uzi7NCL64o+ILeyqa6/Pq0UWdcVyfjWulZQB+a2Av30= 27 aws-controlplane-1 18.184.164.166:51820 up 15506236 6773440

192.168.0.157 kubespan KubeSpanPeerStatus Hh2ldeBX7kuct6Bynehjdgo6xkcOlZ4yUWWQVBqHLWQ= 11 talos-fgw-qpv proxmo-publicip:53639 up 261464 916524

192.168.0.157 kubespan KubeSpanPeerStatus If8ZqCQ1jp0mFV8igLGpfXpYycQSVBTSlT88YUr7eEM= 11 talos-4re-kxo proxmo-publicip:53923 up 141868 277180

192.168.0.157 kubespan KubeSpanPeerStatus iKXIODHF3Tx2b4JsA433j8ey9+CWTKrx5To+4lsofTg= 11 talos-7ye-8j0 proxmo-publicip:51820 up 172996 830504

192.168.0.157 kubespan KubeSpanPeerStatus uzi7NCL64o+ILeyqa6/Pq0UWdcVyfjWulZQB+a2Av30= 25 aws-controlplane-1 18.184.164.166:51820 up 16126096 7507456

192.168.0.157 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 24 aws-controlplane-2 3.122.238.249:51820 up 1954180 1557896

10.1.1.24 kubespan KubeSpanPeerStatus Hh2ldeBX7kuct6Bynehjdgo6xkcOlZ4yUWWQVBqHLWQ= 10 talos-fgw-qpv 10.1.1.12:51820 up 12204 11960

10.1.1.24 kubespan KubeSpanPeerStatus iKXIODHF3Tx2b4JsA433j8ey9+CWTKrx5To+4lsofTg= 10 talos-7ye-8j0 10.1.1.19:51820 up 16316 15624

10.1.1.24 kubespan KubeSpanPeerStatus oxU0e9yTFvN+lCGcIO4s13erkWjtIKrVzb8dX+GYLxE= 10 aws-controlplane-3 3.77.57.73:51820 up 278988 143592

10.1.1.24 kubespan KubeSpanPeerStatus uzi7NCL64o+ILeyqa6/Pq0UWdcVyfjWulZQB+a2Av30= 10 aws-controlplane-1 18.184.164.166:51820 up 100932 84220

10.1.1.24 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 10 aws-controlplane-2 3.122.238.249:51820 up 897880 231472

10.1.1.19 kubespan KubeSpanPeerStatus Hh2ldeBX7kuct6Bynehjdgo6xkcOlZ4yUWWQVBqHLWQ= 10 talos-fgw-qpv 10.1.1.12:51820 up 12692 12732

10.1.1.19 kubespan KubeSpanPeerStatus If8ZqCQ1jp0mFV8igLGpfXpYycQSVBTSlT88YUr7eEM= 10 talos-4re-kxo 10.1.1.24:51820 up 15476 16316

10.1.1.19 kubespan KubeSpanPeerStatus oxU0e9yTFvN+lCGcIO4s13erkWjtIKrVzb8dX+GYLxE= 10 aws-controlplane-3 3.77.57.73:51820 up 832688 176132

10.1.1.19 kubespan KubeSpanPeerStatus uzi7NCL64o+ILeyqa6/Pq0UWdcVyfjWulZQB+a2Av30= 10 aws-controlplane-1 18.184.164.166:51820 up 59680 45224

10.1.1.19 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 10 aws-controlplane-2 3.122.238.249:51820 up 255472 94296

10.1.1.12 kubespan KubeSpanPeerStatus If8ZqCQ1jp0mFV8igLGpfXpYycQSVBTSlT88YUr7eEM= 10 talos-4re-kxo 10.1.1.24:51820 up 11812 12204

10.1.1.12 kubespan KubeSpanPeerStatus iKXIODHF3Tx2b4JsA433j8ey9+CWTKrx5To+4lsofTg= 10 talos-7ye-8j0 10.1.1.19:51820 up 12732 12840

10.1.1.12 kubespan KubeSpanPeerStatus oxU0e9yTFvN+lCGcIO4s13erkWjtIKrVzb8dX+GYLxE= 10 aws-controlplane-3 3.77.57.73:51820 up 920660 265640

10.1.1.12 kubespan KubeSpanPeerStatus uzi7NCL64o+ILeyqa6/Pq0UWdcVyfjWulZQB+a2Av30= 10 aws-controlplane-1 18.184.164.166:51820 up 67776 82092

10.1.1.12 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 10 aws-controlplane-2 3.122.238.249:51820 up 355552 180240
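With this many rows it is easy to miss a peer that is not up. Here is a small sketch that filters the table for such peers. The function name kubespan_all_up is made up, and it assumes the column layout shown above (STATE is the eighth column) and that the ENDPOINT column is never empty.

```shell
# Read `talosctl get kubespanpeerstatuses` output on stdin.
# Prints "NODE LABEL STATE" for every peer whose STATE is not "up"
# and returns non-zero if at least one such peer exists.
kubespan_all_up() {
  awk 'NR > 1 && NF && $8 != "up" { print $1, $6, $8; bad = 1 }
       END { exit bad + 0 }'
}

# Demo on a header plus one healthy row taken from the output above.
printf '%s\n' \
  'NODE NAMESPACE TYPE ID VERSION LABEL ENDPOINT STATE RX TX' \
  '10.1.1.12 kubespan KubeSpanPeerStatus vAHCI1pwTbaP/LHwO0MnCGELXsEstQahS0o9WkdVK0g= 10 aws-controlplane-2 3.122.238.249:51820 up 355552 180240' |
  kubespan_all_up && echo "all peers up"

# Against the cluster, the call might look like this:
#   talosctl get kubespanpeerstatuses -n <node-ips> | kubespan_all_up
```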

Let’s check if the Kubernetes networking is functioning correctly:

kubectl create ns network-test

kubectl label ns network-test pod-security.kubernetes.io/enforce=privileged

kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/refs/heads/main/examples/kubernetes/connectivity-check/connectivity-check.yaml -n network-test

kubectl get po -n network-test

NAME READY STATUS RESTARTS AGE

echo-a-54dcdd77c-6wjnw 1/1 Running 0 62s

echo-b-549fdb8f8c-j4sw7 1/1 Running 0 61s

echo-b-host-7cfdb688b7-ppz9f 1/1 Running 0 61s

host-to-b-multi-node-clusterip-c54bf67bf-hhm5h 1/1 Running 0 60s

host-to-b-multi-node-headless-55f66fc4c7-f8fc4 1/1 Running 0 60s

pod-to-a-5f56dc8c9b-kk6c2 1/1 Running 0 61s

pod-to-a-allowed-cnp-5dc859fd98-pvxzj 1/1 Running 0 61s

pod-to-a-denied-cnp-68976d7584-wm52m 1/1 Running 0 61s

pod-to-b-intra-node-nodeport-5884978697-c5rs2 1/1 Running 0 60s

pod-to-b-multi-node-clusterip-7d65578cf5-2jh97 1/1 Running 0 61s

pod-to-b-multi-node-headless-8557d86d6f-shvzx 1/1 Running 0 61s

pod-to-b-multi-node-nodeport-7847b5df8f-9kg89 1/1 Running 0 60s

pod-to-external-1111-797c647566-666l4 1/1 Running 0 61s

pod-to-external-fqdn-allow-google-cnp-5688c867dd-dkvpk 0/1 Running 0 61s # can be ignored

Kubernetes Network Benchmark

We will use k8s-bench-suite to benchmark networking performance between the nodes.

From the control plane to worker:

kubectl taint nodes aws-controlplane-1 node-role.kubernetes.io/control-plane:NoSchedule-

knb --verbose --client-node aws-controlplane-1 --server-node talos-4re-kxo

=========================================================

Benchmark Results

=========================================================

Name : knb-3420914

Date : 2025-03-18 04:14:12 UTC

Generator : knb

Version : 1.5.0

Server : talos-4re-kxo

Client : aws-controlplane-1

UDP Socket size : auto

=========================================================

Discovered CPU : Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz

Discovered Kernel : 6.12.18-talos

Discovered k8s version :

Discovered MTU : 1420

Idle :

bandwidth = 0 Mbit/s

client cpu = total 13.45% (user 3.81%, nice 0.00%, system 2.92%, iowait 0.53%, steal 6.19%)

server cpu = total 3.28% (user 1.75%, nice 0.00%, system 1.53%, iowait 0.00%, steal 0.00%)

client ram = 985 MB

server ram = 673 MB

Pod to pod :

TCP :

bandwidth = 887 Mbit/s

client cpu = total 75.29% (user 4.07%, nice 0.00%, system 57.20%, iowait 0.29%, steal 13.73%)

server cpu = total 36.16% (user 2.06%, nice 0.00%, system 34.10%, iowait 0.00%, steal 0.00%)

client ram = 995 MB

server ram = 697 MB

UDP :

bandwidth = 350 Mbit/s

client cpu = total 79.95% (user 6.68%, nice 0.00%, system 54.20%, iowait 0.22%, steal 18.85%)

server cpu = total 20.95% (user 2.61%, nice 0.00%, system 18.34%, iowait 0.00%, steal 0.00%)

client ram = 1000 MB

server ram = 653 MB

Pod to Service :

TCP :

bandwidth = 1063 Mbit/s

client cpu = total 80.58% (user 3.89%, nice 0.00%, system 68.36%, iowait 0.05%, steal 8.28%)

server cpu = total 42.44% (user 2.26%, nice 0.00%, system 40.18%, iowait 0.00%, steal 0.00%)

client ram = 1008 MB

server ram = 696 MB

UDP :

bandwidth = 322 Mbit/s

client cpu = total 78.57% (user 6.24%, nice 0.00%, system 57.02%, iowait 0.18%, steal 15.13%)

server cpu = total 21.02% (user 2.54%, nice 0.00%, system 18.48%, iowait 0.00%, steal 0.00%)

client ram = 995 MB

server ram = 668 MB

=========================================================

From worker to worker locally:

knb --verbose --client-node talos-7ye-8j0 --server-node talos-4re-kxo

=========================================================

Benchmark Results

=========================================================

Name : knb-3423407

Date : 2025-03-18 04:16:29 UTC

Generator : knb

Version : 1.5.0

Server : talos-4re-kxo

Client : talos-7ye-8j0

UDP Socket size : auto

=========================================================

Discovered CPU : Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz

Discovered Kernel : 6.12.18-talos

Discovered k8s version :

Discovered MTU : 1420

Idle :

bandwidth = 0 Mbit/s

client cpu = total 2.97% (user 1.50%, nice 0.00%, system 1.47%, iowait 0.00%, steal 0.00%)

server cpu = total 3.34% (user 1.72%, nice 0.00%, system 1.62%, iowait 0.00%, steal 0.00%)

client ram = 552 MB

server ram = 688 MB

Pod to pod :

TCP :

bandwidth = 1868 Mbit/s

client cpu = total 61.44% (user 2.59%, nice 0.00%, system 58.82%, iowait 0.03%, steal 0.00%)

server cpu = total 70.40% (user 2.82%, nice 0.00%, system 67.58%, iowait 0.00%, steal 0.00%)

client ram = 546 MB

server ram = 801 MB

UDP :

bandwidth = 912 Mbit/s

client cpu = total 64.14% (user 3.75%, nice 0.06%, system 60.30%, iowait 0.03%, steal 0.00%)

server cpu = total 52.89% (user 4.29%, nice 0.00%, system 48.60%, iowait 0.00%, steal 0.00%)

client ram = 556 MB

server ram = 679 MB

Pod to Service :

TCP :

bandwidth = 1907 Mbit/s

client cpu = total 58.43% (user 2.15%, nice 0.00%, system 56.25%, iowait 0.00%, steal 0.03%)

server cpu = total 61.83% (user 2.35%, nice 0.03%, system 59.45%, iowait 0.00%, steal 0.00%)

client ram = 547 MB

server ram = 813 MB

UDP :

bandwidth = 887 Mbit/s

client cpu = total 57.50% (user 3.88%, nice 0.00%, system 53.62%, iowait 0.00%, steal 0.00%)

server cpu = total 52.33% (user 4.18%, nice 0.03%, system 48.12%, iowait 0.00%, steal 0.00%)

client ram = 556 MB

server ram = 685 MB

=========================================================

Interestingly, all our traffic is routed through the Kubespan/WireGuard tunnel, including traffic between workers on the same LAN. For comparison, I created a new local cluster without Kubespan, and pod-to-pod TCP bandwidth between local nodes rose from 1868 Mbit/s to 6276 Mbit/s:

kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

talos-pft-tax Ready control-plane 52s v1.32.0 10.1.1.17 <none> Talos (v1.9.1) 6.12.6-talos containerd://2.0.1

talos-tfy-nig Ready <none> 49s v1.32.0 10.1.1.16 <none> Talos (v1.9.1) 6.12.6-talos containerd://2.0.1

knb --verbose --client-node talos-pft-tax --server-node talos-tfy-nig

=========================================================

Benchmark Results

=========================================================

Name : knb-3432162

Date : 2025-03-18 04:32:21 UTC

Generator : knb

Version : 1.5.0

Server : talos-tfy-nig

Client : talos-pft-tax

UDP Socket size : auto

=========================================================

Discovered CPU : QEMU Virtual CPU version 2.5+

Discovered Kernel : 6.12.6-talos

Discovered k8s version :

Discovered MTU : 1450

Idle :

bandwidth = 0 Mbit/s

client cpu = total 3.56% (user 1.85%, nice 0.00%, system 1.62%, iowait 0.09%, steal 0.00%)

server cpu = total 1.41% (user 0.59%, nice 0.00%, system 0.82%, iowait 0.00%, steal 0.00%)

client ram = 775 MB

server ram = 401 MB

Pod to pod :

TCP :

bandwidth = 6276 Mbit/s

client cpu = total 23.32% (user 2.97%, nice 0.00%, system 20.24%, iowait 0.11%, steal 0.00%)

server cpu = total 25.49% (user 2.13%, nice 0.00%, system 23.36%, iowait 0.00%, steal 0.00%)

client ram = 709 MB

server ram = 391 MB

UDP :

bandwidth = 861 Mbit/s

client cpu = total 55.67% (user 6.55%, nice 0.00%, system 49.02%, iowait 0.05%, steal 0.05%)

server cpu = total 46.81% (user 7.85%, nice 0.00%, system 38.96%, iowait 0.00%, steal 0.00%)

client ram = 711 MB

server ram = 382 MB

Pod to Service :

TCP :

bandwidth = 6326 Mbit/s

client cpu = total 24.26% (user 4.05%, nice 0.00%, system 20.11%, iowait 0.10%, steal 0.00%)

server cpu = total 25.99% (user 2.22%, nice 0.00%, system 23.77%, iowait 0.00%, steal 0.00%)

client ram = 693 MB

server ram = 333 MB

UDP :

bandwidth = 877 Mbit/s

client cpu = total 52.58% (user 7.26%, nice 0.00%, system 45.27%, iowait 0.05%, steal 0.00%)

server cpu = total 46.81% (user 7.76%, nice 0.00%, system 39.05%, iowait 0.00%, steal 0.00%)

client ram = 705 MB

server ram = 334 MB

=========================================================
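To put the two local runs side by side, the bandwidth with Kubespan can be expressed as a share of the bandwidth without it. This is only a rough comparison: the second cluster reports a different CPU model, MTU (1450 vs 1420), and Talos version, so not all of the gap is necessarily due to WireGuard. The helper name pct_of_baseline is made up for this sketch.

```shell
# Express a measured bandwidth as a percentage of a baseline (both in Mbit/s).
pct_of_baseline() {
  awk -v measured="$1" -v baseline="$2" \
    'BEGIN { printf "%.1f\n", measured / baseline * 100 }'
}

# Pod-to-pod TCP between two local nodes, taken from the runs above:
# 1868 Mbit/s with Kubespan, 6276 Mbit/s on the cluster without it.
pct_of_baseline 1868 6276   # prints 29.8
```

In other words, local pod-to-pod TCP through the tunnel reached roughly 30% of what the cluster without Kubespan achieved.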

Although Kubespan works well out of the box, it does not yet support meshed topologies, where you need to control how traffic is routed for specific node pools.

There is already an open issue for this, which you can find here.

Kilo

Kilo is a solution that supports meshed logical topologies. It lets you manage traffic between nodes in multiple datacenters while keeping native networking intact for communication within each datacenter.

The downside is that Kilo requires some customizations, which means additional logic and automation would need to be applied.

Apply terraform using Talos machine configuration for Kilo

The kilo-controlplane.tpl file has Kubespan disabled and kube-proxy enabled. For the CNI, we deploy Kilo, and we also add a CustomResourceDefinition for peers.kilo.squat.ai in the inlineManifests.

Update the paths in the talos.tf file by changing controlplane.tpl and worker.tpl to kilo-controlplane.tpl and kilo-worker.tpl, respectively.

We follow the same process for spinning up the nodes in both AWS and Proxmox.

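Once the masters are ready, Kilo will fail because it cannot find a kubeconfig to access the cluster. This happens because we are using Talos instead of plain kubeadm, so the kube-proxy ConfigMap that holds the kubeconfig does not exist. To fix this, create it from the generated kubeconfig: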

kubectl create configmap kube-proxy --from-file=kubeconfig.conf=kubeconfig -n kube-system

kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

aws-controlplane-1 Ready control-plane 15m v1.32.3 192.168.1.55 3.73.119.119 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-2 Ready control-plane 16m v1.32.3 192.168.0.75 18.195.244.87 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

aws-controlplane-3 Ready control-plane 16m v1.32.3 192.168.2.68 18.185.241.183 Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-8vs-lte Ready <none> 2m20s v1.32.3 10.1.1.22 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-8wf-r6g Ready <none> 2m19s v1.32.3 10.1.1.21 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

talos-ub5-bc2 Ready <none> 2m14s v1.32.3 10.1.1.23 <none> Talos (v1.9.5) 6.12.18-talos containerd://2.0.3

...

kube-system kilo-286hz 1/1 Running 0 6m46s 10.1.1.22 talos-8vs-lte <none> <none>

kube-system kilo-4h8kn 1/1 Running 0 12m 10.1.1.21 talos-8wf-r6g <none> <none>

kube-system kilo-gcclp 1/1 Running 0 13m 192.168.2.68 aws-controlplane-3 <none> <none>

kube-system kilo-rq2sl 1/1 Running 0 4m17s 192.168.1.55 aws-controlplane-1 <none> <none>

Next, we need to specify the topology, set logical locations, and ensure that at least one node in each location has an IP address that is routable from the other locations.

For AWS control planes (location):

for node in $(kubectl get nodes | grep -i aws | awk '{print $1}'); do kubectl annotate node $node kilo.squat.ai/location="aws"; done

For workers (location):

kubectl annotate node talos-8vs-lte talos-8wf-r6g talos-ub5-bc2 kilo.squat.ai/location="on-prem"

Endpoint for each location:

kubectl annotate node talos-8vs-lte kilo.squat.ai/force-endpoint="proxmox-public-ip:51820"

kubectl annotate node aws-controlplane-1 kilo.squat.ai/force-endpoint="3.73.119.119:51820"
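Because these annotations have to be applied after the nodes register, a small wrapper helps keep the step repeatable. The sketch below only prints the kubectl commands, so they can be reviewed first. The function name kilo_location_cmds and the name-prefix rule are assumptions that happen to fit this cluster: nodes named aws-* are in AWS, and everything else is on-prem.

```shell
# Read node names on stdin and print (not run) one kubectl command per
# node that sets its Kilo location annotation.
# Assumed rule: names starting with "aws-" are in AWS, the rest on-prem.
kilo_location_cmds() {
  local node location
  while read -r node; do
    [ -n "$node" ] || continue
    case $node in
      aws-*) location=aws ;;
      *)     location=on-prem ;;
    esac
    printf 'kubectl annotate node %s kilo.squat.ai/location=%s --overwrite\n' \
      "$node" "$location"
  done
}

# Demo with two node names from the cluster above.
printf '%s\n' aws-controlplane-1 talos-8vs-lte | kilo_location_cmds

# To apply for real, feed it the live node list and pipe the result to sh:
#   kubectl get nodes -o name | sed 's|^node/||' | kilo_location_cmds | sh
```

The force-endpoint annotations still need to be set by hand, since the public IPs cannot be derived from the node names.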

Rolling out and checking the network again:

kubectl rollout restart ds/kilo -n kube-system

kubectl get po -n network-test

NAME READY STATUS RESTARTS AGE

echo-a-54dcdd77c-psgqb 1/1 Running 0 34s

echo-b-549fdb8f8c-5pjbk 1/1 Running 0 34s

echo-b-host-7cfdb688b7-zff5b 1/1 Running 0 34s

host-to-b-multi-node-clusterip-c54bf67bf-f7d6c 1/1 Running 0 33s

host-to-b-multi-node-headless-55f66fc4c7-kd2wv 1/1 Running 0 33s

pod-to-a-5f56dc8c9b-64k7v 1/1 Running 0 34s

pod-to-a-allowed-cnp-5dc859fd98-684lh 1/1 Running 0 34s

pod-to-a-denied-cnp-68976d7584-6w6fn 1/1 Running 0 34s

pod-to-b-intra-node-nodeport-5884978697-ddtz4 1/1 Running 0 32s

pod-to-b-multi-node-clusterip-7d65578cf5-rx9ws 1/1 Running 0 33s

pod-to-b-multi-node-headless-8557d86d6f-8dqr8 1/1 Running 0 33s

pod-to-b-multi-node-nodeport-7847b5df8f-zjlzt 1/1 Running 0 33s

pod-to-external-1111-797c647566-qnc22 1/1 Running 0 34s

pod-to-external-fqdn-allow-google-cnp-5688c867dd-9btvt 1/1 Running 0 33s

Kilo knb benchmark

Let’s test the network performance by running the same test between the master and worker nodes, as well as between worker nodes.

Master to worker:

kubectl taint nodes aws-controlplane-1 node-role.kubernetes.io/control-plane:NoSchedule-

knb --verbose --client-node aws-controlplane-1 --server-node talos-8vs-lte

=========================================================

Benchmark Results

=========================================================

Name : knb-3461466

Date : 2025-03-18 05:25:08 UTC

Generator : knb

Version : 1.5.0

Server : talos-8vs-lte

Client : aws-controlplane-1

UDP Socket size : auto

=========================================================

Discovered CPU : Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz

Discovered Kernel : 6.12.18-talos

Discovered k8s version :

Discovered MTU : 1420

Idle :

bandwidth = 0 Mbit/s

client cpu = total 15.67% (user 4.70%, nice 0.00%, system 2.74%, iowait 0.44%, steal 7.79%)

server cpu = total 2.82% (user 1.41%, nice 0.00%, system 1.41%, iowait 0.00%, steal 0.00%)

client ram = 799 MB

server ram = 417 MB

Pod to pod :

TCP :

bandwidth = 942 Mbit/s

client cpu = total 61.10% (user 5.12%, nice 0.00%, system 36.35%, iowait 0.39%, steal 19.24%)

server cpu = total 22.78% (user 1.35%, nice 0.00%, system 21.43%, iowait 0.00%, steal 0.00%)

client ram = 787 MB

server ram = 479 MB

UDP :

bandwidth = 448 Mbit/s

client cpu = total 75.97% (user 6.76%, nice 0.00%, system 49.81%, iowait 0.28%, steal 19.12%)

server cpu = total 18.07% (user 2.96%, nice 0.00%, system 15.11%, iowait 0.00%, steal 0.00%)

client ram = 798 MB

server ram = 391 MB

Pod to Service :

TCP :

bandwidth = 1253 Mbit/s

client cpu = total 69.80% (user 3.58%, nice 0.00%, system 45.60%, iowait 0.36%, steal 20.26%)

server cpu = total 30.59% (user 1.88%, nice 0.00%, system 28.71%, iowait 0.00%, steal 0.00%)

client ram = 787 MB

server ram = 592 MB

UDP :

bandwidth = 478 Mbit/s

client cpu = total 80.83% (user 6.97%, nice 0.00%, system 53.98%, iowait 0.25%, steal 19.63%)

server cpu = total 18.77% (user 2.70%, nice 0.03%, system 16.04%, iowait 0.00%, steal 0.00%)

client ram = 795 MB

server ram = 391 MB

=========================================================

Worker to worker:

knb --verbose --client-node talos-8vs-lte --server-node talos-8wf-r6g

=========================================================

Benchmark Results

=========================================================

Name : knb-3467118

Date : 2025-03-18 05:27:23 UTC

Generator : knb

Version : 1.5.0

Server : talos-8wf-r6g

Client : talos-8vs-lte

UDP Socket size : auto

=========================================================

Discovered CPU : Intel(R) Xeon(R) Gold 6240 CPU @ 2.60GHz

Discovered Kernel : 6.12.18-talos

Discovered k8s version :

Discovered MTU : 1420

Idle :

bandwidth = 0 Mbit/s

client cpu = total 2.57% (user 1.29%, nice 0.00%, system 1.28%, iowait 0.00%, steal 0.00%)

server cpu = total 2.05% (user 1.10%, nice 0.00%, system 0.95%, iowait 0.00%, steal 0.00%)

client ram = 416 MB

server ram = 521 MB

Pod to pod :

TCP :

bandwidth = 8409 Mbit/s

client cpu = total 17.34% (user 1.85%, nice 0.00%, system 15.49%, iowait 0.00%, steal 0.00%)

server cpu = total 22.70% (user 1.96%, nice 0.00%, system 20.74%, iowait 0.00%, steal 0.00%)

client ram = 398 MB

server ram = 508 MB

UDP :

bandwidth = 1403 Mbit/s

client cpu = total 36.43% (user 3.27%, nice 0.00%, system 33.16%, iowait 0.00%, steal 0.00%)

server cpu = total 35.20% (user 5.36%, nice 0.00%, system 29.84%, iowait 0.00%, steal 0.00%)

client ram = 405 MB

server ram = 542 MB

Pod to Service :

TCP :

bandwidth = 8366 Mbit/s

client cpu = total 21.10% (user 1.64%, nice 0.04%, system 19.42%, iowait 0.00%, steal 0.00%)

server cpu = total 22.15% (user 1.85%, nice 0.00%, system 20.30%, iowait 0.00%, steal 0.00%)

client ram = 398 MB

server ram = 515 MB

UDP :

bandwidth = 1349 Mbit/s

client cpu = total 36.52% (user 3.21%, nice 0.00%, system 33.31%, iowait 0.00%, steal 0.00%)

server cpu = total 34.03% (user 5.38%, nice 0.00%, system 28.65%, iowait 0.00%, steal 0.00%)

client ram = 414 MB

server ram = 535 MB

=========================================================

Conclusion

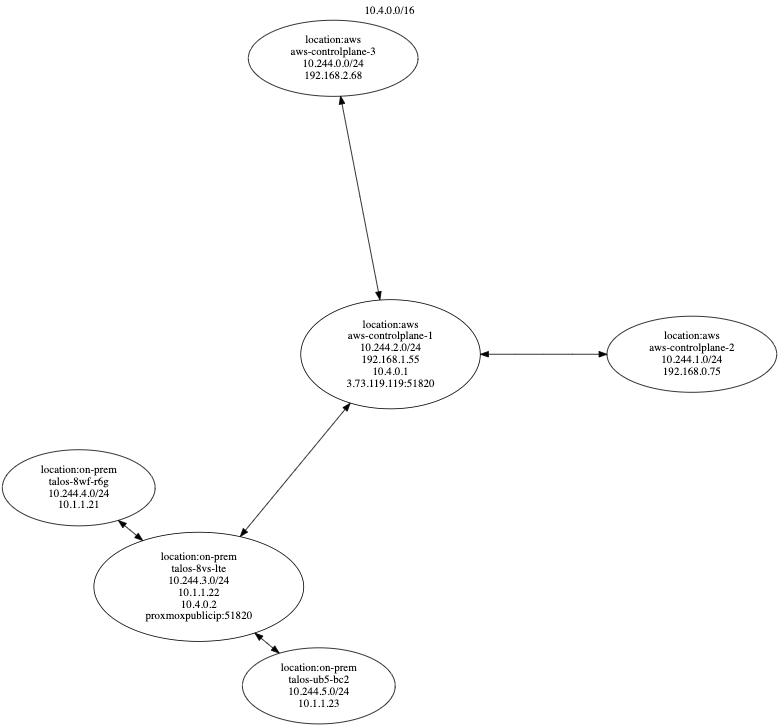

We can see that the nodes are aware of each other and use the internal connection based on their logical location, as demonstrated in the diagram below Generated using this :

To conclude, since it's not possible to set annotations in advance for the nodes, automating the process completely when Talos/Kubeadm is bootstrapped could be problematic. This would still require an additional layer to set up the mesh. From this perspective, Kubespan is much easier to implement, but it currently doesn't support logical separation.

Delete cluster

# Delete VMs

for id in {1001..1003}; do

qm stop $id && qm destroy $id

done

terraform destroy -auto-approve -var-file=vars/dev.tfvars

References

- https://github.com/isovalent/terraform-aws-talos

- https://github.com/pfenerty/talos-aws-terraform

- https://dev.to/sergelogvinov/kubernetes-on-hybrid-cloud-talos-network-51lo

- https://dev.to/sergelogvinov/kubernetes-on-hybrid-cloud-network-design-3m9f

- https://www.talos.dev/v1.9/talos-guides/network/kubespan/

- https://kilo.squat.ai/
