Introduction

Model Context Protocol (MCP) is an open standard for connecting AI assistants to the systems where data is stored. It was open-sourced by Anthropic.

Traditional AI agents can respond to user prompts and use Retrieval-Augmented Generation (RAG) to answer domain-specific queries. On their own, however, they cannot fetch data from a specific local data source, make API calls, or execute code on a local machine.

Put simply, such an agent could not summarize my last three commits in a specific GitHub repository or retrieve all verified users by running a query against my database. This is where MCP comes into the picture.

Model Context Protocol (MCP)

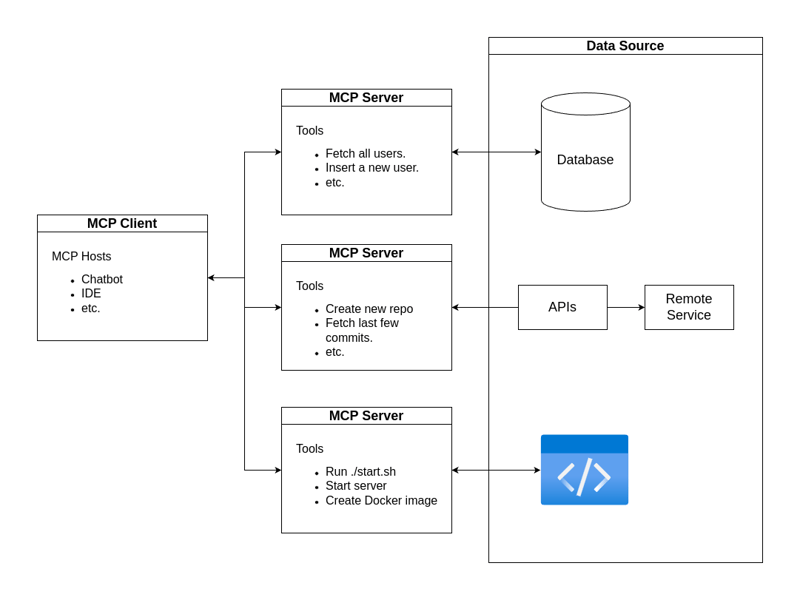

Model Context Protocol (MCP) consists of three main components: MCP Host, MCP Client, and MCP Server.

MCP Host

An MCP Host is an AI interface, such as a chatbot, IDE, or any other AI-powered application that accepts user prompts and returns the generated response to the user.

MCP Client

Each MCP Client maintains a one-to-one connection with a single MCP Server. The MCP Host creates one client per server, so a single host can interact with multiple MCP Servers to fetch relevant information.
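The MCP architecture documentation describes each client as holding a one-to-one connection with a single server, with the host creating one client per server. Here is a minimal sketch of that arrangement. The `Host` and `Client` classes and the server names are hypothetical and are not part of any MCP SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Client:
    """Hypothetical stand-in for an MCP Client bound to exactly one server."""
    server_name: str

    def describe(self) -> str:
        return f"client connected to {self.server_name}"


@dataclass
class Host:
    """Hypothetical host that keeps one client per configured server."""
    clients: dict[str, Client] = field(default_factory=dict)

    def connect(self, server_name: str) -> Client:
        # Reuse the existing client if this server is already connected.
        if server_name not in self.clients:
            self.clients[server_name] = Client(server_name)
        return self.clients[server_name]


host = Host()
host.connect("github")
host.connect("database")
print(sorted(host.clients))
```

Keying the clients by server name keeps the mapping explicit: adding a server means adding a client, and no client ever talks to two servers.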

MCP Server

Each MCP Server exposes one or more tools, each designed to interact with a specific data source or system. These can include:

- Databases (SQL/NoSQL) – Running specific queries to fetch structured data.

- Web Services (APIs) – Making API calls to retrieve external data.

- Code Execution Environments – Running specific scripts or commands.

The tools on a server can cover different operations. For example:

- Tool 1: Create a new GitHub repository.

- Tool 2: Fetch specific data from a database.

- Tool 3: Execute a predefined script.

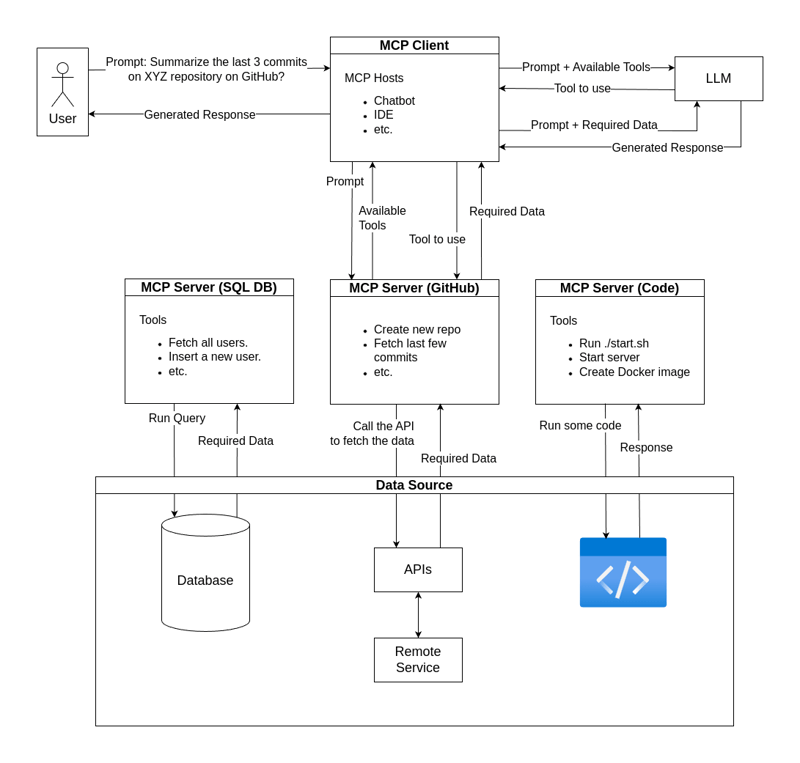
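To make the idea of a server that offers tools more concrete, here is a minimal in-memory sketch. It is not the MCP SDK: `ToyServer`, `Tool`, and `run_script` are hypothetical names, and a real MCP Server would talk to its client over a transport instead of direct method calls. The `name`, `description`, and `inputSchema` fields mirror how the MCP specification describes a tool.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON Schema describing the arguments
    handler: Callable[..., Any]   # function that does the actual work


class ToyServer:
    """Hypothetical in-memory server holding a registry of tools."""

    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self) -> list[dict[str, Any]]:
        # Only metadata is exposed; the handlers stay on the server.
        return [
            {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in self._tools.values()
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name].handler(**arguments)


def run_script(script: str) -> str:
    # Placeholder: a real tool would execute a predefined script here.
    return f"ran {script}"


server = ToyServer()
server.register(Tool(
    name="run_script",
    description="Execute a predefined script.",
    input_schema={
        "type": "object",
        "properties": {"script": {"type": "string"}},
        "required": ["script"],
    },
    handler=run_script,
))
print(server.list_tools())
```

Calling `server.call_tool("run_script", {"script": "backup.sh"})` dispatches to the registered handler; an unknown tool name raises `KeyError`.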

MCP Workflow

The workflow of MCP follows a structured process:

- The user sends a prompt to the MCP Host (e.g., an AI chatbot or IDE).

- The MCP Host queries the MCP Client to fetch a list of available tools from relevant MCP Servers.

- The MCP Client retrieves the tools from the MCP Server and forwards the list to the LLM along with the prompt.

- The LLM analyzes the prompt and selects the appropriate tool(s) needed to fulfill the query.

- The MCP Client instructs the MCP Server to execute the selected tool(s).

- The MCP Server processes the request (e.g., making an API call, running a query, or executing a script) and returns the results to the MCP Client.

- The MCP Client forwards the results to the LLM along with the original prompt.

- Finally, the LLM-generated response is returned to the user.
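The steps above can be sketched as a single function. This is only an illustration under loud assumptions: the tool table, the canned data, and both `fake_llm_*` functions are hypothetical stand-ins, and the "LLM" here is a keyword-overlap stub and not a real model.

```python
from typing import Any

# Hypothetical tool table standing in for an MCP Server.
TOOLS: dict[str, dict[str, Any]] = {
    "get_verified_users": {
        "description": "Return all verified users from the database.",
        "handler": lambda: ["alice", "bob"],  # canned data, no real database
    },
    "create_repository": {
        "description": "Create a new GitHub repository.",
        "handler": lambda: "created",
    },
}


def list_tools() -> list[dict[str, str]]:
    """The client fetches tool metadata from the server."""
    return [{"name": n, "description": t["description"]} for n, t in TOOLS.items()]


def fake_llm_select(prompt: str, tools: list[dict[str, str]]) -> str:
    """Stub for the LLM's tool choice: pick the tool sharing the most words."""
    words = set(prompt.lower().split())
    best = max(tools, key=lambda t: len(words & set(t["description"].lower().split())))
    return best["name"]


def call_tool(name: str) -> Any:
    """The server executes the selected tool and returns the result."""
    return TOOLS[name]["handler"]()


def fake_llm_answer(prompt: str, result: Any) -> str:
    """Stub for the LLM turning the tool result into a reply."""
    return f"Answer to '{prompt}': {result}"


def handle_prompt(prompt: str) -> str:
    tools = list_tools()                      # fetch available tools
    chosen = fake_llm_select(prompt, tools)   # LLM picks a tool
    result = call_tool(chosen)                # server runs it
    return fake_llm_answer(prompt, result)    # LLM writes the response


print(handle_prompt("list all verified users"))
```

The point of the sketch is the order of the calls in `handle_prompt`: tool discovery comes first, tool selection is the model's job, and tool execution stays on the server side.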

Practical Workflow Example

Let’s break this down with a practical example.

User Prompt: "Summarize the last 3 commits on XYZ repository on GitHub."

- The MCP Client asks the MCP Server that handles GitHub-related queries for its list of available tools.

- The MCP Server responds with tools such as:

  - Tool 1: Create a new repository.

  - Tool 2: Fetch the last few commits.

- The MCP Client sends the tool list to the LLM, along with the user’s prompt, and asks it to determine the correct tool(s) to use.

- The LLM selects 'Tool 2: Fetch the last few commits' as the appropriate tool for this query.

- The MCP Client instructs the MCP Server to execute Tool 2.

- The MCP Server makes the necessary API call to GitHub, retrieves the last three commits, and sends the data back to the MCP Client.

- The MCP Client forwards the commit data, along with the prompt, to the LLM, which generates a summarized response.

- The final summary is returned to the user.

This structured process allows AI agents to retrieve real-time data and execute tasks beyond their traditional capabilities.
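Under the hood, MCP messages are JSON-RPC 2.0, and the specification defines the `tools/list` and `tools/call` methods for discovering and invoking tools. For the GitHub example, the exchange might look roughly like the sketch below. The tool name `list_commits`, its arguments, the owner `example-user`, and the commit text are all made up for illustration; a real GitHub MCP Server may name and shape these differently.

```python
import json

# Request the client sends to discover the server's tools.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Request the client sends once the LLM has picked a tool.
# "list_commits" and its arguments are hypothetical.
call_request = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {
        "name": "list_commits",
        "arguments": {"owner": "example-user", "repo": "XYZ", "limit": 3},
    },
}

# Shape of a successful reply; the commit lines are invented sample data.
call_response = {
    "jsonrpc": "2.0",
    "id": 2,  # matches the id of the request it answers
    "result": {
        "content": [
            {
                "type": "text",
                "text": "a1b2c3 Fix typo in README\nd4e5f6 Add login test\n0718ab Bump version",
            }
        ],
        "isError": False,
    },
}

print(json.dumps(call_request, indent=2))
```

The text inside `result.content` is what the MCP Client would hand to the LLM, together with the user's prompt, to produce the summary.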

Final Words

That wraps up this post on Model Context Protocol. I hope this simplified explanation helped you understand the concept. If you are interested in learning more, here are some of the references I used while researching MCP:

Model Context Protocol Overview - Anthropic

MCP Introduction

YouTube Video 1

YouTube Video 2

If you have any questions or thoughts, feel free to discuss them in the comments!
