Model Context Protocol (MCP) is the latest trend of 2025.

It makes it easier to scale your workflows and opens up many powerful use cases.

Today, we will learn how to connect Cursor with 100+ MCP servers, with some awesome examples at the end.

Let's jump in.

What is covered?

In a nutshell, we are covering these topics in detail.

- What is Model Context Protocol (MCP)?

- A step-by-step guide on how to connect Cursor to 100+ fully managed MCP servers with built-in Auth.

- Practical use cases with examples.

I will be using Composio for MCP servers because it has built-in auth and comes with fully managed servers.

1. What is Model Context Protocol (MCP)?

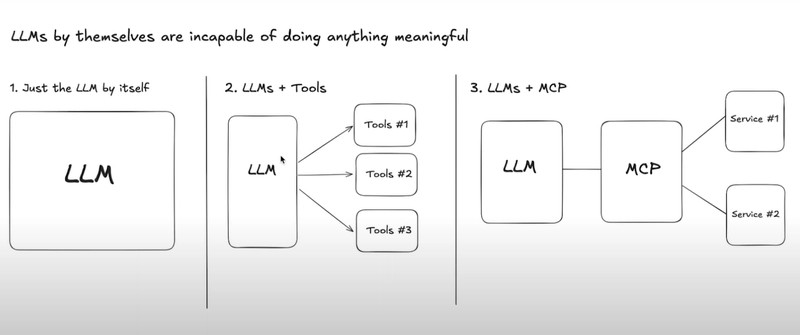

Model Context Protocol (MCP) is a new open protocol that standardizes how applications provide context and tools to LLMs.

Think of it as a universal connector for AI. In Cursor, MCP works as a plugin system that lets you extend the Agent’s capabilities by connecting it to various data sources and tools.
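Under the hood, MCP messages are JSON-RPC 2.0. As a rough illustration, here is how a client could build a `tools/call` request by hand in Python, assuming a server that exposes an `add` tool (like the TypeScript example later in this post). The `make_tool_call` helper is hypothetical; in practice the SDK or the host app (such as Cursor) builds these messages for you.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool.

    Hypothetical helper for illustration only; real clients use an SDK.
    """
    message = {
        "jsonrpc": "2.0",          # protocol version required by JSON-RPC 2.0
        "id": request_id,          # lets the client match the server's response
        "method": "tools/call",    # the MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

print(make_tool_call(1, "add", {"a": 2, "b": 3}))
# {"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
```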

MCP helps you build agents and complex workflows on top of LLMs.

For example, an MCP server for Obsidian helps AI assistants search and read notes from your Obsidian vault.

Your AI agent can now:

→ Send emails through Gmail

→ Create tasks in Linear

→ Search documents in Notion

→ Post messages in Slack

→ Update records in Salesforce

All while you chat naturally with it.

Think about what this means for productivity.

Tasks that used to require context switching between 5+ apps can now happen in a single conversation with your agent.

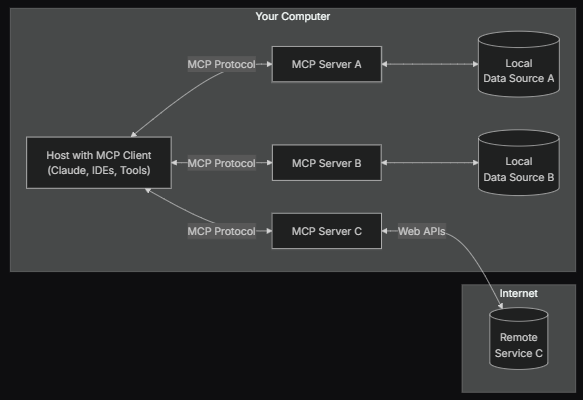

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers.
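To make the client-server split concrete, here is a toy, in-process model in Python. It is not real MCP networking: each `ToyServer` simply advertises named tools, and the `ToyHost` routes a call to whichever connected server provides that tool, roughly the way a host picks a server for a request. All class, server and tool names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class ToyServer:
    """Stand-in for an MCP server: a name plus a table of tool handlers."""
    name: str
    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)

@dataclass
class ToyHost:
    """Stand-in for a host app (like Cursor) connected to several servers."""
    servers: List[ToyServer] = field(default_factory=list)

    def list_tools(self) -> Dict[str, str]:
        # Map every advertised tool to the server that provides it.
        return {tool: server.name for server in self.servers for tool in server.tools}

    def call(self, tool: str, **kwargs: object) -> object:
        # Route the call to the first connected server that exposes this tool.
        for server in self.servers:
            if tool in server.tools:
                return server.tools[tool](**kwargs)
        raise KeyError(f"no connected server provides tool {tool!r}")

calculator = ToyServer("calculator", {"add": lambda a, b: a + b})
notes = ToyServer("notes", {"search": lambda query: [n for n in ("mcp intro", "cursor tips") if query in n]})
host = ToyHost([calculator, notes])

print(host.list_tools())                 # {'add': 'calculator', 'search': 'notes'}
print(host.call("add", a=2, b=3))        # 5
print(host.call("search", query="mcp"))  # ['mcp intro']
```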

There is a very interesting blog post that explains MCP's architecture, lifecycle, protocol, drawbacks and behind-the-scenes details in depth.

If you'd rather watch a video that explains why MCP matters, check out this one!

What MCP Servers Are Not

A lot of people are confused about it, so let's clarify things:

❌ Not a replacement for APIs - MCP servers can use APIs, but MCP is a standardized interface on top of them, not a replacement for specific API functionality.

❌ Not complex to create - developers can build MCP servers with the official SDKs, and there are plenty of templates, videos and other resources online.

❌ Not a database - MCP servers don't store data themselves; they act as a bridge between the LLM and your tools and data sources.

And MCP is not restricted to remote servers; you can run servers locally as well.

2. A step-by-step guide on how to connect Cursor to 100+ fully managed MCP servers with built-in Auth.

In this section, we will be exploring how to connect Cursor with MCP servers.

If you want to explore how to add and use custom MCP servers in Cursor yourself, read the official docs.

Step 1: Prerequisites.

Install Node.js and make sure npx is available on your system.

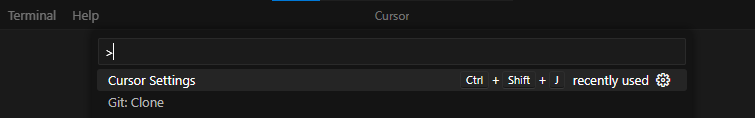

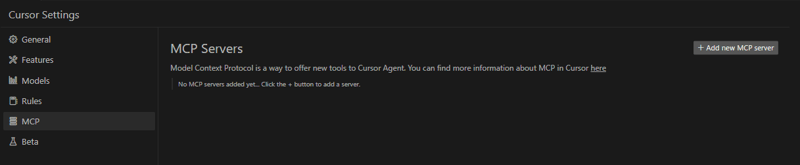
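If you want to confirm the prerequisites from a script, here is a small Python sketch that looks up `node` and `npx` on your PATH and asks each for its version. The function name is hypothetical, and it only checks what's on PATH, not which Node.js version a particular MCP server needs.

```python
import shutil
import subprocess
from typing import Dict, Optional, Tuple

def check_prerequisites(tools: Tuple[str, ...] = ("node", "npx")) -> Dict[str, Optional[str]]:
    """Return each tool's reported version, or None if it is not on PATH."""
    versions: Dict[str, Optional[str]] = {}
    for tool in tools:
        path = shutil.which(tool)  # full path to the executable, or None
        if path is None:
            versions[tool] = None
            continue
        try:
            # Both `node --version` and `npx --version` print a version and exit.
            result = subprocess.run([path, "--version"], capture_output=True, text=True, timeout=30)
            versions[tool] = result.stdout.strip() or "unknown"
        except (OSError, subprocess.TimeoutExpired):
            versions[tool] = "unknown"  # found on PATH but could not be run
    return versions

for tool, version in check_prerequisites().items():
    print(f"{tool}: {version or 'NOT FOUND - install Node.js first'}")
```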

Step 2: Enable MCP server in Cursor.

You can open the command palette in Cursor with Ctrl + Shift + P (Cmd + Shift + P on macOS) and search for Cursor Settings.

You will find an MCP option on the sidebar.

Step 3: Using a pre-defined MCP server.

We could also create one from scratch, but let's use a predefined one for simplicity.

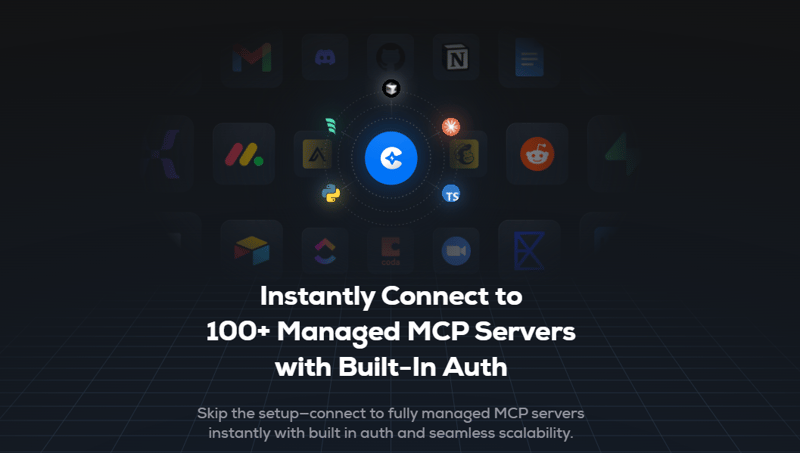

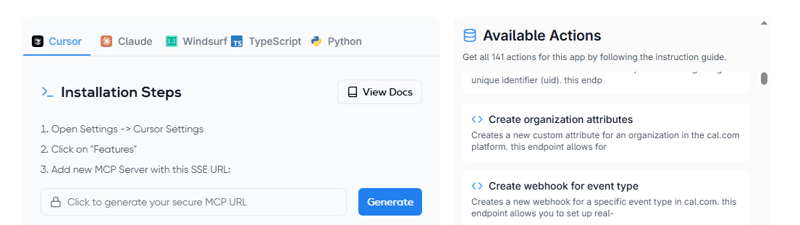

We will use Composio for the servers since they have built-in auth. You can find the list at mcp.composio.dev. It supports Claude and Cursor as MCP hosts.

⚡ Built-in Auth comes with support for OAuth, API keys, JWT and Basic Auth. This means you don't have to create your own login system.

⚡ Fully managed servers eliminate the need for complex setups, making it easy to integrate AI agents with tools like Gmail, Slack, Notion, Linear and more.

⚡ Better tool-calling accuracy allows AI agents to interact smoothly with integrated apps.

It also means less downtime and fewer maintenance problems. You can read more on composio.dev/mcp.

You can easily integrate with a bunch of useful MCP servers without writing any code.

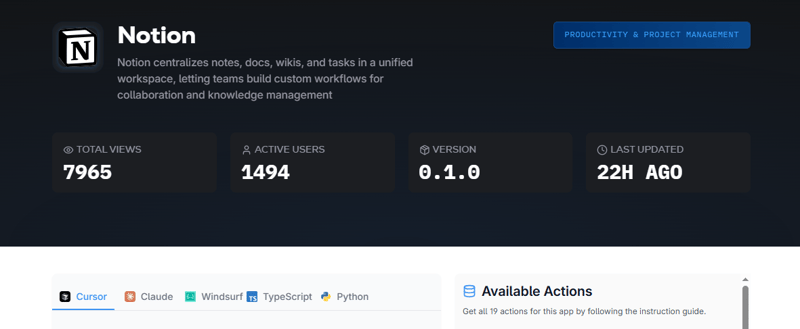

For each server, you will find the total number of active users, its current version, when it was last updated and all the available actions.

You can also choose how to integrate it: Claude (macOS), Windsurf (macOS), TypeScript or Python.

Some reasons why I decided to go with Composio:

⚡ Fully managed servers with built-in authentication (as explained earlier).

⚡ Supports 250+ tools like Slack, Notion, Gmail, Linear and Salesforce.

⚡ Provides 20,000+ pre-built API actions for quick integration without coding.

⚡ Can operate locally or remotely depending on your configuration needs.

⚡ It's built for AI agents, so it can connect them to tools for tasks like sending emails, creating tasks or managing tickets in a single conversation.

If you're interested in creating a server from scratch, here's how you can do it with the TypeScript SDK.

First, install the SDK package (the example below also imports `zod`, so install it too):

```bash
npm install @modelcontextprotocol/sdk zod
```

This is how you can create a simple MCP server that exposes a calculator tool and some data.

```typescript
import { McpServer, ResourceTemplate } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { z } from "zod";

// Create an MCP server
const server = new McpServer({
  name: "Demo",
  version: "1.0.0"
});

// Add an addition tool
server.tool("add",
  { a: z.number(), b: z.number() },
  async ({ a, b }) => ({
    content: [{ type: "text", text: String(a + b) }]
  })
);

// Add a dynamic greeting resource
server.resource(
  "greeting",
  new ResourceTemplate("greeting://{name}", { list: undefined }),
  async (uri, { name }) => ({
    contents: [{
      uri: uri.href,
      text: `Hello, ${name}!`
    }]
  })
);

// Start receiving messages on stdin and sending messages on stdout
// (top-level await requires running this file as an ES module)
const transport = new StdioServerTransport();
await server.connect(transport);
```

Below are some of the core concepts that will help you understand the above code:

⚡ Server - McpServer is your core interface to the MCP protocol. It handles connection management, protocol compliance and message routing.

⚡ Resources - This is how you expose data to LLMs. They're similar to GET endpoints in a REST API.

⚡ Tools - Tools let LLMs take actions through your server. Unlike resources, tools are expected to perform computation and have side effects.

⚡ Prompts - Prompts are reusable templates that help LLMs easily interact with your server.
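Resources such as the greeting example above are addressed by URI templates like `greeting://{name}`. The SDK does this matching for you; purely to illustrate the idea, here is a simplified Python matcher that handles only plain `{var}` placeholders (real MCP resource templates follow RFC 6570, which supports much more). The function names are hypothetical.

```python
import re
from typing import Dict, Optional

def match_template(template: str, uri: str) -> Optional[Dict[str, str]]:
    """Return the {var} values captured from `uri`, or None if it doesn't match.

    Simplified: supports only plain `{var}` placeholders, each matching one path segment.
    """
    # re.split with a capture group alternates literal text and variable names.
    parts = re.split(r"\{(\w+)\}", template)
    pattern = "".join(
        f"(?P<{part}>[^/]+)" if i % 2 else re.escape(part)
        for i, part in enumerate(parts)
    )
    match = re.fullmatch(pattern, uri)
    return match.groupdict() if match else None

def read_greeting(uri: str) -> dict:
    """Hypothetical resource handler mirroring the TypeScript greeting example."""
    params = match_template("greeting://{name}", uri)
    if params is None:
        raise ValueError(f"unknown resource: {uri}")
    return {"contents": [{"uri": uri, "text": f"Hello, {params['name']}!"}]}

print(match_template("greeting://{name}", "greeting://Alice"))  # {'name': 'Alice'}
print(read_greeting("greeting://Bob")["contents"][0]["text"])   # Hello, Bob!
```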

You can run your server using stdio (for command-line tools or direct integrations) or HTTP with SSE (for remote servers).
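To demystify what `McpServer` and `StdioServerTransport` do for you, here is a heavily simplified, stdlib-only Python sketch of a stdio server loop: it reads one JSON-RPC message per line, answers `tools/call` for an `add` tool and writes one reply per line. It is not how the SDKs are implemented; it skips the `initialize` handshake, `tools/list`, resources and input validation, and the `TOOLS` table and function names are hypothetical.

```python
import io
import json
from typing import Callable, Dict, TextIO

# Hypothetical tool table mirroring the TypeScript `add` tool above.
TOOLS: Dict[str, Callable[[dict], str]] = {
    "add": lambda args: str(args["a"] + args["b"]),
}

def handle(request: dict) -> dict:
    """Turn one JSON-RPC request into a JSON-RPC response (tools/call only)."""
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        return reply
    # Tool results carry a list of content items, like the TypeScript handler's return value.
    reply["result"] = {"content": [{"type": "text", "text": tool(params.get("arguments", {}))}]}
    return reply

def serve(stdin: TextIO, stdout: TextIO) -> None:
    """stdio framing: read one JSON message per line, write one reply per line."""
    for line in stdin:
        if not line.strip():
            continue
        request = json.loads(line)
        if "id" not in request:
            continue  # notifications (no id) must not get a reply
        # json.dumps escapes embedded newlines, so each reply stays on a single line.
        stdout.write(json.dumps(handle(request)) + "\n")
        stdout.flush()

# A real server would call serve(sys.stdin, sys.stdout); in-memory streams stand in here.
call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
out = io.StringIO()
serve(io.StringIO(json.dumps(call) + "\n"), out)
print(out.getvalue().strip())
# {"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "5"}]}}
```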

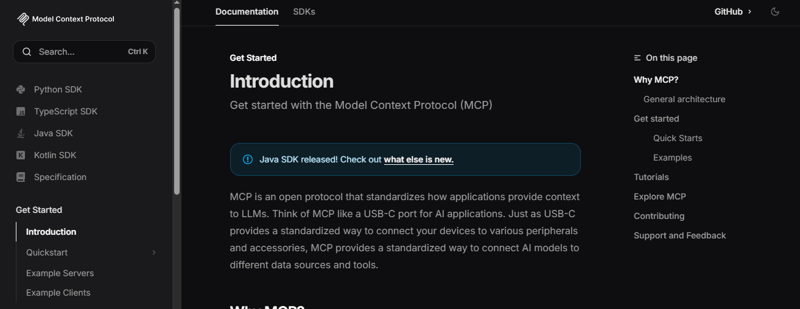

On the official MCP website (modelcontextprotocol.io), you will find the TypeScript, Python, Java and Kotlin SDKs. Each SDK's repository has all the necessary details in its README.

If you're looking for a good blog, check out MCP server: A step-by-step guide to building from scratch.

Step 4: Integrating MCP server.

It's time to integrate one with Cursor. For now, we will use the Hacker News MCP server.

If you don't know, Hacker News is a tech-focused news aggregator by Y Combinator, featuring user-submitted stories and discussions on startups, programming and awesome projects.

Cursor changed this method just a couple of days ago, so I'm explaining both the new and the previous methods to avoid confusion.

Newer version.

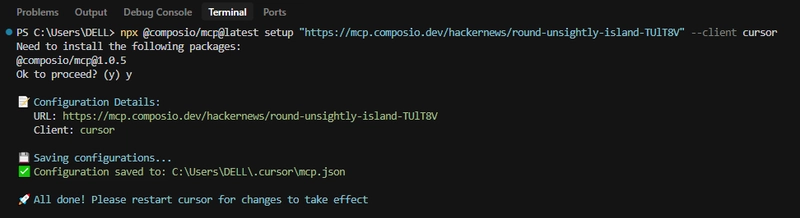

We will need to generate the terminal command. Check this page to generate yours.

It will look something like this.

```bash
npx @composio/mcp@latest setup "https://mcp.composio.dev/hackernews/xyzxyz..." --client cursor
```

You can place this configuration in two locations, depending on your use case:

1) For tools specific to a project, create a .cursor/mcp.json file in your project directory. This allows you to define MCP servers that are only available within that specific project.

2) For tools that you want to use across all projects, create a ~/.cursor/mcp.json file in your home directory. This makes MCP servers available in all your Cursor workspaces.
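If you'd rather script this edit than make it by hand, here is a Python sketch that picks the project-level or global `mcp.json` path and inserts a server entry while keeping any existing ones. It assumes the file is plain JSON with a top-level `mcpServers` object, as shown in this section; the server name `my-node-server` and package `mcp-server` are placeholders.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional

def mcp_config_path(project_dir: Optional[Path] = None) -> Path:
    """Project-level .cursor/mcp.json if a project dir is given, else the global one in ~."""
    base = project_dir if project_dir is not None else Path.home()
    return base / ".cursor" / "mcp.json"

def add_server(config_path: Path, name: str, entry: dict) -> dict:
    """Add or replace one entry under "mcpServers", keeping any other servers."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = entry
    config_path.parent.mkdir(parents=True, exist_ok=True)  # create .cursor/ if needed
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config

# Demo in a throwaway project directory so your real config is untouched.
with tempfile.TemporaryDirectory() as project:
    path = mcp_config_path(Path(project))
    add_server(path, "my-node-server", {"command": "npx", "args": ["-y", "mcp-server"]})
    print(path.read_text())
```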

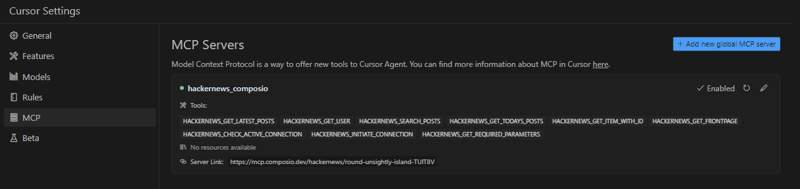

It will display the available actions and a green status dot, which indicates that the server has been successfully integrated.

This is what the mcp.json file looks like for each server type (SSE, Python CLI and Node.js CLI, in that order).

SSE server: you set up and run the server yourself, and it can be networked so others can access it too.

```json
{
  "mcpServers": {
    "server-name": {
      "url": "http://localhost:3000/sse",
      "env": {
        "API_KEY": "value"
      }
    }
  }
}
```

Python CLI server (stdio format): Cursor runs this process for you automatically, launching it with `python`.

```json
{
  "mcpServers": {
    "server-name": {
      "command": "python",
      "args": ["mcp-server.py"],
      "env": {
        "API_KEY": "value"
      }
    }
  }
}
```

Node.js CLI server (stdio format): Cursor runs this process for you automatically, launching it with `npx`.

```json
{
  "mcpServers": {
    "server-name": {
      "command": "npx",
      "args": ["-y", "mcp-server"],
      "env": {
        "API_KEY": "value"
      }
    }
  }
}
```
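The three configurations differ mainly in whether an entry has a `url` (SSE, a server you run yourself) or a `command` (stdio, a process Cursor launches). As a quick sanity check, here is a Python sketch that reads an `mcp.json` and reports which transport each entry uses. It encodes only that one rule, `json.loads` requires plain JSON (no `//` comments), and the function name is hypothetical.

```python
import json
from typing import Dict

def describe_servers(config_text: str) -> Dict[str, str]:
    """Report the transport of each entry in an mcp.json document."""
    servers = json.loads(config_text).get("mcpServers", {})
    kinds: Dict[str, str] = {}
    for name, entry in servers.items():
        if "url" in entry:
            kinds[name] = "sse"    # Cursor connects to a server you run yourself
        elif "command" in entry:
            kinds[name] = "stdio"  # Cursor launches this process for you
        else:
            kinds[name] = "invalid: needs 'url' or 'command'"
    return kinds

example = """{
  "mcpServers": {
    "remote": {"url": "http://localhost:3000/sse"},
    "python-cli": {"command": "python", "args": ["mcp-server.py"]},
    "node-cli": {"command": "npx", "args": ["-y", "mcp-server"]}
  }
}"""
print(describe_servers(example))
# {'remote': 'sse', 'python-cli': 'stdio', 'node-cli': 'stdio'}
```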

You can check out the list of sample servers and implementations.

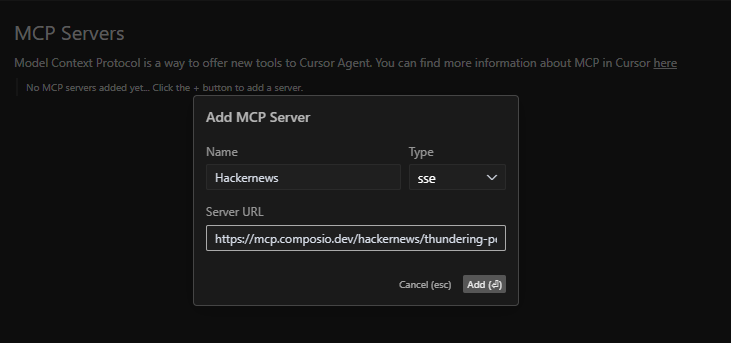

Older version.

We will need to generate a secure MCP URL. Check this page to generate yours.

Make sure you select the `sse` type, which gives you the option to enter the server URL.

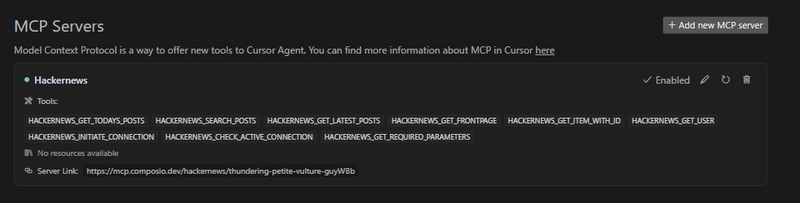

It will display the available actions and a green status dot, which indicates that the server has been successfully integrated.

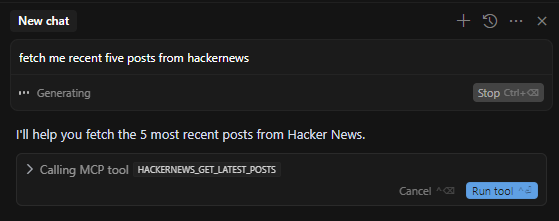

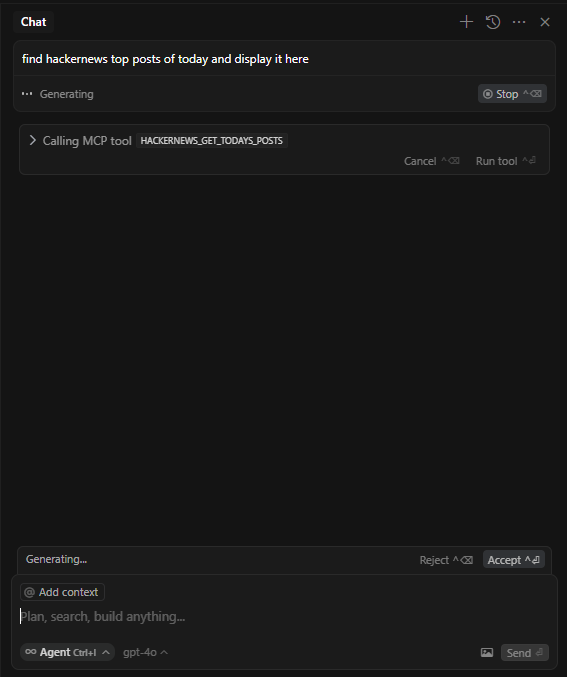

Step 5: Using the server directly within Agent.

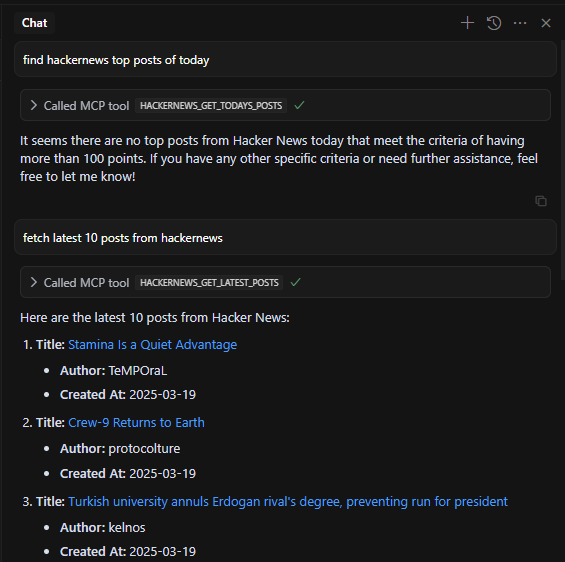

You can open the chat with Ctrl + I (Cmd + I on macOS). Then simply ask anything related to Hacker News, like "find today's top Hacker News posts".

As you can see, it picks the appropriate MCP server (if you have several) and uses the correct action based on your prompt.

Just click accept to generate a response. Since no posts reached 100 points (a top post criterion), I tried another prompt to fetch the latest 10 posts.

Congrats! 🎉 That's how simple it really is to use MCP servers of your choice.

Hacker News was a simple case. For a service like GitHub, which requires a personal access token, you will need a more detailed prompt, such as "create a new repo named composio mcp and add a README with brief info about MCP servers".

Now that we know how to integrate MCP servers into Cursor, let's explore some real-world examples in the next section.

3. Practical use cases with examples.

You can build lots of innovative things with these, so let’s explore a few that stand out. Some of them come with source code (GitHub repository).

✅ Blender MCP

This connects Blender to Claude AI through MCP, allowing Claude to directly interact with and control Blender. This integration enables prompt-assisted 3D modeling, scene creation and manipulation.

For instance, Siddharth (the repository owner) generated a 3D scene of a low-poly dragon guarding treasure without modeling it himself.

You can check the GitHub repository, which has 6.9k stars.

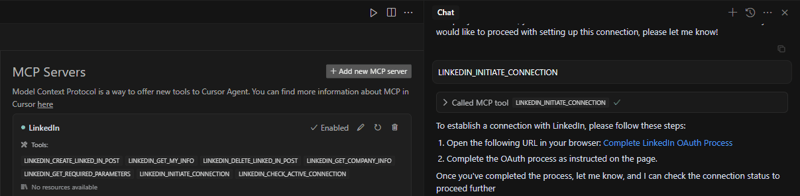

✅ LinkedIn MCP server

After adding this to Cursor's MCP server list, you will see a lot of actions. However, some of them require you to initiate a connection first.

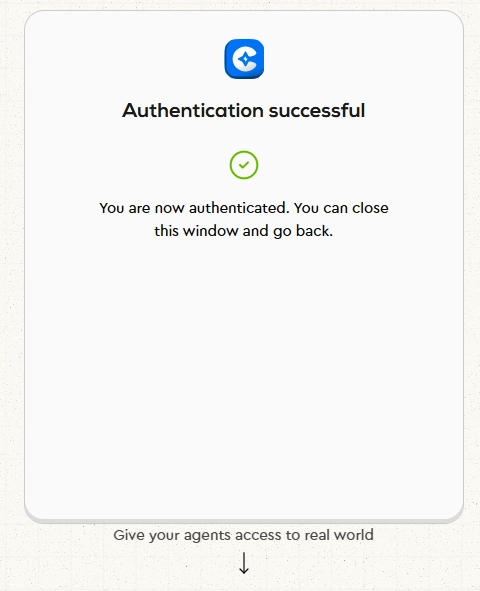

You can authenticate by simply copying the OAuth URL into your browser.

You will get a confirmation message once it's done.

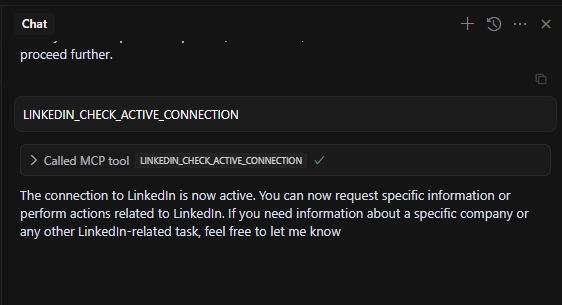

You can also verify this through the server's actions. As you can see, there is an active connection.

I wouldn't normally recommend using it with your official accounts, because you can never be too careful. I've also tried MCP servers for DocuSign (eSignature), Cal.com (connecting to your calendar), Notion (fetching page contents) and many more.

Most of these are simple. In the next example, we will create something much more complex.

✅ Create a web scraper using Crawl4AI.

With so many servers already available, you might think there's no point in building something new. Let's discuss a potentially complex idea.

Imagine you're new to MCP and want to give Cursor better context on the Python SDK docs from the official MCP repo.

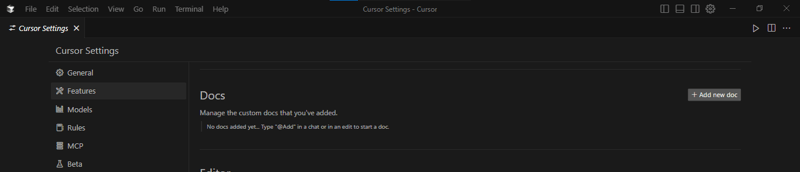

In Cursor Settings, you will find Features in the sidebar. Under it, you will find the Docs section.

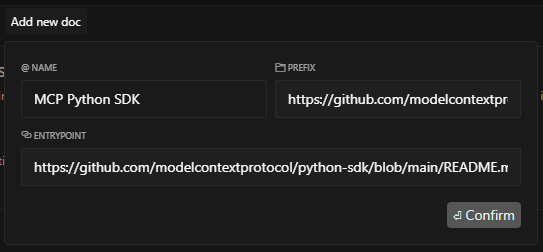

Then add the link to the README and give it an appropriate name.

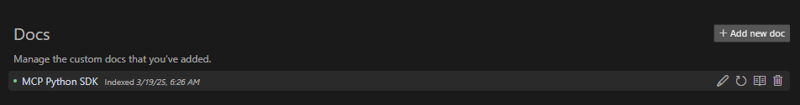

It's successfully added.

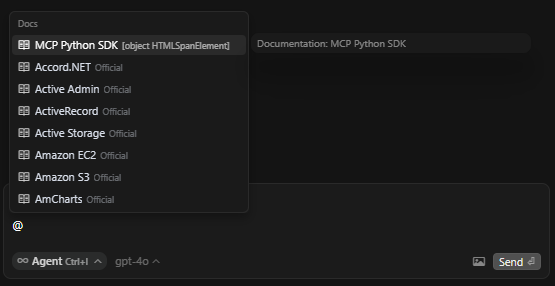

Now, you can add that doc to improve context in the agent chat.

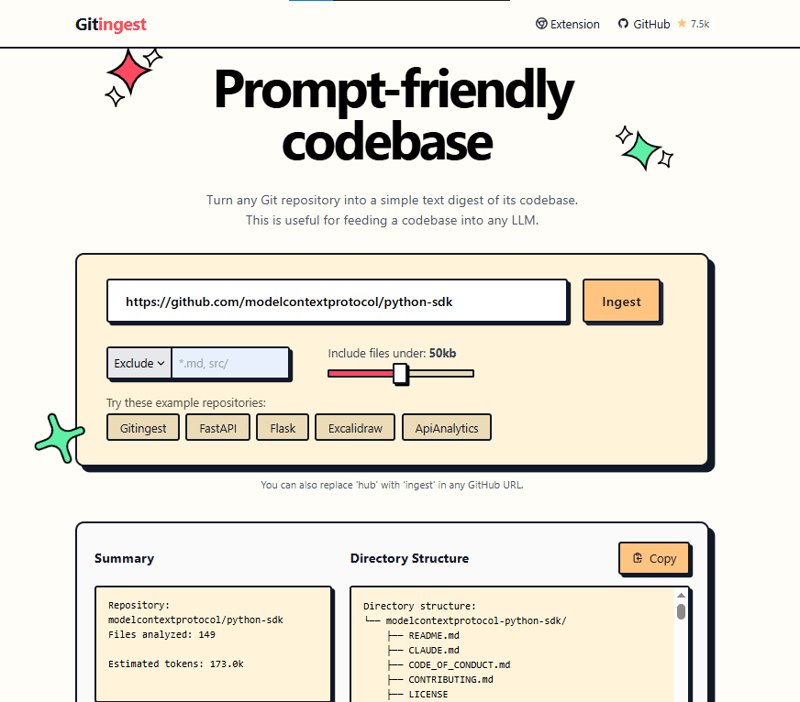

If you want to take it a step further and make the docs even more accessible, there is a nice tool called Gitingest that converts a repository into LLM-readable text. Simply replace "hub" with "ingest" in any GitHub URL and it will work.

Now you can use Crawl4AI, an open-source, LLM-friendly web crawler and scraper. It has 33k+ stars on GitHub.
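The "hub" to "ingest" swap is easy to script. Here is a small Python sketch (the helper name is hypothetical) that rewrites only the host, so the repository path is kept as-is.

```python
from urllib.parse import urlparse, urlunparse

def to_gitingest(github_url: str) -> str:
    """Swap the github.com host for gitingest.com, keeping the rest of the URL."""
    parts = urlparse(github_url)
    if parts.netloc not in ("github.com", "www.github.com"):
        raise ValueError(f"not a GitHub URL: {github_url}")
    return urlunparse(parts._replace(netloc="gitingest.com"))

print(to_gitingest("https://github.com/modelcontextprotocol/python-sdk"))
# https://gitingest.com/modelcontextprotocol/python-sdk
```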

Before proceeding, make sure you have installed the MCP Python SDK with `pip install "mcp[cli]"` (the `[cli]` extra provides the `mcp` command used later).

You can install the crawl4ai package using the following command.

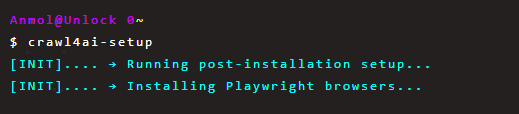

```bash
pip install -U crawl4ai

# Run post-installation setup
crawl4ai-setup
```

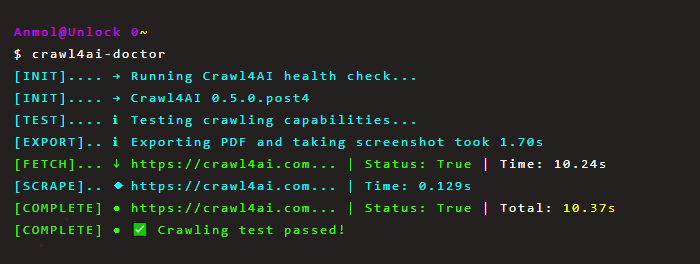

You can verify your installation using crawl4ai-doctor.

Create a server.py file and add the crawler code from the docs:

```python
import asyncio

from crawl4ai import AsyncWebCrawler

async def main():
    # Open a crawler session and fetch a single page
    async with AsyncWebCrawler() as crawler:
        result = await crawler.arun(
            url="https://www.nbcnews.com/business",
        )
        # The page content, converted to Markdown
        print(result.markdown)

if __name__ == "__main__":
    asyncio.run(main())
```

Note that this snippet is a plain crawler script; to use it from an MCP host, expose it as a tool (for example, with the Python SDK's `FastMCP` class). You can then install the server in Claude Desktop and interact with it right away by running `mcp install server.py`. You can read the docs on how to integrate it.

Crawl4AI also offers features like stealth mode and tag-based content extraction, which you can find in its README.

If you run it with a prompt like "crawl http://cursor.com and give me all of its sections", you will notice that it returns only the contents, not the actual structure of the sections.
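One way to recover the page's structure is to post-process the Markdown the crawler returns (the `result.markdown` string in the example above) and pull out its headings. Here is a stdlib Python sketch; it assumes the crawler emitted ATX-style `#` headings, which depends on the page's HTML, and the function name is hypothetical.

```python
import re
from typing import List, Tuple

# An ATX heading: 1-6 '#' characters, whitespace, the title, then an optional closing run of #s.
HEADING = re.compile(r"^(#{1,6})\s+(.+?)(?:\s+#+)?\s*$")
# Code fence openers, written as "`" * 3 so this snippet can itself live inside Markdown.
FENCE_MARKERS = ("`" * 3, "~" * 3)

def outline(markdown: str) -> List[Tuple[int, str]]:
    """Return (level, title) for each heading, skipping fenced code blocks."""
    sections: List[Tuple[int, str]] = []
    in_code = False
    for line in markdown.splitlines():
        if line.lstrip().startswith(FENCE_MARKERS):
            in_code = not in_code  # toggle on each opening/closing fence
            continue
        match = HEADING.match(line)
        if match and not in_code:
            sections.append((len(match.group(1)), match.group(2)))
    return sections

page = "# Cursor\nIntro text\n## Features\nMore text\n## Pricing\n"
for level, title in outline(page):
    print("  " * (level - 1) + title)
```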

There is a new repository for this use case and Hossein on X shared a similar project. Check them out!

You can adjust it based on the Crawl4AI docs. And since Cursor now has the Python SDK docs as additional context, this can help you clone website landing pages directly, with much better accuracy.

That's how deep this workflow can go.

Here are some nice resources if you're planning to build MCP:

- Popular MCP servers directory by the official team (20k stars on GitHub).

- Cursor directory of 1800+ MCP servers.

- Those MCP totally 10x my Cursor workflow - YouTube video with practical use cases.

It's safe to say that using MCP servers in Cursor isn't that complex.

One single conversation with an Agent can help you automate complex workflows.

Let me know if you have tried it before or built something awesome.

Have a great day! Until next time :)

You can check my work at anmolbaranwal.com. Thank you for reading! 🥰
