AI agents are no longer just a buzzword—they're the hottest trend of 2025! Everyone on Twitter, Reddit, and LinkedIn is talking about them, and new tools seem to emerge every week. With so many options, it’s easy to feel overwhelmed.

The recent launch of OpenAI's Agents SDK has added another compelling choice to this ecosystem. But does it truly stand out?

In this blog, I'll break down the OpenAI Agents SDK and compare it with LangGraph, CrewAI, and AutoGen to help you choose the best framework for your needs.

So, let’s begin!

TL;DR: Key Takeaways

- OpenAI Agents SDK offers a lightweight, production-ready framework with minimal abstractions, making it accessible for developers new to agent development. I was able to build an agent in just 10 minutes of code tinkering.

- It has three core primitives: Agents, Handoffs, and Guardrails, providing a balance between simplicity and power.

- The Agents SDK excels at tracing and visualization, which makes debugging and monitoring agent workflows straightforward.

- LangGraph offers superior state management and graph-based workflows, ideal for complex, cyclical agent interactions and for production, but it has a steeper learning curve.

- CrewAI provides the most intuitive approach to role-based multi-agent systems. It is best suited to crew-based architectures, and its deployment and scalability story is still being worked on.

- AutoGen offers strong support for diverse conversation patterns and agent topologies. Its learning curve is moderate, and the docs could be clearer.

- Your choice should depend on your specific use case:
  - OpenAI Agents SDK: simplicity and production
  - LangGraph: complex workflows
  - CrewAI: intuitive, crew-based multi-agent systems
  - AutoGen: flexible conversation patterns

Deep Dive into OpenAI Agents SDK

OpenAI's Agents SDK is a lightweight yet powerful framework for building agentic AI applications.

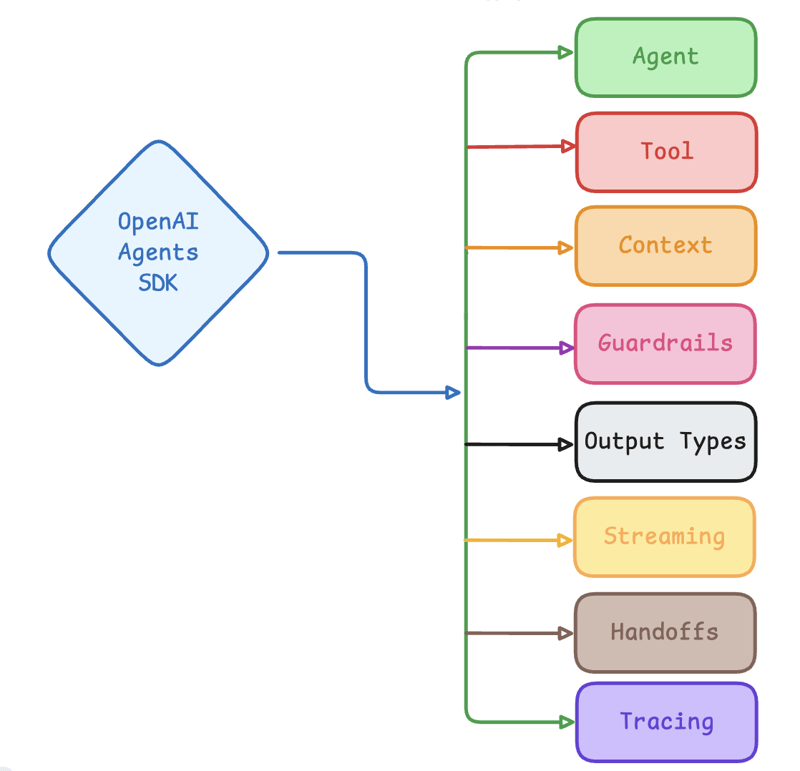

It simplifies the creation of multi-agent systems by providing primitives such as:

- Agents: LLMs equipped with instructions and tools.

- Handoffs: Let an agent delegate a specific task to another agent, which is useful in multi-agent settings.

- Guardrails: Safety checks that validate the inputs to agents, adding a layer of security.
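To make these three primitives concrete without calling a model, here is a toy, plain-Python model of how they fit together. It is a sketch, not the SDK's API: `ToyAgent`, `run`, the `keywords` routing hint, and `no_secrets` are all hypothetical, and simple keyword matching stands in for the decision a real LLM would make. In the actual SDK you pass other agents via `Agent(..., handoffs=[...])` and guardrails via `input_guardrails=[...]`, and a tripped guardrail raises an exception rather than returning a message.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical guardrail type: returns a reason to block the input, or None to allow it.
Guardrail = Callable[[str], Optional[str]]


@dataclass
class ToyAgent:
    name: str
    instructions: str
    # Agents this one may delegate to (a stand-in for SDK handoffs).
    handoffs: list["ToyAgent"] = field(default_factory=list)
    # Checks that run on the user input before the agent does any work.
    input_guardrails: list[Guardrail] = field(default_factory=list)
    # Hypothetical routing hint; a real agent lets the LLM decide.
    keywords: tuple[str, ...] = ()


def run(agent: ToyAgent, user_input: str) -> str:
    # 1. Guardrails: stop early if any check fails.
    for check in agent.input_guardrails:
        problem = check(user_input)
        if problem is not None:
            return f"[{agent.name}] blocked: {problem}"
    # 2. Handoffs: pass the whole request to a matching specialist.
    for specialist in agent.handoffs:
        if any(word in user_input.lower() for word in specialist.keywords):
            return run(specialist, user_input)
    # 3. Otherwise the agent answers itself (a real agent would call the model here).
    return f"[{agent.name}] handled: {user_input}"


def no_secrets(text: str) -> Optional[str]:
    return "input looks like it contains an API key" if "sk-" in text else None


poet = ToyAgent("Poet", "Write short poems.", keywords=("haiku", "poem"))
triage = ToyAgent("Triage", "Route requests.", handoffs=[poet], input_guardrails=[no_secrets])

print(run(triage, "Write a haiku on python."))  # [Poet] handled: Write a haiku on python.
print(run(triage, "My key is sk-123"))          # [Triage] blocked: input looks like it contains an API key
print(run(triage, "What time is it?"))          # [Triage] handled: What time is it?
```

The point of the toy is the ordering: guardrails get a chance to stop a request before any work happens, and a handoff passes control of the whole request to a more specialized agent.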

Designed for flexibility and scalability, the SDK allows developers to orchestrate workflows, integrate tools, and debug processes seamlessly.

One of its standout points is that the OpenAI Agents SDK doesn't have a steep learning curve, which makes building complex systems or task automations a breeze.

According to OpenAI, the SDK has two driving design principles:

- Enough features to be worth using, but few enough primitives to make it quick to learn.

- Works great out of the box, but you can customize exactly what happens.

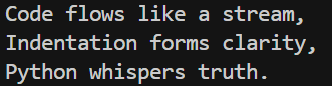

Let’s explore how simple it is to get started by creating a hello world program - Haiku on Python!

Haiku On Python - hello world program!

💡 To keep things organized, create a new environment and activate it.

Install the package using:

pip install openai-agents

Create two files, `agent.py` and `.env`, in the current working directory, and paste the following code into `agent.py`. (Don't name the script `agents.py`: it would shadow the `agents` package it imports.)

```python
from agents import Agent, Runner

agent = Agent(name="Assistant", instructions="You are a helpful assistant")

result = Runner.run_sync(agent, "Write a haiku on python.")

print(result.final_output)
```

Code explanation:

- Imports the `Agent` primitive and the `Runner` class.

- Creates an agent with a name and instructions (roughly the agent's system prompt).

- Calls `Runner.run_sync` to execute the task synchronously, i.e., asks the agent to "write a haiku on python".

- Prints the result's `final_output`.

In the `.env` file, add `OPENAI_API_KEY="your_api_key"`, then run the program with:

python agent.py
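One catch worth knowing: as far as I can tell, neither the OpenAI client nor the Agents SDK reads `.env` on its own; the client looks for `OPENAI_API_KEY` in the process environment. So either export the variable in your shell or load the file at the top of your script. The common choice is the `python-dotenv` package (`from dotenv import load_dotenv`, then `load_dotenv()`). If you'd rather avoid a dependency, the stdlib sketch below does the same for simple `KEY=value` lines; `load_env_file` is a hypothetical helper and skips dotenv features such as variable expansion and multi-line values.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Hypothetical minimal .env loader: KEY=value lines, '#' comments, optional quotes."""
    loaded: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed lines
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip().strip('"').strip("'")
        # Variables already exported in the shell win over the file.
        os.environ.setdefault(key, value)
        loaded[key] = value  # record what the file contained
    return loaded


if __name__ == "__main__":
    found = load_env_file()
    print("OPENAI_API_KEY set:", "OPENAI_API_KEY" in os.environ, "| keys in .env:", sorted(found))
```

Call `load_env_file()` before creating the agent; anything you've already exported in the shell takes precedence over the file.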

Output:

Amazing, we just made an AI agent in 4 lines of code. Pretty wild, right?

But there is more to the OpenAI Agents SDK's feature set, and to why it can be a game changer. Let's look at a few of its features.

Key Features of OpenAI Agents SDK

OpenAI Agents SDK features (image credit: adasci.org)

There are plenty of features left to explore, but here are the key ones:

- Agent Loop: A built-in mechanism that calls tools, sends the results back to the language model, and keeps looping until the task is complete. (feels like Cursor)

- Python-First Design: Uses native Python features for orchestration rather than introducing new abstractions, which reduces the learning curve. (fast and easy to get started)

- Handoffs: Enable coordination and delegation between multiple agents, allowing specialized task handling. (co-working)

- Guardrails: Provide input validation and safety checks that run in parallel with your agents and can stop execution early if a check fails. (safety net)

- Function Tools: Turn any Python function into a tool with automatic schema generation and validation. (function calling automation)

- Tracing: Built-in visualization tools for debugging and monitoring workflows, plus integration with OpenAI's evaluation and fine-tuning capabilities. (visualization support)
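The agent loop and function tools do most of the work behind the scenes, so here is a plain-Python imitation of both. `function_schema` builds a JSON-schema-like description from a function's signature and docstring (roughly what `@function_tool` automates), and `agent_loop` keeps calling a model until it stops requesting tools. The "model" is a scripted fake (`fake_model`), and every name here is hypothetical; the real `Runner` uses an actual LLM, far more validation, and its own turn limit.

```python
import inspect
import json
from typing import Callable

# Map Python annotations to JSON-schema type names (a deliberately small subset).
PY_TO_JSON = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_schema(fn: Callable) -> dict:
    """Describe a function's parameters the way tool-calling APIs expect."""
    params = inspect.signature(fn).parameters
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {
            "type": "object",
            "properties": {n: {"type": PY_TO_JSON.get(p.annotation, "string")} for n, p in params.items()},
            "required": [n for n, p in params.items() if p.default is inspect.Parameter.empty],
        },
    }


def get_weather(city: str, celsius: bool = True) -> str:
    """Return a canned weather report for a city."""
    return f"22 degrees in {city}" if celsius else f"72 degrees in {city}"


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: first requests a tool, then answers."""
    if not any(m["role"] == "tool" for m in messages):
        return {"tool_call": {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})}}
    return {"content": f"Final answer: {messages[-1]['content']}"}


def agent_loop(user_input: str, tools: list[Callable], max_turns: int = 5) -> str:
    registry = {fn.__name__: fn for fn in tools}
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_turns):
        reply = fake_model(messages)
        if "tool_call" not in reply:  # no tool requested: the model is done
            return reply["content"]
        call = reply["tool_call"]
        result = registry[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_turns")


print(json.dumps(function_schema(get_weather)["parameters"]))
print(agent_loop("What's the weather in Paris?", [get_weather]))  # Final answer: 22 degrees in Paris
```

The turn cap matters: without it, a model that keeps requesting tools would loop forever.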

In short, the Agents SDK offers several standout features that make it a strong choice for AI projects. But does it hold up on more complex projects?

Building with OpenAI Agents SDK & Composio

We will combine Composio's tool integrations with the OpenAI Agents SDK to build an agent. But first,

A word on Composio

*Composio is an agentic integration platform that enables LLMs/AI agents to interact with external services via function calling. Its rich set of tools allows seamless integrations and reduces the burden on developers.*
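"Via function calling" means the model returns structured tool calls and something on your side executes them. The sketch below shows that dispatch step on a Chat Completions-style response. The response shape (`choices[0].message.tool_calls`, each with a `function.name` and JSON-string `function.arguments`) follows the OpenAI format, trimmed to plain dicts; `star_repo`, `HANDLERS`, and this `handle_tool_calls` are hypothetical stand-ins for what a toolset like Composio's does against real services.

```python
import json

# A Chat Completions response trimmed to the tool-call fields
# (the real client returns objects; plain dicts keep this dependency-free).
response = {
    "choices": [{
        "message": {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {
                    "name": "star_repo",
                    "arguments": json.dumps({"owner": "octocat", "repo": "hello-world"}),
                },
            }],
        }
    }]
}


def star_repo(owner: str, repo: str) -> dict:
    # Hypothetical local handler; a real integration would call the GitHub API here.
    return {"starred": f"{owner}/{repo}"}


HANDLERS = {"star_repo": star_repo}


def handle_tool_calls(resp: dict) -> list[dict]:
    """Execute every tool call in the first choice and collect the results."""
    results = []
    for call in resp["choices"][0]["message"].get("tool_calls") or []:
        fn = call["function"]
        handler = HANDLERS[fn["name"]]
        results.append(handler(**json.loads(fn["arguments"])))
    return results


print(handle_tool_calls(response))  # [{'starred': 'octocat/hello-world'}]
```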

Setting up environment

We will use a virtual environment to keep things isolated. I am using Windows, so some commands might differ for other OSs.

Start by creating a new folder and opening it in your editor of choice. I am going with Cursor.

Next, open the terminal and create a virtual environment with:

python -m venv env_name

Replace env_name with any meaningful name.

Now activate the environment. On Windows:

composio-learn\Scripts\activate

In this case, composio-learn is my environment name. On Linux or macOS, use `source composio-learn/bin/activate` instead.
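A quick, OS-independent way to confirm the environment is actually active is to compare `sys.prefix` with `sys.base_prefix`: inside a venv they differ. This is a small stdlib check of my own, not part of Composio's setup.

```python
import sys


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment folder,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("venv active:", in_virtualenv(), "| interpreter:", sys.executable)
```

Run it with `python` from the same terminal; if it reports `False`, the `pip install` in the next step would go into your global Python instead.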

Finally, let’s add all the necessary libraries with:

pip install composio-openai openai-agents

Next, we need to set up Composio.

Setup Composio

Follow these steps to set up Composio:

Head to composio.dev and sign in (or sign up). Signing in with GitHub is recommended.

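Next, install uv, an alternative to pip for package management. We need it because the Composio CLI commands below are run through uvx (on Windows, the uv docs provide a PowerShell installer instead of this curl script):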

curl -LsSf https://astral.sh/uv/install.sh | sh

Now log in to Composio with:

uvx --from composio-core composio login

If everything goes well, a browser window opens with an access key, which you paste into the terminal. Once that's done, your API key is generated automatically in the backend.

To view the key, type:

uvx --from composio-core composio whoami

Once the API key is displayed, save it as an environment variable:

```bash
# create .env
echo "COMPOSIO_API_KEY=YOUR_API_KEY" >> .env

# set up the environment variable (this is what I did)
export COMPOSIO_API_KEY=YOUR_API_KEY
```

Replace YOUR_API_KEY with the key shown in your terminal. `export` is Bash syntax; in Windows PowerShell the equivalent is `$env:COMPOSIO_API_KEY="YOUR_API_KEY"`. If you get stuck, refer to the Composio Installation Guide.

Next, authenticate the CLI with GitHub:

uvx --from composio-core composio add github

Then follow the CLI instructions to authenticate and connect your GitHub account.

Once that's done, we're ready to start!

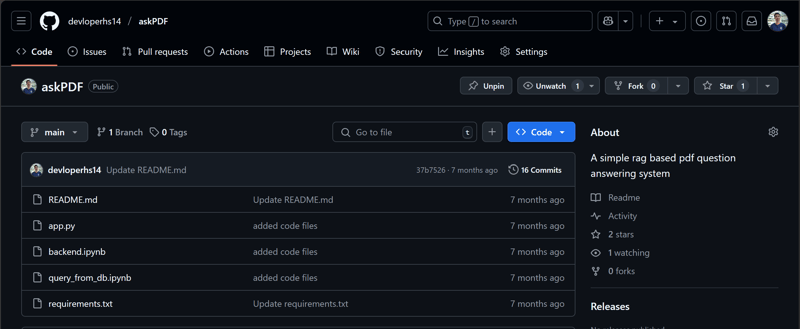

Writing Code for Starred GitHub Agent

To keep things simple, we will test the agent on a single task: starring a GitHub repo that the user provides in the prompt. Here is the base code to get started:

```python
# github_agent.py
from __future__ import annotations

import asyncio
import os

from openai import AsyncOpenAI
from composio_openai import ComposioToolSet, Action
from agents import (
    Agent,
    Model,
    ModelProvider,
    OpenAIChatCompletionsModel,
    RunConfig,
    Runner,
    function_tool,
    set_tracing_disabled,
)

# Environment variables
BASE_URL = os.getenv("BASE_URL")  # API base URL, e.g. https://api.openai.com/v1
API_KEY = os.getenv("API_KEY")  # Your OpenAI API key
MODEL_NAME = "gpt-4o-mini"  # Hardcoded model name
COMPOSIO_API_KEY = os.getenv("COMPOSIO_API_KEY")  # Your Composio API key

if not BASE_URL or not API_KEY:
    raise ValueError(
        "Missing required environment variables. Please set BASE_URL and API_KEY."
    )

if not COMPOSIO_API_KEY:
    print("Warning: COMPOSIO_API_KEY environment variable not set. GitHub star functionality will not work.")

# Initialize the OpenAI client (used by the agent and inside the tool)
client = AsyncOpenAI(base_url=BASE_URL, api_key=API_KEY)

# Turn off the SDK's built-in tracing for this example
set_tracing_disabled(disabled=True)

# Initialize the Composio toolset with only the "star a repository" action
composio_toolset = ComposioToolSet(api_key=COMPOSIO_API_KEY or "YOUR_API_KEY")
github_tools = composio_toolset.get_tools(
    actions=[Action.GITHUB_STAR_A_REPOSITORY_FOR_THE_AUTHENTICATED_USER]
)


class CustomModelProvider(ModelProvider):
    """Routes every agent model request through our own client."""

    def get_model(self, model_name: str | None) -> Model:
        return OpenAIChatCompletionsModel(model=model_name or MODEL_NAME, openai_client=client)


CUSTOM_MODEL_PROVIDER = CustomModelProvider()


@function_tool
async def star_github_repo(repo_name: str) -> str:
    """
    Star a GitHub repository for the authenticated user.

    Args:
        repo_name: The name of the repository to star (format: owner/repo)

    Returns:
        A message indicating the result of the operation
    """
    try:
        # Basic sanity check on the owner/repo format
        if "/" not in repo_name:
            return f"Error: Repository name should be in the format 'owner/repo', got '{repo_name}'"

        # Ask the model to call the Composio GitHub tool
        response = await client.chat.completions.create(
            model=MODEL_NAME,
            tools=github_tools,
            messages=[
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": f"Star the repository {repo_name} on GitHub"},
            ],
        )

        # Let Composio execute the tool calls the model produced
        result = composio_toolset.handle_tool_calls(response)
        if "error" in str(result).lower():
            return f"Failed to star repository: {result}"
        return f"Successfully starred repository: {repo_name}"
    except Exception as e:
        return f"Error starring repository: {e}"


async def main():
    # Create an agent with the GitHub star function tool
    agent = Agent(
        name="GitHub Assistant",
        instructions="You are a helpful assistant that can star GitHub repositories.",
        tools=[star_github_repo],
    )

    # Test the GitHub star functionality
    result = await Runner.run(
        agent,
        "Star the repository https://github.com/<user_name>/<repo_name> on GitHub",
        run_config=RunConfig(model_provider=CUSTOM_MODEL_PROVIDER),
    )

    print("GitHub star result:")
    print(result.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```

Intimidating, right? Let’s break it down!

The script:

- Imports the necessary modules: the OpenAI client, Composio's toolset, and the Agents SDK components.

- Loads the environment variables (`BASE_URL`, `API_KEY`, `COMPOSIO_API_KEY`), validates them, and sets the model name (`MODEL_NAME`).

- Initializes the OpenAI client and disables the SDK's built-in tracing.

- Sets up the Composio toolset with the GitHub "star a repository" action (our use case).

- Defines a custom model provider so that the agent's model requests go through our client.

- Implements `star_github_repo(repo_name: str)`, a function tool that stars a GitHub repository using OpenAI and Composio.

- Creates an AI agent (`GitHub Assistant`) with instructions to star repositories.

- Runs the agent with a test prompt and prints the result.

- Uses `asyncio.run(main())` to execute the async entry point.

Now let’s see the agent in action

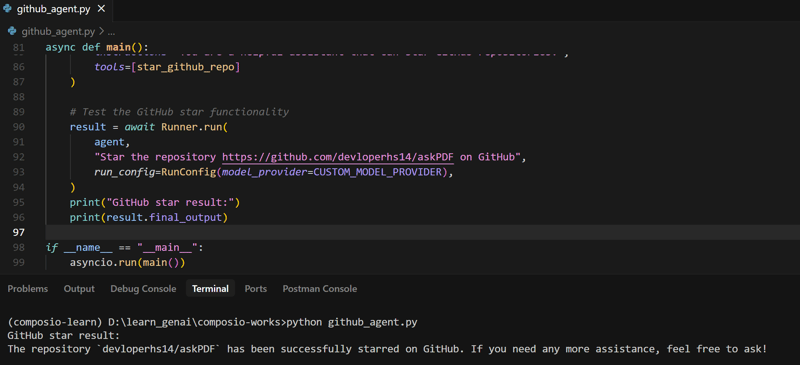

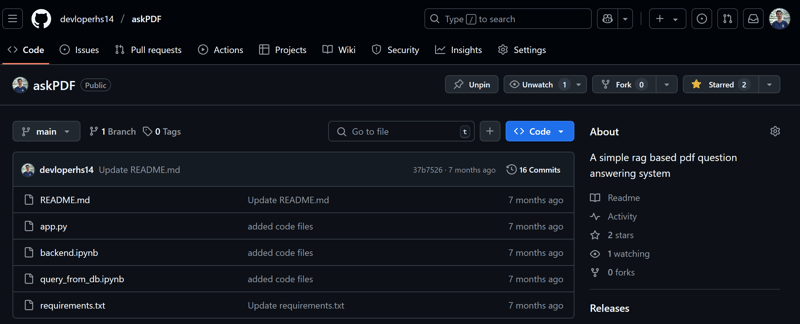

Results - Run the script

Make sure to replace "https://github.com/<user_name>/<repo_name>" in the test section with the URL of an actual public GitHub repo. I changed mine too.
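Note that the test prompt passes a full URL, while `star_github_repo` documents an `owner/repo` argument. The model usually converts between the two, but if you'd rather not rely on that, a small normalizer can run inside the tool before the format check. `to_owner_repo` is a hypothetical helper of my own, not part of Composio or the Agents SDK.

```python
from urllib.parse import urlparse


def to_owner_repo(value: str) -> str:
    """Turn a GitHub URL or 'owner/repo(.git)' string into 'owner/repo'."""
    value = value.strip()
    if value.startswith(("http://", "https://")):
        path = urlparse(value).path  # e.g. '/owner/repo.git'
    elif value.startswith("github.com/"):
        path = value[len("github.com/"):]
    else:
        path = value
    parts = [p for p in path.strip("/").split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"Expected 'owner/repo' or a GitHub URL, got {value!r}")
    owner, repo = parts[0], parts[1]  # ignore extra segments like /tree/main
    if repo.endswith(".git"):
        repo = repo[: -len(".git")]
    return f"{owner}/{repo}"


print(to_owner_repo("https://github.com/octocat/Hello-World"))  # octocat/Hello-World
print(to_owner_repo("octocat/Hello-World.git"))                 # octocat/Hello-World
```

Inside the tool, calling `repo_name = to_owner_repo(repo_name)` first would make the existing `'/' not in repo_name` check stricter in practice, since anything that can't be reduced to two path segments raises instead.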

Now head to the terminal and type:

python github_agent.py

and see the magic happen!

Before running the agent

Agent Running…

After Running The Agent

If you don't see your repo starred 🌟, refresh your browser.

Now that we have hands-on experience building with the OpenAI Agents SDK, let's look at how it compares against its competitors (the main section of this blog).

Comparing Agent Frameworks

This section compares the OpenAI Agents SDK, LangGraph, AutoGen, and CrewAI across the following criteria:

- Getting Started (hello world and docs)

- Building Complex Agents

- Multi-Agent Support

- State Management

- Ecosystem and Support

For ease of understanding, I have included a table for most of the criteria.

So, let's start with the first comparison.

Getting Started Experience

| Framework | Learning Curve | Documentation Quality | Hello World Simplicity |
|---|---|---|---|
| OpenAI Agents SDK | Low | High, with clear examples | Very simple (few lines of code) |
| LangGraph | Medium-High | Comprehensive but technical | More complex, requires understanding graph concepts |
| CrewAI | Low-Medium | Practical with many examples | Simple with intuitive concepts |
| AutoGen | Medium | Extensive with diverse examples | Moderately simple |

I tested out all of them and here is my experience:

- OpenAI Agents SDK: Super easy to start—just a few lines of code! The docs are well-written and not overwhelming. Enjoyed building with it.

- LangGraph: Tough to begin with. Had to learn about graphs and states just for a simple agent. Docs are technical, not beginner-friendly—but they do offer a free course, which helped.

- CrewAI: The second easiest. Requires understanding Tools and Roles, but the docs are well-structured and beginner-friendly.

- AutoGen: A bit tricky. Needs manual setup and works around chat conversations. Confusing versioning in the docs made things harder.

Building Complex Agents

| Framework | Customization | Tool Integration | Complexity Management |
|---|---|---|---|
| OpenAI Agents SDK | High with minimal abstractions | Seamless with function tools | Simple primitives for complex workflows |
| LangGraph | Very high with graph structures | Strong via LangChain integration | Excellent for complex, cyclical workflows |
| CrewAI | High with role-based design | Good with custom tools | Natural for team-based workflows |
| AutoGen | High with conversable agents | Strong with diverse tools | Good for conversation-based workflows |

I tested out all of them for building complex agents, and here’s how they compare:

- OpenAI Agents SDK: Highly customizable without adding unnecessary layers. Tool integration is seamless, and it uses simple primitives to build complex workflows.

- LangGraph: Offers deep customization through graph structures. Best suited for cyclical workflows, but the learning curve is steep. Strong LangChain integration is a plus.

- CrewAI: Uses a role-based approach, making it easy to design multi-agent systems. Tool integration is good, and it naturally fits team-based workflows.

- AutoGen: Designed for conversation-based agents. Supports diverse tools, but its complexity lies in managing interactions rather than logic flow.
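To show what "role-based" means in practice, here is a toy, framework-free version of a sequential crew: each agent has a role and a goal, and each task's output becomes context for the next one. `Role`, `Job`, and `run_sequential` are hypothetical names; CrewAI's own `Agent`, `Task`, and `Crew` classes have richer signatures and call an LLM where this sketch just formats strings.

```python
from dataclasses import dataclass


@dataclass
class Role:
    role: str
    goal: str

    def work(self, task: str, context: str) -> str:
        # A real agent would prompt an LLM with its role, goal, task, and context.
        prefix = f"{context} -> " if context else ""
        return f"{prefix}{self.role}: {task}"


@dataclass
class Job:
    description: str
    assignee: Role


def run_sequential(jobs: list[Job]) -> str:
    """Run jobs in order, feeding each output into the next job as context."""
    context = ""
    for job in jobs:
        context = job.assignee.work(job.description, context)
    return context


researcher = Role("Researcher", "Find facts")
writer = Role("Writer", "Draft a post")
print(run_sequential([Job("collect sources", researcher), Job("write summary", writer)]))
# Researcher: collect sources -> Writer: write summary
```

The appeal of this model is that the workflow reads like a team plan; the trade-off is that anything beyond a fixed order (loops, branching) needs extra machinery.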

Multi-Agent Support

| Framework | Agent Communication | Orchestration | Parallel Execution |
|---|---|---|---|
| OpenAI Agents SDK | Strong with handoffs | Simple, Python-based | Limited built-in support |
| LangGraph | Excellent with graph edges | Sophisticated with state transitions | Good support via graph structure |
| CrewAI | Intuitive with crew metaphor | Natural with role-based design | Supported with task management |
| AutoGen | Excellent with conversation patterns | Flexible with agent topologies | Supported with agent networks |

I tested all of them for multi-agent support, and here’s how they stack up:

- OpenAI Agents SDK: Strong in agent handoffs but lacks built-in parallel execution. Orchestration is simple and Python-based, making it easy to implement.

- LangGraph: Excels in communication via graph edges, allowing sophisticated state transitions. Its graph structure makes parallel execution smoother.

- CrewAI: Uses an intuitive "crew" metaphor, making agent communication natural. Orchestration is role-based, and task management helps with parallel execution.

- AutoGen: Best for conversation-driven agents. Supports flexible agent topologies and enables parallel execution through agent networks.
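Even without a built-in parallel primitive, independent agent runs can be overlapped with plain `asyncio`, because `Runner.run` is a coroutine (the GitHub example above awaits it). The sketch below uses a hypothetical `fake_agent_run` coroutine with a sleep standing in for model latency; with the real SDK you would pass `Runner.run(agent, prompt)` calls to `asyncio.gather` the same way.

```python
import asyncio
import time


async def fake_agent_run(name: str, prompt: str, latency: float) -> str:
    # Hypothetical stand-in for `await Runner.run(agent, prompt)`;
    # the sleep mimics waiting on a model call.
    await asyncio.sleep(latency)
    return f"{name} answered: {prompt}"


async def run_in_parallel() -> list[str]:
    start = time.perf_counter()
    # gather() runs the coroutines concurrently and returns results in input order.
    results = await asyncio.gather(
        fake_agent_run("Translator", "translate 'hello'", 0.2),
        fake_agent_run("Summarizer", "summarize the doc", 0.2),
        fake_agent_run("Critic", "review the draft", 0.2),
    )
    # The sleeps overlap, so this takes roughly 0.2s rather than 0.6s.
    print(f"3 runs finished in {time.perf_counter() - start:.2f}s")
    return results


if __name__ == "__main__":
    for line in asyncio.run(run_in_parallel()):
        print(line)
```

This only helps when the runs don't depend on each other; if one agent needs another's output, a handoff or sequential call is still the right tool.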

State Management

| Framework | State Persistence | State Transitions | Debugging |
|---|---|---|---|
| OpenAI Agents SDK | Good with built-in tracing | Simple, function-based | Excellent with visualization tools |
| LangGraph | Excellent with graph state | Sophisticated with conditional edges | Good with LangSmith integration |
| CrewAI | Basic with task outputs | Simple with sequential/parallel processes | Limited built-in tools |
| AutoGen | Good with agent memory | Flexible with conversation patterns | Good with conversation tracking |

State management refers to tracking the system's condition at any moment—an essential part of system design and a key pillar of any product.

I tested it with long conversations, multiple interactions, and mid-session debugging. Here’s what I found:

- OpenAI Agents SDK: State persistence is solid with built-in tracing. Simple function-based state transitions make it easy to work with, and debugging is excellent thanks to visualization tools.

- LangGraph: Excels in managing state through graph structures. Transitions are sophisticated, leveraging conditional edges, and debugging is well-supported via LangSmith integration.

- CrewAI: Handles basic state persistence through task outputs. Transitions are straightforward with sequential and parallel processes, but debugging options are limited.

- AutoGen: Maintains agent memory well, making it suitable for conversation-driven workflows. State transitions are flexible, and debugging is supported with conversation tracking.
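"Graph state" and "conditional edges" can sound abstract, so here is a framework-free toy of the idea: nodes are functions that update a shared state dict, a conditional edge decides where to go next (including looping back), and a checkpoint is saved after every step so you could inspect or restart from an earlier point, which is the essence of "time travel" debugging. Everything here is hypothetical and much simpler than LangGraph's `StateGraph` API.

```python
import copy
from typing import Callable

State = dict


def draft(state: State) -> State:
    state["attempts"] += 1
    state["text"] = f"draft v{state['attempts']}"
    return state


def review(state: State) -> State:
    # Pretend the reviewer only approves the third draft.
    state["approved"] = state["attempts"] >= 3
    return state


def after_review(state: State) -> str:
    # Conditional edge: loop back to drafting until approved.
    return "END" if state["approved"] else "draft"


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",  # fixed edge
    "review": after_review,       # conditional edge
}


def run_graph(start: str, state: State, max_steps: int = 20) -> tuple[State, list[tuple[str, State]]]:
    checkpoints: list[tuple[str, State]] = []
    node = start
    for _ in range(max_steps):
        state = NODES[node](state)
        checkpoints.append((node, copy.deepcopy(state)))  # snapshot after each step
        node = EDGES[node](state)
        if node == "END":
            return state, checkpoints
    raise RuntimeError("graph did not reach END")


final, history = run_graph("draft", {"attempts": 0, "text": "", "approved": False})
print(final["text"], "| steps:", [name for name, _ in history])
# draft v3 | steps: ['draft', 'review', 'draft', 'review', 'draft', 'review']
```

Each entry in `history` is a full copy of the state at that step, so resuming from, say, `history[1][1]` is just a matter of calling `run_graph` again with that snapshot.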

Ecosystem and Support

| Framework | Community Size | Integration Options | Production Readiness |
|---|---|---|---|
| OpenAI Agents SDK | Growing (new) | Strong with OpenAI tools | High (designed for production) |
| LangGraph | Large (part of LangChain) | Excellent with LangChain ecosystem | Medium-High (designed for production) |
| CrewAI | Large and growing | Good with Python ecosystem | Medium |
| AutoGen | Large (Microsoft-backed) | Good with diverse tools | Medium-High |

A strong ecosystem and support help developers fix issues and stay updated.

I often get stuck on small things—thankfully, communities exist! A good ecosystem is always a plus.

Let’s see how these frameworks compare:

- OpenAI Agents SDK: Still new but growing fast. Strong integration with OpenAI tools and built for production use.

- LangGraph: Benefits from LangChain’s large ecosystem. Excellent integration within that space, making it a solid choice for complex workflows.

- CrewAI: Has a growing community and fits well into the Python ecosystem. Suitable for production (only crew-based workflows) but still maturing.

- AutoGen: Backed by Microsoft, with a large community and strong tool integration. Production readiness is solid but not yet at the highest level.

Overall

Each framework has its quirks, so here is an overall comparison in table form:

| Feature | OpenAI Agents SDK | LangGraph | CrewAI | AutoGen |
|---|---|---|---|---|
| Learning Curve | Lightweight with minimal abstractions, quick to learn | Steepest, requires designing agentic architecture | Moderate, requires understanding of agents and tasks | Fastest, easy to get started |
| Design Flexibility | Flexible with customizable agents, tools, and workflows | Most flexible, supports any agentic architecture | More flexible than AutoGen, primarily task-based | Least flexible, based on actor framework |
| Integrations | Compatible with any model supporting the Chat Completions API format | Integrates with Python, JavaScript, and LangChain | Direct LangChain integration, supports monitoring | Good LLM integrations, strong with Microsoft |
| Scalability & Deployability | Designed for production-ready applications with built-in tracing and debugging | Highly scalable; runtime inspired by Pregel and Apache Beam | More scalable than AutoGen, supports async execution | Scalable with async messaging, shifting architecture |
| Documentation | Comprehensive with examples and tutorials | Improved, includes courses but still challenging | Excellent, structured with guides and blogs | Good, but discovery could be improved |
| Human-in-the-Loop Support | Configurable safety checks with guardrails for input and output validation | Very flexible, intervention at any point | Only at the end of a task | Limited (never, always, or at termination) |
| Streaming Support | Supported via `Runner.run_streamed` | First-class support for streaming | No streaming support | Supports streaming but setup is complex |
| Low-Code Interface | No dedicated low-code interface | LangGraph Studio (custom IDE, not truly low-code) | No official low-code UI (unofficial exists) | AutoGen Studio (open-source low-code tool) |
| Time Travel (Debugging) | Built-in tracing for visualizing and debugging agent execution | Full time travel support for exploring alternatives | Supports time travel from last crew run | No support |
| Language Support | Python | Python, JavaScript | Python only | Python, .NET |
| Memory Management | Not explicitly detailed | Requires explicit state management implementation | Built-in support for short-term, long-term, user memory | Not explicitly detailed |

💡Note

The OpenAI Agents SDK is a recent release, and some features may evolve as the platform matures. For the most current information, refer to the official documentation.

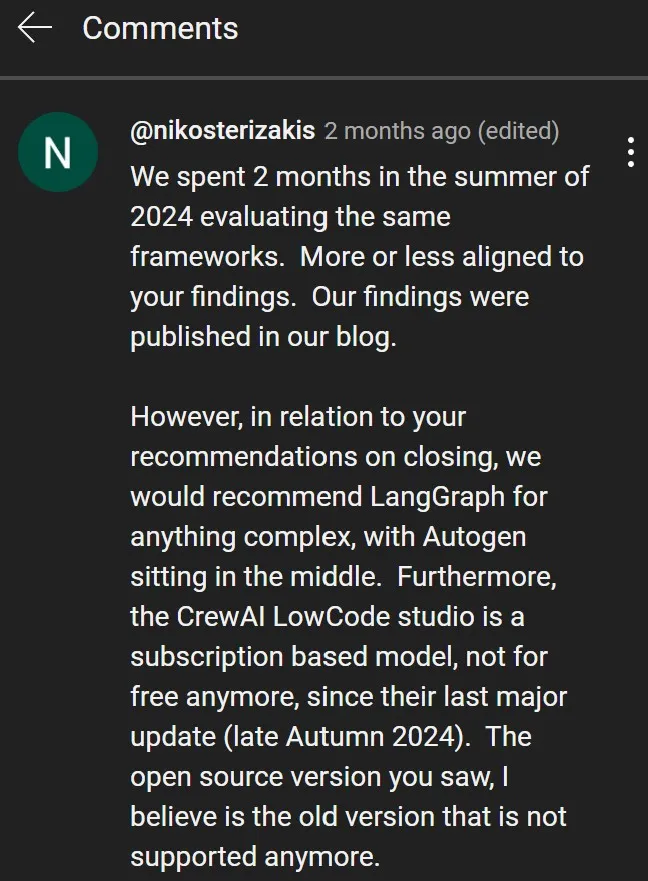

Also, I found this comment under one of the videos I was researching:

That said, I'd like to share my own experience as a final verdict.

Final Conclusion

I know my take might not align with yours, but after comparing all the frameworks across multiple dimensions, here's my recommendation:

Choose OpenAI Agents SDK if:

- You want a simple, production-ready framework with minimal learning curve.

- You're already using OpenAI models and want tight integration.

- You need strong tracing and debugging capabilities baked into the SDK (tracing is enabled by default).

- You prefer Python-native approaches with few abstractions.

- You're targeting production (it's my preferred option there).

Choose LangGraph if:

- You need complex, cyclical workflows with sophisticated state management.

- You're building applications with multiple interdependent agents.

- You're already familiar with the LangChain ecosystem.

- You need visual representation of your agent workflows.

- You're ready to learn (or already know) graph structures and the theory behind them, and you have prior coding experience.

Choose CrewAI if:

- You want the most intuitive approach to multi-agent systems.

- You prefer thinking in terms of roles, goals, and tasks.

- You need a framework that naturally models human team structures.

- You want a balance between simplicity and power.

- You're not targeting production.

Choose AutoGen if:

- You need flexible conversation patterns between agents.

- You're building applications with diverse conversational agents.

- You want strong support from Microsoft's research team.

- You need a framework that's been battle-tested in research settings.

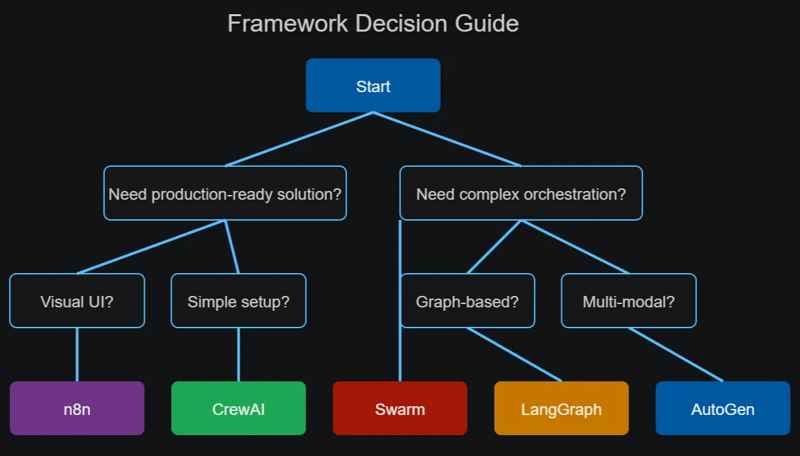

Ultimately, the best framework depends on your specific use case, team expertise, and project requirements. The flowchart above is a simple starting point (ignore Swarm and n8n).

The good news is that all four frameworks are actively developed, well-documented, and capable of building powerful AI agent systems.

As the field evolves, we can expect these frameworks to continue improving and potentially converging on common patterns and best practices.

What's your experience with these frameworks? Have you built something interesting with any of them? Share your thoughts in the comments below!


Read Formatted Version at: openai-agents-sdk-vs-langgraph-vs-...