Discover how Undetected ChromeDriver helps bypass anti-bot systems for web scraping, along with step-by-step guidance, advanced methods, and key limitations. Plus, learn about Scrapeless - a more robust alternative for professional scraping needs.

In this guide, you will learn:

- What Undetected ChromeDriver is and how it can be useful

- How it minimizes bot detection

- How to use it with Python for web scraping

- Advanced usage and methods

- Its key limitations and drawbacks

- Recommended alternative: Scrapeless

- Technical analysis of anti-bot detection mechanisms

Let's dive in!

What Is Undetected ChromeDriver?

Undetected ChromeDriver is a Python library that provides an optimized version of Selenium's ChromeDriver. This has been patched to limit detection by anti-bot services such as:

- Imperva

- DataDome

- Distil Networks

- and more ...

It can also help bypass certain Cloudflare protections, although that can be more challenging.

If you have ever used browser automation tools like Selenium, you know they let you control browsers programmatically. To make that possible, they configure browsers differently from regular user setups.

Anti-bot systems look for those differences, or "leaks," to identify automated browser bots. Undetected ChromeDriver patches Chrome drivers to minimize these telltale signs, reducing bot detection. This makes it ideal for web scraping sites protected by anti-scraping measures!

How does Undetected ChromeDriver work?

Undetected ChromeDriver reduces detection from Cloudflare, Imperva, DataDome, and similar solutions by employing the following techniques:

- Renaming Selenium variables to mimic those used by real browsers

- Using legitimate, real-world User-Agent strings to avoid detection

- Allowing the user to simulate natural human interaction

- Managing cookies and sessions properly while navigating websites

- Enabling the use of proxies to bypass IP blocking and prevent rate limiting

These methods help the browser controlled by the library bypass various anti-scraping defenses effectively.

Using Undetected ChromeDriver for Web Scraping: Step-By-Step Guide

Step #1: Prerequisites and Project Setup

Undetected ChromeDriver has the following prerequisites:

- Latest version of Chrome

- Python 3.6+: If Python 3.6 or later is not installed on your machine, download it from the official site and follow the installation instructions.

Note: The library automatically downloads and patches the driver binary for you, so there is no need to manually download ChromeDriver.

Create a directory for your project:

mkdir undetected-chromedriver-scraper

cd undetected-chromedriver-scraper

python -m venv env

Activate the virtual environment:

# On Linux or macOS

source env/bin/activate

# On Windows

env\Scripts\activate

Step #2: Install Undetected ChromeDriver

Install Undetected ChromeDriver via the pip package:

pip install undetected_chromedriver

This library will automatically install Selenium, as it is one of its dependencies.

Step #3: Initial Setup

Create a scraper.py file and import undetected_chromedriver:

import undetected_chromedriver as uc

from selenium.webdriver.common.by import By

import json

# Initialize a Chrome instance

driver = uc.Chrome()

# Connect to the target page

driver.get("https://scrapeless.com")

# Scraping logic...

# Close the browser

driver.quit()

Step #4: Implement the Scraping Logic

Now let's add the logic to extract data from the Apple page:

import undetected_chromedriver as uc

from selenium.webdriver.common.by import By

import json

import time

# Create a Chrome web driver instance

driver = uc.Chrome()

# Connect to the Apple website

driver.get("https://www.apple.com/fr/")

# Give the page some time to fully load

time.sleep(3)

# Dictionary to store product info

apple_products = {}

try:

# Find product sections (using the classes from the provided HTML)

product_sections = driver.find_elements(By.CSS_SELECTOR, ".homepage-section.collection-module .unit-wrapper")

for i, section in enumerate(product_sections):

try:

# Extract product name (headline)

headline = section.find_element(By.CSS_SELECTOR, ".headline, .logo-image").get_attribute("textContent").strip()

# Extract description (subhead)

subhead_element = section.find_element(By.CSS_SELECTOR, ".subhead")

subhead = subhead_element.text

# Get the link if available

link = ""

try:

link_element = section.find_element(By.CSS_SELECTOR, ".unit-link")

link = link_element.get_attribute("href")

except:

pass

apple_products[f"product_{i+1}"] = {

"name": headline,

"description": subhead,

"link": link

}

except Exception as e:

print(f"Error processing section {i+1}: {e}")

# Export the scraped data to JSON

with open("apple_products.json", "w", encoding="utf-8") as json_file:

json.dump(apple_products, json_file, indent=4, ensure_ascii=False)

print(f"Successfully scraped {len(apple_products)} Apple products")

except Exception as e:

print(f"Error during scraping: {e}")

finally:

# Close the browser and release its resources

driver.quit()

Run it with:

python scraper.py

Undetected ChromeDriver: Advanced Usage

Now that you know how the library works, you're ready to explore some more advanced scenarios.

Choose a Specific Chrome Version

You can specify a particular version of Chrome for the library to use by setting the version_main argument:

import undetected_chromedriver as uc

# Specify the target version of Chrome

driver = uc.Chrome(version_main=105)

With Syntax

To avoid manually calling the quit() method when you no longer need the driver, you can use the with syntax:

import undetected_chromedriver as uc

with uc.Chrome() as driver:

driver.get("https://example.com")

# Rest of your code...

Limitations of Undetected ChromeDriver

While undetected_chromedriver is a powerful Python library, it does have some known limitations:

IP Blocks

The library does not hide your IP address. If you're running a script from a datacenter, chances are high that detection will still occur. Similarly, if your home IP has a poor reputation, you may also be blocked.

To hide your IP, you need to integrate the controlled browser with a proxy server, as demonstrated earlier.

No Support for GUI Navigation

Due to the inner workings of the module, you must browse programmatically using the get() method. Avoid using the browser GUI for manual navigation—interacting with the page using your keyboard or mouse increases the risk of detection.

Limited Support for Headless Mode

Officially, headless mode is not fully supported by the undetected_chromedriver library. However, you can experiment with it using:

driver = uc.Chrome(headless=True)

Stability Issues

Results may vary due to numerous factors. No guarantees are provided, other than continuous efforts to understand and counter detection algorithms. A script that successfully bypasses anti-bot systems today might fail tomorrow if the protection methods receive updates.

Recommended Alternative: Scrapeless

Given the limitations of Undetected ChromeDriver, Scrapeless offers a more robust and reliable alternative for web scraping without getting blocked.

We firmly protect the privacy of the website. All data in this blog is public and is only used as a demonstration of the crawling process. We do not save any information and data.

Why Scrapeless is Superior

Scrapeless is a remote browser service that addresses the inherent problems with the Undetected ChromeDriver approach:

Constant updates: Unlike Undetected ChromeDriver which may stop working after anti-bot system updates, Scrapeless is continuously updated by its team.

Built-in IP rotation: Scrapeless offers automatic IP rotation, eliminating the IP blocking issue of Undetected ChromeDriver.

Optimized configuration: Scrapeless browsers are already optimized to avoid detection, which greatly simplifies the process.

Automatic CAPTCHA solving: Scrapeless can automatically solve CAPTCHAs you might encounter.

Compatible with multiple frameworks: Works with Playwright, Puppeteer, and other automation tools.

Sign in to Scrapeless for a free trial.

Recommended reading: How to Bypass Cloudflare With Puppeteer

How to use Scrapeless to scrape the web (without getting blocked)

Here's how to implement a similar solution with Scrapeless using Playwright:

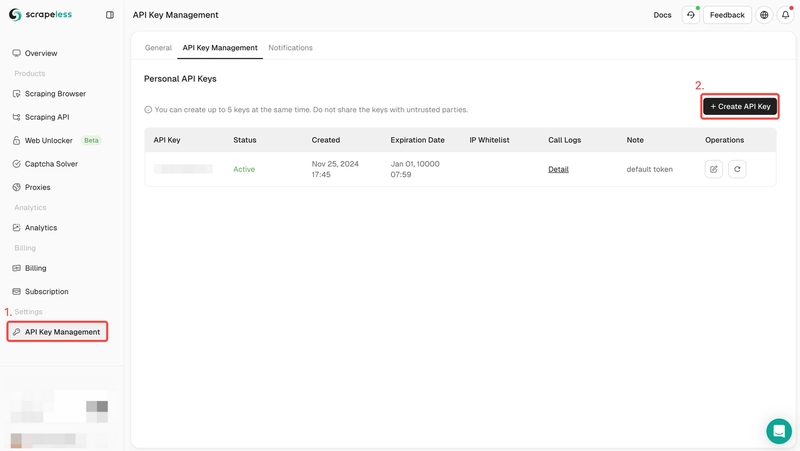

Step 1: Register and log in to Scrapeless

Step 2: Get the Scrapeless API KEY

Step 3: You can integrate the following code into your project

const {chromium} = require('playwright-core');

// Scrapeless connection URL with your token

const connectionURL = 'wss://browser.scrapeless.com/browser?token=YOUR_TOKEN_HERE&session_ttl=180&proxy_country=ANY';

(async () => {

// Connect to the remote Scrapeless browser

const browser = await chromium.connectOverCDP(connectionURL);

try {

// Create a new page

const page = await browser.newPage();

// Navigate to Apple's website

console.log('Navigating to Apple website...');

await page.goto('https://www.apple.com/fr/', {

waitUntil: 'domcontentloaded',

timeout: 60000

});

console.log('Page loaded successfully');

// Wait for the product sections to be available

await page.waitForSelector('.homepage-section.collection-module', { timeout: 10000 });

// Get featured products from the homepage

const products = await page.evaluate(() => {

const results = [];

// Get all product sections

const productSections = document.querySelectorAll('.homepage-section.collection-module .unit-wrapper');

productSections.forEach((section, index) => {

try {

// Get product name - could be in .headline or .logo-image

const headlineEl = section.querySelector('.headline') || section.querySelector('.logo-image');

const headline = headlineEl ? headlineEl.textContent.trim() : 'Unknown Product';

// Get product description

const subheadEl = section.querySelector('.subhead');

const subhead = subheadEl ? subheadEl.textContent.trim() : '';

// Get product link

const linkEl = section.querySelector('.unit-link');

const link = linkEl ? linkEl.getAttribute('href') : '';

results.push({

name: headline,

description: subhead,

link: link

});

} catch (err) {

console.error(`Error processing section ${index}: ${err.message}`);

}

});

return results;

});

// Display the results

console.log('Found Apple products:');

console.log(JSON.stringify(products, null, 2));

console.log(`Total products found: ${products.length}`);

} catch (error) {

console.error('An error occurred:', error);

} finally {

// Close the browser

await browser.close();

console.log('Browser closed');

}

})();

You can also join the Scrapeless Discord to participate in the developer support program and receive up to 500k SERP API usage credits for free.

Enhanced Technical Analysis

Bot Detection: How It Works

Anti-bot systems use several techniques to detect automation:

Browser fingerprinting: Collects dozens of browser properties (fonts, canvas, WebGL, etc.) to create a unique signature.

WebDriver detection: Looks for the presence of the WebDriver API or its artifacts.

Behavioral analysis: Analyzes mouse movements, clicks, typing speed that differ between humans and bots.

Navigation anomaly detection: Identifies suspicious patterns like too-fast requests or lack of image/CSS loading.

Recommended reading: How to Bypass Anti Bot

How Undetected ChromeDriver Bypasses Detection

Undetected ChromeDriver circumvents these detections by:

Removing WebDriver indicators: Eliminates the

navigator.webdriverproperty and other WebDriver traces.Patching Cdc_: Modifies Chrome Driver Controller variables that are known signatures of ChromeDriver.

Using realistic User-Agents: Replaces default User-Agents with up-to-date strings.

Minimizing configuration changes: Reduces changes to Chrome browser's default behavior.

Technical code showing how Undetected ChromeDriver patches the driver:

Simplified extract from Undetected ChromeDriver source code

def _patch_driver_executable():

"""

Patches the ChromeDriver binary to remove telltale signs of automation

"""

linect = 0

replacement = os.urandom(32).hex()

with io.open(self.executable_path, "r+b") as fh:

for line in iter(lambda: fh.readline(), b""):

if b"cdc_" in line.lower():

fh.seek(-len(line), 1)

newline = re.sub(

b"cdc_.{22}", b"cdc_" + replacement.encode(), line

)

fh.write(newline)

linect += 1

return linect

Why Scrapeless is More Effective

Scrapeless takes a different approach by:

Pre-configured environment: Using browsers already optimized to mimic human users.

Cloud-based infrastructure: Running browsers in the cloud with proper fingerprinting.

Intelligent proxy rotation: Automatically rotating IPs based on the target site.

Advanced fingerprint management: Maintaining consistent browser fingerprints throughout the session.

WebRTC, Canvas, and Plugin suppression: Blocking common fingerprinting techniques.

Sign in to Scrapeless for a free trial.

Conclusion

In this article, you've learned how to deal with bot detection in Selenium using Undetected ChromeDriver. This library provides a patched version of ChromeDriver for web scraping without getting blocked.

The challenge is that advanced anti-bot technologies like Cloudflare will still be able to detect and block your scripts. Libraries like undetected_chromedriver are unstable—while they may work today, they might not work tomorrow.

For professional scraping needs, cloud-based solutions like Scrapeless offer a more robust alternative. They provide pre-configured remote browsers specifically designed to bypass anti-bot measures, with additional features like IP rotation and CAPTCHA solving.

The choice between Undetected ChromeDriver and Scrapeless depends on your specific needs:

- Undetected ChromeDriver: Good for smaller projects, free and open-source, but requires more maintenance and can be less reliable.

- Scrapeless: Better for professional scraping needs, more reliable, constantly updated, but comes with a subscription cost.

By understanding how these anti-bot bypass technologies work, you can choose the right tool for your web scraping projects and avoid the common pitfalls of automated data collection.

Top comments (0)