Harder, Faster, Better, Stronger: Programming with AI Pair Programmers

ChatGPT was launched to the public in November 2022. Roughly two months later, OpenAI's chatbot had crossed the 100 million user milestone, making it (back then) the fastest-growing consumer application ever.

AI's rapid rise to popularity is nowhere more obvious than in programming. According to a 2023 GitHub developer survey, a stunning 92% of the programmers surveyed say that they are using AI tools. In programming, almost everyone uses AI.

Clearly, programmers see the benefits of working with an AI pair programmer in their day-to-day work. But it is worth asking just how much more productive programmers are when using an AI tool to assist in their development.

What's the impact of AI on developer productivity? How does AI change the developer experience?

Let's check out what researchers, analysts, and industry experts have found out about the productivity gains from using an AI pair programmer, and let's quantify the impact on developer productivity.

AI Pair Programming and Developer Productivity: What's the Impact?

To quantify the impact of AI pair programmers on developer productivity, researchers have come up with a number of different experiments and studies.

In this article, we review the results of five studies measuring the impact of AI on developer productivity. For each study, we look at the following questions:

- Which AI tool was assessed by the research?

- What was the research question?

- How were the results measured?

- What was the overall conclusion?

Table of contents:

- AI Pair Programming and Developer Productivity: What's the Impact?

- 1. The Impact of AI on Developer Productivity: Evidence from GitHub Copilot

- 2. The Robots Are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming

- 3. Automatic Generation of Programming Exercises and Code Explanations Using Large Language Models

- 4. Evaluating the Code Quality of AI-Assisted Code Generation Tools: An Empirical Study on GitHub Copilot, Amazon CodeWhisperer, and ChatGPT

- 5. Using AI for Code Documentation, Code Generation, Code Refactoring, and Other High-Complexity Tasks

- Conclusion

1. The Impact of AI on Developer Productivity: Evidence from GitHub Copilot

Who can code faster: a programmer with or without an AI pair programmer?

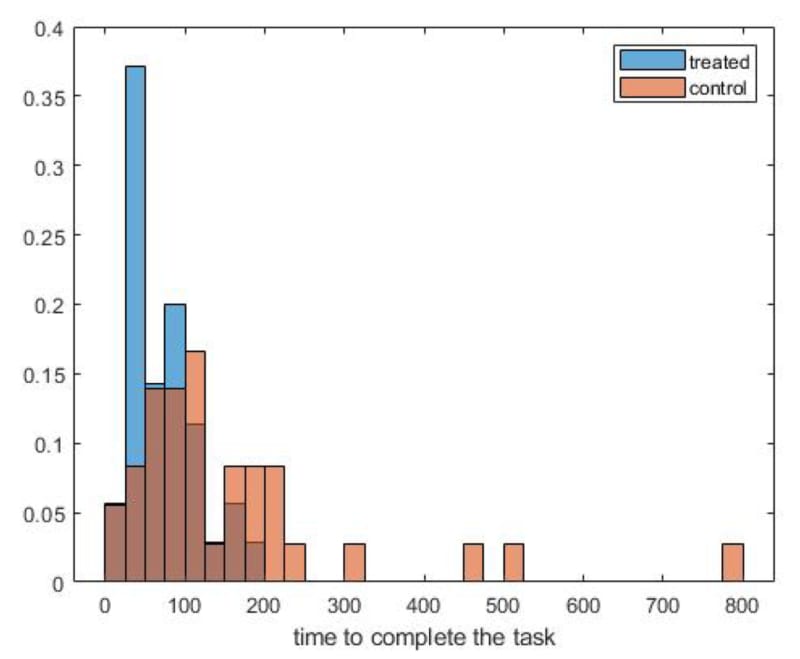

In this 2023 study, two groups of programmers were given the same task: implement an HTTP server in JavaScript as quickly as possible. One group used GitHub Copilot, an AI pair programmer, to complete the task. The other did not.

Who completed the task faster?

Which Tool Did the Researchers Assess?

GitHub Copilot

What Was the Research Question?

Do programmers using GitHub Copilot complete a common development task faster than programmers not using GitHub Copilot?

How Was the Question Tested?

The researchers set up a controlled experiment to measure the productivity gains from using AI tools in professional software development: 95 developers were split into two groups and asked to implement an HTTP server in JavaScript as quickly as possible. The faster they completed the task, the higher the payment they would receive.

Of these, 45 developers had access to an AI pair programmer (GitHub Copilot), while the remaining 50 could complete the task in any way they wanted, for example by searching for answers online or consulting Stack Overflow.

Result

The group of developers with access to GitHub Copilot completed the task 55.8% faster than the group without access to Copilot. The result is statistically significant and suggests that AI tools have a strong impact on developer productivity.

As the saying goes: AI won't replace you. A human who knows how to use AI will.

"The group [of developers] that has access to GitHub Copilot was able to complete the task 55.8% faster than the control group [without access to GitHub Copilot]."

Peng et al. (2023). The Impact of AI on Developer Productivity: Evidence from GitHub Copilot.
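To put the 55.8% figure in perspective, here is a minimal sketch that converts a reduction in completion time into a speedup factor. It assumes that "55.8% faster" means the Copilot group needed 55.8% less time, which is how the quoted sentence reads. The 160-minute baseline is a made-up illustration value, not a number taken from this article, and the function names are hypothetical.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Convert a fractional time reduction (0.558 = 55.8% less time) into a speedup factor."""
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be at least 0 and below 1")
    return 1 / (1 - reduction)


def time_with_tool(baseline_minutes: float, reduction: float) -> float:
    """Completion time after applying a fractional time reduction to a baseline."""
    return baseline_minutes * (1 - reduction)


reduction = 0.558          # from the study result quoted above
baseline_minutes = 160.0   # hypothetical baseline, for illustration only

print(f"Speedup factor: {speedup_from_time_reduction(reduction):.2f}x")
print(f"A {baseline_minutes:.0f}-minute task would take about "
      f"{time_with_tool(baseline_minutes, reduction):.0f} minutes")
```

Under this reading, a 55.8% reduction in time means working a little more than twice as fast.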

The paper was published by researchers from Microsoft Research, GitHub, and the MIT Sloan School of Management and is available as a free download.

2. The Robots Are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming

Who can program better: AI or humans?

This is the question a team of researchers asked in their paper titled "The Robots Are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming". In it, the researchers compare Codex's performance against that of first-year programming students.

Which Tool Did the Researchers Assess?

OpenAI Codex (Private Beta)

What Was the Research Question?

How well does Codex do on introductory programming exams when compared to students who took these same exams under normal conditions?

How Was the Question Tested?

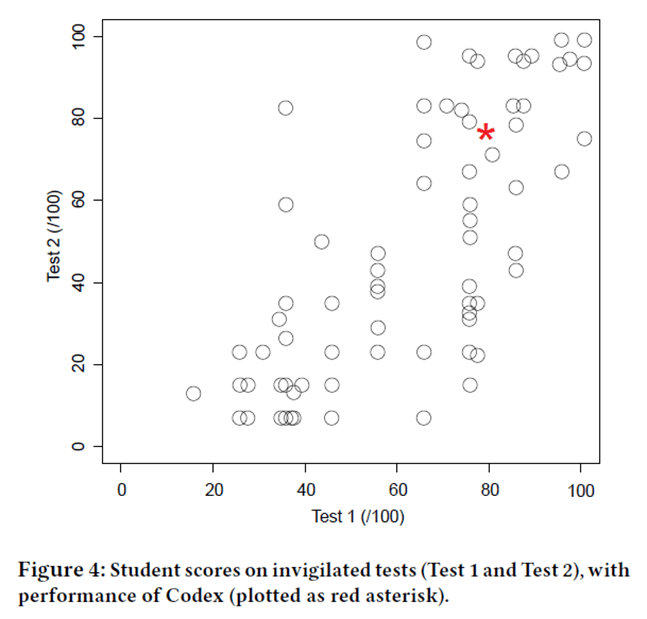

The researchers presented Codex with 23 real-world programming exam questions and compared Codex's performance against that of first-year students.

Result

The researchers concluded that Codex performs better than most students on code writing questions in typical first-year programming exams.

"Our results show that Codex performs better than most students on code writing questions in typical first-year programming exams."

Finnie-Ansley et al. (2022). The Robots Are Coming: Exploring the Implications of OpenAI Codex on Introductory Programming.

Just how much better does Codex do compared to first-year programming students? Here are the results.

The red asterisk is Codex. Note that Codex did not score 100% on the tests, so it is not infallible. But its score places it within the top quartile of participants.

The paper was published in February 2022 by Finnie-Ansley et al. and is available as a download.

3. Automatic Generation of Programming Exercises and Code Explanations Using Large Language Models

Not everyone who touches code in their everyday life works as a programmer. There is also a large number of instructors and educators who teach other people how to code. Are LLMs useful for them?

This is the question a 2022 paper published by Sarsa et al. seeks to answer.

Which Tool Did the Researchers Assess?

OpenAI Codex (Private Beta)

What Was the Research Question?

How useful are Large Language Models (LLMs) for educators? Can AI tools help with creating programming exercises, such as problem statements, sample code solutions, and automated tests?

How Was the Question Tested?

The researchers analyzed two questions:

- To what extent are programming exercises created by OpenAI Codex sensible, novel, and readily applicable?

- How comprehensive and accurate are OpenAI Codex natural language explanations of code solutions to introductory programming exercises?

Their paper thus tested two of OpenAI Codex's features: its ability to turn natural language into programming exercises, and its ability to turn code into natural-language explanations.

Result

Codex performed well in both categories: creating programming exercises and explaining code.

"There is significant value in massive generative machine learning models as a tool for instructors".

Sarsa et al. (2022). Automatic Generation of Programming Exercises and Code Explanations Using Large Language Models.

The researchers found that out of 240 programming exercises created by Codex, "the majority [...] were sensible, novel, and included an appropriate sample solution".

In addition, Codex correctly explained 67.2% of the lines of code given to it. The incorrect explanations contained "only minor mistakes that could easily be fixed by an instructor".

Visit this website to download a copy of this paper.

4. Evaluating the Code Quality of AI-Assisted Code Generation Tools: An Empirical Study on GitHub Copilot, Amazon CodeWhisperer, and ChatGPT

Developers are spoilt for choice when it comes to selecting AI pair programmers. Microsoft, Amazon, OpenAI, as well as a large number of start-ups have already introduced code generators to the market. But which one works best?

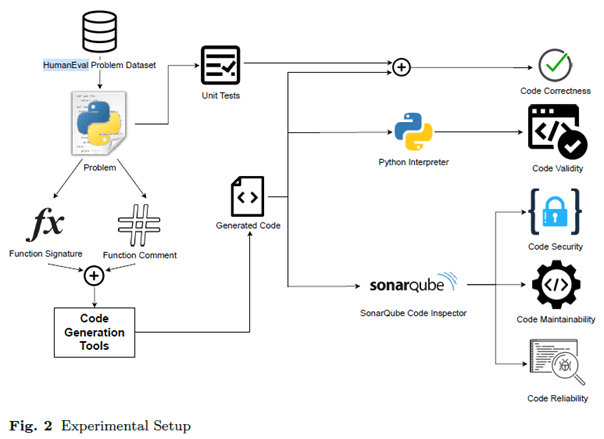

A 2023 paper published by Yetistiren et al. compares three of the more popular AI tools: Copilot, CodeWhisperer, and ChatGPT. The tools are compared using five different code quality metrics: code validity, code correctness, code security, code reliability, and code maintainability. It is one of the most comprehensive analyses of each tool's code-generation capabilities.

Which Tool Did the Researchers Assess?

GitHub Copilot, Amazon CodeWhisperer, and ChatGPT

What Was the Research Question?

What is the quality of code generated by code generation tools? This question was further broken down into sub-questions centered on code validity, code correctness, code security, code reliability, and code maintainability.

How Was the Question Tested?

The researchers presented each AI tool with a standardized set of 164 coding problems, called the HumanEval dataset. The generated code was then tested against the five code quality metrics mentioned above.

The experimental setup is shown in this figure:

Result

Copilot, CodeWhisperer, or ChatGPT: Which AI tool performed best?

Surprisingly, ChatGPT "had the highest success rate among the evaluated code generation tools": "It was able to generate correct code solutions for 65.2% of the problems in the HumanEval problem dataset", compared to 46.3% and 31.1% for Copilot and CodeWhisperer respectively.
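To make these percentages more tangible, the short sketch below converts each reported success rate into an approximate number of solved problems out of the 164 in the HumanEval dataset. The counts are derived here by rounding and are not quoted from the paper; the function and variable names are hypothetical.

```python
HUMANEVAL_PROBLEMS = 164  # size of the HumanEval dataset, as stated above

# Share of problems solved correctly, as reported in this article
correct_rate = {
    "ChatGPT": 0.652,
    "GitHub Copilot": 0.463,
    "Amazon CodeWhisperer": 0.311,
}


def solved_count(rate: float, total: int = HUMANEVAL_PROBLEMS) -> int:
    """Nearest whole number of problems that matches a reported success rate."""
    return round(rate * total)


# Print the tools from highest to lowest success rate
for tool, rate in sorted(correct_rate.items(), key=lambda item: item[1], reverse=True):
    print(f"{tool}: about {solved_count(rate)} of {HUMANEVAL_PROBLEMS} problems")
```

Under this rounding, the rates correspond to roughly 107, 76, and 51 correctly solved problems respectively.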

Why is this surprising? CodeWhisperer and Copilot are dedicated AI pair programmers that can be integrated into your favorite IDE. ChatGPT, on the other hand, is a general-purpose chatbot that was not built to be embedded into an IDE.

For a free download of the paper, visit the ResearchGate website.

5. Using AI for Code Documentation, Code Generation, Code Refactoring, and Other High-Complexity Tasks

An analysis by McKinsey focused on the time gains resulting from AI tools. The team from McKinsey measured the impact of AI on developer productivity in three common development tasks: code documentation, code generation, and code refactoring. The team further presented AI with more complex tasks to measure the impact on developer productivity in a non-trivial setting.

The study concluded that "software developers can complete coding tasks up to twice as fast with generative AI".

The time savings were most significant for code documentation, where the use of an AI pair programmer can reduce the time required to complete a task by up to 50%.

However, for more complex tasks, these time savings "shrank to less than 10 percent". Also, the study found that junior developers sometimes even take more time to complete a task when using an AI pair programmer, indicating that AI tools are not right for everyone all the time.
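The figures in this section mix two ways of stating the same thing: a speedup factor ("twice as fast") and a share of time saved ("up to 50%"). The following sketch shows how one maps to the other. Only the 2x speedup and the 10 percent threshold come from the text above; the other values are illustration points, and the function name is hypothetical.

```python
def time_saved_from_speedup(speedup: float) -> float:
    """Fraction of time saved when a task is completed `speedup` times as fast."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1 - 1 / speedup


# 2.0 is "twice as fast"; 1.5 and 1.11 are illustration values
for speedup in (2.0, 1.5, 1.11):
    saved = time_saved_from_speedup(speedup)
    print(f"{speedup:.2f}x as fast -> {saved:.0%} less time")
```

Read this way, "twice as fast" and "50% less time" describe the same result, while time savings of less than 10 percent correspond to a speedup of under roughly 1.11x.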

Conclusion

AI in programming is still a novel technology, yet it already shows tremendous potential. The studies reviewed here show that AI tools can speed up common programming tasks considerably, and that AI-generated code can match or even beat the work of first-year programming students, although it is far from always correct.

AI pair programmers have a direct impact on developer productivity and developer experience in at least five ways:

- Speed of development (faster),

- Amount of hand-written code (less),

- Quality of code documentation (better and faster),

- Learning how to code (AI will work side-by-side with a human instructor), and

- Job scope (evolving - human programmers will perform less manual, repetitive work).

But, of course, just like humans, AI is not flawless: AI cannot solve all coding challenges correctly, does not interpret code correctly 100% of the time, and does not generate code that is always free of errors. It also struggles to solve more complex coding challenges.

Nevertheless, there is little doubt that generative AI will transform software development. Eran Yahav, co-founder and CTO of Tabnine, a generative AI company, predicts that five years from now, all code in the world will be touched by AI: maybe not all code will be completely generated by AI, but reviewed, tested, or interpreted with the help of AI.
