Reap What You Sow II

IaC, Terraform, & Azure DevOps

Now With YAML

In this post I will take you through deploying your infrastructure using Terraform and Azure DevOps. Instead of using Azure DevOps’ classic pipeline editor as I did in my previous post, Reap What You Sow, I will now use YAML.

Why Use YAML?

For one, we should be treating Everything as Code, or at least everything we are able to. Treating things as code allows us to apply modern software engineering practices to them, such as source control and peer review. Two, I like to learn new things. Three, I was challenged to do it, so I’m required by law to do it.

Fear and Loathing in DevOps…

Prior to accepting this challenge, I had absolutely no experience with YAML. I had looked at some pipelines written in YAML at one point and I was honestly intimidated. How terrified was I? The day I accepted the challenge, I had been attending DevOps Days Indy and I explicitly told a few people about how I had been avoiding writing my pipelines in YAML. Little did I know I would have my first pipeline written in YAML up and running later that evening.

What is YAML?

YAML Ain’t Markup Language (YAML) is a data serialization language that is commonly used for configuration files. You can read more about YAML and its history here.
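To make that concrete, here is a small YAML document showing the three constructs Azure Pipelines leans on most: key/value mappings, `-` sequences, and the `|` literal block scalar. The keys and values are made up for illustration and are not part of any pipeline.

```yaml
# A mapping: keys and values, nested by indentation (spaces only, never tabs)
project:
  name: PuzzlePlates
  location: centralus
# A sequence: each item starts with "- "
branches:
- master
- develop
# A literal block scalar: "|" keeps the line breaks of the indented text
script: |
  echo first line
  echo second line
```

Because indentation carries the structure, a single misplaced space can change what the document means.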

Let’s Get Started

To follow along with this post, you will need to take a look at my previous post. At a minimum, complete the steps prior to “Back to Azure DevOps.” It is, however, highly recommended that you complete it entirely, as you will then be able to click the “View YAML” button if you get stuck.

### Enable Multi-stage Pipelines

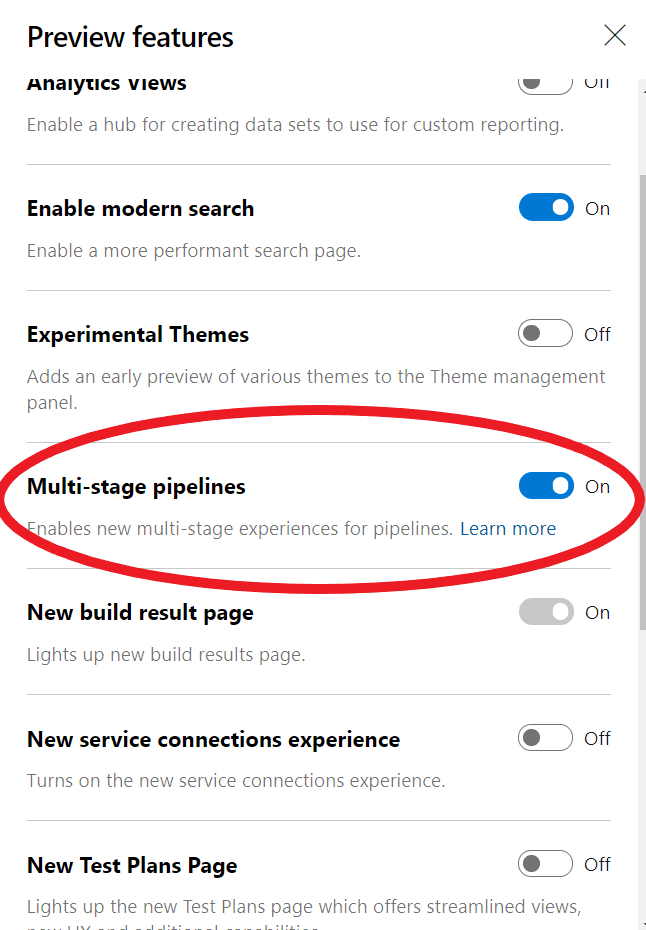

Once you have completed the above steps, make sure you have the new multi-stage pipeline feature enabled. Click on your profile in the upper right corner, then select Preview features. Make sure the Multi-stage pipelines feature is turned on.

### Creating Your First YAML Pipeline

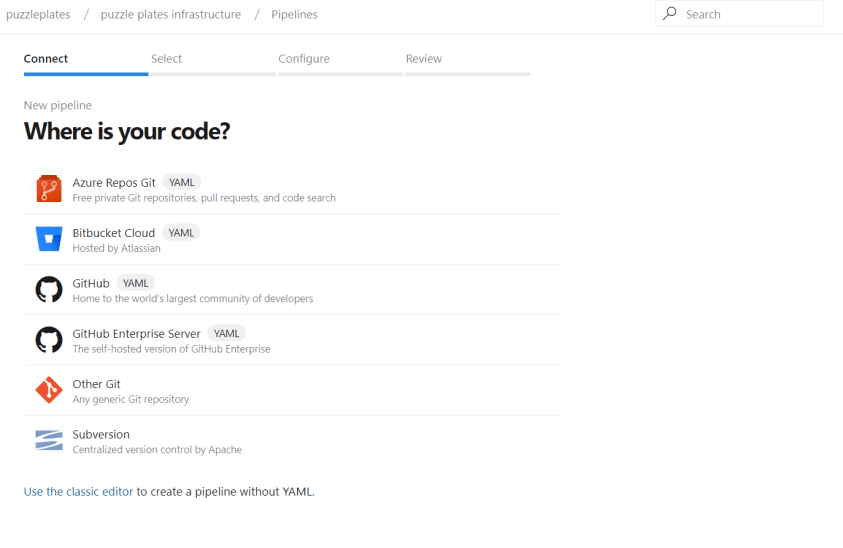

Next, click Pipelines in the left-hand menu. Afterwards, click New Pipeline in the upper right corner of the Pipelines section. Then select your repository. If you’ve followed this blog, select Azure Repos Git.

Next, select Starter pipeline.

This will create a basic YAML pipeline, add it to our repository, and commit it to the master branch.

The generated YAML is fairly straightforward. It is triggered by the master branch (lines 6 and 7). Lines 9 and 10 tell it what type of agent to use, and line 12 begins the list of steps in the pipeline. This pipeline runs two inline scripts.
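For reference, the starter template Azure DevOps generates looks roughly like the sketch below, with the line numbers mentioned above counted from the first comment line. The template changes between Azure DevOps releases (the default vmImage in particular), so treat this as an approximation rather than the exact file you will get.

```yaml
# Starter pipeline
# Start with a minimal pipeline that you can customize to build and deploy your code.
# Add steps that build, run tests, deploy, and more:
# https://aka.ms/yaml

trigger:
- master

pool:
  vmImage: 'ubuntu-latest'

steps:
- script: echo Hello, world!
  displayName: 'Run a one-line script'

- script: |
    echo Add other tasks to build, test, deploy, and more...
    echo See https://aka.ms/yaml
  displayName: 'Run a multi-line script'
```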

You can learn more about Azure Pipelines YAML schema here.

Next, we are going to update the YAML with our Build tasks. Open VS Code or your editor of choice. If you are using VS Code, install this Azure Pipelines extension. Next, run git pull on master. You should now see a new file, azure-pipelines.yml.

For the purposes of this post, I worked directly on master. Do not do this in the real world! In my first update of the pipeline, I removed the two existing steps. Then I made the following changes.

To begin with, change the vmImage to ‘vs2017-win2016’. (Microsoft has since retired this hosted image; ‘windows-latest’ is the closest current choice.)

Then add the CopyFiles@2 task. TargetFolder is the only required input without a default value. Set it to ‘$(build.artifactstagingdirectory)/Terraform’.

We also set Contents to ‘**’, which copies every file in the source folder (the repository root by default) and in all of its subfolders.

Reference documentation for the CopyFiles@2 task is available here.
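Copying ‘**’ also picks up files Terraform does not need, such as the pipeline definition itself. If you would rather publish only the Terraform configuration, a narrower variant might look like the sketch below. It assumes all of your configuration lives in .tf files; add further patterns (for example `**/*.tfvars`) if you use them.

```yaml
- task: CopyFiles@2
  displayName: 'Copy Terraform Files'
  inputs:
    # SourceFolder defaults to the repository root; set explicitly here for clarity
    SourceFolder: '$(Build.SourcesDirectory)'
    # One match pattern per line; the folder structure is preserved in the target
    Contents: |
      **/*.tf
    TargetFolder: '$(build.artifactstagingdirectory)/Terraform'
```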

Next, add the PublishBuildArtifacts@1 task. Set PathtoPublish to ‘$(build.artifactstagingdirectory)/Terraform’ and ArtifactName to ‘drop’.

```yaml
# Starter pipeline
# Start with a minimal pipeline that you can customize to build and deploy your code.
# Add steps that build, run tests, deploy, and more:
# https://aka.ms/yaml

trigger:
- master

pool:
  vmImage: 'vs2017-win2016'

steps:
- task: CopyFiles@2
  displayName: 'Copy Files'
  inputs:
    TargetFolder: '$(build.artifactstagingdirectory)/Terraform'
    Contents: '**'

- task: PublishBuildArtifacts@1
  inputs:
    PathtoPublish: $(build.artifactstagingdirectory)/Terraform
    ArtifactName: drop
```

Now, git commit and push your code to master.

Congratulations, you have a working build pipeline.
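As an aside, YAML pipelines also offer a `publish` shortcut for the newer Publish Pipeline Artifact task. The sketch below is an alternative to the PublishBuildArtifacts@1 step, not what this post uses; it publishes the same folder under the same ‘drop’ name.

```yaml
# Shortcut for the PublishPipelineArtifact task
- publish: '$(build.artifactstagingdirectory)/Terraform'
  artifact: drop
  displayName: 'Publish Terraform Artifact'
```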

Getting the build pipeline working was nice and all, but what we really want is a full CI/CD pipeline.

The first step in this process is to refactor our existing pipeline into stages. Stages are logical boundaries in your pipeline such as Build, Deploy To Dev, Run Tests, Deploy to Prod, etc. You can read more about stages here.

The refactored pipeline looks like this:

```yaml
trigger:
- master

stages:
- stage: Build
  jobs:
  - job: Build
    pool:
      vmImage: 'vs2017-win2016'
    steps:
    - task: CopyFiles@2
      displayName: 'Copy Files'
      inputs:
        TargetFolder: '$(build.artifactstagingdirectory)/Terraform'
        Contents: '**'

    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: $(build.artifactstagingdirectory)/Terraform
        ArtifactName: drop
```

I added a stages block and then added the Build stage. In that Build stage, we add a jobs block with a single job named Build. From there, the rest of our code is the same, just indented to sit under the job.
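Stages run one after another in the order they are listed unless told otherwise. If you prefer to state the ordering explicitly, each stage accepts `dependsOn` and `condition`. A minimal sketch, with placeholder script steps standing in for the real tasks:

```yaml
stages:
- stage: Build
  jobs:
  - job: Build
    steps:
    - script: echo build      # placeholder for the real build tasks
- stage: Deploy
  dependsOn: Build            # wait for the Build stage
  condition: succeeded()      # run only if Build succeeded (also the default)
  jobs:
  - job: Deploy
    steps:
    - script: echo deploy     # placeholder for the real deployment tasks
```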

Adding the Deployment Functionality

Since the goal is to convert the entire pipeline to YAML, it’s time to add the deployment functionality.

```yaml
- stage: Deploy
  jobs:
  - deployment: DeployInfrastructure
    pool:
      vmImage: 'vs2017-win2016'
    variables:
      functions_appinsights: puzzleplatesfunctionsappinsights
      functions_appservice: puzzleplatesfunctionsappservice
      functions_storage: puzzleplatesstorage
      location: centralus
      puzzleplates_functions: puzzleplatesfunctions
      resource_group: PuzzlePlates-ResourceGroup
      terraformstorageaccount: pzzlepltstfrmstrgaccnt
      terraformstoragerg: PuzzlePlatesStorage
    environment: 'Puzzel Plates Development Infrastructure'
    strategy:
      runOnce:
        deploy:
```

First, add a new stage named Deploy. Then add a jobs block and then add a deployment job. You can read more about deployment jobs here.

Set the vmImage to ‘vs2017-win2016’.

Then set your variables within the job. Variables can be set at the root, stage, or job level.

From there, set your environment name. Then declare the deployment strategy. At the time of writing, runOnce was the only strategy available (rolling and canary have since been added).
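To illustrate the three variable scopes, here is a sketch that spreads a few of this post’s variables across the root, stage, and job levels. The placement is for illustration only; the pool is omitted so the job uses the default hosted agent.

```yaml
variables:
  location: centralus                                  # root level: every stage and job sees it

stages:
- stage: Deploy
  variables:
    terraformstoragerg: PuzzlePlatesStorage            # stage level: every job in Deploy
  jobs:
  - deployment: DeployInfrastructure
    variables:
      terraformstorageaccount: pzzlepltstfrmstrgaccnt  # job level: this job only
    environment: 'Puzzel Plates Development Infrastructure'
    strategy:
      runOnce:
        deploy:
          steps:
          # All three variables resolve here through $( ) macro syntax
          - script: echo $(location) $(terraformstoragerg) $(terraformstorageaccount)
```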

Next, define your deployment steps.

```yaml
deploy:
  steps:
  - download: current
    artifact: drop
```

Continuing from deploy, the first step to define is downloading the build artifact. `download` is shorthand for the Download Pipeline Artifact task. You can read more about it here. All we are doing is telling it to download the artifact named “drop” that the Build stage published earlier in the current run.
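Spelled out as a full task, the `download` step is roughly equivalent to the sketch below. The target path assumes the shortcut’s default of placing each artifact in a folder named after it under $(Pipeline.Workspace), which is why later steps point at $(Pipeline.Workspace)/drop.

```yaml
- task: DownloadPipelineArtifact@2
  displayName: 'Download drop'
  inputs:
    buildType: 'current'                      # artifacts from this pipeline run
    artifactName: 'drop'
    targetPath: '$(Pipeline.Workspace)/drop'
```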

```yaml
- task: AzureCLI@1
  displayName: 'Azure CLI - Create Terraform State Storage'
  inputs:
    azureSubscription: 'Terraform_Azure_RM'
    scriptLocation: inlineScript
    inlineScript: |
      call az group create --location centralus --name $(terraformstoragerg)
      call az storage account create --name $(terraformstorageaccount) --resource-group $(terraformstoragerg) --location centralus --sku Standard_LRS
      call az storage container create --name terraform --account-name $(terraformstorageaccount)

- task: AzurePowerShell@3
  displayName: 'Azure PowerShell - Get Key'
  inputs:
    azureSubscription: 'Terraform_Azure_RM'
    azurePowerShellVersion: LatestVersion
    scriptType: InlineScript
    Inline: |
      $key=(Get-AzureRmStorageAccountKey -ResourceGroupName $(terraformstoragerg) -AccountName $(terraformstorageaccount)).Value[0]
      Write-Host "##vso[task.setvariable variable=storagekey]$key"
```

Next, add the AzureCLI task. Set the displayName, then set the inputs. The first input, azureSubscription, is set to the name of a service connection whose service principal can create resources in Azure. Deploying infrastructure into Azure from Azure DevOps requires an Azure Resource Manager service connection; follow these instructions to create one. After setting the subscription, set scriptLocation to inlineScript, then set inlineScript to the code shown above. This code creates the resource group, storage account, and blob container that hold Terraform’s state. The pipe symbol (|) marks a literal block scalar: the indented lines that follow are kept as one multi-line string, line breaks included. You can read more about it on Stack Overflow.
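One detail in that script is easy to miss. On a Windows agent the inline script runs as a batch file, and `az` is itself a batch script, so each command is prefixed with `call`; without it, control would pass to the first `az` command and the next two would never run. If you later move to version 2 of the task, the script type becomes explicit. A sketch, assuming the same service connection and variables, that also reuses the `location` variable instead of hard-coding centralus:

```yaml
- task: AzureCLI@2
  displayName: 'Azure CLI - Create Terraform State Storage'
  inputs:
    azureSubscription: 'Terraform_Azure_RM'
    scriptType: batch              # cmd.exe semantics, hence the "call" prefixes
    scriptLocation: inlineScript
    inlineScript: |
      call az group create --location $(location) --name $(terraformstoragerg)
      call az storage account create --name $(terraformstorageaccount) --resource-group $(terraformstoragerg) --location $(location) --sku Standard_LRS
      call az storage container create --name terraform --account-name $(terraformstorageaccount)
```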

Moving on, add the AzurePowerShell@3 task. Do not use the version 4 task here; it targets the newer Az PowerShell module, which conflicts with the AzureRM cmdlet used in the inline script. Set the displayName, then set azureSubscription to the same service connection used in the previous task. Afterwards, set azurePowerShellVersion to LatestVersion and scriptType to InlineScript, and copy the code above into Inline. This code reads the storage account’s access key and saves it into a pipeline variable named storagekey so later steps can use it.
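The storage key is a credential, so it is worth keeping it out of the logs. The `task.setvariable` logging command accepts an `issecret=true` property that marks the variable as secret and masks its value in log output. A sketch of the same step with that change; check that later steps consuming storagekey still read it as expected, since secret variables are not automatically exposed to scripts as environment variables.

```yaml
- task: AzurePowerShell@3
  displayName: 'Azure PowerShell - Get Key'
  inputs:
    azureSubscription: 'Terraform_Azure_RM'
    azurePowerShellVersion: LatestVersion
    scriptType: InlineScript
    Inline: |
      $key = (Get-AzureRmStorageAccountKey -ResourceGroupName $(terraformstoragerg) -AccountName $(terraformstorageaccount)).Value[0]
      # issecret=true masks the value wherever it would appear in the logs
      Write-Host "##vso[task.setvariable variable=storagekey;issecret=true]$key"
```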

Next, we will add the replacetokens task. This task is a marketplace extension, so you will need to install it into your Azure DevOps account. It replaces every token wrapped in the “__” prefix and suffix with the value of the pipeline variable of the same name. Click here to get the extension.

```yaml
#Marketplace Extension
- task: replacetokens@3
  displayName: 'Replace Tokens'
  inputs:
    rootDirectory: '$(Pipeline.Workspace)/drop'
    targetFiles: '**/*.tf'
    tokenPrefix: '__'
    tokenSuffix: '__'
```

I had a little trouble figuring out what the input arguments were actually named, as they differ slightly from what is presented in the classic editor. I ended up having to dive into the extension’s code base on GitHub. Set rootDirectory to the drop directory in the pipeline workspace. Then set targetFiles to ‘**/*.tf’ so it scans every .tf file in that directory and all of its subdirectories.
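By default, a token with no matching variable only produces a warning, so a typo in a variable name can slip through to Terraform. The sketch below adds an `actionOnMissing` input to fail the step instead. The input name and its ‘fail’ value are assumptions taken from the extension’s task definition as I understand it, so double-check them against the version you install. The comment shows a hypothetical token as it might appear in a .tf file.

```yaml
#Marketplace Extension
- task: replacetokens@3
  displayName: 'Replace Tokens'
  inputs:
    rootDirectory: '$(Pipeline.Workspace)/drop'
    targetFiles: '**/*.tf'
    tokenPrefix: '__'
    tokenSuffix: '__'
    # Fail instead of warn when a token has no matching pipeline variable,
    # e.g. a hypothetical line in main.tf:  storage_account_name = "__terraformstorageaccount__"
    actionOnMissing: 'fail'
```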

Next add the Terraform tasks. The Terraform task is another extension and you will need to install it into your Azure DevOps account. The extension can be found here.

```yaml
#Terraform
- task: Terraform@2
  displayName: 'Terraform Init'
  inputs:
    TemplatePath: '$(Pipeline.Workspace)/drop'
    Arguments: init
    InstallTerraform: true
    UseAzureSub: true
    ConnectedServiceNameARM: 'Terraform_Azure_RM'

- task: Terraform@2
  displayName: 'Terraform Plan'
  inputs:
    TemplatePath: '$(Pipeline.Workspace)/drop'
    Arguments: plan
    InstallTerraform: true
    UseAzureSub: true
    ConnectedServiceNameARM: 'Terraform_Azure_RM'

- task: Terraform@2
  displayName: 'Terraform Apply'
  inputs:
    TemplatePath: '$(Pipeline.Workspace)/drop'
    Arguments: apply -auto-approve
    InstallTerraform: true
    UseAzureSub: true
    ConnectedServiceNameARM: 'Terraform_Azure_RM'
```

Terraform Init initializes a working directory containing Terraform configuration files on the agent. Set TemplatePath to the artifact location, $(Pipeline.Workspace)/drop. Next, set Arguments to init. Then set InstallTerraform to true. Afterwards, set UseAzureSub to true and set ConnectedServiceNameARM to the service connection you used previously.

Terraform Plan compares existing infrastructure to what is in the code you are deploying and determines if it needs to create, update, or destroy resources. The settings are exactly the same except you set the Arguments to plan.

Terraform Apply applies the plan to the infrastructure. The settings are exactly the same as the two previous Terraform tasks except you set the Arguments to apply -auto-approve. This final task will create, update, or destroy your infrastructure in Azure.
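Because Apply computes its own plan again, what gets applied is not guaranteed to match exactly what the Plan step printed. A common refinement is to save the plan to a file and apply that file. A sketch, assuming the Terraform@2 task runs Terraform with TemplatePath as its working directory, so the hypothetical `tfplan` file is written to and read from the drop folder:

```yaml
- task: Terraform@2
  displayName: 'Terraform Plan'
  inputs:
    TemplatePath: '$(Pipeline.Workspace)/drop'
    Arguments: plan -out=tfplan      # write the plan to a file
    InstallTerraform: true
    UseAzureSub: true
    ConnectedServiceNameARM: 'Terraform_Azure_RM'

- task: Terraform@2
  displayName: 'Terraform Apply'
  inputs:
    TemplatePath: '$(Pipeline.Workspace)/drop'
    Arguments: apply tfplan          # a saved plan is applied without a confirmation prompt
    InstallTerraform: true
    UseAzureSub: true
    ConnectedServiceNameARM: 'Terraform_Azure_RM'
```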

Congratulations, you now have a working multi-stage Azure Pipeline written in YAML!!!

The Entire YAML Pipeline

```yaml
trigger:
- master

stages:
- stage: Build
  jobs:
  - job: Build
    pool:
      vmImage: 'vs2017-win2016'
    steps:
    - task: CopyFiles@2
      displayName: 'Copy Files'
      inputs:
        TargetFolder: '$(build.artifactstagingdirectory)/Terraform'
        Contents: '**'

    - task: PublishBuildArtifacts@1
      inputs:
        PathtoPublish: $(build.artifactstagingdirectory)/Terraform
        ArtifactName: drop

- stage: Deploy
  jobs:
  - deployment: DeployInfrastructure
    pool:
      vmImage: 'vs2017-win2016'
    variables:
      functions_appinsights: puzzleplatesfunctionsappinsights
      functions_appservice: puzzleplatesfunctionsappservice
      functions_storage: puzzleplatesstorage
      location: centralus
      puzzleplates_functions: puzzleplatesfunctions
      resource_group: PuzzlePlates-ResourceGroup
      terraformstorageaccount: pzzlepltstfrmstrgaccnt
      terraformstoragerg: PuzzlePlatesStorage
    environment: 'Puzzel Plates Development Infrastructure'
    strategy:
      runOnce:
        deploy:
          steps:
          - download: current
            artifact: drop

          - task: AzureCLI@1
            displayName: 'Azure CLI - Create Terraform State Storage'
            inputs:
              azureSubscription: 'Terraform_Azure_RM'
              scriptLocation: inlineScript
              inlineScript: |
                call az group create --location centralus --name $(terraformstoragerg)
                call az storage account create --name $(terraformstorageaccount) --resource-group $(terraformstoragerg) --location centralus --sku Standard_LRS
                call az storage container create --name terraform --account-name $(terraformstorageaccount)

          - task: AzurePowerShell@3
            displayName: 'Azure PowerShell - Get Key'
            inputs:
              azureSubscription: 'Terraform_Azure_RM'
              azurePowerShellVersion: LatestVersion
              scriptType: InlineScript
              Inline: |
                $key=(Get-AzureRmStorageAccountKey -ResourceGroupName $(terraformstoragerg) -AccountName $(terraformstorageaccount)).Value[0]
                Write-Host "##vso[task.setvariable variable=storagekey]$key"

          #Marketplace Extension
          - task: replacetokens@3
            displayName: 'Replace Tokens'
            inputs:
              rootDirectory: '$(Pipeline.Workspace)/drop'
              targetFiles: '**/*.tf'
              tokenPrefix: '__'
              tokenSuffix: '__'

          #Terraform
          - task: Terraform@2
            displayName: 'Terraform Init'
            inputs:
              TemplatePath: '$(Pipeline.Workspace)/drop'
              Arguments: init
              InstallTerraform: true
              UseAzureSub: true
              ConnectedServiceNameARM: 'Terraform_Azure_RM'

          - task: Terraform@2
            displayName: 'Terraform Plan'
            inputs:
              TemplatePath: '$(Pipeline.Workspace)/drop'
              Arguments: plan
              InstallTerraform: true
              UseAzureSub: true
              ConnectedServiceNameARM: 'Terraform_Azure_RM'

          - task: Terraform@2
            displayName: 'Terraform Apply'
            inputs:
              TemplatePath: '$(Pipeline.Workspace)/drop'
              Arguments: apply -auto-approve
              InstallTerraform: true
              UseAzureSub: true
              ConnectedServiceNameARM: 'Terraform_Azure_RM'
```

Conclusion

Though I was intimidated at first, I was able to get my first pipeline up and running within a few hours.

I ran into some issues with spacing and indentation that took me longer to sort out than I care to admit. I also ran into an issue using the version 4 preview of the AzurePowerShell task and downgraded to version 3.

My initial fear was completely unfounded (except for the indenting and spacing nightmares; those are completely justified). Once I had the initial pipeline built, I was hooked. I thoroughly enjoyed writing the pipeline and am looking forward to the new features YAML pipelines will offer.
