Introduction

Before we get started with coding I want to give you a quick introduction to object-oriented and functional programming.

Both are programming paradigms differing in the techniques they allow and forbid.

Some programming languages support only one paradigm, e.g. Haskell (purely functional).

Others support multiple paradigms, such as JavaScript: you can use it to write object-oriented code, functional code, or a mixture of both.

Setup

Before we can dive deep into the differences between these two paradigms, we need to set up the project.

For that we first create all the files and folders we need like this:

$ mkdir func-vs-oop

$ cd ./func-vs-oop

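$ touch index.html functional.js oop.js

I'm using the touch command, which is available on Linux and macOS. In Windows PowerShell, New-Item index.html -ItemType File creates a file in the same way (repeat it for functional.js and oop.js).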

Next up we need to create a simple form for the factorial calculator inside the index.html.

<!DOCTYPE html>

<html>

<head>

<link rel="stylesheet" href="https://stackpath.bootstrapcdn.com/bootstrap/4.5.0/css/bootstrap.min.css" integrity="sha384-9aIt2nRpC12Uk9gS9baDl411NQApFmC26EwAOH8WgZl5MYYxFfc+NcPb1dKGj7Sk" crossorigin="anonymous">

<script src="functional.js" defer></script>

</head>

<body>

<div class="container mt-5">

<div class="container mt-3 mb-5 text-center">

<h2>Functional vs OOP</h2>

</div>

<form id="factorial-form">

<div class="form-group">

<label for="factorial">Factorial</label>

<input class="form-control" type="number" name="factorial" id="factorial" />

</div>

<button type="submit" class="btn btn-primary">Calculate</button>

</form>

<div class="container mt-3">

<div class="row mt-4 text-center">

<h3>Result:</h3>

<h3 class="ml-5" id="factorial-result"></h3>

</div>

</div>

</div>

</body>

</html>

To give this form a better look and feel we use bootstrap as a CSS-Framework.

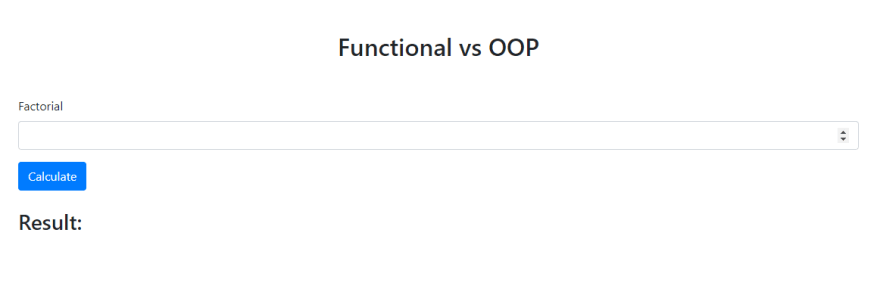

If you display this HTML in the browser it should look like this:

Currently this form won't do anything.

Our goal is to implement logic that lets you enter a number up to 100. After clicking the "Calculate" button, the result should appear in the result element.

We will implement this both in the object-oriented way and the functional way.

Functional implementation

First off we open the functional.js file that we created during setup.

To get started we need a function that gets called when this file is loaded into the browser.

This function should get the form and then add the functionality we need to the submit-event of the form.

function addSubmitHandler(tag, handler) {

const form = getElement(tag);

form.addEventListener('submit', handler);

}

addSubmitHandler("#factorial-form", factorialHandler);

First we declare the function called addSubmitHandler.

This function takes two parameters: the first is the CSS selector of the element we want to find in our HTML (called tag here), the second is the function we want to bind to the submit event of that element.

Next we call this function, passing in #factorial-form and the function factorialHandler.

The hash sign (#) at the start of the selector indicates that we are looking for an element by its id attribute.

This code will throw an error if you try to run it now, because neither getElement nor factorialHandler is defined anywhere.

So let's first define getElement above our addSubmitHandler function like this:

function getElement(tag) {

return document.querySelector(tag);

}

This function is really simple and only returns the HTML-Element we found by the tag we passed in.

But we will reuse this function later on.

Now let's start creating the core logic by adding the factorialHandler function above the addSubmitHandler.

function factorialHandler(event) {

event.preventDefault();

const inputNumber = getValueFromElement('#factorial');

try {

const result = calculateFactorial(inputNumber);

displayResult(result);

} catch (error) {

alert(error.message);

}

}

We pass in the event and instantly call preventDefault.

This prevents the default behavior of the submit event, which is to send the form and reload the page. You can try out what happens on the button click without calling preventDefault.

After that we get the value entered by the user from the input field by calling the getValueFromElement function.

Upon getting the number, we try to calculate the factorial with the function calculateFactorial and then render the result to the page by passing it to the function displayResult.

If the value is not in the correct format or the number is higher than 100, we throw an error and display it as an alert.

This is the reason for using a try-catch-block in this particular case.
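
The control flow described above can be tried outside the browser. The following is a minimal sketch, not the article's code: parsePositiveNumber is a hypothetical stand-in for calculateFactorial, and the two callbacks are assumptions that take the place of displayResult and alert.

```javascript
// Hypothetical stand-in for calculateFactorial: throws on bad input.
function parsePositiveNumber(raw) {
  const value = Number(raw);
  if (Number.isNaN(value) || value <= 0) {
    throw new Error(`Not a positive number: ${raw}`);
  }
  return value;
}

// Same shape as factorialHandler: the happy path lives in try,
// the error path in catch. The callbacks replace displayResult and alert.
function handleInput(raw, onSuccess, onError) {
  try {
    onSuccess(parsePositiveNumber(raw));
  } catch (error) {
    onError(error.message);
  }
}

const log = [];
const onSuccess = (value) => log.push(`ok: ${value}`);
const onError = (message) => log.push(`error: ${message}`);

handleInput('7', onSuccess, onError);   // valid input reaches onSuccess
handleInput('abc', onSuccess, onError); // invalid input ends up in onError
console.log(log);
```

Because the throwing function knows nothing about how errors are shown, the same calculation could be reused with a different way of reporting them.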

In the next step we create two more helper functions, getValueFromElement and displayResult.

Let's add them below the getElement function.

function getValueFromElement(tag) {

return getElement(tag).value;

}

function displayResult(result) {

getElement('#factorial-result').innerHTML = result

}

Both of these functions use our getElement function. This reusability is one reason why functional programming is so effective.

To make this even more reusable, we could add a second argument to displayResult, called tag, so that we can dynamically set the element that should display the result.

But in this example I went with the hard-coded way.
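
For comparison, here is a rough sketch of what the more reusable variant might look like. The name displayResultIn and the optional lookup parameter are assumptions made for illustration; the lookup parameter exists only so the function can be tried without a browser. The sketch also writes to textContent instead of innerHTML, since the result is plain text.

```javascript
// Hypothetical variant of displayResult: the target selector is a parameter.
// In the browser `lookup` falls back to document.querySelector; passing it in
// explicitly is an assumption made here so the sketch runs without a DOM.
function displayResultIn(
  tag,
  result,
  lookup = (selector) => document.querySelector(selector)
) {
  lookup(tag).textContent = String(result);
}

// A fake page: plain objects standing in for two result elements.
const fakePage = {
  '#factorial-result': { textContent: '' },
  '#other-result': { textContent: '' },
};
const fakeLookup = (selector) => fakePage[selector];

displayResultIn('#factorial-result', 120, fakeLookup);
displayResultIn('#other-result', 720, fakeLookup);
console.log(fakePage);
```

In the article's page you would call it as displayResultIn('#factorial-result', result) and let the default lookup do the work.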

Next up we create our calculateFactorial function right above factorialHandler.

function calculateFactorial(number) {

if (validate(number, REQUIRED) && validate(number, MAX_LENGTH, 100) && validate(number, IS_TYPE, 'number')) {

return factorial(number);

} else {

throw new Error(

'Invalid input - either the number is too big or it is not a number'

);

}

}

We validate if the argument 'number' is not empty, not above 100 and of type number.

For that we use a function called validate that we will need to create next.

If the checks pass, we call the function factorial and return its result.

If these checks don't pass, we throw the error that we catch in the factorialHandler function.
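
Note that the three checks described above still let inputs such as "-3" or "2.5" through, because both are non-empty, numeric and not above 100. If you want to reject them, a stricter check could look roughly like this. The helper isValidFactorialInput is hypothetical and not part of the article's code.

```javascript
// Hypothetical helper, not part of the article's code: accepts only
// whole numbers from 0 to `max`, given as a string from an input field.
function isValidFactorialInput(raw, max = 100) {
  if (typeof raw !== 'string' || raw.trim().length === 0) {
    return false; // empty or whitespace-only input
  }
  const value = Number(raw);
  // Number.isInteger is false for NaN, Infinity and decimals like 2.5.
  return Number.isInteger(value) && value >= 0 && value <= max;
}

console.log(isValidFactorialInput('5'));   // true
console.log(isValidFactorialInput('-3'));  // false
console.log(isValidFactorialInput('2.5')); // false
console.log(isValidFactorialInput('101')); // false
console.log(isValidFactorialInput('abc')); // false
```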

First let's create the validate function right below displayResult and the three constants MAX_LENGTH, IS_TYPE and REQUIRED.

const MAX_LENGTH = 'MAX_LENGTH';

const IS_TYPE = 'IS_TYPE';

const REQUIRED = 'REQUIRED';

function validate(value, flag, compareValue) {

switch (flag) {

case REQUIRED:

return value.trim().length > 0;

case MAX_LENGTH:

return value <= compareValue;

case IS_TYPE:

if (compareValue === 'number') {

return !isNaN(value);

} else if (compareValue === 'string') {

return isNaN(value);

}

default:

break;

}

}

In this function we use a switch to determine which kind of validation we are going to perform.

After determining that, it is just a simple value validation.
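
To see how the individual flags behave, the function can be called with a few sample values. The block below repeats validate from above so that it runs on its own; the sample inputs are made up for illustration. The last call shows why the REQUIRED check is needed in addition to IS_TYPE: an empty string is not NaN.

```javascript
const MAX_LENGTH = 'MAX_LENGTH';
const IS_TYPE = 'IS_TYPE';
const REQUIRED = 'REQUIRED';

// Same logic as the validate function above, repeated to be self-contained.
function validate(value, flag, compareValue) {
  switch (flag) {
    case REQUIRED:
      return value.trim().length > 0;
    case MAX_LENGTH:
      return value <= compareValue;
    case IS_TYPE:
      if (compareValue === 'number') {
        return !isNaN(value);
      } else if (compareValue === 'string') {
        return isNaN(value);
      }
    default:
      break;
  }
}

console.log(validate('42', REQUIRED));            // true
console.log(validate('   ', REQUIRED));           // false
console.log(validate('101', MAX_LENGTH, 100));    // false
console.log(validate('abc', IS_TYPE, 'number'));  // false
console.log(validate('', IS_TYPE, 'number'));     // true, '' converts to 0
```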

Now we are going to add the actual factorial function right above the calculateFactorial declaration.

This will be our last function for this approach.

function factorial(number) {

let returnValue = 1;

for (let i = 2; i <= number; i++) {

returnValue = returnValue * i;

}

return returnValue;

}

There are many different ways to perform a factorial calculation. I went with the iterative approach.

If you want to learn more about the different approaches, I recommend you check out the article on GeeksforGeeks.
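
As a point of comparison, here are two alternative sketches that are not part of this project: a recursive version, and an iterative version based on BigInt. The BigInt variant is an addition of mine; it keeps every digit exact, whereas results above Number.MAX_SAFE_INTEGER may be rounded when plain numbers are used. Both function names are made up for illustration.

```javascript
// Recursive variant: n! = n * (n - 1)!, with 0! = 1! = 1.
function factorialRecursive(n) {
  return n <= 1 ? 1 : n * factorialRecursive(n - 1);
}

// BigInt variant: same loop as the iterative version, but with
// arbitrary-precision integers. Expects a whole number as input.
function factorialBigInt(n) {
  const limit = BigInt(n);
  let result = 1n;
  for (let i = 2n; i <= limit; i++) {
    result *= i;
  }
  return result;
}

console.log(factorialRecursive(5));          // 120
console.log(factorialBigInt(25).toString()); // 15511210043330985984000000
```

The recursive version reads close to the mathematical definition, while the loop avoids growing the call stack for larger inputs.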

The final functional.js file should look like this:

const MAX_LENGTH = 'MAX_LENGTH';

const IS_TYPE = 'IS_TYPE';

const REQUIRED = 'REQUIRED';

function getElement(tag) {

return document.querySelector(tag);

}

function getValueFromElement(tag) {

return getElement(tag).value;

}

function displayResult(result) {

getElement('#factorial-result').innerHTML = result

}

function validate(value, flag, compareValue) {

switch (flag) {

case REQUIRED:

return value.trim().length > 0;

case MAX_LENGTH:

return value <= compareValue;

case IS_TYPE:

if (compareValue === 'number') {

return !isNaN(value);

} else if (compareValue === 'string') {

return isNaN(value);

}

default:

break;

}

}

function factorial(number) {

let returnValue = 1;

for (let i = 2; i <= number; i++) {

returnValue = returnValue * i;

}

return returnValue;

}

function calculateFactorial(number) {

if (validate(number, REQUIRED) && validate(number, MAX_LENGTH, 100) && validate(number, IS_TYPE, 'number')) {

return factorial(number);

} else {

throw new Error(

'Invalid input - either the number is too big or it is not a number'

);

}

}

function factorialHandler(event) {

event.preventDefault();

const inputNumber = getValueFromElement('#factorial');

try {

const result = calculateFactorial(inputNumber);

displayResult(result);

} catch (error) {

alert(error.message);

}

}

function addSubmitHandler(tag, handler) {

const form = getElement(tag);

form.addEventListener('submit', handler);

}

addSubmitHandler("#factorial-form", factorialHandler);

In this approach we worked exclusively with functions. Every function has a single purpose and most of them are reusable in other parts of the application.

For this simple web application the functional approach is a bit of overkill. Next we will code the same functionality, but this time object-oriented.

Object-oriented implementation

First of all we need to change the src in the script-tag of our index.html file to the following.

<script src="oop.js" defer></script>

Now we open the oop.js file that we created during setup.

For the OOP approach we want to create three different classes, one for validation, one for the factorial calculation and one for handling the form.

We get started with creating the class that handles the form.

class InputForm {

constructor() {

this.form = document.getElementById('factorial-form');

this.numberInput = document.getElementById('factorial');

this.form.addEventListener('submit', this.factorialHandler.bind(this));

}

factorialHandler(event) {

event.preventDefault();

const number = this.numberInput.value;

if (!Validator.validate(number, Validator.REQUIRED)

|| !Validator.validate(number, Validator.MAX_LENGTH, 100)

|| !Validator.validate(number, Validator.IS_TYPE, 'number'))

{

alert('Invalid input - either the number is too big or it is not a number');

return;

}

const factorial = new Factorial(number);

factorial.display();

}

}

new InputForm();

In the constructor we get the form element and the input element and store them in instance variables, also called properties.

After that we register the method factorialHandler as a listener for the submit event.

In this case we need to bind 'this' of the instance to the method.

If we don't do that, 'this' inside the handler refers to the form element instead of our InputForm instance, so this.numberInput is undefined and reading this.numberInput.value throws a TypeError.
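
The effect of bind can be reproduced without a form. The Counter class below is a made-up example, not part of oop.js. One difference to keep in mind: when a method is called as a plain function, 'this' is undefined, whereas an event listener receives the element it was registered on. In both cases the instance is lost unless the method is bound.

```javascript
// Made-up class for illustration, not part of oop.js.
class Counter {
  constructor() {
    this.count = 0;
  }

  increment() {
    this.count += 1;
    return this.count;
  }
}

const counter = new Counter();

// Detached: the method is called without its instance, so `this` is lost.
const detached = counter.increment;
let detachedError = null;
try {
  detached();
} catch (error) {
  detachedError = error; // class bodies are strict mode, so `this` is undefined
}

// Bound: `this` stays fixed to the instance, however the function is called.
const bound = counter.increment.bind(counter);
bound();
bound();

console.log(detachedError instanceof TypeError); // true
console.log(counter.count);                      // 2
```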

After that we create the class method factorialHandler with the event as an argument.

The code of this method should look somewhat familiar. For example, the if-statement checks whether the input value is valid, like we did in the calculateFactorial function.

Validator.validate is a call to a static method inside the class Validator that we still need to create.

We don't need to create a new instance of a class if we work with static methods.

After the validations pass, we create a new instance of the Factorial class, pass in the input value and then display the calculated result to the user.

Next up we are going to create the Validator class right above the InputForm class.

class Validator {

static MAX_LENGTH = 'MAX_LENGTH';

static IS_TYPE = 'IS_TYPE';

static REQUIRED = 'REQUIRED';

static validate(value, flag, compareValue) {

switch (flag) {

case this.REQUIRED:

return value.trim().length > 0;

case this.MAX_LENGTH:

return value <= compareValue;

case this.IS_TYPE:

if (compareValue === 'number') {

return !isNaN(value);

} else if (compareValue === 'string') {

return isNaN(value);

}

default:

break;

}

}

}

As you can see, everything inside this class is static: the method validate as well as the three properties.

Therefore we do not need a constructor.

The advantage of this is that we do not need to instantiate this class every time we want to use it.

validate is mostly the same as the validate function in our functional.js, except that we use this.REQUIRED, this.MAX_LENGTH and this.IS_TYPE instead of just the variable names. Inside a static method, this refers to the class itself.
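
A stripped-down sketch of how static members behave, using a made-up Limits class rather than the Validator itself: static members live on the class, so they are called without new and are not available on instances.

```javascript
// Made-up class for illustration; it mimics the structure of Validator.
class Limits {
  static MAX = 100;

  static isWithin(value) {
    // Called as Limits.isWithin(...), `this` is the Limits class itself.
    return value <= this.MAX;
  }
}

console.log(Limits.isWithin(42));  // true
console.log(Limits.isWithin(101)); // false

// Static members are not copied onto instances.
const instance = new Limits();
console.log(typeof instance.isWithin); // "undefined"
console.log(instance.MAX);             // undefined
```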

Next up we create our Factorial class right below the Validator class.

class Factorial {

constructor(number) {

this.resultElement = document.getElementById('factorial-result');

this.number = number;

this.factorial = this.calculate();

}

calculate() {

let returnValue = 1;

for (let i = 2; i <= this.number; i++) {

returnValue = returnValue * i;

}

return returnValue;

}

display() {

this.resultElement.innerHTML = this.factorial;

}

}

Upon initializing an instance of this class, we get the result element and store it as a property, as well as the number we pass in.

After that we call the method calculate and store its return value in a property.

The calculate method contains the same code as the factorial function in functional.js.

Last but not least, we have the display method, which sets the innerHTML of our result element to the calculated factorial.
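
One possible variation, shown here only as a sketch: instead of looking up the result element inside the constructor, the class could receive it as an argument. The name FactorialView and the second constructor parameter are assumptions of mine, not part of oop.js. The benefit is that the class can then be tried with a plain object in place of a real element.

```javascript
// Hypothetical variant of the Factorial class: the result element is
// passed in instead of being looked up via document.getElementById.
class FactorialView {
  constructor(number, resultElement) {
    this.number = number;
    this.resultElement = resultElement;
    this.factorial = this.calculate();
  }

  calculate() {
    let returnValue = 1;
    for (let i = 2; i <= this.number; i++) {
      returnValue = returnValue * i;
    }
    return returnValue;
  }

  display() {
    // textContent is used here because the result is plain text.
    this.resultElement.textContent = String(this.factorial);
  }
}

// A plain object stands in for the <h3 id="factorial-result"> element.
const fakeElement = { textContent: '' };
const view = new FactorialView(5, fakeElement);
view.display();
console.log(fakeElement.textContent); // "120"
```

In the browser you would pass document.getElementById('factorial-result') as the second argument.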

The complete oop.js file should look like this.

class Validator {

static MAX_LENGTH = 'MAX_LENGTH';

static IS_TYPE = 'IS_TYPE';

static REQUIRED = 'REQUIRED';

static validate(value, flag, compareValue) {

switch (flag) {

case this.REQUIRED:

return value.trim().length > 0;

case this.MAX_LENGTH:

return value <= compareValue;

case this.IS_TYPE:

if (compareValue === 'number') {

return !isNaN(value);

} else if (compareValue === 'string') {

return isNaN(value);

}

default:

break;

}

}

}

class Factorial {

constructor(number) {

this.resultElement = document.getElementById('factorial-result');

this.number = number;

this.factorial = this.calculate();

}

calculate() {

let returnValue = 1;

for (let i = 2; i <= this.number; i++) {

returnValue = returnValue * i;

}

return returnValue;

}

display() {

this.resultElement.innerHTML = this.factorial;

}

}

class InputForm {

constructor() {

this.form = document.getElementById('factorial-form');

this.numberInput = document.getElementById('factorial');

this.form.addEventListener('submit', this.factorialHandler.bind(this));

}

factorialHandler(event) {

event.preventDefault();

const number = this.numberInput.value;

if (!Validator.validate(number, Validator.REQUIRED)

|| !Validator.validate(number, Validator.MAX_LENGTH, 100)

|| !Validator.validate(number, Validator.IS_TYPE, 'number'))

{

alert('Invalid input - either the number is too big or it is not a number');

return;

}

const factorial = new Factorial(number);

factorial.display();

}

}

new InputForm();

We created three different classes handling three different aspects of our application:

- Validation: Validator class

- Factorial Handling: Factorial class

- Form Handling: InputForm class

Conclusion

Both approaches are valid ways of structuring your code.

Personally I like to try out what works best in the different projects I work on.

Most of the time it is not even possible to separate both paradigms so clearly.

I hope this little comparison gave you a fundamental understanding of what the different approaches look like.

As always, you can find the code for this project on my GitHub.

Top comments (26)

When you say 'Functional' do you mean to say procedural? BASIC is a procedural language, but definitely not a Functional language, whereas languages like F#, Haskell (like you mention), Scala are Functional languages.

The reason I ask is because you give more of a view of Procedural vs OOP, rather than functional vs imperative (procedural/OOP with state maintenance and change) as an example.

The OOP example you provide could still be viewed as functional in the sense you don't mutate state.

If functional - in the sense you are operating on immutable functions only - is truly the goal, perhaps an example where the OOP approach maintains and mutates state?

Beyond that though, it's a well-written article.

You can do OOP with immutable objects. In js numbers, strings are objects but immutable.

But Date, on the other hand, is mutable. Having some objects mutable and some immutable leads to bugs if one does not know what one is doing.

But regardless if it's mutable or not, state is mutated somewhere still.

Take redux reducer for example

it's immutable function on the surface, but it returns new (updated) state - of whole application state, thus there is a power to change anything in application state here. Mutability is not the main problem. Using purely immutable interfaces can help avoid certain type of bugs. But eventually it leads to the same big problem - somewhere state is updated, and the big question is, how is it encapsulated.

A careful developer would divide state in small chunks and ensure all reducers only take care of one little piece of state

not careful developer still can just as easily add unpredictable side effects even though interface is immutable

In redux one can also do the same thing that one can do with setters/getters in an object

now one can modify state anywhere from app code - immutable and functional version of setters/getters antipattern of OOP

Immutability and functional development it self doesn't do that much. It's good but it's not a lot. One must also follow SOLID and other better known guiding principles (like Law of Demeter) to build good code and it doesn't matter OOP or functional.

You are totally right, I struggled to find a good example that is small and simple enough for beginners to understand. I think bringing in state and managing it would have been a bit too much for beginners.

I hope the point I wanted to make gets more clear now.

Thanks for the constructive feedback I really appreciate it :)

I think that trying to turn functions into classes may not have produced the most convincing examples. :)

I think that the biggest problem is that oop requires a more explicit structuring of domains of responsibility -- it might be easier to go from an oop design to a functional design.

That's a good point. My intention was not to go too deep into the details and try to find a simple example.

Maybe the order was a bit confusing.

Thanks for the feedback :)

You're welcome.

This is more like OOP vs Procedural comparison indeed :)

By the way you can use recursion for your factorial function

Nice article :)

Thanks for the feedback. I linked to geeksforgeeks for more details on the implementation possibilities for a factorial. I decided to go for the iterative approach because I didn’t want to introduce recursion in this article 😁

Yep, just saw it :) I was thinking the opposite: factorial is a good chance to show off recursion :)

To me the weak spot of functional code in js is composition.

I think this quickly becomes hard to reason about it once you get little larger codebase, because it can be hard to find code that is gonna run, when function is passed as argument and all you see when opening some deep module is this

When instance is passed as argument - it has a type (it's class name) - and so it's very easy to locate related code even without typescript.

With typescript it's possible to achieve a similar end goal with just functions, but at the expense of declaring a lot of additional types (while with classes, the class itself already is a type). With all the additional type declarations, the functional approach can get even more verbose than the class-based approach.

Lambda calculus deliberately only deals with single argument functions because they compose well.

For functions with multiple arguments you have to curry to preserve composability.

TypeScript pushes JavaScript deeper into 'class-based object-orientation' territory (apart from soundness being a non-goal) - so any deviation from that ideal will require a deep dive into the typing metalanguage.

Statically typed FP is better served by ReScript.


IMHO currying in javascript only makes things worse

I don't need to curry in order to make anything more composable. I could always compose simply by declaring new function

number of things is better in this approach over curried version:

So in nutshell currying to me is a solution for non existing problem which makes things worse when applied religiously.

In my initial example I did use one case of a 2nd order function, which was a case of a curried function. But this was done in order to separate dependencies from data. I would do that only if I know the usage patterns

This implies that its intended use is to be instantiated before usage.

and definitely not

createFunction()() - if there is a case to use it like this then it's better to declare it with flat arguments.

To me problems with this functional approach and composed functions happen when a composed function must be passed down as a parameter. This is where to me the class-based approach wins over the function-based approach. After a function is passed as an argument, it becomes more difficult to trace which code is relevant when the function is executed. It's not necessarily a problem when writing code, but it's a problem when you are in a large code base and want to understand it. With typescript one can declare the interface of a function, but that does not help with finding the implementation. On the other hand, in the class-based approach the receiver can indicate the concrete class that it expects (even without typescript, with js docs).

Your initial composing function

accepted arbitrary single argument functions.

Now your preference

is to manually assemble the function.

I was referring to generalized function composition. Given that functions only return a single value general function composition can only compose functions that accept a single value. In that context currying is the workaround to fake multi argument functions.

I wasn't advocating "curry all the things" just for the sake of it.

This style

is related to

i.e. using a closure to mimic partial application - it's just that "some code here" is never isolated into an independent function.

Your particular annotation identifies dependencies as arguments to a kind of constructor: "A closure is an object that supports exactly one method: apply."

Passing a function as an argument to a higher order function is equivalent to passing a strategy object to a context object (Strategy Pattern) - i.e. this kind of composition exists in both paradigms.

What you are saying is that you find it more difficult to work with interfaces than concrete implementations (i.e. this isn't about functions vs. objects). That may be so but:

"Design Patterns: Elements of Reusable Object-Oriented Software" p.18

i.e. classes depending on other concrete classes should be the exception, not the rule.

The natural boundary around a class that depends on other "concrete classes" automatically includes those "concrete dependencies" (and recursively their concrete dependencies). This creates a much larger unit that needs to be "reasoned about" as a whole.

Interfaces are at the core of many OO practices including the dependency inversion principle.

You probably have other, bigger problems in the code base when you have difficulty tracking down the concrete implementation that is used to service a particular interface at a call site.

It's also a running joke that function types satisfy the interface segregation principle by default.

The impression I'm getting here is that you find imperative code ("do this then that") easier to read - which isn't surprising given that most of us learn programming that way - it's the allure of the familiar.

Functional programming tends to focus less on the "how" and more on the "why" and "what" (some say it's more declarative) - but it still has its 'step-by-step' moments. For example:

Now transform is a terrible name, even textToFactorial would have been better, but at the time I was trying to make a general point. But the "steps" are still there, clearly outlining what is going on. The big difference (to imperative code) is that this code isn't transforming any data at this point - the function that will be transforming the data is being "wired up".

Functional code is composed of functions and as functions are generally smaller than objects there will be proportionally more code dedicated to "wiring up" the capability rather than "doing" the capability. So when reading the code one has to differentiate between "construction" code (the scaffolding) and "running" code.

But the same is true for any non-trivial object-oriented code base. As it grows and God Objects are avoided more and more code is dedicated to setting up the relationships between the collaborating objects before they can do any useful work.

However a network of interacting stateful objects can grow in complexity rapidly. A composition of stateless functions (or immutable closures) is typically easier to reason about. Coming from an imperative background the functional approach is different enough to take some getting used to.

(One issue with React hooks is that functional components are now just as stateful as objects - which gives rise to much richer (i.e. complex) behaviour - the standing argument is that hooks are more declarative than object methods, but that is a whole discussion of its own).

It's not that I have difficulty. It's just not as fast. With OOP when coding on top of interfaces tooling supports jump-to-implementation. It's just less straight forward with function types.

I do not advocate towards breaking any best practices. That said not every practice that is necessary when designing reusable code is also necessary or even good when building one off pieces of implementation.

Strategy is just one use case. There are many cases. In UI applications hardly anything passed down element tree is 1st order function. If it was 1st order most of time you'd not pass it down - it can be imported and called directly.

Well firstly I think it's little bit condescending for you to make impression of why I "find imperative code easier to read".

But regardless of that, I think this is a terrible argument. Normally if someone does not find my code immediately straight forward I tend to believe that I made it too difficult. Sometimes I just couldn't think of easier way. Sometimes simply because I did something I thought was clever - turns out it wasn't.

Now to your example. If you're writing in this style then at least you could get rid of fn1, fn2, etc and that closure pattern - all that make it very ugly

to me very big downside of this code is that it's very hard to use debugger on it. Almost in no point can you place a breakpoint on it except for parseInt() part

And what is reason for it? I don't think there is good one. It's very imperative style in the end. Why would you write imperative code in declarative-functional-composition? Just makes no sense. There are situations where declarative style is great. There are situations where imperative style is great. Use one that is the most appropriate. Application like this would be best split into input, validation, action phases. Validator can be setup in declarative way. The rest can go in imperative. And result is best of both worlds, easy to follow, easy to debug.

Not sure where this is going.

As far as I'm aware "higher order function" doesn't imply an actual ordinality.

Eric Elliot used first order function to refer to a function that doesn't "take a function as an argument or return a function as output". Eric Normand on the other hand uses:

So I can only conclude that the "n-th order function" terminology with reference to higher order functions is neither standardized nor commonplace.

"Higher order function" simply calls attention to the fact that a function is specialized by and/or returns another function. But in the end in the functional style it is as natural to return functions and take them as arguments as it is to return an object and take object arguments in the object style.

The impression I'm getting is that you're saying that "a function that takes a function that takes a function" (and beyond) is getting hard to track. But given that functions have a type, a function can simply transform one type of function into another type of function.

No condescension was implied. The point was familiarity bias - Rich Hickey style i.e. easy is a result of familiarity, not simplicity and the unfamiliar can seem difficult even when it's simple.

At its core JavaScript is an imperative language - it just happens to also have first class functions and supports closures which can be leveraged when practicing a functional style.

And given that TypeScript keeps coming up, it's been my observation that TypeScript is much less conducive to enabling a functional style than JavaScript - to the point that it could be argued that TypeScript is the "wrong tool for the job" to support a statically typed functional style. I know of fp-ts but you have to work much harder in TypeScript to practice a functional style compared to an object style. But that's not the fault of the "functional style" but a result of TypeScript being streamlined for OO style typing.

That "ugliness" exists for illustration purposes - being explicit about the bound values being functions and the manner in which those functions are being composed.

That argument keeps coming up. You can still set a breakpoint at any function declaration and you can run into similar problems with dynamically assembled objects.

One could just as easily argue that it is disappointing that

I think the "hard to debug" argument has even more of a negative impact when it comes to adoption of streams which could potentially simplify UI architecture or perhaps enable some alternate approaches.

The code isn't imperative, the style is. Even Haskell has the do notation ("Haskell is the world’s finest imperative programming language").

Some people find it more intention-revealing.

The functions are organized in the run-time sequence of the composed function so that it's clear what the transform will do. The {ok/err} type implements the necessary Railway-Oriented Programming (via andThen and map).

To me that sample code seems influenced by Go:

"It must be familiar, roughly C-like. Programmers working at Google are early in their careers and are most familiar with procedural languages, particularly from the C family. The need to get programmers productive quickly in a new language means that the language cannot be too radical."

There's anecdotal evidence that something like How to Design Programs (HtDP 2e) may be a better first exposure to programming: “It’s mind boggling that your HtDP students are better C++ problem solvers than people who went through the C++ course already”.

The Structure and Interpretation of the Computer Science Curriculum

Well this is going deeper and deeper into rabbit hole.

The only thing I am trying to do is point out what sort of things work and what don't work in JavaScript (or TypeScript). Meanwhile you're pointing to a lot of deep topics that I don't necessary find that relevant.

I do not stand against using functional languages and transpiling it to js. If someone wants functional programming - this is what I would suggest.

And I tell that things not work not because I am unfamiliar. So far every single topic that you mentioned either I tried my self or received in codebase that I had to work with and I suffered. I also coded bit in scala, elixir and practiced solving problems in functional manner thus functional concepts are not new to me.

The fact, for example, that railway programming exists does not mean that it's a good technique in JavaScript (most often it isn't). Even for F#, the same author that you shared wrote a more skeptical piece about it six years later.

Regarding discrediting the use of debuggers: some people don't use debuggers. That does not change the fact that for a lot of people it's a time-saving tool in their everyday job. I learned to use a debugger many years after I started coding, so to me personally it's less important, yet in multiple cases it was still a very time-saving tool. But I have also met people who learned to use it from the very first days they started learning to code, and I don't think that telling them that they should now stop using the debugger and learn "the way real men are programming", and sending them a link to what Linus Torvalds thinks of debuggers, is going to help them do their job better. What will make things better is code that is easy to debug, straightforward and uses only the minimal amount of concepts necessary to solve the problem.

But the argument about being able to use a debugger often touches on other aspects. Usually if it's hard to use a debugger you also have muffled tracebacks and an application flow that is very hard to follow. Thus it's a very good idea to stay aware of things that break debugging and tracebacks.

On the topic of tracebacks, I will bring up the case of currying again. Here is an example with the curried version

If stuff breaks in `onHandle`, the `render` here is not in the traceback despite it being responsible for wiring things up (binding `something` to the handler).

The same handler might have been used in 100 other places. And without the traceback pointing to the above it will be hard to find.

And it is so easy to make things much better

now if `onHandle` fails, the `render` function is in the traceback and you immediately know what exactly failed.

When you get an email with an error and a traceback for a new bug in production, this very simple thing could change the situation from not having any idea where to start to knowing exactly what went wrong.
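A minimal sketch of the difference, runnable in Node.js. Apart from `onHandle`, all names (`curriedOnHandle`, `renderCurried`, `renderWrapped`, `stackOfClick`) are hypothetical stand-ins, the `button` is a plain object rather than a DOM element, and `error.stack` is a non-standard but widely supported property. Strictly speaking, the frame that shows up is the wrapper created inside the render function rather than the render function itself, which is usually enough to locate the wiring.

```javascript
// Hypothetical handler that is shared by many call sites.
function onHandle(something, event) {
  throw new Error(`cannot handle ${something} for ${event.type}`);
}

// Curried variant: the function that runs on click is created in here,
// far away from whichever render function requested it.
const curriedOnHandle = (something) =>
  function curriedInner(event) {
    return onHandle(something, event);
  };

// Wiring 1: render only calls the curried factory and is long gone on click.
function renderCurried(button) {
  button.onClick = curriedOnHandle('save');
}

// Wiring 2: render creates its own named wrapper around the handler.
function renderWrapped(button) {
  button.onClick = function renderWrappedOnClick(event) {
    return onHandle('save', event);
  };
}

// Simulates a click on a fake button and returns the resulting stack trace.
function stackOfClick(render) {
  const button = {};
  render(button);
  try {
    button.onClick({ type: 'click' });
  } catch (error) {
    return error.stack;
  }
  return '';
}

const curriedStack = stackOfClick(renderCurried);
const wrappedStack = stackOfClick(renderWrapped);

// The curried trace only mentions curriedInner and onHandle.
console.log(curriedStack.includes('renderCurried')); // false
// The wrapped trace names the wrapper that lives inside renderWrapped.
console.log(wrappedStack.includes('renderWrappedOnClick')); // true
```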

You also mentioned a piece about unfamiliarity bias. It's still never a good idea to say to anyone "maybe you find this hard because you are unfamiliar". Instead you could nudge them in the right direction and they might realize that they have been lacking familiarity. It could very well turn out that you yourself were the one unfamiliar with something. Thus it's just good conversation tactics to keep it to yourself even if you think that way and see where things go.

It started with your initial statement:

leading up to

Polymorphic code in OO has exactly the same problem - and if you're unlucky, even if you locate the implementing class, you may still have to drill through several layers of implementation inheritance to find the code that is actually running - i.e. it's the type of code where even with a debugger you wonder - how did I even get here? And even if the polymorphic type on the parameter is declared the implementing type doesn't have to declare it due to structural typing in TypeScript (because of duck typing in JavaScript).

In the end functional composition or more specifically composing closures works just fine in JavaScript - and really isn't that different from composing objects. The difference is that closures are implicit while objects are explicit - which can make closures more difficult to grasp initially but objects can be more verbose.

The point of the original article was to contrast OO vs functional style.

The functional approach focuses on values and how you transform the values you have into the ones you need. The building blocks are functions and their behaviour can be composed through higher order functions.

Object-orientation is based on partitioning the solution in terms of Class-Responsibility-Collaborator and using commonality and variability analysis to identify opportunities for polymorphism.

Which approach sounds simpler now? It's this type of comparison that leads to statements like:

"OO makes code understandable by encapsulating moving parts. FP makes code understandable by minimizing moving parts."

Michael Feathers

Another relevant sound bite:

"GOTO was evil because we asked, 'how did I get to this point of execution?' Mutability leaves us with, 'how did I get to this state?'"

Jessica Kerr

Eliminating mutability in JavaScript is unreasonable but it makes sense to try to find approaches to "use mutability responsibly" whether you are using objects or closures. Both mutable objects and closures should be used with care.

Object Thinking, Functional Thinking, and Reactive Thinking are very different, each useful in their own way.

The point is that there are things that work in JavaScript that don't sit well with TypeScript as its design is much more OO centric than JavaScript's. When you use TypeScript you are effectively choosing OO - with a functional style you are constantly fighting an "impedance mismatch".

While TypeScript is a superset from a feature support perspective, it becomes a subset once one clamps down on its flavour of type checking.

JavaScript has exceptions and they exist for a reason. But there are always "expected errors" and those shouldn't be handled via exceptions. The whole idea is that `map` and the like remove the need for those noisy `if(error) return [null, error]` statements all through the application code - if that isn't an acceptable tradeoff then use something else.

Given an approach with promise and merit:

That's the trap that "hard to debug" is a part of.

Meanwhile once there is enough tooling even mediocre solutions can gain momentum. TypeScript was languishing until VS Code came along.

That behaviour is common for any function that creates a function. Your second example creates a closure inside of `render()` so `onHandle` will appear in the stack trace.

So if that stack trace is important to you create the intermediate closure:

It's been my experience that people tend to view "difficulty" as something that is inherent to a subject - not something that derives from their own experience. The question "Does this only seem difficult simply because I haven't done this before?" isn't asked nearly enough.

IMHO this whole juxtaposition of OOP and functional ideologies doesn't really make much sense to me.

My impression was that the article expressed a similar idea - that you can use OO and functional techniques when it makes sense.

When I say that I prefer class-based composition over higher-order-function based composition, I do not mean that I support the OOP paradigm and reject the functional paradigm.

I prefer classes because they work as anchor points for documentation, by providing a referable name that can be used with TypeScript or without TypeScript (with jsdoc) and thus making code easier to understand and navigate. Higher-order-functions are lacking this. If someone can figure this part out for higher-order-functions then I would happily use it.

The only takeaway I can draw here is that both things are equally important

Focusing only on 1 will lead to problems.

Some concepts that you bring up I don't see any reason to classify as "functional" or "OOP". For example railway programming - it's a technique for error handling. There is nothing about it that makes it "functional". It plays nicely if pattern matching is supported as a first-class citizen. But one can also implement this concept with OO. Whether it's practical to do so is another question. I'd lean towards saying that "everything has a known error" is an incorrect assumption - only on application boundaries are there known errors (data inputs, networking); within the application there usually are no errors - and if there are, then maybe it's time to review the architecture.
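For reference, a minimal sketch of what railway-style error handling can look like in plain JavaScript. The result shape and the helper names (`ok`, `fail`, `map`, `chain`, `parseAge`, `checkAdult`, `describeAge`) are assumptions made for illustration and are not taken from any library:

```javascript
// A result is either { ok: true, value } or { ok: false, error } (assumed shape).
const ok = (value) => ({ ok: true, value });
const fail = (error) => ({ ok: false, error });

// map applies fn to a successful result; a failure passes through untouched.
const map = (fn) => (result) => (result.ok ? ok(fn(result.value)) : result);

// chain is for steps that can fail themselves and already return a result.
const chain = (fn) => (result) => (result.ok ? fn(result.value) : result);

// Two steps with "expected errors" at the application boundary.
const parseAge = (text) => {
  const age = Number.parseInt(text, 10);
  return Number.isNaN(age) ? fail(`not a number: ${text}`) : ok(age);
};
const checkAdult = (age) => (age >= 18 ? ok(age) : fail(`too young: ${age}`));

// No if(error) checks between the steps; the first failure short-circuits.
const describeAge = (text) =>
  map((age) => `age ${age} accepted`)(chain(checkAdult)(parseAge(text)));

console.log(describeAge('42'));  // { ok: true, value: 'age 42 accepted' }
console.log(describeAge('7'));   // { ok: false, error: 'too young: 7' }
console.log(describeAge('abc')); // { ok: false, error: 'not a number: abc' }
```

The same two-track idea could be expressed with a small class hierarchy instead of plain objects and functions; the sketch only shows the function-based form.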

I don't think highly polymorphic code is that normal. It's often a case of inheritance abuse. And the more layers you have, the stronger my point gets, because there is no reason why a higher-order-function based solution would have fewer layers than a class-based solution, and at every single layer the class-based code is easier to navigate.

How anyone could consider JavaScript "functional" is something I don't quite understand. It came out in the same decade as Python and Ruby and all 3 have a lot in common. All 3 implement closures very similarly - much like it was done in Smalltalk, a precursor to all dynamic OO languages. All have an OO model at their core. Even a Function in JavaScript is a callable object - same as in Python by the way (`(function(){}) instanceof Object => true`).

I can't think of a good example of what works well with JS but not TypeScript, thus I'm not entirely sure what you're pointing to.

I believe the reason why some piece of code won't work with TypeScript is not because it's not OO but because it's "too dynamic".

But I think this sort of code tends to not work well not only with TypeScript but also with the rest of the JS ecosystem (JS runtime engines, JSDoc, linters).

It's never enough to bring in a concept without solving the tooling problem. Thinking about tooling first also ensures that the concept will play well with tooling. Some concepts make tooling practically impossible to build.

TypeScript was built with tooling in mind from its beginning - from the first days it came with a language server that integrates seamlessly into any IDE. The TypeScript language server became the best language server available for JavaScript even if one is not using TypeScript. VS Code also became the biggest open-source project in the JavaScript ecosystem, and thus it showcased how to solve the scalability problems of JavaScript - with TypeScript. I totally agree that tooling will affect how solutions are created. In this case not exactly because of VS Code, but because of the TypeScript language server. The capability to produce tools always has dictated and always will dictate what approach is taken, not a purely theoretical background. We might like it or not, but it's simply a fact. This is true in every engineering field. When you write code for your own personal project you can explore anything you want. When you write code to serve a business together with many other practicing developers, your goal is no longer to explore the solution space but to produce a solution that is well accepted in the industry - and that means a solution that plays well with existing tools, not hypothetical tools.

The article uses "vs" and supplies two distinct implementations (though the first example is largely procedural). To highlight differences it's typical to take the examples to the extreme.

Of course there is a range of possible solutions between both extremes.

You seem to prefer leaning toward the OO end of the spectrum.

My contention is that JavaScript naturally tends more towards the function-based end (not necessarily to the extreme of my "function-style" example).

In the above example `Op2Args` is a referable name - but from your past comments you don't like this because at the definition site of `f` it isn't obvious that either `add`, `sub` or `mult` might be used.

As stated "minimizing moving parts" has priority (as long as all the tradeoffs are acceptable) over "encapsulating moving parts". Without minimizing the moving parts first, "accidental complexity" tends to be entombed into the encapsulation. But that is true of any approach.

is considered functional because "mapping a function over a type" is extremely common in functional programming - in an OOP without first class functions you would have to "map an object over another object" where the former has to implement a method (i.e. a single method interface) the latter is requiring - which is way more convoluted.

Polymorphism is everywhere.

i.e. appendChild will append anything that implements the Node interface - that could be a lot of different kinds of objects.

In the example above `Op2Args` makes `f` polymorphic.

React uses React.Component so that it can treat all your components in an identical manner - that is polymorphism in action.

Guido van Rossum is anti-functional - lambda almost didn't make the cut.

Closures were devised by Peter Landin in 1964 as described in "The Mechanical Evaluation of Expressions" (lambda calculus). That is why closures are considered a "functional" feature - regardless of where they may have been adopted later.

If you were talking about TypeScript I would agree - it's opinionated towards class-based OO. But in the case of JavaScript I disagree and I've elaborated on that here.

While being essentially imperative the core building block of JavaScript is the function. Multiple functions can share state through a shared closure so that these functions may act as methods. That is essentially how OOP with functions works.
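A minimal sketch of functions acting as methods through a shared closure; `createCounter` and its members are hypothetical names chosen for illustration:

```javascript
// Factory function: no class, no prototype setup, no `this`.
function createCounter(start = 0) {
  let count = start; // state shared by the functions below via the closure

  const increment = () => {
    count += 1;
    return count;
  };
  const current = () => count;

  // The returned plain object only bundles the functions together.
  return { increment, current };
}

const counter = createCounter(10);

// Detaching a function is safe because nothing relies on `this`.
const { increment } = counter;
increment();
increment();

console.log(counter.current()); // 12
console.log(counter.count);     // undefined, the state is not reachable from outside
```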

The object - in an "object-oriented" (but not class-based) sense - is an emergent concept via the function context - `this` giving a function the capability to access other values on the "object" the function was being referenced through.

The prototype chain then makes it possible to share a function across object instances (to save memory). So conceptually object instances sharing the same function across the prototype chain belong to the same "class" - but there is no class construct, only object instances.
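A minimal sketch of both ideas, using hypothetical names (`describeVehicle`, `vehiclePrototype`, `car`, `bike`): one function reads its data through `this`, and two plain objects share it through the prototype chain without any class being declared.

```javascript
// A standalone function that reaches the rest of the "object" through `this`.
function describeVehicle() {
  return `${this.name} has ${this.wheels} wheels`;
}

// The function is stored once, on an object that will serve as prototype.
const vehiclePrototype = { describe: describeVehicle };

// Plain objects linked to that prototype; they only hold their own data.
const car = Object.assign(Object.create(vehiclePrototype), { name: 'car', wheels: 4 });
const bike = Object.assign(Object.create(vehiclePrototype), { name: 'bike', wheels: 2 });

console.log(car.describe());                 // car has 4 wheels
console.log(bike.describe());                // bike has 2 wheels
console.log(car.describe === bike.describe); // true, one shared function
console.log(Object.keys(car));               // [ 'name', 'wheels' ]

// The same function works on any context handed to it explicitly.
console.log(describeVehicle.call({ name: 'trike', wheels: 3 })); // trike has 3 wheels
```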

Given the function first nature of JavaScript, OO workarounds like the Command Pattern (as in Replace Conditional Dispatcher with Command) aren't necessary - just pass a function with its attached closure.
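A minimal sketch of that idea, with hypothetical names (`createHandlers`, `dispatch`): where the Command Pattern would use one class per action, each action here is a function whose state lives in its closure.

```javascript
// Each "command" is just a function; the log it writes to is captured
// in the closure instead of being stored on a command object.
function createHandlers(log) {
  return new Map([
    ['greet', (name) => log.push(`hello ${name}`)],
    ['leave', (name) => log.push(`goodbye ${name}`)],
  ]);
}

const log = [];
const handlers = createHandlers(log);

// The dispatcher looks up a function instead of walking a conditional.
function dispatch(action, argument) {
  const handler = handlers.get(action);
  if (handler === undefined) {
    throw new Error(`unknown action: ${action}`);
  }
  return handler(argument);
}

dispatch('greet', 'Ada');
dispatch('leave', 'Ada');
console.log(log); // [ 'hello Ada', 'goodbye Ada' ]
```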

I'm referring to the lopsided "typing tax" that TypeScript imposes on "function-style" vs "class-style" (or "procedural-style") code that you yourself already commented on. If one persists on using function and closure based approaches in TypeScript one quickly finds oneself knee deep in noisy type definitions expressed in TypeScript's typing meta language. Functional languages already devised concise ways of expressing function types.

With "class-style" code you can coast on rudimentary and terse typing features for a long time before you ever have to dive into the advanced types. But making it easy to work with function and closure types wasn't a priority - despite the fact that these are core JavaScript features.

Going by the mantra "make doing the right things easy and the wrong things hard" by focusing on making "class-style" code easier to type, TypeScript reinforces the idea that "class-based object-orientation" is the "right thing" while "function-style" is the wrong thing.

TypeScript admits as much:

In 2008 Douglas Crockford wrote:

"TypeScript the Good Parts" focuses on "class-based object-orientation" much the same way that "JavaScript the Good Parts" doesn't. In a strange twist TypeScript has become that other language that most people would rather be using so that they don't have to bother learning JavaScript first.

Oliver Steele made an interesting distinction between "Language Mavens" and "Tool Mavens" in IDE divide - he comes to the conclusion that tool-orientation comes at the cost of language features.

Ironically Java has been continually ridiculed for its need of tooling to "keep developers productive" in the face of all the language's and ecosystem's warts. Meanwhile it's perfectly acceptable for the members of the JavaScript community to employ tool heavy build pipelines (editors requiring language servers) and constantly clamour for "better tools".

I have read JavaScript the Good Parts. I haven't yet had a chance to read the similarly named one on TypeScript.

On functions returning objects through closures as a means of OO: there are benefits and weaknesses compared to the class (old prototype-based) approach.

I think it's not a good approach. I think Crockford originally didn't take all the important aspects into consideration.

It's usable in isolated cases but I believe it was never practical to accept this as the standard way to move forward with OO in JS.

I also believe that even before the introduction of `class` it was never the mainstream approach. The approach that was always considered "correct" was prototypal OO (check examples from influential JS libraries such as Dojo, Prototype, jQuery v1 - most common are custom class builders, due to the verbosity of using the prototype directly, or direct use of the prototype).

And due to the multitude of different approaches to doing OO in JS, there was a need for a standard. `class` was a straightforward thing that is widely understood and played perfectly well with JS prototypes.

The approach of doing OO through means of closures comes with some benefits over prototypal/class based approach

This can be worked without much difficulty in 'class' and it was already proven.

Regarding the private attributes: `#privateMember`

Regarding the `this` binding problem: `onClick=e => myObject.onClick(e)` solves this

I consider passing a method from an object as a function to always be a weak practice. If one wants to pass a function, this is an excellent use case for an arrow function. Always create a new arrow function if you want to pass a function. Arrow functions are the link between functional and OO approaches. Using a method without its object (as in passing it as a function) doesn't fit the core OO idea of passing messages to an object.
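A minimal sketch of that binding problem and the arrow-function fix; the `Toggle` class and the fake event object are hypothetical, only the `onClick` wrapper follows the expression quoted above:

```javascript
class Toggle {
  constructor() {
    this.on = false;
  }

  onClick() {
    this.on = !this.on;
    return this.on;
  }
}

const myObject = new Toggle();

// Passing the bare method detaches it from its object: class bodies run in
// strict mode, so `this` is undefined inside the call and the access throws.
const detached = myObject.onClick;
let detachedFailed = false;
try {
  detached();
} catch (error) {
  detachedFailed = error instanceof TypeError;
}

// Wrapping the call in an arrow function keeps the message going to the object.
const onClick = (e) => myObject.onClick(e);
onClick({ type: 'click' });

console.log(detachedFailed); // true
console.log(myObject.on);    // true
```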

The weak points of this approach to OO were, I think, much harder to iron out. It simply didn't play nicely with underlying language concepts that existed from the first days of JavaScript (prototypes). Problems of this approach:

x' in ReScript)

`const x = MyObject(); x instanceof MyObject`, the keyword `instanceof` just no longer makes any sense; it's hard to move forward with a technique that doesn't play well with certain first-class language features already in existence

I understand the point of not using classes in favor of doing pure functional development in JS. I completely miss the point of trying to use functions for OO where classes are available to implement OO.
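The `instanceof` objection can be seen in a few lines. This is a minimal sketch in which `MyObject` is a hypothetical closure-based factory and `MyClass` a hypothetical class counterpart:

```javascript
// Closure-based factory: returns a plain object, the state stays in the closure.
function MyObject() {
  let clicks = 0;
  return {
    click: () => {
      clicks += 1;
      return clicks;
    },
  };
}

// Class-based counterpart with the same behaviour.
class MyClass {
  constructor() {
    this.clicks = 0;
  }

  click() {
    this.clicks += 1;
    return this.clicks;
  }
}

const x = MyObject();
const y = new MyClass();

// The factory result is linked to Object.prototype, not MyObject.prototype.
console.log(x instanceof MyObject); // false
console.log(y instanceof MyClass);  // true

// With factories only a structural ("duck typing") check remains.
console.log(typeof x.click === 'function'); // true
```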

Here is a case from 2009 that argued against the functional pattern proposed by Douglas Crockford: bolinfest.com/javascript/inheritan...

Many of the arguments are Closure Compiler related. Of course, for purists, arguments about a specific tool might not matter. But consider that the job of developers is to produce working software. If you had to write a bigger piece of code in JS, then Closure Compiler was the tool to go with; without it, it would be either impossible or you'd need to invent your own tools.

There isn't one.

But when Microsoft states "so that the programmers at Microsoft could bring traditional object-oriented programs to the web" they are talking about C# style class-based object-orientation.

TypeScript was designed with a good class-based OO development experience in mind - sacrificing the ease of other approaches that may be equally valid under JavaScript (which claims to be multi-paradigm).

You are correct. Because mainstream OO is "class-based". "OOP with closures" is only about objects, not classes.

I would argue that the mainstream didn't even accept "prototypal OO" as correct given that there is no explicit class mechanism and the confusion that it caused up to and including ES5. But it certainly is possible to emulate class-based OO with prototypal OO. Strictly speaking membership to a class should last for the lifetime of the object. JavaScript objects can be augmented after construction - so "class membership" isn't fixed.

The important aspect was that it aligned with the mainstream mindset of class-based object-orientation. However the code before ES2015 wasn't straightforward.

You missed:

`new` to instantiate an object (useful if instances are cached);

They landed in Chrome 74 but as such the proposal has been stuck at stage 3 since 2017 - it didn't get into ES2020; maybe it will be part of ES2021 (ES2016 is ECMA-262 7ᵗʰ Edition).

The problem with `this` has more to do with developers from other languages not understanding how it works - that it is a deliberate decision not to bind the function to the object.

`'x` is simply a type variable just like `T` is a type variable in `Op2Args<T>`. And the object returned by the factory still has a structural type.

`instanceof` can be a great escape hatch but the whole point of polymorphism is that the object should know what to do without the type needing to be known first (Replace Conditional with Polymorphism).

There isn't just one kind of object-orientation. You are correct that the mainstream assumes "class-based object-orientation" when OO is mentioned; really COP - class-oriented programming would have been a better name (the code is declaring classes, not assembling objects).

"OOP with closures" doesn't seek to emulate "class-based object-orientation". Without inheritance, composition is the only option which leads to a simpler style of object-orientation. Also the notion isn't that "closures are like classes" but that "closures are like objects".

Once "closures are like objects" sinks in, it should become apparent that there are situations where closures can be more succinct than objects (created by a class).

Also consider that in 2008 ES5 wasn't even finalized yet - `class` wasn't official until 2015.

Which one is easier to understand

Or this one?

Not everybody has Google size problems - and the tradeoffs of the closure-based approach are known.

Clearly in a project using the Closure compiler one would stick to the recommended coding practices. But when in the past I had a look at the Closure library it struck me that it was organized to appeal to Java programmers - so it's not that surprising that the compiler would favour the pseudo-classical approach (apart from being more optimizable).

In any case I'm not recommending ignoring `class` - just to be familiar with the closure based approach; it does exist in the wild and in some situations it could come in handy.

TL;DNR

While JavaScript is an imperative language (i.e. it isn't a functional programming language) I think it's fair to call it "Function-Oriented".

From that perspective mastering functions and closures is an essential part of developing JavaScript competence.

While "class-based object-orientation" goes back to Simula (1962) and Smalltalk (1972) it largely became mainstream due to languages like Java (1995) and C# (2000). While both Java and C# have gained a number of features that aren't directly related to classes over the years, it is probably accurate to say that their fundamental base unit of composition is a class instance, i.e. an object.

The same isn't true for JavaScript. The base unit of composition is a function and its stateful counterpart the closure. On a fundamental level object literals are simply associative arrays where the keys are limited to Strings and Symbols while the value can be of any type (in modern JavaScript Map fits the use case of an associative array much better). The notion of an "object with methods" emerges when functions are stored as values in a plain object (the prototype chain largely exists to help reuse functions across multiple objects).
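A minimal sketch of those points, with hypothetical variable names: plain-object keys end up as strings (or symbols), a `Map` keeps the key as given, and a "method" appears as soon as a function is stored as a value.

```javascript
// Plain object: non-symbol keys are converted to strings.
const plain = {};
plain[1] = 'set with number 1';
plain['1'] = 'set with string "1"'; // same key, overwrites the line above
const tag = Symbol('tag');
plain[tag] = 'set with a symbol';

console.log(Object.keys(plain)); // [ '1' ] (symbol keys are not listed)
console.log(plain[1]);           // set with string "1"
console.log(plain[tag]);         // set with a symbol

// Map: keys keep their type, and objects can be keys too.
const lookup = new Map();
lookup.set(1, 'set with number 1');
lookup.set('1', 'set with string "1"');
const objectKey = { id: 1 };
lookup.set(objectKey, 'set with an object');

console.log(lookup.size);   // 3
console.log(lookup.get(1)); // set with number 1

// Storing a function as a value is all it takes to get an "object with methods".
plain.countKeys = function () {
  return Object.keys(this).length;
};
console.log(plain.countKeys()); // 2 ('1' and 'countKeys')
```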

While in Java and C# classes and class instances (objects) are atomic units of composition, in JavaScript an object in the object-oriented sense is an aggregate of a plain object and functions as values.

Personally the mental model of an object as an aggregate clarified the role of the function context `this` immensely. `this` is a special reference that gives a function access to some "other data". If a function is meant to act as a method (is accessed via an object reference) it needs to use `this` to refer to the rest of the "object". But `this` can also be used to pass any other context with call (or apply) and bind can be used to create another function with a "bound" `this` (an arrow function's `this` is automatically bound to the scope it's defined in). But a function is also free to ignore `this` entirely.

In my judgement the introduction of the module first as a pattern and then as a language feature is more important than the adoption of the `class` syntax sugar. It's possible to create a well structured code base with just modules, functions and plain objects.

In fact during the ES5 years (2009-2015) an alternate model of object-orientation not based on constructor functions or classes but instead based on object factories emerged (OOP with Functions in JavaScript). A factory creates a plain object holding functions that are linked to "object state" not via `this` but through the shared closure that created the functions. It's an approach well worth being familiar with in order to get better acquainted with closures.

So in JavaScript object-orientation isn't class-based (`class` is more of a creational convention) and the base unit of composition is the function (and plain objects). Objects compose as well and objects can compose with functions and closures. As a result objects (and more so classes) aren't always the "go-to" building block in JavaScript - functions and closures often play the role that small classes do in mainstream OO languages.

Aside: There are some opinions that Closure components are a more obvious solution than hooks for stateful functional components (Hooks reimagined, Preact Composition API).

Functional programming is primarily about composing functions to transform a value.

So I might write the "functional-style" version as:

Edit: It's useful to remember Master Qc Na's lessons:

Using a closure

versus using an object

`composition` and `pipe` could be replaced with:

or

IIFE (Immediately Invoked Function Expression)

Great article, thanks! It would be great if you could write more similar articles and an insight on when to choose a paradigm over another.

Thanks for the awesome feedback. I'm currently writing a 3 part beginners series on React. The first part is already out here.

After that I wanted to do a vanilla JS article again 😁