Recently, I had a conversation with a friend who, along with a few kind words about my writing, advised me to take up pure philosophy. It wouldn't be so bad if I didn't completely agree with him. These few texts about different ways of looking at programming really do fall into that category. I have been circling around the topic for some time now, and I'm not quite sure how to tell you the truth. I believe that understanding the basics of what we are trying to achieve is the best foundation for learning to program. I haven't talked about time yet, the thing that will give you the biggest headaches while trying to understand my text, but honestly, programming is an endless struggle with the concept of time and with what time means to a microcontroller. Perhaps for that reason, I can already sense that within a few sentences I will make plenty of enemies who will tell you, in one form or another, that I'm writing complete nonsense. Of course, this text is read not only by beginners but also by people with a few initials in front of their names, like "Jean-Claude Van Damme". But regardless of the opinions of everyone else who is trying to teach you programming, I will open Pandora's box and release the biggest delusions in programming.

Among other things, I was criticized for not writing program examples that would introduce beginners to programming. What program examples? For 20 years, while reading texts about programming, we've been spinning in a chaos of lies and false beliefs. What should I do now? Walk the same path as everyone before me? I really don't want to. On the other hand, when it comes to programming microcontrollers, 99% of the "normal" people we meet immediately lump us together with all other programmers. I often hear that the neighbor's kid is also a programmer, just like me, but is currently in Tehran delivering 70,000 dinosaur eggs from 60 million years ago... I have long since lost the desire to say that I'm a programmer. "Normal" people don't know exactly what that means, and if I named a subset of programming like "microcontrollers", everyone would put me in the "Walking Weirdo" category. For several years now, I've been saying that I'm an electrician, just to avoid the misunderstanding around the word "programmer". A person who designs websites or sets up a CNC machine is also a programmer, even though their job has nothing in common with the problems we deal with. What is special about programming microcontrollers is the close collaboration between the program we write, the microcontroller hardware, and the surrounding electronics. A "Windows" programmer who writes applications for a personal computer never has to bring these three together. He has no contact with electronics, and it certainly never occurs to him to solder a 100 nF capacitor onto his computer's processor. His hardware is fixed and cannot be changed, so all he can do is write software.

If we go back to our microcontrollers, the hardware is not fixed. We are the ones who decide what every pin of the microcontroller does. We must connect it to an LCD, buttons, sensors... And to top it all off, we are the ones who work directly with the hardware and architecture of the microcontroller. When I say "architecture", I mean AVR, and that is what I want to teach you. Knowing at least one architecture well is incredibly valuable knowledge, and it gives you what you need to program any other microcontroller.

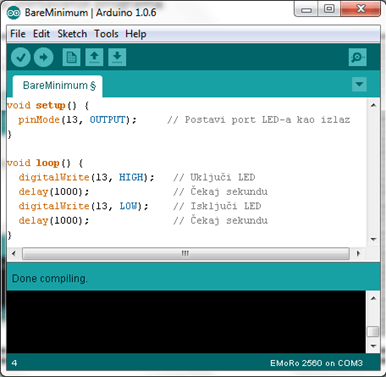

Everything you have learned about programming while growing up is the biggest possible lie. For years, we have trained your minds to think logically, but we do it in exactly the wrong way. We know very well that we are teaching you wrongly, but we cannot find a better solution. It is not the teacher's fault; it comes from the way we perceive and experience the world around us. We are used to thinking procedurally and programming the way we experience the world, and in all that chaos we never ask ourselves how the microcontroller experiences the world. The first program we always have you write is "Blink", turning an LED on and off, and we trick you immediately. Our intuition tells us to turn on the LED, wait for 1 second, turn it off, wait for 1 second, and repeat. That is exactly the mistake the classic Arduino IDE Blink sketch makes: digitalWrite() the LED high, delay(1000), digitalWrite() it low, delay(1000), and start over.
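To see what the waiting costs, here is a minimal host-side sketch in C (not Arduino code) of the intuitive on, wait, off, wait loop. The names `led_write()`, `delay_ms()`, `sim_now_ms` and `blocking_blink()` are hypothetical stand-ins for the real pin and delay functions, assumed here so the behavior can be run and counted on a PC.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical host-side stand-ins: a simulated millisecond clock and LED. */
static uint32_t sim_now_ms = 0;
static bool sim_led_on = false;

static void led_write(bool on) { sim_led_on = on; }

/* Simulated blocking wait: time passes, but nothing else can run. */
static void delay_ms(uint32_t ms) { sim_now_ms += ms; }

/* The intuitive blink: on, wait 1 s, off, wait 1 s, repeat.
 * Returns how many times the loop body started within total_ms,
 * i.e. how often the program could have done anything else. */
static uint32_t blocking_blink(uint32_t total_ms)
{
    uint32_t passes = 0;
    sim_now_ms = 0;
    while (sim_now_ms < total_ms) {
        passes++;            /* the only moment a button could be checked */
        led_write(true);
        delay_ms(1000);
        led_write(false);
        delay_ms(1000);
    }
    return passes;
}
```

Over 10 simulated seconds the loop body runs only five times; anything else the program should do, such as reading a button, gets a chance only once every two seconds.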

Delay commands are not used in programming, at least not the way virtually every beginner uses them. The greatest power we have is instruction execution speed, and it is directly tied to the concept of time. This is difficult to explain, because the way microcontrollers work is the direct opposite of what we expect. If I cannot explain it to you now, I can show you. Take every program you have ever written and delete all the wait commands such as "Wait", "Delay", and "Sleep". You will see that the program now runs incredibly fast, and that is exactly the kind of program we should be writing.

No one tells you that wait commands are not used, because that leads to a difficult question: "How do we wait for a second if we are not allowed to wait?" The answer is complicated, but trust me: let me guide you through the philosophy a bit longer, and then we will start untangling this truth about programming. I am not saying that programs that use wait commands like "delay" don't do their job. I am saying that no program that uses "delay" to measure real time is written correctly, and that the habit severely limits the programmer's imagination. Time and "delay" will be our enemies in everything this text brings, and for now it is enough that you remember the enemy's name. Don't worry, dear reader, you will understand this when time traps you.
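One widely used answer, not necessarily the one this series will build toward, is to stop waiting and start comparing: keep a free-running millisecond counter and, on every fast pass through the loop, check whether enough time has passed since the LED last changed. The Arduino "Blink Without Delay" example follows this idea with millis(). The sketch below assumes a hypothetical `blinker_t` type and a caller that supplies the current time in milliseconds.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical state for one non-blocking blinking LED. */
typedef struct {
    bool     led_on;
    uint32_t last_toggle_ms;  /* time of the last change */
    uint32_t interval_ms;     /* time between changes */
} blinker_t;

static void blinker_init(blinker_t *b, uint32_t now_ms, uint32_t interval_ms)
{
    b->led_on = false;
    b->last_toggle_ms = now_ms;
    b->interval_ms = interval_ms;
}

/* Call as often as possible with the current time; it never waits. */
static void blinker_update(blinker_t *b, uint32_t now_ms)
{
    /* Unsigned subtraction stays correct when the 32-bit counter wraps. */
    if ((uint32_t)(now_ms - b->last_toggle_ms) >= b->interval_ms) {
        b->led_on = !b->led_on;
        b->last_toggle_ms += b->interval_ms;  /* advance by the period */
    }
}
```

Because `blinker_update()` returns immediately, the surrounding loop can run thousands of times per millisecond and handle buttons, sensors, or a display in between. Advancing `last_toggle_ms` by the interval rather than setting it to `now_ms` keeps the average blink period from drifting when a pass comes slightly late.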

An assembly programmer rides a "devil's" flying broomstick that has almost no brakes. He cannot call "delay(1000);" to wait for one second. The only thing he can do is execute an assembly "NOP" instruction, but a NOP takes a single clock cycle, which at 20 MHz slows the program down by only 50 ns (nanoseconds). He could repeat it over and over until a second has passed, but he cannot afford to waste that much time. We must forget about wait commands and not use them until we learn how to write programs without them. Of course, I don't expect you to understand me now, but once you learn to program without "delay" commands, you will immediately realize what a heavy burden you carried by using them.
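The numbers in this paragraph are easy to check. The sketch assumes, as on AVR, that a NOP takes exactly one clock cycle; the helper names are illustrative.

```c
#include <stdint.h>

/* Length of one clock cycle in nanoseconds (one NOP on AVR). */
static uint32_t ns_per_cycle(uint32_t f_cpu_hz)
{
    return 1000000000u / f_cpu_hz;
}

/* How many single-cycle NOPs it would take to burn `ms` milliseconds. */
static uint64_t nops_for_ms(uint32_t f_cpu_hz, uint32_t ms)
{
    return (uint64_t)f_cpu_hz * ms / 1000u;
}
```

At 20 MHz one second is 20,000,000 cycles. Written out as individual 2-byte NOPs, that would be about 40 MB of code, far more than the 256 KB of FLASH on an ATmega2560, which is why real delay routines loop instead and why burning cycles this way is so expensive.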

When we teach you how to program, we always start from the top of the mountain and build your "delay" way of thinking in programming languages that are not even languages, but graphical flowchart tools where you arrange pictures like "Turn on LED, wait x time, turn off LED...". I will start from the bottom, which is called assembly, just so you don't think that we write fried chicken into the FLASH memory of the microcontroller. What we write there are instructions made of zeros and ones; on AVR, each instruction is 2 or 4 bytes (16 or 32 bits) long. Assembly is a truly perfect programming language because, as they say, it is 1 to 1: each assembly instruction corresponds to exactly one microcontroller instruction stored in FLASH memory. Assembly is nothing but a more readable HEX file. You can find simple proof in the assembly program for turning on the LED published in the previous text.
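To make "instructions made of zeros and ones" concrete, the sketch below encodes one AVR instruction, `LDI Rd, K` (load an 8-bit constant into one of the registers r16 to r31), using the bit layout `1110 KKKK dddd KKKK` from the AVR instruction set manual. The function names are illustrative, not part of any toolchain.

```c
#include <stdint.h>

/* Encode AVR "LDI Rd, K" as its 16-bit opcode: 1110 KKKK dddd KKKK.
 * Rd must be r16..r31; the dddd field stores Rd - 16. */
static uint16_t encode_ldi(uint8_t rd, uint8_t k)
{
    uint16_t d = (uint16_t)((rd - 16u) & 0x0Fu);
    return (uint16_t)(0xE000u
                      | ((uint16_t)(k & 0xF0u) << 4)  /* high nibble of K -> bits 11..8 */
                      | (uint16_t)(d << 4)            /* register number -> bits 7..4 */
                      | (k & 0x0Fu));                 /* low nibble of K -> bits 3..0 */
}

/* AVR stores each 16-bit instruction word in FLASH low byte first. */
static void opcode_to_flash_bytes(uint16_t opcode, uint8_t out[2])
{
    out[0] = (uint8_t)(opcode & 0xFFu);
    out[1] = (uint8_t)(opcode >> 8);
}
```

For `ldi r16, 0xFF` the result is 0xEF0F, and because AVR stores each instruction word low byte first, FLASH (and the hex file) holds the bytes 0F EF.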

Programming languages for microcontrollers can be divided into two groups: assembly and everything else. The LED program for the Arduino MEGA 2560 board shows how powerful assembly is and how small a hex file it produces. Of course it does, since the hex file and the assembly are one and the same, just packaged differently. Now you might be thinking, "I will write in assembly and be the best, fastest, and most perfect." You won't, because you haven't asked me about the cost of programming in assembly. When someone hands us a perfect gift, something must surely smell of great evil. If assembly were that good, no one would use higher-level programming languages like C/C++. In fact, higher-level languages wouldn't even exist, yet here they are, jabbing magic fairy wands into the seat of our pants and forcing us to reconsider which language we want to use with microcontrollers. So far, I have only written about the perfection of assembly, not about its shortcomings, which are the reason we prefer C/C++.
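How does the same code end up "packaged differently"? A .hex file is usually in Intel HEX format: each line is `:LLAAAATT` (byte count, address, record type), followed by the data bytes and a checksum, all written as hexadecimal text. The sketch below, with illustrative function names, builds one data record for the two bytes 0x0F, 0xEF, which are the little-endian encoding of the AVR instruction `ldi r16, 0xFF` (also written `ser r16`).

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Intel HEX checksum: two's complement of the low byte of the sum of
 * every byte in the record (count, address, type and data). */
static uint8_t intel_hex_checksum(const uint8_t *bytes, size_t n)
{
    uint8_t sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum = (uint8_t)(sum + bytes[i]);
    }
    return (uint8_t)(0x100u - sum);
}

/* Format one data record (type 00) as ":LLAAAA00DD...CC".
 * out must hold at least 12 + 2*len characters. Returns the text length. */
static int intel_hex_data_record(char *out, uint16_t addr,
                                 const uint8_t *data, uint8_t len)
{
    uint8_t raw[4 + 255];
    raw[0] = len;
    raw[1] = (uint8_t)(addr >> 8);
    raw[2] = (uint8_t)(addr & 0xFFu);
    raw[3] = 0x00;                      /* record type 00 = data */
    for (uint8_t i = 0; i < len; i++) {
        raw[4 + i] = data[i];
    }
    int pos = sprintf(out, ":");
    for (size_t i = 0; i < (size_t)4 + len; i++) {
        pos += sprintf(out + pos, "%02X", (unsigned)raw[i]);
    }
    pos += sprintf(out + pos, "%02X",
                   (unsigned)intel_hex_checksum(raw, (size_t)4 + len));
    return pos;
}
```

For those two bytes at address 0 the record is `:020000000FEF00`; the checksum happens to be 00 because 02 + 00 + 00 + 00 + 0F + EF sums to exactly 0x100.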

Every microcontroller architecture supports only the instructions it was designed for. Knowing assembly helps you only on the machines whose assembly language you know. You cannot use AVR assembly on other architectures and microcontrollers, and that ties us to a single architecture. For every new architecture, we would have to learn a new assembly language. Architectures differ so much internally that instructions you know from AVR simply do not exist elsewhere; each architecture encodes and executes its instructions in its own way. Assembly is an extremely complicated language, not only because it is tied to one architecture, but also because it is the lowest level of thinking, where even adding two numbers is not simple: you have to think about registers, bytes, and carries. If you wanted to use assembly, you would have to think exactly like the microcontroller, and believe me, that is complicated.
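As an illustration of how low that level is: AVR registers are 8 bits wide, so adding two 16-bit numbers takes two instructions, `ADD` on the low bytes and `ADC` (add with carry) on the high bytes. The C sketch below models that on a PC; `add16_on_8bit()` is a hypothetical name, and the register names in the comments follow avr-gcc's usual choice for the first two 16-bit arguments and are only for orientation.

```c
#include <stdint.h>

/* Host-side model of a 16-bit addition on an 8-bit AVR. */
static uint16_t add16_on_8bit(uint16_t a, uint16_t b)
{
    uint8_t a_lo = (uint8_t)a, a_hi = (uint8_t)(a >> 8);
    uint8_t b_lo = (uint8_t)b, b_hi = (uint8_t)(b >> 8);

    uint16_t lo_sum = (uint16_t)(a_lo + b_lo);          /* ADD r24, r22 (illustrative) */
    uint8_t  carry  = (uint8_t)(lo_sum >> 8);           /* the carry flag C */
    uint8_t  hi_sum = (uint8_t)(a_hi + b_hi + carry);   /* ADC r25, r23 (illustrative) */

    return (uint16_t)(((uint16_t)hi_sum << 8) | (uint8_t)lo_sum);
}
```

In C you simply write `a + b`; the compiler takes care of splitting it into bytes and carrying, which is exactly the kind of thinking assembly forces on you.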

Of course, we needed some help here: a new language that would unify all computers and architectures and simplify complicated assembly. Lo and behold, on the sixth day, higher-level programming languages were created, C/C++ among them. They are called higher-level simply because they are not assembly. They do not belong to the group of languages microcontrollers "think" in, but they are much closer to us programmers and much simpler for us. The C/C++ language is standardized, and the whole world follows its rules. Remember the main() function. Even though the Arduino IDE hides it from you, it is still there: a C/C++ program starts in main(), and the Arduino environment simply supplies one behind the scenes. The beauty of a standardized language like C/C++ is that all programmers must follow the same rules, so once you learn it, you can say you speak at least two languages, one of which is C/C++. Today, you can talk to practically every microcontroller in the world in C/C++, which is why learning it is so important if you want to program microcontrollers.
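Here is a simplified model of that hidden main(). The AVR Arduino core provides a main() that, roughly, initializes the hardware, calls `setup()` once, and then calls `loop()` forever. This host-side sketch is bounded so it can be run and checked; `arduino_style_main()`, `max_loops` and the call counters are hypothetical.

```c
#include <stdint.h>

/* Hypothetical counters so the sketch can be observed on a PC. */
static uint32_t setup_calls = 0;
static uint32_t loop_calls = 0;

static void setup(void) { setup_calls++; }  /* your one-time code */
static void loop(void)  { loop_calls++; }   /* your repeating code */

/* Simplified model of the main() the Arduino IDE hides.
 * Real firmware loops with for (;;) and never returns; max_loops is an
 * illustration-only bound so the model can finish. */
static int arduino_style_main(uint32_t max_loops)
{
    setup();                                  /* runs once */
    for (uint32_t i = 0; i < max_loops; i++) {
        loop();                               /* runs "forever" */
    }
    return 0;
}
```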

Why is the "main()" function so important? Because that is where we drop anchor and move from philosophy to programming. That is where everything starts and ends, where every complete C/C++ program begins, and there is nothing as pure and bright as an empty "main()" function, our starting point for creative work. However, no matter how hard we try to program in higher-level languages, we cannot escape the ugly reality that microcontrollers still execute only machine instructions, those zeros and ones, the hex file that always marks the bottom of our work.

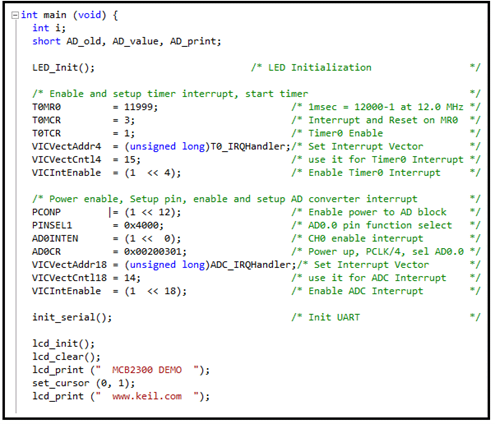

Slowly but surely, we are finally approaching a new source of misery in our lives called the "compiler". By now I have tired you enough for you to know that a program written in a high-level language cannot be loaded into the FLASH memory of a microcontroller as it is. A program in a high-level language like C/C++ is text written for humans, not for the microcontroller.
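As an example of what such text looks like, here is C that turns on the on-board LED of an Arduino MEGA 2560, which is connected to pin PB7 (digital pin 13). On real hardware `DDRB` and `PORTB` come from `<avr/io.h>`; in this host-side sketch they are assumed to be ordinary variables so the code compiles and runs on a PC, and the helper names `led_init()`, `led_on()` and `led_off()` are illustrative.

```c
#include <stdint.h>

/* Host-side stand-ins for the ATmega2560 port B registers. */
static volatile uint8_t DDRB  = 0;   /* data direction: 1 = output */
static volatile uint8_t PORTB = 0;   /* output level:   1 = high   */

#define LED_BIT 7  /* on-board LED on PB7 (Arduino MEGA 2560 pin 13) */

static void led_init(void) { DDRB  |= (uint8_t)(1u << LED_BIT); }   /* make the pin an output */
static void led_on(void)   { PORTB |= (uint8_t)(1u << LED_BIT); }   /* drive it high */
static void led_off(void)  { PORTB &= (uint8_t)~(1u << LED_BIT); }  /* drive it low */
```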

Now every alarm bell in your head should be ringing, because the microcontroller does not understand the language in which we keep whispering what it should do. It needs those zeros and ones, that ugly hex file. A compiler is a program that translates a higher-level programming language into the machine instructions the microcontroller understands. Conveniently, there is a compiler for AVR, a compiler for 8051, a compiler for ARM, a compiler for just about everything. We no longer have to learn every assembly language; it is enough to know C/C++ and let the compiler translate our higher-level thinking into instructions for each specific architecture.
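A rough picture of where that translation ends up: for a statement like `PORTB |= (1 << 7);`, avr-gcc with optimization enabled typically emits the single instruction `sbi 0x05, 7`, because PORTB sits at I/O address 0x05 on the ATmega2560. Turning that mnemonic into bits follows the layout `1001 1010 AAAA Abbb`. The encoder below is an illustrative sketch, not part of any real toolchain.

```c
#include <stdint.h>

/* Encode AVR "SBI A, b": set bit b (0..7) in I/O register A (0..31).
 * Opcode layout: 1001 1010 AAAA Abbb. */
static uint16_t encode_sbi(uint8_t io_addr, uint8_t bit)
{
    return (uint16_t)(0x9A00u
                      | ((io_addr & 0x1Fu) << 3)  /* 5-bit I/O address */
                      | (bit & 0x07u));           /* 3-bit bit number */
}
```

`encode_sbi(0x05, 7)` gives 0x9A2F, the 16 bits that would actually land in FLASH for that one line of C.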

As with everything, there is a price to pay. No compiler is as good as our wisdom and cunning. A compiler does not have our intelligence, so it cannot translate a higher-level language as perfectly as we would write the same program in assembly. For this reason, compiled code is never quite perfect, and we cannot use 100% of the microcontroller's capabilities. However, thanks to a universal language that lets us program every microcontroller, and because the speed, memory, and performance of microcontrollers increase year after year, the sacrifice we make is much smaller than the advantage we gain from higher-level languages such as C/C++.
