Author: Prakarsh & Abhinav Dubey, Devtron Labs (Platinum sponsor, KCD Chennai)

If you are running microservices, there is a high probability that you are running them on a cloud platform such as AWS, GCP, or Azure. The services of these cloud providers are powered by data centers, which typically comprise thousands of interconnected servers and consume a substantial amount of electrical energy. It is estimated that data centers will use anywhere between 3% and 13% of global electricity by 2030 and will be responsible for a similar share of carbon emissions. This post walks through a case study of how to use Kubernetes to minimize the carbon footprint of your organization's infrastructure.

Carbon Footprint

What exactly is a carbon footprint? It is the total amount of greenhouse gases (carbon dioxide, methane, etc.) released into the atmosphere due to human activities. Sounds familiar, doesn't it? We have all read about the different ways greenhouse gases get released into the atmosphere and the need to prevent them. But why are we talking about it in a technical blog? And how can Kubernetes help to reduce your organization's carbon footprint?

In the era of technology, everything is hosted on cloud servers, which are backed by massive data centers. In other words, we can say data centers are the brain of the internet. Right from the servers to storage blocks, everything is present in these data centers.

All of these machines require energy to operate, i.e., electricity, whether it is generated from renewable or nonrenewable sources. According to a survey conducted by Aspen Global Change Institute, data centers are among the direct contributors to climate change because of the greenhouse gases they release. According to an article by Energy Innovation, a data center that contains thousands of IT devices can use around 100 megawatts (MW) of electricity.

How can Kubernetes help reduce the carbon footprint? Before answering that question, let's learn how to calculate the carbon footprint of a server created with a public cloud provider like AWS. In AWS, we create instances of different sizes, computation power, storage capacity, etc., as per our needs. Let's calculate the carbon footprint of an instance of type m5.2xlarge in the region ap-south-1 that runs for 24 hours. We can do this with the Carbon Footprint Estimator for AWS instances. As you can see in the image below, after entering these values, we can easily estimate the carbon footprint for the instance, which is 1,245.7 gCO₂eq.

Factors considered during the calculation

There are primarily two factors that contribute to carbon emissions in the Compute Resource carbon footprint analysis, which are discussed below:

Carbon emissions related to running the instance, including the data center PUE

Carbon emissions from electricity consumption are the major source of the tech industry's carbon footprint. The AWS EC2 Carbon Footprint Dataset is used to calculate carbon emissions based on the wattage drawn at various CPU utilization levels.

Carbon emissions related to manufacturing the underlying hardware

Carbon emissions from the manufacturing of hardware components are another major contributor when it comes to carbon footprint calculations within the scope of this study.
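The two factors above can be combined in a rough sketch. This is a common simplification, not the estimator's actual implementation: operational emissions are the energy drawn by the instance, scaled by the data center PUE and the grid's carbon intensity, and manufacturing emissions are spread evenly over the hours of use. The function name and every input value below are hypothetical placeholders, not figures from the AWS EC2 Carbon Footprint Dataset.

```python
def instance_footprint_g(hours, avg_watts, pue, grid_g_per_kwh, embodied_g_per_hour):
    """Return (operational, embodied, total) emissions in gCO2eq.

    hours               -- how long the instance runs
    avg_watts           -- average power draw of the instance at its CPU load
    pue                 -- data center Power Usage Effectiveness (>= 1.0)
    grid_g_per_kwh      -- carbon intensity of the regional grid, gCO2eq per kWh
    embodied_g_per_hour -- manufacturing emissions amortized per hour of use
    """
    # Energy used by the instance, including data center overhead via PUE.
    energy_kwh = avg_watts / 1000 * hours * pue
    operational = energy_kwh * grid_g_per_kwh
    embodied = embodied_g_per_hour * hours
    return operational, embodied, operational + embodied


# Placeholder inputs for illustration only (assumptions, not measured values).
operational, embodied, total = instance_footprint_g(
    hours=24,
    avg_watts=50.0,
    pue=1.2,
    grid_g_per_kwh=700.0,
    embodied_g_per_hour=5.0,
)
print(f"operational={operational:.1f} g, embodied={embodied:.1f} g, total={total:.1f} g")
```

With real per-instance wattage, PUE, and grid intensity figures, the same structure should approximate what an estimator reports for a given instance type and region.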

How K8s helps

Kubernetes is one of the most widely adopted technologies for running containerized workloads. As per a survey by Portworx, 68% of companies have increased their usage of Kubernetes and IT automation, and adoption keeps growing. For any application to be deployed on Kubernetes, we need to create a Kubernetes cluster that comprises some number of master and worker nodes. These nodes are simply the instances/servers on which all the applications are deployed. The number of nodes increases as the load increases, which in turn adds to the carbon footprint. But with the help of Kubernetes autoscaling, we can lower the node count, i.e., reduce the number of instances to match what the workload actually requires.

Let’s try to understand it with a use case.

A logistics company uses Kubernetes in production to deploy all its microservices. A single microservice requiring around 60 replicas can take up around 20 instances of type m5.2xlarge in the ap-south-1 region. If we calculate the carbon footprint of that single microservice over 24 hours, it comes to around 24,914 gCO₂eq. This is the footprint of just one microservice, and an organization running 24x7 workloads can have thousands of them. Now, if you are wondering how this can be reduced, you are in the right place.

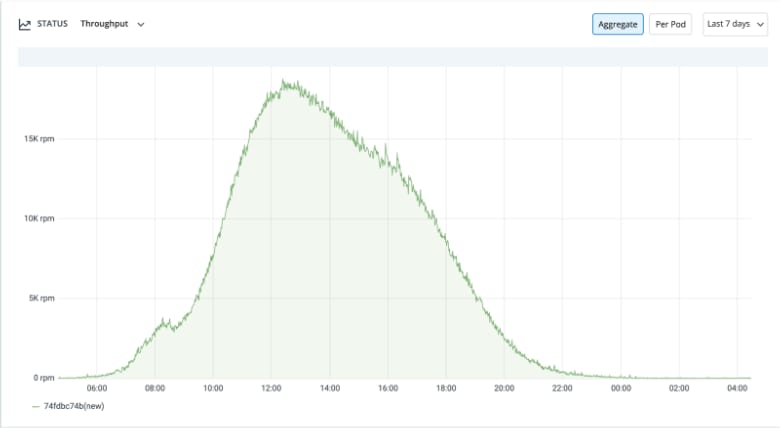

A logistics company's first/last mile app sees its maximum traffic during the daytime, when deliveries happen. Generally, traffic rises steeply from around 8:00 AM and peaks in the afternoon hours. That's when the app has the largest number of pod replicas and nodes/instances. After 8:00 PM, the traffic decreases gradually. During these off-hours, the replica count for each microservice drops from an average of 60 to 6, which requires only 2 nodes/instances. The microservice is scaled with horizontal pod autoscaling driven by throughput metrics, using KEDA.
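To make the scaling behavior concrete, here is a minimal sketch of the basic replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas x current metric / target metric). The actual KEDA setup in the case study is not shown in this post, so the throughput numbers are hypothetical, and the figure of 3 pods per node is only inferred from the 60 replicas on 20 nodes mentioned above.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Basic HPA formula, clamped to the configured replica bounds.

    current_metric and target_metric are per-pod averages (e.g. requests/s).
    """
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


def nodes_needed(replicas, pods_per_node=3):
    """Nodes required for a replica count (3 pods per node is an assumption)."""
    return math.ceil(replicas / pods_per_node)


# Hypothetical off-peak situation: 60 replicas each serving 10 req/s,
# while the target is 100 req/s per pod.
off_peak = desired_replicas(60, current_metric=10, target_metric=100,
                            min_replicas=6, max_replicas=60)
print(off_peak, nodes_needed(off_peak))   # replicas and nodes off-peak
print(60, nodes_needed(60))               # replicas and nodes at peak
```

When the replica count falls, a node autoscaler can then remove the nodes that are no longer needed, which is where the emission savings come from.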

Now consider the carbon emissions of one microservice once it is deployed on Kubernetes with horizontal pod autoscaling. At roughly 51.9 gCO₂eq per node per hour (1,245.7 gCO₂eq / 24 hours), they drop to around

51.9 gCO₂eq x 2 (nodes) x 12 (hrs, 8 PM to 8 AM, non-peak hours) + 51.9 gCO₂eq x 20 (nodes) x 12 (hrs, 8 AM to 8 PM, peak traffic hours) = 13,701.6 gCO₂eq

compared with the figure without autoscaling:

51.9 gCO₂eq x 20 (nodes) x 24 (hrs, no autoscaling) = 24,912 gCO₂eq

That's a whopping reduction of about 11,210 gCO₂eq (45%) in carbon emissions [1], and this is from just one microservice in 24 hours. This translates to roughly 4,092 kgCO₂eq per year! To understand how much this is, a Boeing 747-400 releases about 90 kgCO₂eq per hour of flight, during which it covers around 1,000 km [2].
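The arithmetic can be reproduced with a short sketch. It uses only the figures given in this post (51.9 gCO₂eq per node per hour, 20 nodes at peak, 2 nodes off-peak, 12 hours each); the function and variable names are illustrative.

```python
HOURLY_G_PER_NODE = 51.9  # gCO2eq per m5.2xlarge node per hour, from the post


def daily_emissions_g(schedule):
    """Sum emissions over a list of (node_count, hours) segments."""
    return sum(HOURLY_G_PER_NODE * nodes * hours for nodes, hours in schedule)


baseline = daily_emissions_g([(20, 24)])             # no autoscaling
autoscaled = daily_emissions_g([(2, 12), (20, 12)])  # off-peak + peak
saved_per_day = baseline - autoscaled

print(f"baseline:   {baseline:,.1f} gCO2eq/day")
print(f"autoscaled: {autoscaled:,.1f} gCO2eq/day")
print(f"saved:      {saved_per_day:,.1f} gCO2eq/day "
      f"({saved_per_day / baseline:.0%})")
print(f"per year:   {saved_per_day * 365 / 1000:,.0f} kgCO2eq")
```

Changing the schedule segments, for example to three traffic tiers instead of two, gives a quick estimate for other traffic patterns under the same per-node rate.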

Now think of how much your organization could cut per year by migrating thousands of microservices to Kubernetes paired with efficient autoscaling.

Migrating to Kubernetes?

Kubernetes is the best container orchestration technology out there in the cloud-native market, but its learning curve is still a challenge for organizations looking to migrate to the platform. There are many Kubernetes-specific tools in the marketplace, like ArgoCD and Flux for deployments, Prometheus and Grafana for monitoring, Argo Workflows for creating parallel jobs, etc. The Kubernetes space has many options, but each needs to be configured separately, which again is complex.

That's where the open source tool Devtron can help you. Devtron configures and integrates all these tools that you would otherwise have to configure separately and lets you manage everything from one slick dashboard. Devtron is a software delivery workflow for Kubernetes-based applications. It is trusted by thousands of users and used by organizations like Delhivery, Bharatpe, Livspace, Moglix, etc., with good user communities across the globe. And the best thing is, it gives you a no-code Kubernetes deployment experience, which means there's no need to write Kubernetes YAML at all.

Devtron is running an initiative, #AdoptK8sWithDevtron, which offers expert assistance to the first 100 organizations that want to migrate their microservices to Kubernetes.

References

[1] When compared to microservices that are not configured to scale efficiently.

[2] https://catsr.vse.gmu.edu/SYST460/LectureNotes_AviationEmissions.pdf
