In the previous installment, we established a robust foundation of components that will power our self-hosted on-demand runner infrastructure. These components, including the GitHub App, API Gateway, Lambda functions, SQS, S3, EC2, SSM Parameters, Amazon EventBridge, and CloudWatch, work in concert to provide a scalable and cost-effective solution for GitHub runners.

Now, we'll configure the GitHub App to serve as a nexus between GitHub and AWS, triggering the creation or removal of EC2 instances based on webhook events. This dynamic mechanism ensures that the number of available runners always aligns with the current workload, optimizing resource utilization and costs.

We'll also delve into the practical implementation of this infrastructure using Terraform, an infrastructure as code (IaC) tool that streamlines the provisioning and management of AWS resources. With Terraform, we'll automate the deployment of EC2 instances, VPCs, and IAM roles, ensuring consistent and repeatable infrastructure setups.

Create GitHub App

To begin, navigate to GitHub and establish a new app. Bear in mind that you have the option to create apps for either your organization or a specific user. For the time being, we'll use an organization-level app.

Step 1: Create a GitHub App

- Access GitHub and navigate to the "Settings" section for your organization.

- Select "Developer settings" from the left-hand sidebar.

- Click on "New GitHub App" in the "GitHub Apps" section.

- Provide a name for your app, such as "Self-Hosted Runner App".

- Enter a website URL for your app (GitHub requires this field, but the module does not use it).

- Uncheck the "Enable webhook" option for now, as we will configure this later or create an alternative webhook.

Step 2: Define App Permissions

- Scroll down to the "Permissions" section and define the following permissions for all runners:

- Repository: Actions: Read-only (check for queued jobs)

- Repository: Checks: Read-only (receive events for new builds)

- Repository: Metadata: Read-only (default/required)

- Next, define the following permissions specifically for repo-level runners:

- Repository: Administration: Read & write (to register runner)

- Finally, define the following permissions specifically for organization-level runners:

- Organization: Self-hosted runners: Read & write (to register runner)
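The permission sets above can be summarized in a small lookup, which is handy as a checklist when reviewing the app's settings. This is only a sketch: the function name, the scope values "repo" and "org", and the dictionary keys are illustrative labels chosen here, not identifiers taken from GitHub or from the Terraform module.

```python
from typing import Dict

# Permissions every runner setup needs, as listed in Step 2.
# Keys are illustrative labels, not official GitHub API names.
BASE_PERMISSIONS: Dict[str, str] = {
    "Repository: Actions": "read-only",
    "Repository: Checks": "read-only",
    "Repository: Metadata": "read-only",
}


def required_permissions(scope: str) -> Dict[str, str]:
    """Return the permissions for 'repo' or 'org' level runners."""
    permissions = dict(BASE_PERMISSIONS)
    if scope == "repo":
        # Needed to register a runner on a single repository.
        permissions["Repository: Administration"] = "read & write"
    elif scope == "org":
        # Needed to register a runner at organization level.
        permissions["Organization: Self-hosted runners"] = "read & write"
    else:
        raise ValueError(f"unknown runner scope: {scope!r}")
    return permissions
```

Calling `required_permissions("org")` returns the three shared permissions plus the organization-level one, which matches the setup used in this article.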

Step 3: Save and Note App Details

- Click the "Save" button to finalize the app creation process.

- On the General page, make a note of the "App ID" and "Client ID" parameters. These will be used later in the process.

Step 4: Generate Private Key

- Still on the app's General page, generate a new private key. GitHub downloads it to your machine as a .pem file.

- Save the generated private key as app.private-key.pem. This file will be used later to authenticate the app with GitHub.
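Terraform variables are plain strings, so a multi-line PEM file is often passed in base64-encoded form. Whether the module version you use expects the key that way is an assumption worth checking against its documentation. The helper names below are hypothetical; the sketch only shows the encoding step.

```python
import base64
from pathlib import Path


def encode_pem(pem_bytes: bytes) -> str:
    """Base64-encode raw PEM bytes into a single-line ASCII string."""
    return base64.b64encode(pem_bytes).decode("ascii")


def encode_private_key_file(pem_path: str = "app.private-key.pem") -> str:
    """Read the key file saved in Step 4 and return it base64-encoded."""
    return encode_pem(Path(pem_path).read_bytes())
```

The resulting string can then be supplied to Terraform through a variable or a secrets store instead of committing the key file to the repository.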

Create Infrastructure with Terraform

Prerequisites

The following tools are required to perform this step:

- A GitHub account with an organization or personal access token

- An AWS account with VPC and subnets already created

- Terraform installed and configured on your system

- Node.js and Yarn (for Lambda development)

- Bash shell or compatible shell

Once you have installed and configured all the required tools, you are ready to proceed to the next step, where we will create the necessary AWS resources using Terraform.
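A quick way to confirm the command-line prerequisites is to check that each tool is on your PATH. The sketch below assumes the executables are named terraform, node, yarn, and bash; adjust the list if your system uses different names.

```python
import shutil
from typing import Callable, Iterable, List, Optional

# Executable names assumed for the tools listed in the prerequisites.
REQUIRED_TOOLS = ("terraform", "node", "yarn", "bash")


def missing_tools(
    tools: Iterable[str] = REQUIRED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All required tools found.")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default `shutil.which` is enough.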

Creating Resources

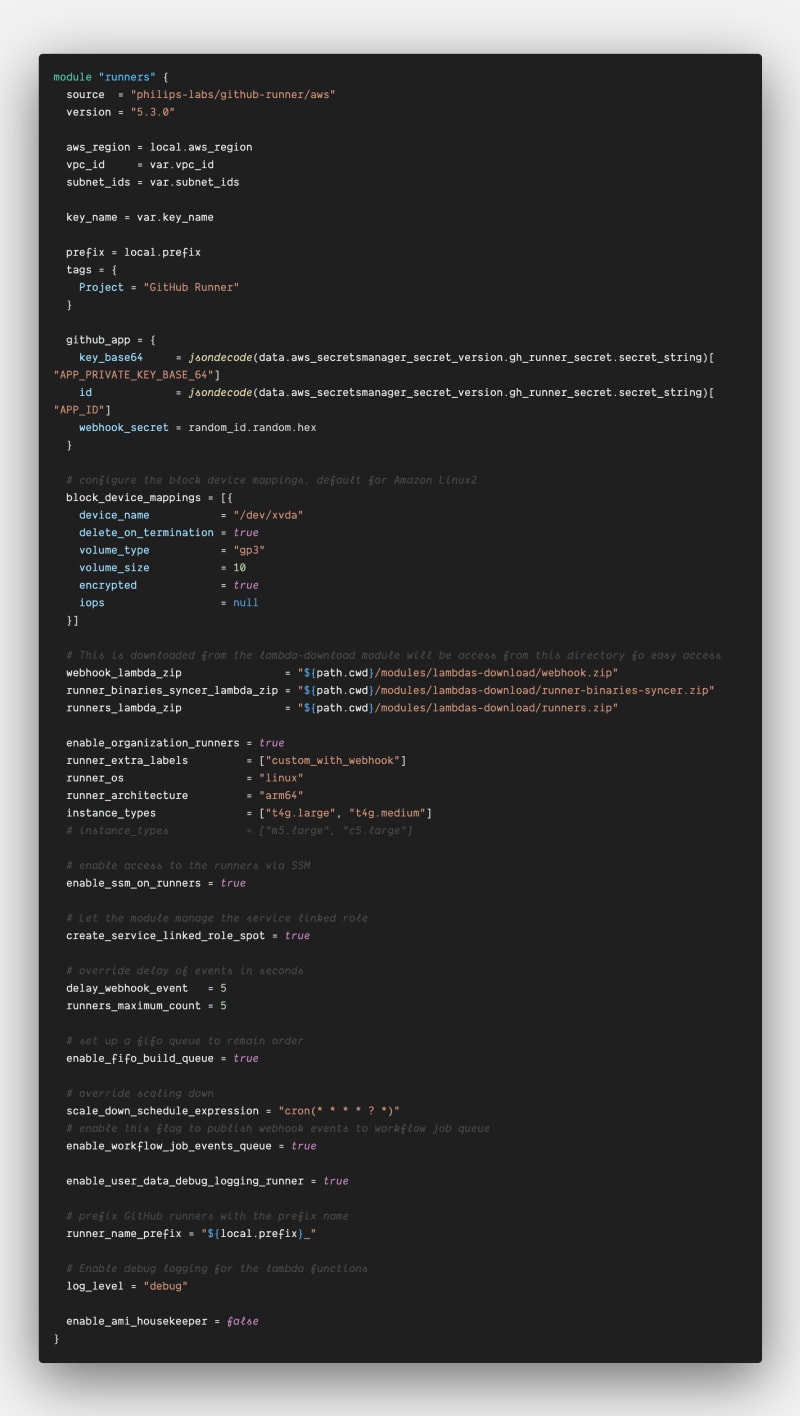

We'll use the highly configurable Terraform module terraform-aws-github-runner to streamline the implementation of our infrastructure. The module is well maintained and offers several approaches through internal modules, so you can adapt the infrastructure to your project's specific needs. In this article, we'll implement the simple runner configuration provided by the module, which covers everything we need here.

All Terraform code is available here.

Before diving into the runner infrastructure, we'll begin by downloading the Lambda function code that the module needs to create and destroy our resources on demand.

Then, we'll create our runner module, which creates the Lambda functions (webhook, scale-up, scale-down, syncer), the SQS queues (workflow-queue, queue-builds), the EC2 Launch template (e), and S3 (s), among others.

Lambda Functions: The Heart of the Infrastructure

Lambda functions serve as the brains of our infrastructure, orchestrating various operations and ensuring seamless responsiveness to changing demands. Each Lambda function fulfills a specific role:

- webhook: This function acts as the gateway for incoming webhook events, verifying their authenticity and checking that they meet the expected criteria. It only processes workflow_job events with status queued that match the runner labels.

- scale-up: Triggered by events arriving on the SQS queue, this Lambda function performs a series of checks to determine whether a new EC2 spot instance is required to accommodate the workload. If so, it uses the predefined EC2 Launch template(e) to spin up new EC2 instances, expanding the runner pool.

- scale-down: Triggered at a regular interval, this Lambda function checks the runner pool for idle runners. Once an idle runner is detected, it is removed from GitHub and the corresponding EC2 instance is terminated, optimizing resource utilization and minimizing costs.

- syncer: Downloading the GitHub Action Runner distribution can occasionally be a time-consuming process, sometimes exceeding ten minutes. To alleviate this bottleneck, a dedicated Lambda function is introduced. This function meticulously synchronizes the action runner binary from GitHub to a designated S3 bucket(s). Subsequently, EC2 instances(e) seamlessly fetch the distribution from the S3 bucket(s) rather than relying on slower internet downloads.
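The scale-up and scale-down checks described above can be sketched as two small decision functions. This is a simplified illustration, not the module's actual logic: the `Runner` type, the field names, and the parameters `max_runners` and `min_idle_minutes` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Runner:
    """Hypothetical view of one EC2-backed runner."""
    instance_id: str
    busy: bool          # True while the runner is executing a job
    idle_minutes: int   # minutes since the runner last did any work


def should_scale_up(job_still_queued: bool, current_runners: int, max_runners: int) -> bool:
    """Launch a new instance only if the job still waits and capacity remains."""
    return job_still_queued and current_runners < max_runners


def runners_to_terminate(runners: List[Runner], min_idle_minutes: int) -> List[str]:
    """Return instance IDs of runners that are idle long enough to remove."""
    return [
        runner.instance_id
        for runner in runners
        if not runner.busy and runner.idle_minutes >= min_idle_minutes
    ]
```

Keeping a minimum idle time before termination avoids removing a runner that is just about to pick up the next queued job.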

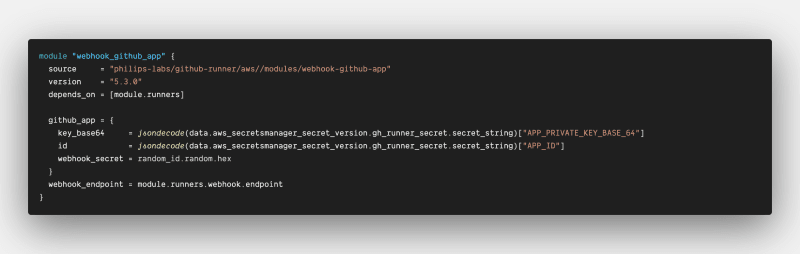

GitHub App Module: Bridging the Gap Between GitHub and AWS

To connect GitHub and AWS, the module integrates with our GitHub App and creates an API gateway. This gateway serves as the intermediary, receiving the webhook events sent by the GitHub App over HTTPS and relaying responses back to it, which gives us a secure and reliable communication channel.
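Once an event passes through the gateway, the webhook function still has to decide whether the delivery is authentic and relevant. GitHub signs each delivery with the webhook secret and sends the result in the X-Hub-Signature-256 header as `sha256=<hex digest>`, and a workflow_job payload carries an `action` field and the job's `labels`. The sketch below shows both checks under those facts; the function names are hypothetical, and the label rule used here (every requested label must be offered by the runner) is an assumption, not necessarily how the module matches labels.

```python
import hashlib
import hmac
import json
from typing import Iterable


def signature_is_valid(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Compare GitHub's HMAC-SHA256 signature with one computed locally."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison; bytes avoid errors on non-ASCII input.
    return hmac.compare_digest(expected.encode(), signature_header.encode())


def should_enqueue(event_name: str, body: bytes, runner_labels: Iterable[str]) -> bool:
    """Accept only queued workflow_job events whose labels the runner offers."""
    if event_name != "workflow_job":
        return False
    payload = json.loads(body)
    if payload.get("action") != "queued":
        return False
    requested = set(payload.get("workflow_job", {}).get("labels", []))
    # Assumed rule: all requested labels must be present on the runner.
    return requested.issubset(set(runner_labels))
```

Here `event_name` would come from the X-GitHub-Event header, and `body` must be the raw request bytes, since re-serializing the JSON would change the signature.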

Conclusion

In this guide, we built a scalable and cost-effective self-hosted runner infrastructure on AWS using Terraform, an infrastructure as code (IaC) tool. Along the way, we looked at the key components that power this infrastructure, including the GitHub App, API Gateway, Lambda functions, SQS, S3, EC2, SSM Parameters, Amazon EventBridge, and CloudWatch.

By leveraging these components, we ensure optimal resource utilization and cost-efficiency. We have also explored the practical implementation of this infrastructure using Terraform, automating the provisioning and management of AWS resources for consistent and repeatable setups.

The self-hosted runner infrastructure we have created provides a powerful foundation for organizations seeking to enhance their CI/CD capabilities and streamline their software development processes as we currently do at Blackthorn. By harnessing the scalability and flexibility of AWS, organizations can effectively manage runner capacity and costs, ensuring that their CI/CD infrastructure aligns with their evolving needs.

As you embark on your own journey to build self-hosted runner infrastructures, remember the key takeaways from this guide:

- Utilize Infrastructure as Code (IaC): Leverage IaC tools like Terraform to automate the provisioning and management of AWS resources, ensuring consistent and repeatable infrastructure setups.

- Design for Scalability: Build your infrastructure with scalability in mind, employing components like Lambda functions and SQS to dynamically adjust runner capacity based on workload demands.

- Optimize Resource Utilization: Monitor resource utilization metrics and proactively scale your infrastructure to avoid resource bottlenecks and unnecessary costs.

- Embrace Continuous Improvement: Continuously evaluate and refine your infrastructure to adapt to changing requirements and optimize performance.

By adhering to these principles, you can effectively build and maintain a self-hosted runner infrastructure that empowers your organization to achieve continuous delivery excellence.

Links:

- All Terraform code is available here

- terraform-aws-github-runner
