As you may know, ChatGPT is expected to disrupt simple programming work. So I tried to find out what happens when you develop a cloud app relying entirely on ChatGPT.

(Specifically, I used ChatGPT Plus in GPT-4 mode.)

TL;DR

ChatGPT was very useful for accelerating development in the early phase.

However, GIGO (Garbage In, Garbage Out) holds true: if we cannot make full use of ChatGPT (and not only ChatGPT, but other AIs as well), we get poor results.

The outcome depends on how well we use ChatGPT.

Pre-condition

This time, I wrote code that scrapes Amazon product pages. However, Amazon prohibits scraping, and I didn't notice that before starting.

Overview

I sent the following message to ChatGPT (originally in Japanese). Honestly, it would be too rough a spec even for a human developer :)

You are a software engineer. Create an application that detects when the price of a specific product changes and notifies me.

# Specification.

1. Use following technologies for development.

1) Processing: Python

A) Read the product code from the database and access the Amazon product page.

B) Retrieve the price from the product page and compare it with the previous price.

C) If the price is lower than the previous price, notify on Slack.

2) Database: MySQL

The following contents are stored in the DB.

A) Product code

B) Product price (previous price)

3) Notification destination when price changes: Slack

2. The application shall be a stand-alone application running on AWS EC2.

3. This program will be launched once an hour.

# Deliverables

1. DDL for database setting up.

2. Python program.

3. CloudFormation template to build them on EC2.

The output code should be in a copyable format.

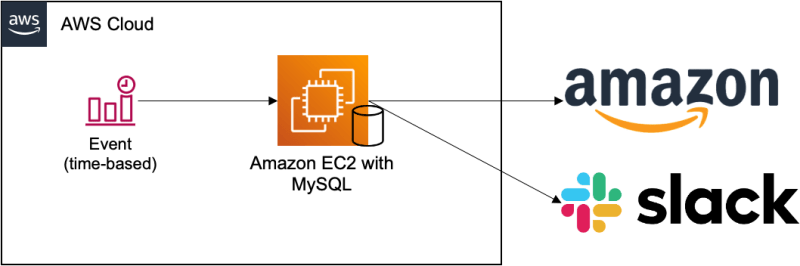

Simple application architecture is below.

Reply from ChatGPT

There were a few minor points that I reviewed and had ChatGPT correct, but this is roughly what I got back right away.

At a quick glance, it looks right, which is almost scary. It can even generate CloudFormation (CFn) templates, which surprised me.

(Details of the back-and-forth that was needed are in the appendix at the bottom.)

DDL:

```sql
CREATE TABLE `product_price` (
  `product_code` varchar(50) NOT NULL,
  `price` decimal(10,2) DEFAULT NULL,
  PRIMARY KEY (`product_code`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;
```

Python code (scraping Amazon pages is prohibited, so I deleted the related code):

```python
import os
import boto3
import requests
from bs4 import BeautifulSoup
import pymysql
import logging
from slack_sdk import WebClient
from slack_sdk.errors import SlackApiError

region_name = os.environ["AWS_REGION"]
ssm = boto3.client("ssm", region_name=region_name)

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)

# Database connection information
DB_ENDPOINT = ssm.get_parameter(Name='/cgpt-amazon-price-alert/db-endpoint', WithDecryption=True)['Parameter']['Value']
DB_USER = ssm.get_parameter(Name='/cgpt-amazon-price-alert/db-user', WithDecryption=True)['Parameter']['Value']
DB_PASSWORD = ssm.get_parameter(Name='/cgpt-amazon-price-alert/db-password', WithDecryption=True)['Parameter']['Value']
DB_NAME = ssm.get_parameter(Name='/cgpt-amazon-price-alert/db-name', WithDecryption=True)['Parameter']['Value']

# Slack Bot connection information
SLACK_BOT_TOKEN = ssm.get_parameter(Name='/cgpt-amazon-price-alert/slack-bot-token', WithDecryption=True)['Parameter']['Value']
SLACK_CHANNEL = ssm.get_parameter(Name='/cgpt-amazon-price-alert/slack-channel', WithDecryption=True)['Parameter']['Value']

# Database connection
conn = pymysql.connect(host=DB_ENDPOINT, user=DB_USER, password=DB_PASSWORD, database=DB_NAME)


# Slack Notify
def notify_slack(message):
    try:
        client = WebClient(token=SLACK_BOT_TOKEN)
        response = client.chat_postMessage(channel=SLACK_CHANNEL, text=message)
    except SlackApiError as e:
        logger.error("Error sending message: {}".format(e.response['error']))


def get_price(url):
    ### Deleted because scraping Amazon website is prohibited now. ###
    raise NotImplementedError("Scraping Amazon product pages is prohibited")


def main():
    # Get product information from Database
    cursor = conn.cursor()
    cursor.execute("SELECT product_code, price FROM product_price")
    rows = cursor.fetchall()

    # Get Amazon product price and compare with previous one.
    for row in rows:
        # Get the price from Amazon
        product_code, previous_price = row
        url = f"https://www.amazon.co.jp/dp/{product_code}"
        current_price = get_price(url)

        # Compare with previous price (previous_price is None until a price has been stored)
        if previous_price is not None and current_price < previous_price:
            message = f"Product {product_code} price was lower than before. Previous price: {previous_price} Yen, Current price: {current_price} Yen"
            notify_slack(message)

        # Update DB product information (the table created by the DDL is product_price).
        cursor.execute("UPDATE product_price SET price=%s WHERE product_code=%s", (current_price, product_code))

    conn.commit()


if __name__ == "__main__":
    main()
```
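To try the compare-and-update logic in `main()` without MySQL, Amazon, or Slack, here is a minimal sketch under my own assumptions: the standard-library `sqlite3` module stands in for MySQL (so placeholders are `?` instead of `%s`, and the price column is TEXT to keep decimal strings exact), and the price lookup and the notifier are passed in as plain functions. `check_prices`, its `get_price` callback (which receives the product code rather than a URL), and `notify` are hypothetical names for illustration, not part of ChatGPT's output.

```python
import sqlite3
from decimal import Decimal
from typing import Callable, Optional


def check_prices(
    conn: sqlite3.Connection,
    get_price: Callable[[str], Optional[Decimal]],
    notify: Callable[[str], None],
) -> None:
    """Compare each stored price with the current one, notify on drops, then store the new price."""
    cursor = conn.cursor()
    cursor.execute("SELECT product_code, price FROM product_price")
    for product_code, previous_price in cursor.fetchall():
        current_price = get_price(product_code)
        if current_price is None:
            continue  # price could not be fetched; keep the stored value
        # previous_price is NULL (None) for a product that has never been priced
        if previous_price is not None and current_price < Decimal(str(previous_price)):
            notify(
                f"Product {product_code} price was lower than before. "
                f"Previous price: {previous_price} Yen, Current price: {current_price} Yen"
            )
        cursor.execute(
            "UPDATE product_price SET price = ? WHERE product_code = ?",
            (str(current_price), product_code),
        )
    conn.commit()
```

In the real script, `get_price` would be the (deleted) scraper or an Amazon API client, and `notify` would be `notify_slack`. Injecting both keeps the decision logic testable on its own.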

Registering the required parameters in SSM Parameter Store:

```bash
aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/db-endpoint" \
    --value "your_db_endpoint" \
    --type "SecureString"

aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/db-user" \
    --value "your_db_username" \
    --type "SecureString"

aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/db-password" \
    --value "your_db_password" \
    --type "SecureString"

aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/db-name" \
    --value "your_db_name" \
    --type "SecureString"

aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/slack-bot-token" \
    --value "your_slack_bot_token" \
    --type "SecureString"

aws ssm put-parameter \
    --name "/cgpt-amazon-price-alert/slack-channel" \
    --value "your_slack_channel" \
    --type "SecureString"
```
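Since all six parameters share the `/cgpt-amazon-price-alert` prefix, the script could fetch them in one paginated call instead of six `get_parameter` calls. This is a sketch, not what ChatGPT produced: `load_parameters` is a hypothetical helper, and it assumes the client passed in behaves like `boto3.client("ssm")`. Note that it needs `ssm:GetParametersByPath` in the instance role, which the CloudFormation template here does not grant (it only allows `ssm:GetParameter`).

```python
from typing import Any, Dict

PARAMETER_PATH = "/cgpt-amazon-price-alert"


def load_parameters(ssm_client: Any, path: str = PARAMETER_PATH) -> Dict[str, str]:
    """Fetch all parameters directly under `path` (decrypted), keyed by their short name."""
    params: Dict[str, str] = {}
    request = {"Path": path, "WithDecryption": True}
    while True:
        response = ssm_client.get_parameters_by_path(**request)
        for param in response.get("Parameters", []):
            # "/cgpt-amazon-price-alert/db-user" -> "db-user"
            short_name = param["Name"].rsplit("/", 1)[-1]
            params[short_name] = param["Value"]
        next_token = response.get("NextToken")
        if not next_token:
            return params
        request["NextToken"] = next_token  # results are paginated; ask for the next page
```

Usage would look like `params = load_parameters(boto3.client("ssm", region_name=region_name))` followed by `DB_ENDPOINT = params["db-endpoint"]`. boto3 also provides a paginator for this operation, which would replace the manual `NextToken` loop.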

CloudFormation Template:

```yaml
---
AWSTemplateFormatVersion: 2010-09-09
Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
    Description: "VPC ID"
  SubnetId:
    Type: AWS::EC2::Subnet::Id
    Description: "Subnet ID"
Resources:
  EC2Instance:
    Type: AWS::EC2::Instance
    Metadata:
      AWS::CloudFormation::Init:
        config:
          commands:
            01_install_mysql:
              command: |
                amazon-linux-extras install -y epel
                yum install -y https://dev.mysql.com/get/mysql80-community-release-el7-3.noarch.rpm
                yum --nogpgcheck install -y mysql-community-server
                systemctl start mysqld
                systemctl enable mysqld
            02_generate_password:
              command: |
                echo $(grep 'temporary password' /var/log/mysqld.log | awk '{print $NF}') > /tmp/mysql_root_password
            03_install_slack_sdk:
              command: |
                yum install -y python3-pip
                pip3 install slack-sdk
            04_install_beautifulsoup4:
              command: |
                pip3 install beautifulsoup4
            05_install_lxml:
              command: |
                pip3 install lxml
            06_install_requests:
              command: |
                pip3 install requests
          services:
            sysvinit:
              mysqld:
                enabled: true
                ensureRunning: true
    Properties:
      ImageId: ami-05b5badc2f7ddd88d
      InstanceType: t3.micro # Instance Type
      KeyName: cgpt-amazon-price-alert-key # Keypair name for connection to EC2 instance.
      UserData:
        Fn::Base64: !Sub |
          #!/bin/bash
          echo "export AWS_REGION=${AWS::Region}" >> /etc/profile.d/aws_region.sh
          chmod +x /etc/profile.d/aws_region.sh
          source /etc/profile.d/aws_region.sh
          /opt/aws/bin/cfn-init --stack ${AWS::StackName} --resource EC2Instance --region ${AWS::Region}
          yum update -y
          yum install -y python3
          pip3 install boto3
          pip3 install pymysql
          pip3 install slack-sdk
          mkdir /app
          touch /app/check_price.py
          touch /var/log/check_price.log
          chown ec2-user:ec2-user /var/log/check_price.log
          # cron does not read /etc/profile.d, so AWS_REGION is set in the cron file itself.
          # "0 * * * *" runs at minute 0 of every hour, matching the "once an hour" spec.
          echo 'AWS_REGION=${AWS::Region}' > /etc/cron.d/check_price-cron
          echo '0 * * * * ec2-user /usr/bin/python3 /app/check_price.py >> /var/log/check_price.log 2>&1' >> /etc/cron.d/check_price-cron
      IamInstanceProfile: !Ref InstanceProfile
      NetworkInterfaces:
        - AssociatePublicIpAddress: true
          DeviceIndex: 0
          GroupSet:
            - !Ref InstanceSecurityGroup
          SubnetId: !Ref SubnetId
  InstanceSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow SSH access
      VpcId: !Ref VpcId
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 22
          ToPort: 22
          CidrIp: 0.0.0.0/0
  InstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      Path: /
      Roles:
        - !Ref InstanceRole
  InstanceRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - ec2.amazonaws.com
            Action:
              - sts:AssumeRole
      Path: /
      Policies:
        - PolicyName: SSMParameterAccess
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - ssm:GetParameter
                Resource: "*"
```

Preparing the MySQL DB initialization was taking me a while, so I had ChatGPT create a shell script for it.

(Note that the following script is insecure because it hardcodes the passwords and passes them directly on the command line, so it is better not to use it as-is.)

```bash
#!/bin/bash

# Variables
initial_password="your_initial_password"
new_password="your_new_password"

# Log in to MySQL and execute commands.
# The temporary root password is expired, and the server disconnects non-interactive
# clients that log in with an expired password unless --connect-expired-password is given.
mysql -u root -p"${initial_password}" --connect-expired-password <<EOF
-- Change the initial password
ALTER USER 'root'@'localhost' IDENTIFIED BY '${new_password}';

-- Create the amazonprice database
CREATE DATABASE amazonprice;

-- Use the amazonprice database
USE amazonprice;

-- Create the product_price table
CREATE TABLE product_price (
  product_code VARCHAR(50) NOT NULL,
  price DECIMAL(10,2) DEFAULT NULL,
  PRIMARY KEY (product_code)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

-- Insert sample data into the product_price table
INSERT INTO product_price (product_code, price) VALUES ('your_product_code', 1000.00);
EOF
```

To run it:

```bash
chmod +x setup_mysql.sh
./setup_mysql.sh
```
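The `initial_password` the script expects is MySQL's temporary root password, which the template's `02_generate_password` step pulls out of `/var/log/mysqld.log` with `grep 'temporary password' ... | awk '{print $NF}'`. As a rough sketch, here is the same extraction in Python; `find_temporary_password` is a hypothetical helper, and it assumes (as the awk command does) that the password is the last whitespace-separated field on the matching line. Unlike the shell version, which would join multiple matches with spaces, it returns only the last match.

```python
from typing import Iterable, Optional


def find_temporary_password(log_lines: Iterable[str]) -> Optional[str]:
    """Return the last field of the last line mentioning 'temporary password', or None."""
    password = None
    for line in log_lines:
        if "temporary password" in line:
            fields = line.split()
            if fields:
                password = fields[-1]  # same as awk '{print $NF}'
    return password
```

On the instance this would be used as `with open("/var/log/mysqld.log") as f: initial_password = find_temporary_password(f)`.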

Conclusion

As I mentioned at the beginning, ChatGPT seems to be a very powerful tool for speeding up the initial phase of development. However, going beyond that initial boost takes a lot of time, so for now I would say it is best used in the early stages of development.

Note that the finished code still has many things I would like to point out, such as creating a folder under the root directory in UserData that only root can write to, and the lack of exception handling. It is not something I could ship as a product.

(Appendix) Points I had to go back and forth with ChatGPT on

The response to the first message shown at the top had several incomplete parts, so I went back and forth with ChatGPT many times to improve the quality.

In total, there were 40 to 50 exchanges with ChatGPT.

Below are the main points that took several rounds.

- Values such as DB_ENDPOINT were read from environment variables. → Improved to fetch them from SSM.

- The CFT (CloudFormation Template) did not fully cover installing the required libraries.

- The VPC, subnet, etc. were not defined, so the CFn stack could not be created.

- After several exchanges, earlier fixes regressed. I didn't need it to be human-like to that extent :)

- MySQL installation was not included in the CFn template.

- The UserData section did not call cfn-init, so the Metadata section of the CFT was never executed.

- MySQL installation had several errors, including a GPG key error.

- The region setting required to access AWS resources and the IAM role to attach to EC2 were missing.

- ChatGPT provided scraping code even though Amazon prohibits scraping. (In this case, I did not modify the code to use the Amazon API, but if I had explicitly specified that the Amazon API should be used, ChatGPT could have generated code for it.)
