(Updated on 12, 2, 2022)

Introduction

This article is about machine learning and is written for beginners like me. I am never sure how to decide the number of layers and the number of units in each layer when I build a model. In this article, I explore their effect using the California housing dataset.

All of the following code is in my git repository. You can run each of the following experiments by cloning the repository and then running the notebook.

GitHub repository: comparison_of_dnn

Note that this is not a "guide"; it is a memo from a beginner to beginners. If you have any comments, suggestions, or questions while reading this article, please let me know in the comments below.

California housing dataset

The California housing dataset is for regression. It has eight features and one target value. We can get the dataset using the sklearn.datasets.fetch_california_housing() function. The eight features are as follows.

- MedInc: median income in block group

- HouseAge: median house age in block group

- AveRooms: average number of rooms per household

- AveBedrms: average number of bedrooms per household

- Population: block group population

- AveOccup: average number of household members

- Latitude: block group latitude

- Longitude: block group longitude

The one target value is as follows:

- MedHouseVal: median house value for California districts, expressed in hundreds of thousands of dollars

As I said, it is for regression, so I will build a model whose inputs are those features and whose output is the target value.

I will not analyze this dataset in depth, but I will take a quick look at it using pandas.DataFrame methods.

Let's look at the DataFrame information to check whether there are missing values.

Input:

import sys

sys.path.append("./src")

import src.utils

# Load dataset

callifornia_df = src.utils.load_california_housing()

callifornia_df.info()

Output:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 20640 entries, 0 to 20639

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 MedInc 20640 non-null float64

1 HouseAge 20640 non-null float64

2 AveRooms 20640 non-null float64

3 AveBedrms 20640 non-null float64

4 Population 20640 non-null float64

5 AveOccup 20640 non-null float64

6 Latitude 20640 non-null float64

7 Longitude 20640 non-null float64

8 MedHouseVal 20640 non-null float64

dtypes: float64(9)

memory usage: 1.4 MB

There are no missing values. Next, let's look at some statistics.

Input:

callifornia_df.describe().drop(["count"])

Output:

MedInc HouseAge AveRooms AveBedrms Population \

mean 3.870671 28.639486 5.429000 1.096675 1425.476744

std 1.899822 12.585558 2.474173 0.473911 1132.462122

min 0.499900 1.000000 0.846154 0.333333 3.000000

25% 2.563400 18.000000 4.440716 1.006079 787.000000

50% 3.534800 29.000000 5.229129 1.048780 1166.000000

75% 4.743250 37.000000 6.052381 1.099526 1725.000000

max 15.000100 52.000000 141.909091 34.066667 35682.000000

AveOccup Latitude Longitude MedHouseVal

mean 3.070655 35.631861 -119.569704 2.068558

std 10.386050 2.135952 2.003532 1.153956

min 0.692308 32.540000 -124.350000 0.149990

25% 2.429741 33.930000 -121.800000 1.196000

50% 2.818116 34.260000 -118.490000 1.797000

75% 3.282261 37.710000 -118.010000 2.647250

max 1243.333333 41.950000 -114.310000 5.000010

Comparison

For the sake of simplicity, I assume the following conditions.

- All model settings are fixed except for the number of layers and the number of units in each layer.

- No data preprocessing is performed.

- The seed is fixed.

The following discussion holds under those conditions, but if you want to change or remove them, it is quick to do. In most cases, all you have to do is edit config_california.yaml. It has the following contents.

mlflow:

experiment_name: california

run_name: default

dataset:

eval_size: 0.25

test_size: 0.25

train_size: 0.75

shuffle: True

dnn:

n_layers: 3

n_units_list:

- 8

- 4

- 1

activation_function_list:

- relu

- relu

- linear

seed: 57

dnn_train:

epochs: 30

batch_size: 4

patience: 5

To run an experiment with a different fixed seed, change 57 in the seed entry to another integer. To run without fixing the seed, set it to None.
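Because n_layers, n_units_list, and activation_function_list have to agree with each other, a small consistency check can catch a typo before training starts. The following is a minimal sketch, not code from the repository: check_dnn_config is a hypothetical helper, and the dictionary simply mirrors the dnn entries shown above instead of being loaded from the YAML file.

```python
def check_dnn_config(dnn_config):
    """Return one (units, activation) pair per layer, or raise ValueError.

    Hypothetical helper: it only relies on the three keys shown in the config.
    """
    n_layers = dnn_config["n_layers"]
    units = dnn_config["n_units_list"]
    activations = dnn_config["activation_function_list"]

    if len(units) != n_layers:
        raise ValueError(
            f"n_units_list has {len(units)} entries, expected {n_layers}"
        )
    if len(activations) != n_layers:
        raise ValueError(
            f"activation_function_list has {len(activations)} entries, "
            f"expected {n_layers}"
        )
    if units[-1] != 1:
        # The dataset has a single target value, so the output layer needs one unit.
        raise ValueError("the last layer must have exactly one unit")
    return list(zip(units, activations))


# Mirrors the dnn entries of config_california.yaml shown above.
default_dnn = {
    "n_layers": 3,
    "n_units_list": [8, 4, 1],
    "activation_function_list": ["relu", "relu", "linear"],
}

layers = check_dnn_config(default_dnn)
print(layers)
```

Running a check like this before each experiment makes it harder to train, for example, a "four layer" model that silently has only three entries in n_units_list.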

result

The loss summary is as follows.

| #layers | training loss | evaluation loss | test loss |
|---|---|---|---|
| three (tiny #units) | 0.616 | 0.565 | 0.596 |
| four | 0.54 | 0.506 | 0.53 |
| five | 0.543 | 1.126 | 1.2 |
| six | 0.515 | 0.49 | 0.512 |
| seven | 1.31 | 1.335 | 1.377 |
| three (many #units) | 0.537 | 0.515 | 0.555 |

The best model is the six-layer one in terms of the test loss (in fact, in terms of all three losses). The test losses of the five-layer and seven-layer models are close to each other, but their training losses are not close at all. During the training of the seven-layer model, the vanishing gradient problem seems to have occurred, probably because of the depth.
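The comparison can also be done programmatically. The sketch below is only an illustration: the numbers are copied by hand from the summary table, and the variable names are my own. It finds the best model for each loss and computes the gap between the evaluation loss and the training loss, where a large positive gap is a common hint of overfitting.

```python
# Losses copied from the summary table: (training, evaluation, test).
losses = {
    "three (tiny #units)": (0.616, 0.565, 0.596),
    "four": (0.54, 0.506, 0.53),
    "five": (0.543, 1.126, 1.2),
    "six": (0.515, 0.49, 0.512),
    "seven": (1.31, 1.335, 1.377),
    "three (many #units)": (0.537, 0.515, 0.555),
}

split_names = ("training", "evaluation", "test")

# Name of the model with the smallest loss on each split.
best_per_split = {
    split: min(losses, key=lambda name: losses[name][i])
    for i, split in enumerate(split_names)
}

# Evaluation loss minus training loss for each model.
eval_gap = {name: round(ev - tr, 3) for name, (tr, ev, _) in losses.items()}

print(best_per_split)
print(eval_gap)
```

With these numbers, the six-layer model comes out best on every split, and the five-layer model has by far the largest gap between evaluation and training loss.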

I will also plot the predicted values against the true target values. The plots show that the fourth model (six layers) is also the best in terms of individual predictions.

The first five models differ in both the number of layers and the number of units. The last model, which has three layers and a similar number of parameters to the fourth model (3,313 versus 3,361), was built to separate the effect of the number of layers from the effect of the number of units. As a result, the fourth model (six layers) is better than the last one, which suggests that depth is more important than the number of units, at least for the California housing dataset. In this dataset, the difference between the maximum true target value and the mean is greater than the difference between the mean and the minimum. Depth probably helps the model tolerate such outliers.
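The asymmetry of the target values can be checked with a little arithmetic. This is just a sketch that uses the MedHouseVal statistics copied from the describe() output earlier in the article; the variable names are my own.

```python
# Statistics of MedHouseVal copied from the describe() output.
target_mean = 2.068558
target_median = 1.797000
target_min = 0.149990
target_max = 5.000010

upper_range = target_max - target_mean  # distance from the mean up to the maximum
lower_range = target_mean - target_min  # distance from the mean down to the minimum

print(f"max - mean    = {upper_range:.3f}")
print(f"mean - min    = {lower_range:.3f}")
print(f"mean - median = {target_mean - target_median:.3f}")
```

The upper range (about 2.93) is clearly larger than the lower range (about 1.92), and the mean is above the median, so the high values sit further from the bulk of the data than the low values do.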

Further, comparing the first model with the last model shows the effect of the number of units. Both have three layers, and the one with more units fits better.

See the following sections for more information.

tiny three layers (two hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_3 (Dense) (None, 8) 72

dense_4 (Dense) (None, 4) 36

dense_5 (Dense) (None, 1) 5

=================================================================

Total params: 113

Trainable params: 113

Non-trainable params: 0

_________________________________________________________________
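The parameter counts in the summary can be reproduced by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. The following sketch assumes eight input features, as in this dataset; dense_param_counts is a hypothetical helper written for this illustration, not a Keras function.

```python
def dense_param_counts(n_inputs, n_units_list):
    """Parameters of each fully connected layer: weights plus one bias per unit."""
    counts = []
    fan_in = n_inputs
    for n_units in n_units_list:
        counts.append(fan_in * n_units + n_units)
        fan_in = n_units  # the next layer receives this layer's outputs
    return counts


# Eight input features, then the unit counts from the summary above.
tiny_counts = dense_param_counts(8, [8, 4, 1])
print(tiny_counts, sum(tiny_counts))  # [72, 36, 5] 113
```

The same function gives 3,361 parameters for the unit list [64, 32, 16, 8, 4, 1] and 3,313 for [72, 36, 1], which is why those two models are comparable in size.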

The final losses are as follows.

- training loss: 0.616

- evaluation loss: 0.565

- test loss: 0.596

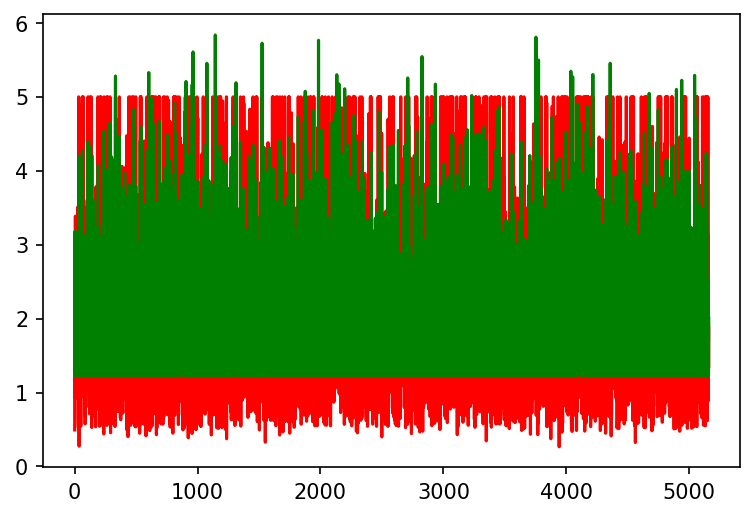

The prediction results are as follows.

The green line represents the predicted target values and the red line represents the true target values.

There seems to be an invisible horizontal line that acts as a lower limit for the predictions, and some predicted values are greater than the maximum true value. This probably means that the model is underfitting the data.

four layers (three hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_10 (Dense) (None, 16) 144

dense_11 (Dense) (None, 8) 136

dense_12 (Dense) (None, 4) 36

dense_13 (Dense) (None, 1) 5

=================================================================

Total params: 321

Trainable params: 321

Non-trainable params: 0

_________________________________________________________________

The final losses are as follows.

- training loss: 0.54

- evaluation loss: 0.506

- test loss: 0.53

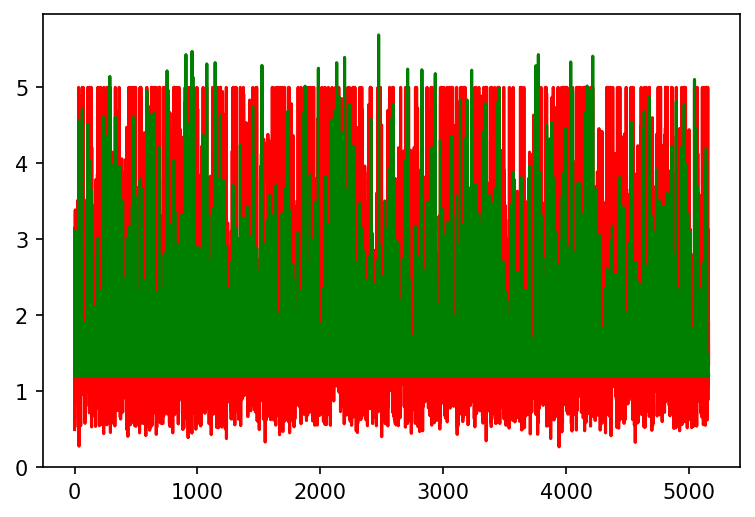

The prediction results are as follows.

The green line represents the predicted target values and the red line represents the true target values. Note that only the first 500 values are drawn for better visibility.

The invisible line is still there. Fewer predicted values exceed the maximum true value than with the three-layer model, but some still do.

five layers (four hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_19 (Dense) (None, 32) 288

dense_20 (Dense) (None, 16) 528

dense_21 (Dense) (None, 8) 136

dense_22 (Dense) (None, 4) 36

dense_23 (Dense) (None, 1) 5

=================================================================

Total params: 993

Trainable params: 993

Non-trainable params: 0

_________________________________________________________________

The final losses are as follows.

- training loss: 0.543

- evaluation loss: 1.126

- test loss: 1.2

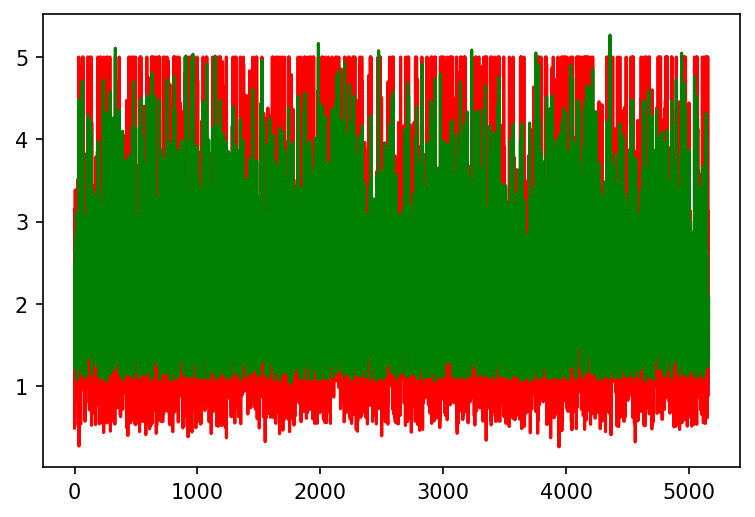

The prediction results are as follows.

The green line represents the predicted target values and the red line represents the true target values.

The invisible line is still there. There are fewer high predicted values than before. On the other hand, some of the predicted values are smaller than before. The model might be overfitting, which would be consistent with the evaluation loss (1.126) being about twice the training loss (0.543).

six layers (five hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_30 (Dense) (None, 64) 576

dense_31 (Dense) (None, 32) 2080

dense_32 (Dense) (None, 16) 528

dense_33 (Dense) (None, 8) 136

dense_34 (Dense) (None, 4) 36

dense_35 (Dense) (None, 1) 5

=================================================================

Total params: 3,361

Trainable params: 3,361

Non-trainable params: 0

_________________________________________________________________

The final losses are as follows.

- training loss: 0.515

- evaluation loss: 0.49

- test loss: 0.512

The prediction results are as follows.

The green line represents the predicted target values and the red line represents the true target values.

The invisible horizontal line has broken down a little. It is still there, but it is clearly less horizontal than before. The number of high predicted values is also smaller than before.

seven layers (six hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_43 (Dense) (None, 128) 1152

dense_44 (Dense) (None, 64) 8256

dense_45 (Dense) (None, 32) 2080

dense_46 (Dense) (None, 16) 528

dense_47 (Dense) (None, 8) 136

dense_48 (Dense) (None, 4) 36

dense_49 (Dense) (None, 1) 5

=================================================================

Total params: 12,193

Trainable params: 12,193

Non-trainable params: 0

_________________________________________________________________

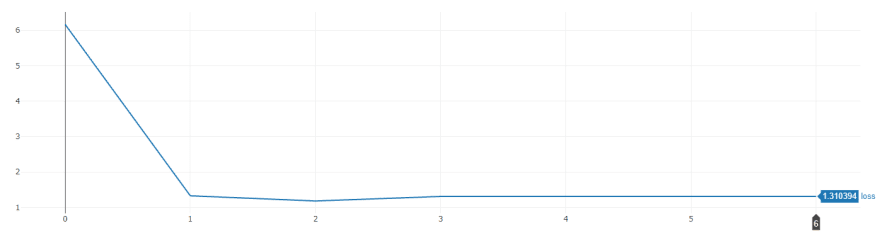

The final losses are as follows.

- training loss: 1.31

- evaluation loss: 1.335

- test loss: 1.377
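A loss of about 1.3 on all three splits is close to what a model would score by predicting a single constant for every input. The sketch below checks this with the MedHouseVal statistics from the describe() output earlier in the article. It assumes that the reported loss is mean squared error, which the article does not state, and it uses the statistics of the whole dataset instead of each split, so the match is only approximate. mse_of_constant is a hypothetical helper written for this illustration.

```python
# Mean and standard deviation of MedHouseVal from the describe() output.
target_mean = 2.068558
target_std = 1.153956


def mse_of_constant(constant):
    """MSE of predicting `constant` for every sample.

    Uses the identity: MSE = variance of the target + (constant - mean)^2.
    Assumption: the loss reported in this article is mean squared error.
    """
    return target_std ** 2 + (constant - target_mean) ** 2


# Predicting the mean everywhere gives the smallest MSE a constant can reach.
constant_mse = mse_of_constant(target_mean)
print(f"MSE of a constant mean prediction: {constant_mse:.3f}")
```

The result is about 1.33, which sits right among the seven-layer model's losses (1.31, 1.335, 1.377). Under the assumption above, this suggests the model learned little more than the average target value.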

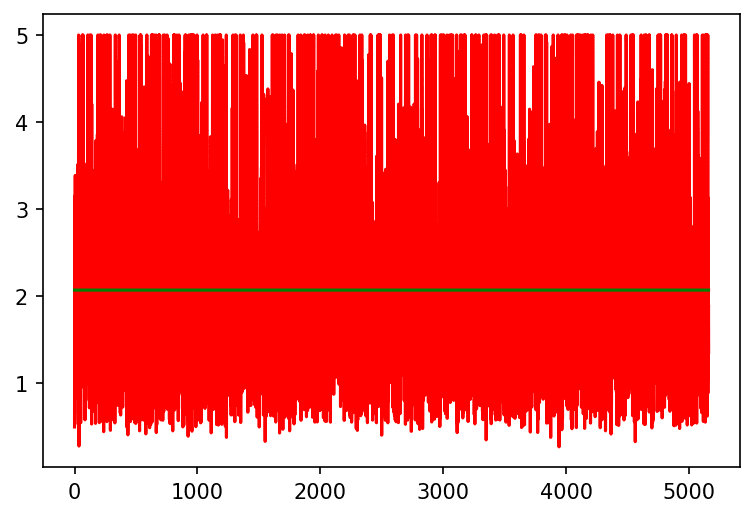

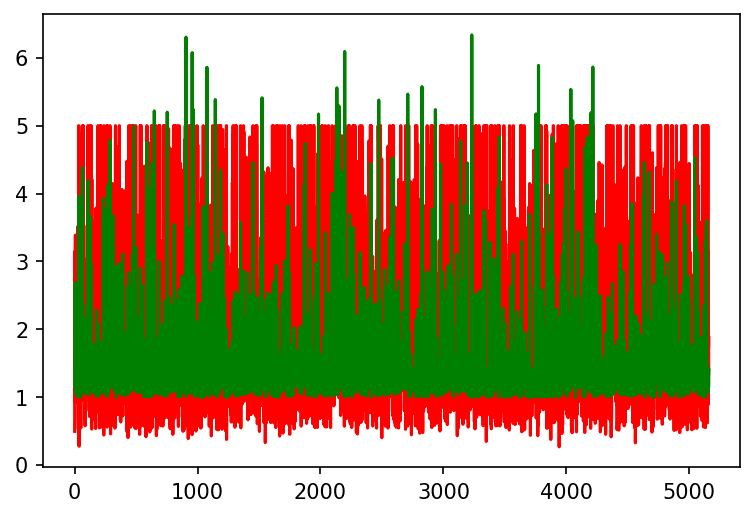

The prediction results are as follows.

The green line represents the predicted target values and the red line represents the true target values.

The model output a constant for all inputs. A vanishing gradient probably occurred. In fact, the training loss stopped improving very early.

three layers with about 3,300 parameters (two hidden layers plus one output layer)

This structure is as follows.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_67 (Dense) (None, 72) 648

dense_68 (Dense) (None, 36) 2628

dense_69 (Dense) (None, 1) 37

=================================================================

Total params: 3,313

Trainable params: 3,313

Non-trainable params: 0

_________________________________________________________________

The final losses are as follows.

- training loss: 0.537

- evaluation loss: 0.515

- test loss: 0.555

The prediction results are as follows.

As with the six-layer model, the invisible line is less horizontal than in the other models. However, the number of high predicted values is clearly greater than in the six-layer model.

Conclusion

I explored the effect of the number of layers and units using the California housing dataset. As a result, I found that more layers and more units both help the model fit better, but that too many layers cause the vanishing gradient problem.

It was great to write the code and run the experiments myself, but unfortunately I am still not sure how to decide these numbers. I will explore the effect again with other datasets.

Again, I would really appreciate any comments, suggestions, or questions.
