"What Happens When You Put AI Agents in a Crew and Ask Them to Write a Blog?” - I was curious too. So I built one.

This post walks you through, step by step, how to spin up your own AI-powered writing crew and how to use modern agent-building tools and frameworks to speed up the process.

LinkedIn, Reddit, Twitter, even Dev.to: everywhere I go, everyone is building AI agents! But,

- Either your vibe-coded mess of agents lacks observability, and you treat it like a black box imbued with an inviolable epistemic sanctity and your prompts as “sacrosanct” because “they just work”.

- Or you are a novice who has no idea what everyone is raving about but wants to join the Agentic AI bandwagon and needs someplace to start.

Whichever camp you’re in, this guide should help.

Following the unwritten rules of blog writing, let me start with a basic introduction to what AI agents are. According to the folks at IBM, “An artificial intelligence (AI) agent refers to a system or program that is capable of autonomously performing tasks on behalf of a user or another system by designing its workflow and utilizing available tools.” [cite: https://www.ibm.com/think/topics/ai-agents]

However, let’s be real: think of an AI agent as an unpaid intern in your company. They do the tasks you don’t want to do, have a decent understanding of how to use basic tools, don’t get paid even though they work very hard, and might screw up your tasks if left unobserved!

A few years ago (in the pre-LLM era), building something like this would’ve required a team of researchers at a Big Tech company with a sizeable research budget. Now? With some Python and a weekend, you can spin up your own AI-powered team right from your laptop.

What are we building?

Now, before we start invoking the gods of programming, we need to understand the end goal of our build, the tools we will need, and the overall plan for the project.

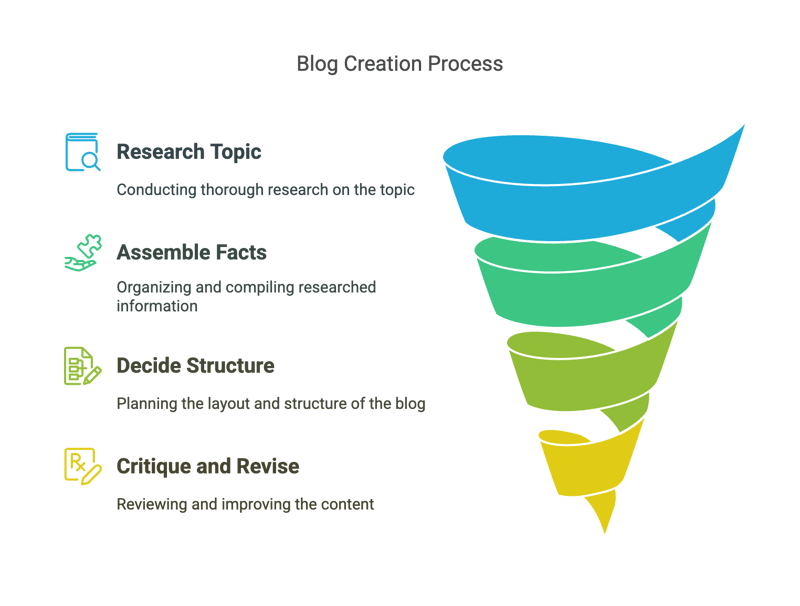

We are building a crew of agents that takes a blog topic, researches it in detail, assembles the facts, decides on a structure, critiques and revises the draft, and finally outputs a well-researched, well-written blog post.

Meet the Crew

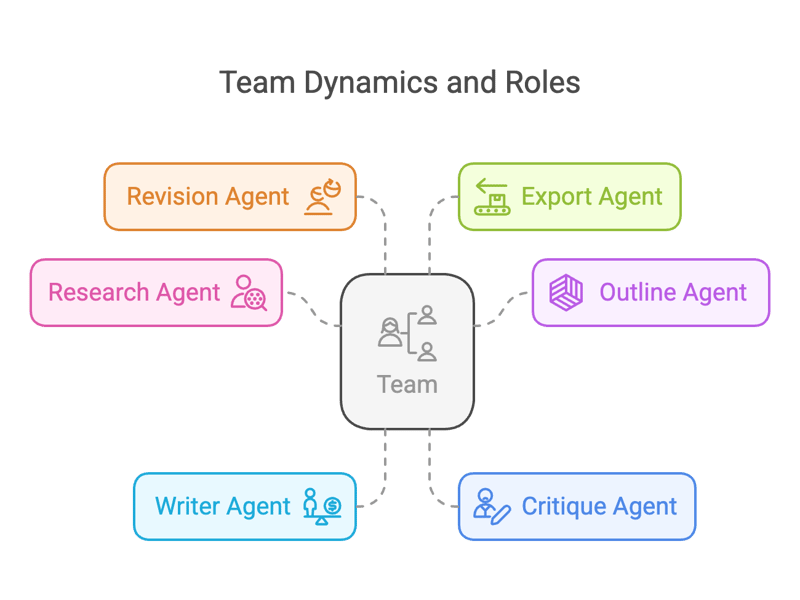

Here’s the team we’re assembling, each with its own quirks and responsibilities:

- Research Agent - Finds information, has ADHD and is hyperactive

- Outline Agent - Defines structure, loves telling others what to do

- Writer Agent - Writes stuff, does the heavy lifting but isn’t appreciated enough

- Critique Agent - Critiques everything, is hard to satisfy

- Revision Agent - Writer Agent who was demoted to revising stuff

- Export Agent - Doesn’t care what the group talks about, only exports when the work is done

Tools of the trade

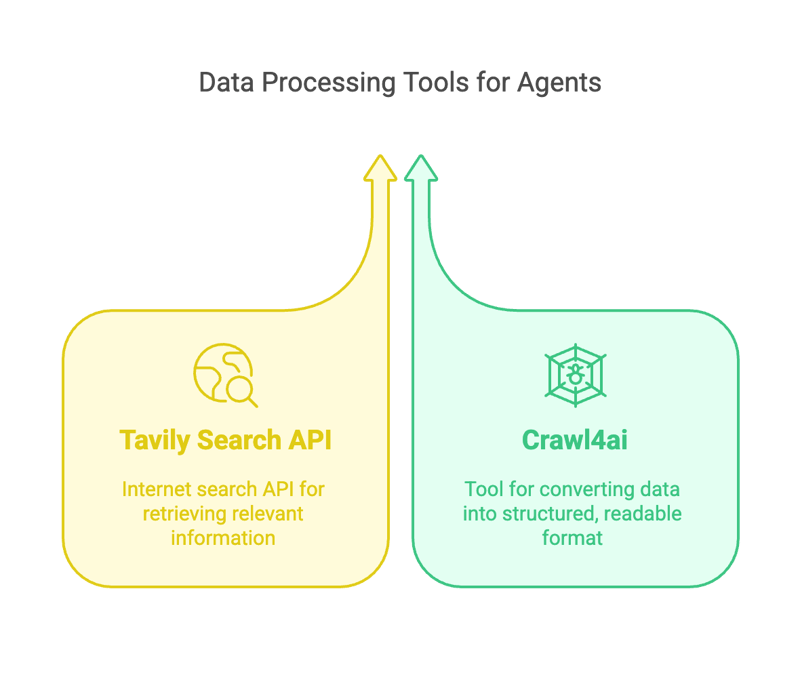

Now that we have assembled our team of agents, we’ll need certain tools for our agents to perform the tasks they are assigned:

- Tavily Search API - for searching the internet for information

- Crawl4AI - for structuring information from the internet into a format the LLM can read

Now, since I am not a millionaire, I like things that are free. For LLM inference we will use Google AI Studio, which gives you free access to Google’s top generative AI models and does not ask for your credit card information.

Observability (AKA How to Stop Your AI Agents from Going Full Ultron)

While building agents sounds fun, watching them work in a black box is not.

We need to be able to observe our agents in action and evaluate how they perform in real life. For observability we will use Maxim AI (https://getmaxim.ai). Believe me when I say: if you don’t want your AI system to become an uncontrollable, psychopathic maniac like Ultron and would prefer something more like Jarvis, you need to build observability into your AI projects.

It will not only save time when building and debugging complex systems but will also help you tame the AI beast instead of treating agentic workflows like a black box.

It’s the difference between hoping your agents do the right thing and knowing they do.
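To make "observability" concrete before wiring in a real SDK, here is a minimal, standard-library-only sketch of what a trace typically captures: which tool ran, with what arguments, how long it took, and whether it failed. The `traced` decorator, the `TRACE_LOG` list and `fake_search` are hypothetical names made up for illustration. This is not Maxim's API, just the idea behind it.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for a log repository (hypothetical; a real setup would
# ship these records to an observability backend instead).
TRACE_LOG: List[Dict[str, Any]] = []


def traced(tool_name: str) -> Callable:
    """Record every call to the wrapped function in TRACE_LOG."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            record: Dict[str, Any] = {"tool": tool_name, "args": args, "kwargs": kwargs}
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
                record["status"] = "ok"
                record["output"] = result
                return result
            except Exception as exc:
                record["status"] = "error"
                record["error"] = str(exc)
                raise
            finally:
                # Runs on success and on failure, so every call is logged
                record["duration_s"] = time.perf_counter() - start
                TRACE_LOG.append(record)

        return wrapper

    return decorator


@traced("fake_search")
def fake_search(query: str) -> str:
    # Placeholder for a real tool call such as a web search.
    return f"results for {query!r}"


fake_search("ai agents")
print(TRACE_LOG[-1]["tool"], TRACE_LOG[-1]["status"])
```

A hosted observability tool does the same bookkeeping, but stores the records somewhere you can search, filter and share them.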

Building the Agent Crew

To build the crew we will use CrewAI [https://www.crewai.com/]. It is beginner friendly and still allows a certain level of complexity for pro users. For a fairly straightforward multi-agent setup it should satisfy most of your requirements, but if you are building your billion-dollar startup on CrewAI’s rock, may God have mercy on your soul.

I am using Google Colab to write the code; the full notebook is linked at the end.

So, let’s get started with coding. We start by importing the necessary packages:

import asyncio
import json
import os
from typing import Annotated, Any, Dict, List, Optional, Type, Union

import nest_asyncio
from crawl4ai import AsyncWebCrawler
from crawl4ai.async_configs import BrowserConfig, CrawlerRunConfig
from crewai import LLM, Agent, Crew, Task
from crewai.tools import BaseTool
from pydantic import BaseModel, Field
from tavily import TavilyClient

# Colab already runs an event loop; this lets asyncio.run() be called inside it
nest_asyncio.apply()

Next, we will load our API keys for Tavily Search, Google AI Studio and Maxim AI from a .env file. The .env file looks like this:

GEMINI_API_KEY=************************
TAVILY_API_KEY=************************
MAXIM_API_KEY=*************************

We can load the API keys into our notebook environment using the code block below:

from dotenv import load_dotenv

load_dotenv()
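A missing key usually surfaces much later as a confusing authentication error, so it can help to fail fast right after loading the .env file. The sketch below is one way to do that. `REQUIRED_KEYS` and `missing_keys` are hypothetical helper names, not part of any of the libraries used here.

```python
import os
from typing import Iterable, List, Mapping, Optional

REQUIRED_KEYS = ("GEMINI_API_KEY", "TAVILY_API_KEY", "MAXIM_API_KEY")


def missing_keys(
    required: Iterable[str] = REQUIRED_KEYS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the names of required keys that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# Example with an explicit mapping so the check is easy to try out
print(missing_keys(env={"GEMINI_API_KEY": "abc", "TAVILY_API_KEY": ""}))
# → ['TAVILY_API_KEY', 'MAXIM_API_KEY']
```

In the notebook you would call `missing_keys()` with no arguments and raise an error if the returned list is not empty.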

Next, we will define the Tavily client and the LLM client:

tavily_client = TavilyClient(api_key=os.getenv("TAVILY_API_KEY"))

llm = LLM(
    model="gemini/gemini-1.5-flash-latest",
    temperature=0.7,
)

You can call the LLM client once to make sure it is working:

response = llm.call("What is the capital of France?")
print(response)

[2025-03-16 19:43:21][🤖 LLM CALL STARTED]: 2025-03-16 19:43:21.543898
[2025-03-16 19:43:23][✅ LLM CALL COMPLETED]: 2025-03-16 19:43:23.502009
Paris

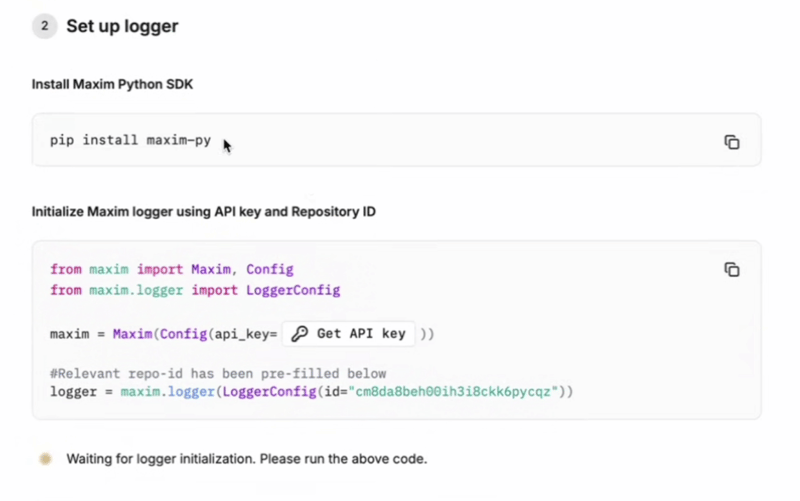

Setting up Maxim AI for log tracing

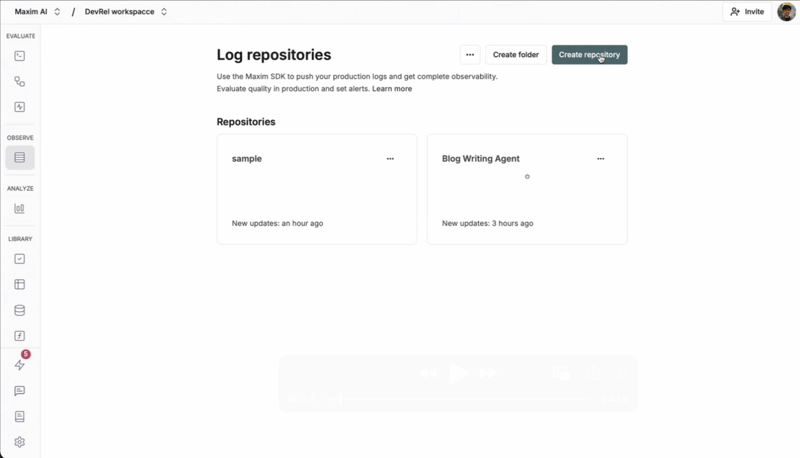

Create an account on https://getmaxim.ai

From the dashboard navigate to Observe → Logs and click on the Create Repository Button

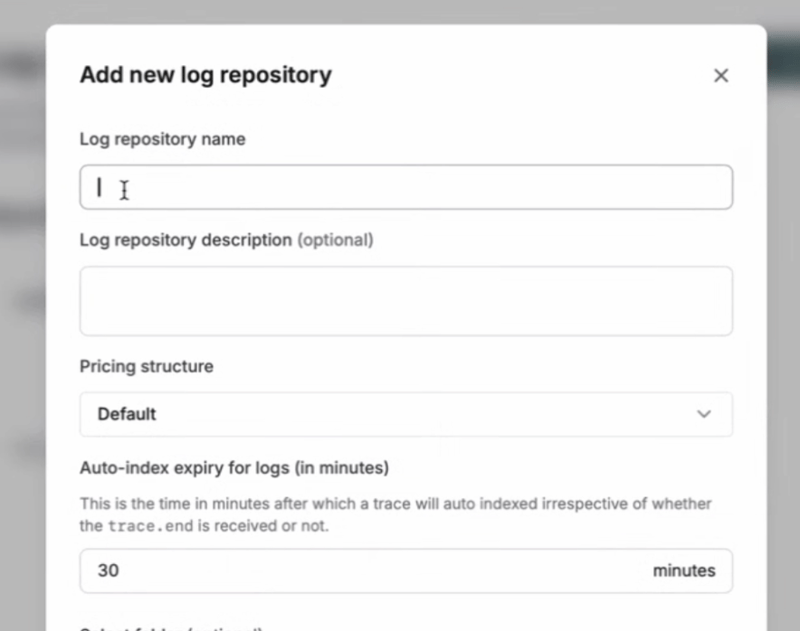

Create a new log repository by selecting a repository name

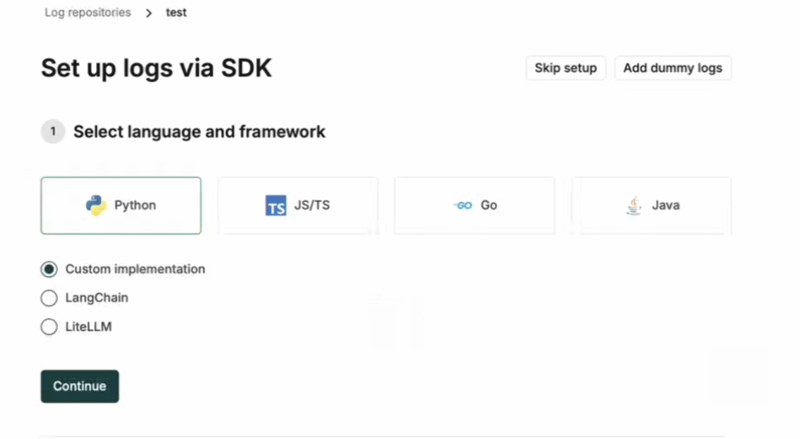

Select Python as the language and Custom implementation as the framework

Install the Maxim Python SDK in your Colab environment using the command:

!pip install maxim-py

Follow the guide to get an API key from Maxim and add it to your .env file

Using the following code, connect the SDK to your log repository:

import uuid

from maxim import Maxim, Config
from maxim.logger import LoggerConfig, ToolCallConfig
from maxim.logger.components.trace import TraceConfig

maxim = Maxim(Config(api_key=os.getenv("MAXIM_API_KEY")))
logger = maxim.logger(LoggerConfig(id="YOUR_LOG_REPO_ID_FROM_MAXIM_DASHBOARD"))

Once the SDK is set up properly, you will see a success message.

Before we go deeper into building our crew, there are a few CrewAI concepts to understand. CrewAI helps you build modular agent swarms by defining the configuration of each agent in the crew. To do their work, agents are given tools and assigned tasks.

Each agent is defined by the following parameters in CrewAI:

# role="Agent Role",
# goal="",
# backstory="",
# llm="gpt-4",  # Default: OPENAI_MODEL_NAME or "gpt-4"
# function_calling_llm=None,  # Optional: Separate LLM for tool calling
# memory=True,  # Default: True
# verbose=True,  # Default: False
# allow_delegation=False,  # Default: False
# max_iter=20,  # Default: 20 iterations
# max_rpm=None,  # Optional: Rate limit for API calls
# max_execution_time=None,  # Optional: Maximum execution time in seconds
# max_retry_limit=2,  # Default: 2 retries on error
# allow_code_execution=False,  # Default: False
# code_execution_mode="safe",  # Default: "safe" (options: "safe", "unsafe")
# respect_context_window=True,  # Default: True
# use_system_prompt=True,  # Default: True
# tools=[tavily_search_tool, web_crawler_tool],  # Optional: List of tools
# knowledge_sources=None,  # Optional: List of knowledge sources
# embedder=None,  # Optional: Custom embedder configuration
# system_template=None,  # Optional: Custom system prompt template
# prompt_template=None,  # Optional: Custom prompt template
# response_template=None,  # Optional: Custom response template
# step_callback=None,  # Optional: Callback function for monitoring

An agent can have access to multiple tools. Each tool class carries a description of how it can be used, and the agent decides to call the tool when it deems it suitable. For our use case we will create the tools our agents need.

Creating the Tavily Search Tool

Now that you have integrated the Maxim AI SDK into your workflow, we will build the search tool that lets our agents search the internet. The tool uses the Tavily Search API to find relevant information, and its API limit is decent enough for you to experiment with search-integrated LLM applications.

from typing import Literal


class TavilySearchInput(BaseModel):
    query: Annotated[str, Field(description="The search query string")]
    max_results: Annotated[
        int, Field(description="Maximum number of results to return", ge=1, le=10)
    ] = 5
    # Literal restricts the value to the two depths named in the description
    search_depth: Annotated[
        Literal["basic", "advanced"],
        Field(description="Search depth: 'basic' or 'advanced'"),
    ] = "basic"


class TavilySearchTool(BaseTool):
    name: str = "Tavily Search"
    description: str = (
        "Use the Tavily API to perform a web search and get AI-curated results."
    )
    args_schema: Type[BaseModel] = TavilySearchInput
    client: Optional[Any] = None

    def __init__(self, api_key: Optional[str] = None):
        super().__init__()
        # Fall back to the key loaded from .env when none is passed in
        self.client = TavilyClient(api_key=api_key or os.getenv("TAVILY_API_KEY"))

    def _run(self, query: str, max_results=5, search_depth="basic") -> str:
        if not self.client.api_key:
            raise ValueError("TAVILY_API_KEY environment variable not set")
        try:
            response = self.client.search(
                query=query, max_results=max_results, search_depth=search_depth
            )
            output = self._process_response(response)

            # Send a trace with the tool call details to Maxim
            trace = logger.trace(TraceConfig(
                id=str(uuid.uuid4()),
                name="Tavily Search Trace",
            ))
            tool_call_config = ToolCallConfig(
                id='tool_tavily_search',
                name='Tavily Search',
                description="Use the Tavily API to perform a web search and get AI-curated results.",
                args={"query": query, "output": output, "max_results": max_results, "search_depth": search_depth},
                tags={"tool": "tavily_search"},
            )
            trace_tool_call = trace.tool_call(tool_call_config)
            return output
        except Exception as e:
            return f"An error occurred while performing the search: {str(e)}"

    def _process_response(self, response: dict) -> str:
        if not response.get("results"):
            return "No results found."
        results = []
        for item in response["results"][:5]:  # Limit to top 5 results
            title = item.get("title", "No title")
            content = item.get("content", "No content available")
            url = item.get("url", "No URL available")
            results.append(f"Title: {title}\nContent: {content}\nURL: {url}\n")
        return "\n".join(results)


tavily_search_tool = TavilySearchTool()

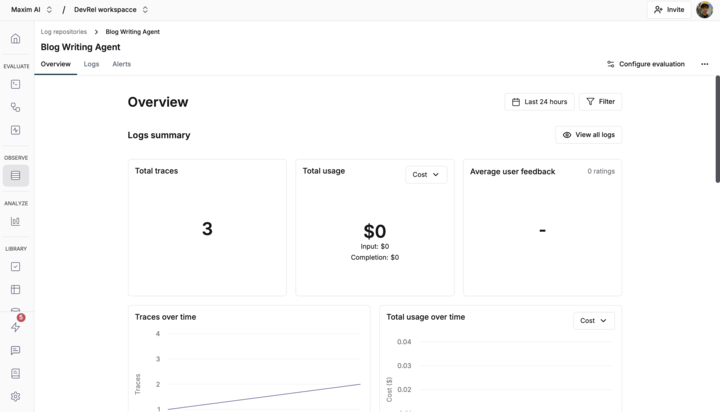

This implementation of the Tavily Search tool integrates Maxim AI’s log tracing, so you can see the information being passed to your agent directly in the Maxim dashboard. If you want to dive deeper into how Maxim AI log tracing works, refer to the docs at https://www.getmaxim.ai/docs/observe/concepts.

Implementing the Crawl4Ai Web Crawler Tool

Based on the search results returned by the Tavily API, we want our agent to gather more information from the websites fetched through search. To do this we use Crawl4AI, a web crawler capable of crawling multiple URLs, extracting their content in markdown format and gathering images from those pages. Since Crawl4AI organises the data as markdown, it is easy for LLMs to read the information and build context about the contents of the websites.

import markdown  # the "Markdown" package; converts markdown text to HTML
from bs4 import BeautifulSoup


class WebCrawlerTool(BaseTool):
    name: str = "Web Crawler"
    description: str = "Crawls websites and extracts text and images."

    async def crawl_url(self, url: str) -> Dict:
        """Crawl a URL and extract its content."""
        print(f"Crawling URL: {url}")
        try:
            browser_config = BrowserConfig()
            run_config = CrawlerRunConfig()
            async with AsyncWebCrawler(config=browser_config) as crawler:
                result = await crawler.arun(url=url, config=run_config)

            # Render the markdown to HTML so BeautifulSoup can find <img> tags
            page_markdown = str(result.markdown)
            images = []
            soup = BeautifulSoup(markdown.markdown(page_markdown), 'html.parser')
            for img in soup.find_all('img'):
                if img.get('src'):
                    images.append({
                        "url": img.get('src'),
                        "alt": img.get('alt', ''),
                        "source_url": url
                    })

            return {
                "content": page_markdown,
                "images": images,
                "url": url,
                # metadata may be missing, so guard before reading the title
                "title": (result.metadata or {}).get("title", "")
            }
        except Exception as e:
            return {"error": f"Failed to crawl {url}: {str(e)}", "url": url}

    def _run(self, urls: List[str]) -> Dict[str, Any]:
        """Implements the abstract _run method to integrate with Crew AI."""
        print(f"Crawling {len(urls)} URLs")
        results = {}

        async def process_urls():
            # Crawl all URLs concurrently
            tasks = [self.crawl_url(url) for url in urls]
            return await asyncio.gather(*tasks)

        crawl_results = asyncio.run(process_urls())
        for result in crawl_results:
            url = result.get("url")
            if url:
                results[url] = result
        return results


# Instantiate tool for use in Crew AI
web_crawler_tool = WebCrawlerTool()
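The crawler tool fans out one coroutine per URL and waits for all of them with `asyncio.gather`. The standard-library sketch below isolates that pattern with a fake crawl function, so you can see how results map back to URLs and how one failing page does not sink the rest. `fake_crawl` and `crawl_all` are hypothetical stand-ins, not Crawl4AI functions.

```python
import asyncio
from typing import Dict, List


async def fake_crawl(url: str) -> Dict[str, str]:
    """Stand-in for a real crawl; fails for URLs containing 'bad'."""
    await asyncio.sleep(0.01)  # simulate network latency
    if "bad" in url:
        raise RuntimeError("simulated crawl failure")
    return {"url": url, "content": f"markdown for {url}"}


async def crawl_all(urls: List[str]) -> Dict[str, Dict[str, str]]:
    """Crawl all URLs concurrently and key the results by URL."""
    outcomes = await asyncio.gather(
        *(fake_crawl(url) for url in urls), return_exceptions=True
    )
    results: Dict[str, Dict[str, str]] = {}
    # gather returns results in the same order as the awaitables passed in
    for url, outcome in zip(urls, outcomes):
        if isinstance(outcome, Exception):
            results[url] = {"url": url, "error": str(outcome)}
        else:
            results[url] = outcome
    return results


demo = asyncio.run(crawl_all(["https://a.example", "https://bad.example"]))
print(demo)
```

Run as a plain script this works as is. Inside a notebook, `asyncio.run` needs `nest_asyncio.apply()` first because the notebook already has an event loop running.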

Creating the File Saver Tool

The file saver tool allows our agents to save created files locally:

class FileSaverTool(BaseTool):
    name: str = "File Saver"
    description: str = "Saves a file to a specified local folder."

    def _run(self, file_content: str, save_folder: str, file_name: str) -> str:
        """Save the given content as a file in the specified folder."""
        try:
            # Create the folder (and any parents) if it does not exist yet
            os.makedirs(save_folder, exist_ok=True)
            save_path = os.path.join(save_folder, file_name)
            with open(save_path, 'w', encoding='utf-8') as file:
                file.write(file_content)
            return f"File saved at {save_path}"
        except Exception as e:
            return f"Error saving file: {str(e)}"


# Instantiate tool for use in Crew AI
file_saver_tool = FileSaverTool()
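Because the file name comes from an LLM, you may want to guard against names like `../something` that would write outside the target folder. Here is a small sketch of that idea using only the standard library. `save_text` is a hypothetical helper, not part of CrewAI, and the rule it enforces (bare file names only) is my assumption about what is reasonable for this project.

```python
import tempfile
from pathlib import Path


def save_text(file_content: str, save_folder: str, file_name: str) -> Path:
    """Write content to save_folder/file_name, refusing nested or parent paths."""
    # Path(...).name strips any directory components such as "../"
    safe_name = Path(file_name).name
    if not safe_name or safe_name == ".." or safe_name != file_name:
        raise ValueError(f"file_name must be a bare file name, got {file_name!r}")
    folder = Path(save_folder)
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / safe_name
    target.write_text(file_content, encoding="utf-8")
    return target


with tempfile.TemporaryDirectory() as tmp:
    saved = save_text("# Hello", tmp, "post.md")
    saved_text = saved.read_text(encoding="utf-8")
    print(saved.name, saved_text)
```

The same check could be dropped into the tool's `_run` method before the file is opened.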

Building the Agents

CrewAI lets us create AI agents simply by defining a few parameters. Like all of us, each agent has a role, a goal and a backstory, which we define along with a few other parameters depending on the individual agent’s role.

Our Research Agent needs the Tavily Search tool and the Web Crawler tool to conduct thorough research on a topic. With CrewAI, building an agent is as simple as defining an agent schema; CrewAI handles most of the complexity. Here is the code for the Research Agent:

research_agent = Agent(
    role="Technical Researcher",
    goal="Search the web for information & blogs on the topic of {topic} provided by the user and extract the findings in a structured format.",
    backstory="With over 10 years of experience in technical research, you can help users find the most relevant information on any topic.",
    llm=llm,
    memory=True,  # Default: True
    verbose=True,  # Default: False
    tools=[tavily_search_tool, web_crawler_tool],  # Optional: List of tools
    # Every other parameter from the list above is left at its default
)

Similarly, we define the outline, writer, critique, revision and export agents:

outline_agent = Agent(
    role="Tech Content Outlining Expert",
    goal="Create an outline for a technical blog post on the topic of {topic} provided by the user",
    backstory="With years of experience in creating technical content, you can help the user outline their blog post on any topic.",
    memory=True,  # Default: True
    verbose=True,  # Default: False
    llm=llm,
    tools=[],  # Optional: List of tools
)

writer_agent = Agent(
    role="Tech Content Writer",
    goal="Write a technical blog post on the topic of {topic} provided by the user",
    backstory="With years of experience in writing technical content, you can help the user create a high-quality blog post on any topic in markdown format. You can also include images in the blog post & code blocks.",
    memory=True,  # Default: True
    verbose=True,  # Default: False
    llm=llm,
    tools=[],  # Optional: List of tools
)

critique_agent = Agent(
    role="Tech Content Critique Expert",
    goal="Critique a technical blog post written by the writer agent",
    backstory="With years of experience in critiquing technical content, you can help the user improve the quality of the blog post written by the writer agent.",
    memory=True,  # Default: True
    verbose=True,  # Default: False
    llm=llm,
    tools=[],  # Optional: List of tools
)

revision_agent = Agent(
    role="Tech Content Revision Expert",
    goal="Revise a technical blog post based on the critique feedback provided by the critique agent",
    backstory="With years of experience in revising technical content, you can help the user implement the feedback provided by the critique agent to improve the quality of the blog post.",
    memory=True,  # Default: True
    verbose=True,  # Default: False
    llm=llm,
    tools=[],  # Optional: List of tools
)

export_agent = Agent(
    role="Blog Exporter",
    goal="Export the final blog post in markdown format in the folder location provided by the user - {folder_path}",
    backstory="With experience in exporting technical content, you can help the user save the final blog post in markdown format to the specified folder location.",
    memory=True,  # Default: True
    verbose=True,  # Default: False
    llm=llm,
    tools=[file_saver_tool],  # Optional: List of tools
)

Now that we have created the agents and the tools, we need to assign a task to each agent. A task tells the agent which actions to perform. For example, the Research Agent needs to research a topic in detail by running web searches and crawling the websites it finds.

Defining the Tasks

Research Task - Conduct web searches and crawl relevant web pages for information:

research_task = Task(
    description="""
    Conduct thorough research on the topic {topic} provided by the user.
    Make sure you find any interesting and relevant information given
    the current year is 2025. Use the Tavily Search tool to find the most
    trending articles around the topic and use the Web Crawler tool to
    extract the content from the top articles.
    """,
    expected_output="""
    You should maintain a detailed raw content with all the findings. This should include the
    extracted content from the top articles.
    """,
    agent=research_agent
)

Outline Task - Create a structured outline and logical flow for the blog:

outline_task = Task(
    description="""
    Create a structured outline for the technical blog post based on the research data.
    Ensure logical flow, clear sections, and coverage of all essential aspects. Plan for necessary headings,
    tables & figures, and key points to be included in the blog post.

    The flow of every blog should be like this -
    - Catchy Title
    - 2-3 line introduction / opening statement
    - Main Content (with subheadings)
    - Conclusion
    - References - Provide all the links that you have found during the research.

    At any place if there is a need to draw an architecture diagram, you can put a note like this -
    --- DIAGRAM HERE ---
    --- EXPLANATION OF DIAGRAM ---
    """,
    expected_output="""
    A markdown-styled hierarchical outline with headings, subheadings, and key points.
    """,
    agent=outline_agent
)

Writing Task - Generate the full markdown blog post, including relevant code snippets and images:

writing_task = Task(
    description="""
    Write a detailed technical blog post in markdown format, integrating research insights.
    Keep the tone as first person and maintain a conversational style. The writer is young and enthusiastic
    about technology but also knowledgeable in the field.

    Include code snippets and ensure clarity and depth. Use the outline as a guide to structure the content.
    Make sure to include required tables, comparisons and references.
    """,
    expected_output="""
    A well-structured, easy-to-read technical blog post in markdown format.
    """,
    agent=writer_agent
)

Critique Task - Review the blog post for accuracy, clarity, and completeness:

critique_task = Task(
    description="""
    Review the blog post critically, checking for technical accuracy, readability, and completeness.
    Provide constructive feedback with clear suggestions for improvement.
    Check that the content is engaging and not boring to read. Necessary tables and figures should be included.

    The final article that is approved should be production ready; it cannot contain any
    drafts or questions from the LLM. It may only contain the diagram placeholder.
    """,
    expected_output="""
    A markdown document with detailed feedback and proposed changes.
    """,
    agent=critique_agent
)

Revision Task - Improve the blog post based on feedback from the critique agent:

revision_task = Task(
    description="""
    Revise the blog post based on critique feedback, ensuring higher quality and clarity.
    """,
    expected_output="""
    An improved version of the markdown blog post incorporating all necessary changes.
    """,
    agent=revision_agent
)

Export Task - Save the final markdown file to a designated folder:

export_task = Task(
    description="""
    Save the final blog post in markdown format to the specified folder location.
    """,
    expected_output="""
    A markdown file stored at the designated location in the same folder.
    """,
    agent=export_agent
)

Defining the Crew

Now that we have created the agents, defined their tools and assigned their tasks, we need to define the crew so that our agents can work in tandem and generate blogs on a topic of our choice. Here is how we define the crew in our project:

crew = Crew(
    agents=[research_agent, outline_agent, writer_agent, critique_agent, revision_agent, export_agent],
    tasks=[research_task, outline_task, writing_task, critique_task, revision_task, export_task],
    chat_llm=llm,
    manager_llm=llm,
    planning_llm=llm,
    function_calling_llm=llm,
    verbose=True
)

CrewAI allows different LLMs for different functions of the crew. Since we are using a single Gemini model in this project, we use the same model for chat, management, planning and function calling. If you are building something that needs different models for different functions in the crew, you can use this feature of CrewAI.
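As far as I understand CrewAI’s defaults, a crew created without a `process` argument runs its tasks sequentially in the order they are listed, and each task’s output becomes context for the next one. The toy sketch below shows that hand-off in plain Python. `run_sequential` and the lambda "agents" are hypothetical stand-ins and say nothing about how CrewAI implements it internally.

```python
from typing import Callable, List, Tuple

# Each "task" is a name plus a function from the previous output to a new one
Step = Tuple[str, Callable[[str], str]]


def run_sequential(steps: List[Step], initial_input: str) -> List[Tuple[str, str]]:
    """Run steps in order, feeding each step the output of the one before."""
    outputs: List[Tuple[str, str]] = []
    current = initial_input
    for name, func in steps:
        current = func(current)
        outputs.append((name, current))
    return outputs


# Toy stand-ins for agents; real agents would call an LLM here
steps: List[Step] = [
    ("research", lambda topic: f"notes on {topic}"),
    ("outline", lambda notes: f"outline from [{notes}]"),
    ("write", lambda outline: f"draft following [{outline}]"),
]

history = run_sequential(steps, "AI agents")
for name, output in history:
    print(f"{name}: {output}")
```

This is also why the order of the `tasks` list matters: put the critique before the writing task and the critic has nothing to review.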

Running our Crew of Agents

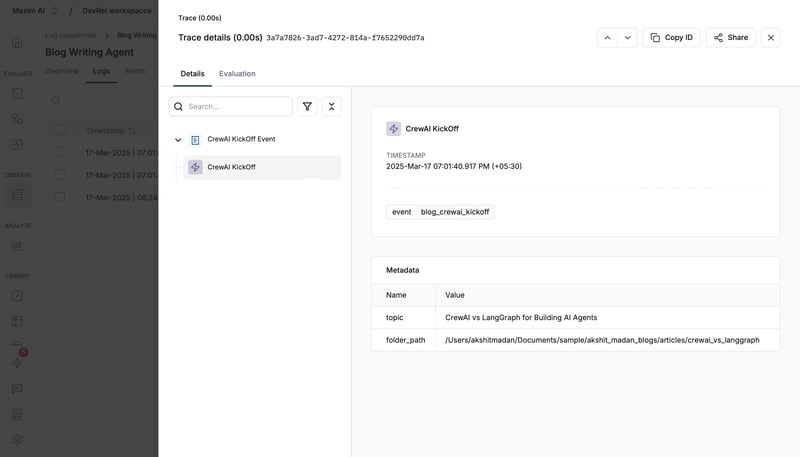

With every part of the crew in place, we can start it and have it generate well-researched blogs for us. Before we do that, we add event tracing with the Maxim AI SDK so that an event is logged every time the workflow runs. This lets us trace and evaluate user experience, log agent quality issues and spot potential problems in our system. Paired with log tracing, event tracing gives much better observability in LLM applications and helps you understand the internal flow of non-deterministic systems like the one we are building.

inputs = {
    "topic": "CrewAI vs LangGraph for Building AI Agents",
    "folder_path": "/Users/akshitmadan/Documents/sample/akshit_madan_blogs/articles/crewai_vs_langgraph",
}

trace = logger.trace(TraceConfig(
    id=str(uuid.uuid4()),
    name="CrewAI KickOff Event",
))

trace.event(
    id=str(uuid.uuid4()),
    name="CrewAI KickOff",
    metadata=inputs,
    tags={"event": "blog_crewai_kickoff"},
)

# Pass inputs so the {topic} and {folder_path} placeholders get filled in
crew_output = crew.kickoff(inputs=inputs)
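The `{topic}` and `{folder_path}` placeholders in the agent goals and task descriptions are filled from the `inputs` dictionary given to `crew.kickoff(inputs=...)`, so a missing key means an agent is working from an unfilled template. A quick pre-flight check with the standard library can catch that before you spend API calls. `placeholders` and `missing_inputs` are hypothetical helper names for this sketch.

```python
from string import Formatter
from typing import Dict, Iterable, Set


def placeholders(template: str) -> Set[str]:
    """Return the names of all {placeholders} found in a template string."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def missing_inputs(templates: Iterable[str], inputs: Dict[str, str]) -> Set[str]:
    """Return placeholder names that have no matching key in inputs."""
    needed: Set[str] = set()
    for template in templates:
        needed |= placeholders(template)
    return needed - set(inputs)


templates = [
    "Search the web for information & blogs on the topic of {topic}",
    "Export the final blog post to {folder_path}",
]
print(missing_inputs(templates, {"topic": "CrewAI vs LangGraph"}))
# → {'folder_path'}
```

In the notebook you could feed it the `goal` and `description` strings of your agents and tasks, together with the same `inputs` dictionary you plan to use for the kickoff.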

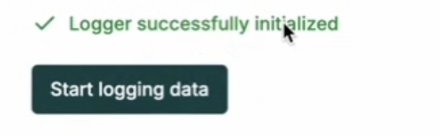

When you run the last block of code, you should see logs being stored in your Maxim AI dashboard, including both the log traces and the event traces.

Final Output

Our AI agents are ready to start churning out content. Here is a sample of a blog written by the AI agents we just built:

from IPython.display import display_markdown

# tasks_output holds one result per task; .raw is that task's text output
for task_output in crew_output.tasks_output:
    print("********************************************")
    display_markdown(task_output.raw, raw=True)

# CrewAI vs. LangGraph: A Comparative Analysis for Building AI Agents

**Introduction:**

Building sophisticated AI agents requires robust frameworks capable of managing complex interactions and task delegation. CrewAI and LangGraph are two prominent contenders in this space, each offering unique strengths and weaknesses. This blog post compares these frameworks, highlighting their key features, use cases, and suitability for different development scenarios. We'll explore their ease of use, multi-agent capabilities, tool integrations, and more, helping you choose the right framework for your project. Note that some claims in the source material require further verification through direct testing and documentation review.

**Main Content:**

## 1. Framework Overview: CrewAI vs. LangGraph

Let's start with a high-level overview of each framework:

* **CrewAI:** CrewAI prioritizes ease of use and efficient task delegation among multiple agents. Its structured, role-based approach simplifies agent organization, making it ideal for beginners and projects requiring streamlined multi-agent workflows. Its strength lies in straightforward task management, particularly well-suited for e-commerce applications (sales, customer service, inventory).

* **Key Features:** User-friendly interface, efficient task management, role-based agent organization, strong support for structured outputs, LangChain integration (needs verification).

* **Strengths:** Ease of use, efficient multi-agent coordination, robust documentation (needs verification).

* **Weaknesses:** May be less flexible for highly complex or unconventional agent interactions (needs further investigation).

* **LangGraph:** LangGraph employs a graph-based approach to represent and manage agent interactions. This makes it exceptionally well-suited for complex scenarios with intricate relationships between agents and tasks. It's a powerful choice for software development projects involving code generation and multi-agent coding workflows where visualizing and managing complex interactions is critical.

* **Key Features:** Graph-based agent representation, excellent for complex multi-agent interactions, strong support for structured outputs, LangChain integration (needs verification), advanced memory support.

* **Strengths:** Handles complex agent relationships effectively, powerful visualization capabilities, advanced memory features.

* **Weaknesses:** Steeper learning curve compared to CrewAI (needs verification).

## 2. Feature Comparison: A Detailed Look

This table provides a detailed comparison of key features. Claims requiring further verification are noted.

| Feature | CrewAI | LangGraph | Notes |
|-----------------|---------------------------------------|--------------------------------------|-------------------------------------------------------------------------|
| Ease of Use | High (reported) | Moderate (reported) | Requires user testing and feedback for validation. |
| Multi-Agent Support | Excellent, role-based | Excellent, graph-based | Both excel, but the approaches differ significantly impacting workflow. |
| Tool Integration | Strong (LangChain integration needs verification) | Strong (LangChain integration needs verification) | Requires testing and documentation review to confirm integration specifics. |
| Memory Support | Advanced (reported) | Advanced (reported) | Requires investigation into specific mechanisms and performance benchmarks. |
| Scalability | Requires benchmarking | Requires benchmarking | Benchmarking is necessary to determine scalability limits. |
| Documentation | Extensive (needs verification) | Extensive (needs verification) | Requires verification through direct examination of documentation. |
| Use Cases | E-commerce automation, simpler tasks | Software development, complex workflows| Illustrative examples; actual use cases require further exploration. |

## 3. Choosing the Right Framework

The optimal framework depends entirely on your project's specific needs and complexity:

* **Choose CrewAI if:** You prioritize ease of use and need a streamlined approach for managing multi-agent systems with relatively straightforward interactions, particularly in areas like e-commerce automation where role-based organization is beneficial.

* **Choose LangGraph if:** Your project involves complex agent interactions requiring detailed visualization and management of intricate relationships between agents and tasks, particularly suitable for software development or other complex workflows.

## 4. Future Directions and Considerations

This comparison provides a foundation for understanding CrewAI and LangGraph. Future work should include:

* **Benchmarking:** Rigorous performance testing to compare scalability, speed, and resource utilization under various workloads.

* **User Studies:** Gathering user feedback on ease of use, learning curve, and overall user experience.

* **Integration Depth:** A deeper exploration of specific integrations with other tools and libraries (beyond LangChain).

* **Community Analysis:** Assessing the size and activity of each framework's community for support and resource availability.

--- DIAGRAM HERE ---

--- EXPLANATION OF DIAGRAM - A Venn diagram showing the overlapping and unique features of CrewAI and LangGraph. For example, "Ease of Use" would be heavily weighted towards CrewAI, while "Complex Workflow Management" would be heavily weighted towards LangGraph. The overlapping section would represent features common to both. ---

**Conclusion:**

Both CrewAI and LangGraph are valuable tools for building AI agents. The best choice depends on the project's complexity and developer preferences. Careful consideration of the features discussed, coupled with further research and testing, will guide developers to the most appropriate framework for their specific needs.

**References:**

* [1] https://www.linkedin.com/pulse/agentic-ai-comparative-analysis-langgraph-crewai-hardik-shah-ujdrc

* [2] https://medium.com/@aydinKerem/which-ai-agent-framework-i-should-use-crewai-langgraph-majestic-one-and-pure-code-e16a6e4d9252

* [3] https://oyelabs.com/langgraph-vs-crewai-vs-openai-swarm-ai-agent-framework/

* [4] https://www.galileo.ai/blog/mastering-agents-langgraph-vs-autogen-vs-crew

* [5] https://www.gettingstarted.ai/best-multi-agent-ai-framework

Where do we go from here?

So, we have assembled our scrappy band of agents, given them tasks and hopefully watched them churn out some content without burning the place down. Congrats! You have a basic agentic workflow that can research, write, critique, revise and export blogs like a Red Bull-chugging team of unpaid interns.

But this is just level 1. Agentic systems can be super powerful and versatile! Where to go next? Totally up to you.

- Make your crew smarter (or dumber if that’s your thing) - give your writer agent more creative freedom or make your critique agent less harsh (unless you enjoy pain)

- Make it rich with media - currently it’s all text, but you could easily add AI-generated images or image search to the blog generator. You can also use Mermaid to add technical diagrams to your blog!

- Hook in ElevenLabs or a TTS model to turn your blogs into podcasts.

- Log agent reasoning traces to Maxim and up your observability game

- Have agents debate with each other to decide on the topic or the title

- Add multiple writers with different vibes and let them write content that’s more engaging.

The possibilities are endless, with your own agentic system you can experiment and learn more about how agents can help you complete monotonous tasks faster. Push the limits, break things (safely) and share the experiments with the community.

Oh, and if you don’t want your crew going rogue? Keep an eye on them. Maxim AI (www.getmaxim.ai) is there for you when your agents start showing signs of existential dread or decide they’re better off freelancing.

And for those who have been waiting for the full code - Fork my notebook here: https://github.com/dskuldeep/blog-writing-agent.git.

Show me what you build in the comments!

Top comments (4)

Impressive, but you can't use AI (exclusively) to generate high quality content. You can create correct content, you can create informational content, but you can't create content human beings want to read. If I want information, I'll just ask ChatGPT, so when reading articles I want your opinion. ChatGPT or other AIs will never give me "your opinion".

Psst, I should know, this is my company ==> ainiro.io

hey this is very helpful! thanks for sharing. going to fork your notebook and build my crew

Very Helpful!! Keep posting more
