Augmented Reality (AR) is an innovative area of technology, with global leaders such as Google, Microsoft and Apple actively investing in it. Have you heard the rumour about Apple Glass?

AR uses computer vision-based recognition algorithms to overlay sound, video, graphics and other sensor-based inputs onto real-world objects and environments, providing an intuitive digital layer that helps with real-world problems. For example, AR applications in medicine are used to reduce the risk of an operation by giving surgeons improved sensory perception during procedures.

AR is expected to reshape many industries, such as education, entertainment, branding and, in particular, commerce, by creating better, more memorable customer experiences.

The growth of AR is hard to ignore, with mobile games such as ‘Pokémon GO’ having been downloaded over 1 billion times since its release in 2016. The number of mobile AR users is projected to grow to 3.5 billion by 2022. 😳

AR can be broken down into 3 types:

- Mobile: Real-time video is streamed to a smartphone, where AR graphics are superimposed over the entire screen (e.g. Snapchat filters).

- Optical See-Through Head-Mounted Display (HMD): Wearable computer glasses that add information alongside what the wearer sees in the real world (e.g. Google Glass, HoloLens).

- Video See-Through HMD: Real-time video is streamed to the user, where AR graphics are superimposed over the entire field of view (e.g. ZED Mini).

In my final year project, I explored the capabilities of AR on video see-through HMDs. This area has seen significantly less research and development than mobile AR and smart glasses. Smart glasses only offer a small field of view for AR graphics; if you've ever tried the HoloLens, you'll know about the "letterbox" effect. AR on VR headsets enables fully immersive experiences, offering the largest field of view currently available in AR development.

Project Overview 👩‍💻

To showcase the capabilities of AR on video see-through HMDs, this project followed a lean methodology. I ran a series of investigations and research to develop an end system that used the best technologies available in the given time frame. The following investigations and demos were produced:

- Investigation: ZED Mini vs Vive Pro front-facing cameras

- Investigation: Real-time Colour Segmentation in Python

- Demo: Spatial Mapping and Positional Tracking on the ZED Mini

- Demo: Interactive AR Unity scene on the ZED Mini and Vive VR headset

- Demo: Real-time Object Detection on the ZED Mini (YOLOv3, SSD and Faster R-CNN)

How I Built it 🛠

I'll spare you the details. Let's be real, no one wants to read all 40 pages of my dissertation.

ZED Mini vs Vive Pro front-facing cameras

The left image in the Unity screenshot below shows a frame from the ZED Mini. This is a stereo camera developed by Stereolabs. Attached to a VR headset (Oculus Rift, Rift S, HTC Vive or Vive Pro), it adds AR capabilities through Stereolabs' Unity plugin and SDK. Within minutes I was able to superimpose a floating white sphere in the centre of the room that stayed in place thanks to positional tracking. Shoutout to Stereolabs for fantastic documentation. 👏👏

The right image in the Unity screenshot below shows a frame from the Vive Pro's front-facing cameras. This was captured using Vive's SRWorks SDK, which is also built for AR capabilities. This was an absolute nightmare to work with. The beta trial version didn't work on Nvidia's Turing architecture, so I had to request exclusive access to an unreleased edition #justRTX2080problems. Working with a beta trial with little to no documentation would have been hard enough, let alone unreleased software.

This investigation made for a pretty easy design decision (surprise, the ZED Mini won by a landslide 😱). It offered lower latency (60 ms vs 200 ms), greater depth-perception range (12 meters vs 2.5 meters), higher streaming resolution (720p vs 480p) and better developer documentation.

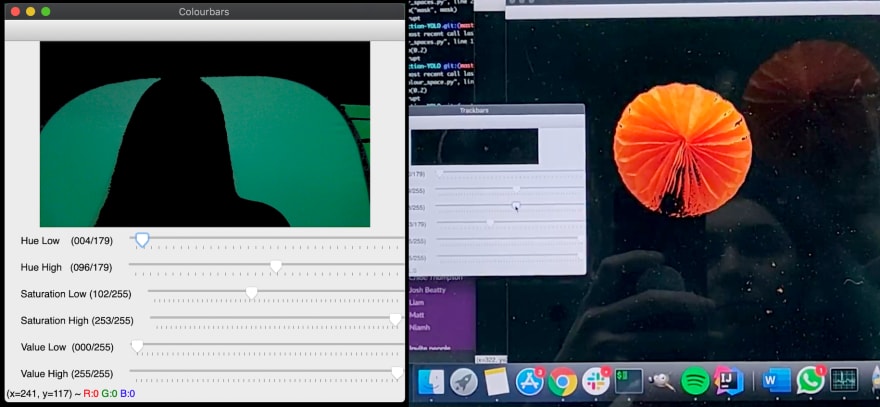

Real-time Colour Segmentation in Python

This project explored colour segmentation as a way to investigate real-time manipulation of real-world objects. Colour segmentation separates all objects of the same colour from the rest of the image. This suggests that specific objects in a video stream could be isolated and modified.

A Python colour segmentation system was developed to prove this concept, following Sentdex’s 'Colour Filtering OpenCV Python' tutorial, as well as their 'Blurring and Smoothing OpenCV Python' tutorial. The program takes live webcam input and provides sliders for the user to select an upper and lower boundary for hue, saturation and value.
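The core of that program is a per-pixel HSV threshold. The sketch below reproduces that step in plain NumPy so it runs without a webcam. It mirrors what OpenCV's `cv2.inRange` and `cv2.bitwise_and(frame, frame, mask=mask)` do after `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`. A few things here are my assumptions and not from the tutorial:

- The names `hsv_in_range` and `apply_mask` are hypothetical.
- OpenCV's 8-bit HSV ranges are assumed: H from 0 to 179, S and V from 0 to 255.
- The slider and webcam loop are left out. In OpenCV these would use `cv2.createTrackbar`, `cv2.getTrackbarPos` and `cv2.VideoCapture`.

```python
import numpy as np


def hsv_in_range(hsv: np.ndarray, lower, upper) -> np.ndarray:
    """Return a uint8 mask: 255 where every H, S, V value lies within [lower, upper], else 0.

    Intended to mirror cv2.inRange on an 8-bit, 3-channel HSV image.
    """
    lower = np.asarray(lower, dtype=hsv.dtype)
    upper = np.asarray(upper, dtype=hsv.dtype)
    # Bounds are inclusive on both ends; a pixel must pass on all three channels.
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255


def apply_mask(frame: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep pixels where the mask is non-zero and black out everything else."""
    # mask[..., None] broadcasts the 2D mask across the colour channels.
    return np.where(mask[..., None] > 0, frame, 0).astype(frame.dtype)
```

In the live version, the lower and upper bounds would be read from the six sliders on every frame. Blurring the mask (e.g. with `cv2.medianBlur`) is one way to smooth out the speckled noise that the blurring tutorial deals with.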

Here's me in front of a green screen on the left, and me waving around an orange pumpkin decoration on the right (it was Halloween ok 🎃). Although this component served as a proof of concept for real-time real-world object manipulation, this code wasn't used in the final system.

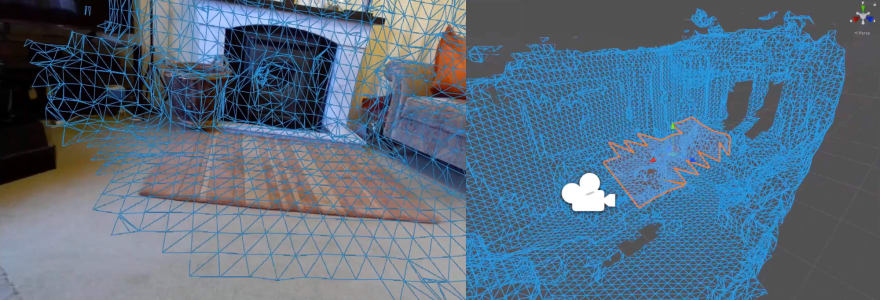

Spatial Mapping and Positional Tracking on the ZED Mini

To create an interactive AR scene, spatial mapping and positional tracking were required to link the real and virtual worlds. To do this, the ZED SDK and ZED Unity plugin were used to configure a ZED Manager component in Unity, which spatially mapped my environment (cough, living room, cough).

This created a mesh that represented the geometry of the room by its surfaces, using a web of watertight triangles defined by vertices and faces, which can be further textured and filtered. Parameters such as range (up to 12 meters) and mapping resolution (2 cm to 8 cm) could also be configured, which was pretty neat.
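The sketch below makes "triangles defined by vertices and faces" concrete. It shows a generic, minimal version of that representation: a list of 3D vertices, plus faces that each point at three of those vertices by index. This is not the ZED SDK's mesh class, and `surface_area` and `is_watertight` are hypothetical helpers. The watertight check uses the textbook definition, where every edge is shared by exactly two triangles. That may be stricter than what a scanned room mesh actually guarantees.

```python
import math
from collections import Counter

Vertex = tuple[float, float, float]
Face = tuple[int, int, int]  # three indices into the vertex list


def triangle_area(a: Vertex, b: Vertex, c: Vertex) -> float:
    """Half the length of the cross product of two edge vectors."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def surface_area(vertices: list[Vertex], faces: list[Face]) -> float:
    """Total area of all triangles in the mesh (metres squared if vertices are in metres)."""
    return sum(triangle_area(vertices[i], vertices[j], vertices[k]) for i, j, k in faces)


def is_watertight(faces: list[Face]) -> bool:
    """True if every undirected edge is shared by exactly two faces (a closed surface)."""
    edge_counts = Counter()
    for i, j, k in faces:
        for a, b in ((i, j), (j, k), (k, i)):
            edge_counts[(min(a, b), max(a, b))] += 1
    return all(count == 2 for count in edge_counts.values())
```

Storing faces as indices means neighbouring triangles share vertices instead of duplicating them. That shared structure is what makes edge-based checks like this one cheap.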

This process worked well on large flat surfaces such as floors and walls, as well as simple shapes such as sofa curves. The spatial mapping didn’t handle areas of glass very well due to their reflections, as seen with the fireplace door which looks like a vortex to the underworld. Once this mesh was created, individual chunks could be manipulated within Unity.

Positional tracking goes hand in hand with spatial mapping. The ZED Mini uses visual tracking to understand its movement through an environment. As the camera moves IRL, it reports its position and orientation, known as its six degrees of freedom (6DoF) pose. You can retrieve data such as angular velocity, orientation, translation and acceleration, which can be useful for a variety of use cases.
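A 6DoF pose is a translation plus a rotation. The sketch below shows how such a pose maps a point from the camera's frame into the world frame. This mapping is what keeps a virtual object pinned in place as the headset moves. Two things here are my assumptions:

- The rotation is stored as a unit quaternion in (w, x, y, z) order. SDKs differ on ordering, so check the convention before reusing this.
- The `Pose` class and its methods are hypothetical, not part of the ZED SDK.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical 6DoF pose: where the camera is (translation) and which way it faces (rotation)."""
    translation: tuple[float, float, float]      # metres, in the world frame
    rotation: tuple[float, float, float, float]  # unit quaternion (w, x, y, z), assumed ordering

    def rotate(self, v: tuple[float, float, float]) -> tuple[float, float, float]:
        """Rotate vector v using v' = v + w*t + u x t, where t = 2 (u x v) and u = (x, y, z)."""
        w, x, y, z = self.rotation
        vx, vy, vz = v
        # t = 2 * (u x v)
        t1 = 2.0 * (y * vz - z * vy)
        t2 = 2.0 * (z * vx - x * vz)
        t3 = 2.0 * (x * vy - y * vx)
        # v' = v + w * t + (u x t)
        return (
            vx + w * t1 + (y * t3 - z * t2),
            vy + w * t2 + (z * t1 - x * t3),
            vz + w * t3 + (x * t2 - y * t1),
        )

    def camera_to_world(self, p: tuple[float, float, float]) -> tuple[float, float, float]:
        """Map a point in the camera frame to the world frame: rotate first, then translate."""
        rx, ry, rz = self.rotate(p)
        tx, ty, tz = self.translation
        return (rx + tx, ry + ty, rz + tz)
```

For example, a camera at (1, 2, 3) turned 90° about the vertical z axis, with `rotation=(0.5 ** 0.5, 0.0, 0.0, 0.5 ** 0.5)`, sees a point 1 m ahead along its own x axis at roughly (1, 3, 3) in world coordinates.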

Interactive AR Unity scene on the ZED Mini and Vive VR headset ✨

Spatial mapping and positional tracking came into play when developing the interactive AR Unity scene. Using the ZED SDK, ZED Unity plugin and ZED Mini camera on a Vive VR headset, the user can walk around the room and interact with floating planets, a tree (with a hidden mushroom 🍄), a zombie bunny, a butterfly and a succulent (gotta love Unity's asset store freebies). One of the controllers has a pink torch effect, which makes clever use of spatial mapping by warping the pink light beam around real-world and digital objects.
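This post doesn't say how the torch effect is implemented, and in Unity the lighting engine takes care of it. As a rough geometric illustration of why a spatial mesh lets virtual light land on real furniture, the sketch below casts a ray against mesh triangles and returns the nearest hit. It uses the Möller–Trumbore ray-triangle intersection test. The function names are hypothetical, and this is not how Unity's renderer works internally.

```python
Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle_distance(origin: Vec3, direction: Vec3, a: Vec3, b: Vec3, c: Vec3, eps: float = 1e-9):
    """Möller–Trumbore: distance t along the ray to triangle abc, or None if the ray misses.

    t is measured in multiples of the direction vector's length.
    """
    e1, e2 = sub(b, a), sub(c, a)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, a)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def nearest_hit(origin: Vec3, direction: Vec3, vertices: list[Vec3], faces: list[tuple[int, int, int]]):
    """Return (t, face_index) for the closest triangle the ray hits, or None."""
    best = None
    for idx, (i, j, k) in enumerate(faces):
        t = ray_triangle_distance(origin, direction, vertices[i], vertices[j], vertices[k])
        if t is not None and (best is None or t < best[0]):
            best = (t, idx)
    return best
```

The hit point is `origin + t * direction`. Because the nearest triangle wins, a beam aimed at a sofa stops on the sofa's surface instead of passing through to the wall behind it.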

This setup creates a pretty immersive AR experience, and I'd imagine that with more computing power this demo could be made even more realistic. However, the ZED Mini's frame rate of 90 FPS at 480p is limited by its USB 3.0 bandwidth.

Lauren Taylor (@lornidon)

Spent the past few weeks experimenting with the @Stereolabs3D Zed Mini for my final year Computer Science project - Researching augmented reality on VR headsets 🌎

You can really see the spatial mapping come into play when you shine the pink torch around the room! #AR #VR

13:57, 05 Apr 2020

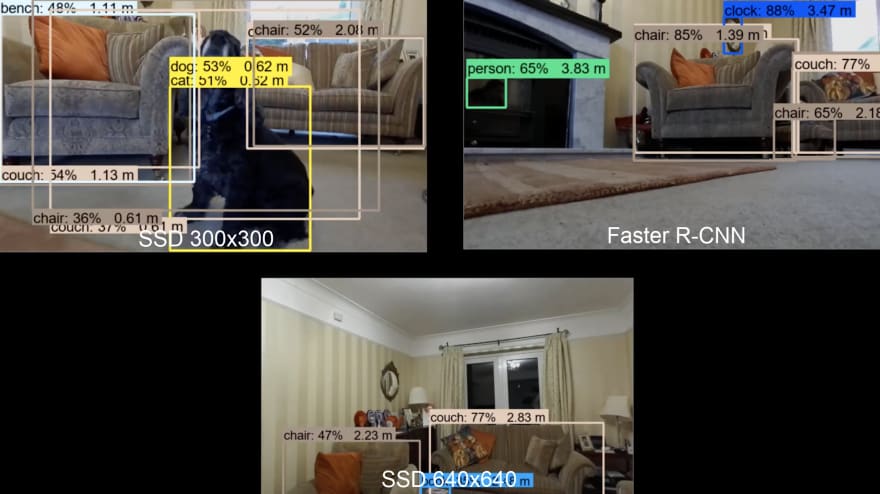

Real-time Object Detection on the ZED Mini (YOLOv3, SSD and Faster R-CNN)

The last component of this project explored real-time object detection on the ZED Mini's stream. This included an investigation into different pre-trained object detection models, including the algorithms YOLOv3, SSD and Faster R-CNN using PyTorch and TensorFlow's Object Detection API.

I tested a total of 7 YOLOv3 models via PyTorch, with various mAP values, input resolutions and weights/configs (to tiny yolo or not to tiny yolo 🤔). These produced some decent results! The image below shows a side-by-side comparison screenshot from a video of my top 4 YOLOv3 models.
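Input resolution matters so much for speed partly because of how YOLOv3 produces its candidate boxes:

- YOLOv3 predicts boxes on three grids, with strides of 32, 16 and 8 pixels.
- Each grid cell has 3 anchor boxes.
- Tiny YOLOv3 uses only the two coarser grids.

The back-of-the-envelope arithmetic below counts the raw candidate boxes per image for a square input size. The function name is hypothetical. It also ignores the backbone's compute, which grows with the number of input pixels too.

```python
def yolo_candidate_boxes(input_size: int, strides=(32, 16, 8), anchors_per_cell: int = 3) -> int:
    """Count raw box predictions for a square input: one grid per stride, anchors_per_cell boxes per cell."""
    if input_size <= 0 or any(input_size % s for s in strides):
        raise ValueError("input size must be a positive multiple of every stride")
    return sum(anchors_per_cell * (input_size // s) ** 2 for s in strides)


for size in (256, 320, 416, 608):
    full = yolo_candidate_boxes(size)                     # standard YOLOv3: strides 32, 16, 8
    tiny = yolo_candidate_boxes(size, strides=(32, 16))   # tiny YOLOv3: strides 32, 16
    print(f"{size}x{size}: YOLOv3 {full} boxes, tiny YOLOv3 {tiny} boxes")
```

At 416×416 that's 10,647 candidate boxes, versus 4,032 at 256×256. That's about 38% as many, matching the drop in input pixels. The trade-off is coarser grids, which can make small objects harder to pick up.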

I also tried two SSD models and a Faster R-CNN model using TensorFlow's Object Detection API. However, the frame rate was terrible with my project hardware setup, and they weren't anywhere near as accurate as the YOLOv3 models.

In conclusion, the best model was the YOLOv3 algorithm with an input image resolution of 256 and standard YOLOv3 weights/config. It consistently detected objects correctly, offered accurate localisation and had the lowest misclassification rate out of all the models at a decent 15 FPS.
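"Accurate localisation" is usually judged with intersection over union (IoU) between a predicted box and the true box. Detectors like YOLOv3 also use IoU in non-maximum suppression (NMS) to discard duplicate detections of the same object. Below is a minimal greedy NMS sketch for boxes in (x1, y1, x2, y2) pixel format. The 0.45 threshold is an illustrative default, not the value used in this project. Real pipelines typically run NMS separately for each class.

```python
def iou(a, b) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold: float = 0.45) -> list[int]:
    """Greedy NMS: visit boxes by descending score and keep each one that
    doesn't overlap an already-kept box by more than iou_threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

Greedy NMS is cheap and simple. The downside is that two genuinely different objects standing very close together can end up merged into one detection if their boxes overlap past the threshold.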

This component was definitely the hardest part of the project for me. If I got £1 for every dependency I had to manually install on Windows, I would not need this degree 😅👋 (I'm looking at you, Conda)

Thoughts & Feelings

The Learning Curve

I learnt a lot from this project: from project planning to independent learning, critical analysis, Windows (lol) and, most importantly, knowing when to pivot when a technology doesn't meet your requirements.

Going from zero knowledge of immersive technology to 40 pages of research showcasing the capabilities of AR on video see-through HMDs, I'm pretty happy with my results!

If anyone reading this wants to get into AR development but is intimidated by Unity and spooky incomprehensible documentation, same. Don't worry, everyone's just pretending to know wtf they're doing in this industry. Once you realise this, the world's your oyster! All aboard the ship of continuous learning and uncertainty, because the software you're working with right now probably won't exist in 10 years! 😜⛵️💨

Future of Video See-through HMDs

I would love to see more innovation in this area of AR! Apple, Google and Microsoft are chasing smart glasses into the sunset, but until their fields of view match those of video see-through technology, I feel video see-through is the best choice for full AR immersion. That being said, it won't be long until this happens, in which case AR developers have some super exciting and creative times to look forward to!

Thanks for reading! ❤️

Let me know your thoughts on AR - Which platform do you prefer? Mobile, optical see-through or video see-through?

Tell me your favourite and why in the comments! I wanna hear some juicy, opinionated discussions 🗣

GitHub Link 🔗

I'll be sure to upload my Unity AR scene soon for anyone interested 👀

Hit me up

Twitter: @lornidon

GitHub: @laurentaylor1

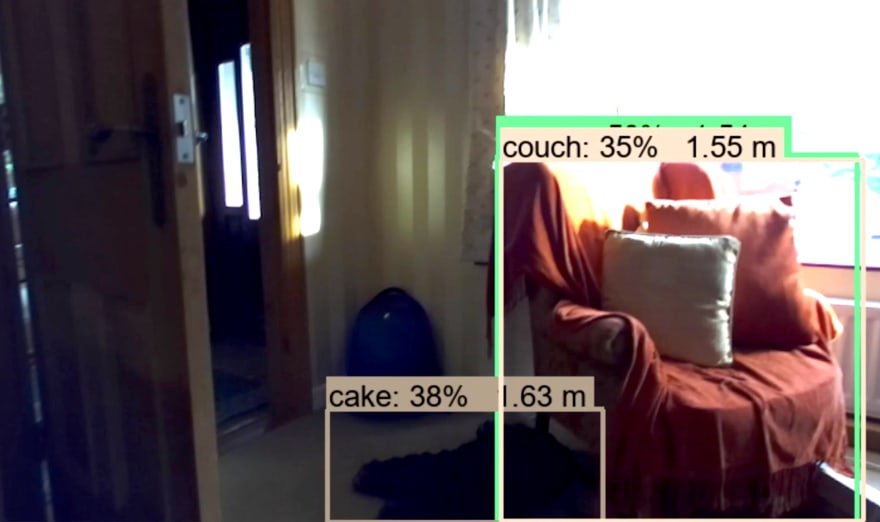

Stay safe, have a lovely week and enjoy this iconic screenshot of my dog being classified as 38% cake (where is the lie?) ✨🎂
