Human Pose Estimation is a computer vision-based technology that identifies and classifies specific points on the human body. These points represent our limbs and joints, which makes it possible to calculate the angle of flexion and estimate, well, human pose.

It may sound odd, but knowing the right angle of a joint in a specific exercise is the basis of work for physiotherapists, fitness trainers, and artists. Giving a machine the same capability leads to surprisingly useful applications in different fields.

In this article we’ll explore human pose estimation in depth. We’ll look at how it works and what it can do in order to identify suitable business cases. We’ll also analyze different approaches to Human Pose Estimation as a machine learning technology and try to define the applications for each.

What is Human Pose Estimation?

Human Pose Estimation (HPE) is a computer vision task that focuses on identifying the position of a human body in a specific scene. Most HPE methods rely on RGB images recorded with an optical sensor (a camera) to detect body parts and the overall pose. HPE can be used in conjunction with other computer vision technologies for fitness and rehabilitation, augmented reality applications, and surveillance.

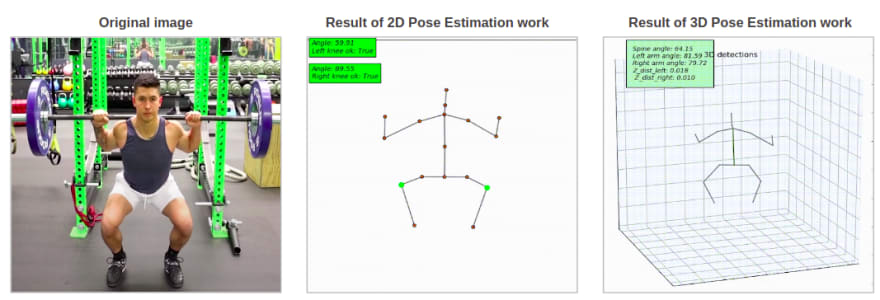

The essence of the technology lies in detecting points of interest on the limbs, joints, and even face of a human. These key points are used to produce a 2D or 3D representation of a human body model.

These models are essentially a map of the body joints we track during movement. This allows a computer not only to tell the difference between a person who is sitting and one who is squatting, but also to calculate the angle of flexion in a specific joint and tell whether the movement is performed correctly.
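
To make the joint-angle idea concrete, here is a minimal Python sketch (assuming NumPy) that computes the flexion angle at a joint from three keypoints, for example hip, knee, and ankle. The keypoint values are made up for illustration, and `joint_angle` is a hypothetical helper, not part of any particular HPE library.

```python
import numpy as np


def joint_angle(a, b, c):
    """Return the angle (degrees) at joint b between segments b->a and b->c.

    a, b, c are keypoints as (x, y) or (x, y, z), e.g. hip, knee, ankle.
    """
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    ba, bc = a - b, c - b
    cos_theta = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    # Clip to guard against floating-point values slightly outside [-1, 1]
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


# Hypothetical 2D keypoints in pixels: hip, knee, ankle
hip, knee, ankle = (300, 400), (320, 500), (310, 600)
print(f"Knee flexion angle: {joint_angle(hip, knee, ankle):.1f} degrees")
```

The same function works for 2D pixel coordinates and for 3D coordinates, since it only relies on vector dot products and norms.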

There are three common types of human models: skeleton-based, contour-based, and volume-based. The skeleton-based model is the most widely used in human pose estimation because of its flexibility: it consists of a set of joints, such as ankles, knees, shoulders, elbows, and wrists, together with the limb orientations that make up the skeletal structure of a human body.
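
As an illustration of the skeleton-based model, the sketch below represents a body as named joints plus the bones that connect them, and derives limb vectors (whose directions give limb orientation) from an array of keypoints. The 15-joint layout is an assumption chosen for readability; real detectors use their own layouts, such as 17 or 33 points.

```python
import numpy as np

# Illustrative joint layout (an assumption, not a standard format)
JOINTS = ["head", "neck", "l_shoulder", "r_shoulder", "l_elbow", "r_elbow",
          "l_wrist", "r_wrist", "pelvis", "l_hip", "r_hip", "l_knee", "r_knee",
          "l_ankle", "r_ankle"]
JOINT_INDEX = {name: i for i, name in enumerate(JOINTS)}

# Bones as (parent, child) pairs; together they form a tree rooted at the pelvis/neck
BONES = [("head", "neck"), ("neck", "l_shoulder"), ("neck", "r_shoulder"),
         ("l_shoulder", "l_elbow"), ("l_elbow", "l_wrist"),
         ("r_shoulder", "r_elbow"), ("r_elbow", "r_wrist"),
         ("neck", "pelvis"), ("pelvis", "l_hip"), ("pelvis", "r_hip"),
         ("l_hip", "l_knee"), ("l_knee", "l_ankle"),
         ("r_hip", "r_knee"), ("r_knee", "r_ankle")]


def bone_vectors(pose):
    """Map each (parent, child) bone to its vector child - parent.

    pose: array of shape (len(JOINTS), D) with D = 2 or 3 coordinates per joint.
    """
    pose = np.asarray(pose, dtype=float)
    return {(p, c): pose[JOINT_INDEX[c]] - pose[JOINT_INDEX[p]] for p, c in BONES}
```

Because the structure is just joints plus edges, the same definition serves both 2D and 3D keypoints.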

A skeleton-based model can be used for both 2D and 3D representation, and in practice 2D and 3D methods are generally used in conjunction. 3D human pose estimation gives the application more accurate measurements because it takes the depth coordinate into account in its calculations. Depth matters for the majority of movements, because the human body doesn’t move in a single 2D plane.
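
A quick numeric example shows why depth matters. The sketch assumes a simple orthographic projection (the 2D view just drops the z coordinate) and a forearm pointing partly toward the camera; the coordinates are invented. Measured in 2D, the elbow angle comes out at 45°, while the true 3D angle is about 72°.

```python
import numpy as np


def angle_at(a, b, c):
    """Angle (degrees) at joint b between segments b->a and b->c, for 2D or 3D points."""
    ba, bc = np.subtract(a, b), np.subtract(c, b)
    cos_theta = np.dot(ba, bc) / (np.linalg.norm(ba) * np.linalg.norm(bc))
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


# Invented 3D keypoints in meters; z is depth, negative values point toward the camera
shoulder = np.array([0.0, 0.0, 0.0])
elbow = np.array([0.0, -0.3, 0.0])
wrist = np.array([0.1, -0.2, -0.3])

angle_3d = angle_at(shoulder, elbow, wrist)
# Orthographic 2D view: simply drop the depth coordinate
angle_2d = angle_at(shoulder[:2], elbow[:2], wrist[:2])
print(f"3D elbow angle: {angle_3d:.1f} deg, 2D projected angle: {angle_2d:.1f} deg")
```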

So now let’s look at how 3D human pose estimation works from a technical perspective and what such systems are currently capable of.

How 3D Human Pose Estimation Works

The overall flow of a body pose estimation system starts with capturing the initial data and uploading it for the system to process. Since we’re dealing with motion detection, we need to analyze a sequence of images rather than a still photo: the goal is to extract how the key points change over the course of the movement.
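
Once per-frame keypoints are stacked into a single array of shape (frames, joints, 2), tracking how they change is simple array arithmetic. This minimal NumPy sketch, with a hypothetical helper name, turns frame-to-frame displacement into per-joint speed in pixels per second.

```python
import numpy as np


def keypoint_speeds(frames_kp, fps):
    """Per-joint speed between consecutive frames.

    frames_kp: array of shape (T, J, 2) with (x, y) pixel coordinates of J keypoints in T frames.
    Returns an array of shape (T - 1, J) in pixels per second.
    """
    kp = np.asarray(frames_kp, dtype=float)
    displacement = np.diff(kp, axis=0)  # (T - 1, J, 2): motion from one frame to the next
    return np.linalg.norm(displacement, axis=-1) * fps
```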

Once the footage is uploaded, the HPE system detects and tracks the key points required for analysis. In a nutshell, different software modules are responsible for tracking 2D keypoints, creating a body representation, and converting it into 3D space. So, generally, when we speak about creating a body pose estimation model, we mean implementing two different modules: one for the 2D plane and one for 3D space.

So for the majority of human pose estimation tasks, the flow will be broken into two parts:

- Detecting and extracting 2D key points from the sequence of images. These are expressed as horizontal and vertical coordinates that together build up a skeleton structure.

- Converting the 2D key points into 3D by adding the depth dimension.
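
The two-stage flow can be sketched as a loop that runs a 2D detector on each frame and then lifts the result to 3D. `detect_2d` and `lift_to_3d` are placeholders for whatever models you plug in (the dummy versions below only exist so the sketch runs), and the 17-joint output is an assumption.

```python
from typing import Callable, Iterable, List

import numpy as np

# Hypothetical stage signatures: image -> (J, 2) keypoints, then (J, 2) -> (J, 3)
Detector2D = Callable[[np.ndarray], np.ndarray]
Lifter3D = Callable[[np.ndarray], np.ndarray]


def estimate_poses(frames: Iterable[np.ndarray], detect_2d: Detector2D,
                   lift_to_3d: Lifter3D) -> np.ndarray:
    poses_3d: List[np.ndarray] = []
    for frame in frames:
        kp2d = detect_2d(frame)   # stage 1: (J, 2) horizontal/vertical pixel coordinates
        kp3d = lift_to_3d(kp2d)   # stage 2: (J, 3) with the depth dimension added
        poses_3d.append(kp3d)
    return np.stack(poses_3d)     # (T, J, 3) for T frames


# Dummy stand-ins so the sketch runs end to end; replace them with real models
def dummy_detector(frame: np.ndarray) -> np.ndarray:
    return np.zeros((17, 2))


def dummy_lifter(kp2d: np.ndarray) -> np.ndarray:
    return np.concatenate([kp2d, np.zeros((kp2d.shape[0], 1))], axis=1)
```

This per-frame structure matches lifters that work on a single pose. Temporal lifters such as VideoPose3D instead consume a window of consecutive 2D poses, so in that case you would buffer `kp2d` across frames before lifting.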

During this process, the application makes the calculations required for pose estimation, such as joint angles.

Estimating human pose during exercise is just one example, taken from the fitness industry. Some models can also detect keypoints on the human face and track head position, which can be used in entertainment applications like Snapchat masks. But we’ll discuss the use cases of HPE later in the article.

You can check out this demo to see how it works in a nutshell: just upload a short video of a movement and wait for it to be processed to see the pose analysis.

3D POSE ESTIMATION PERFORMANCE AND ACCURACY

Depending on the chosen algorithm, an HPE system will deliver different performance and accuracy. Let’s see how these trade off in our experiment with two of the most popular human pose estimation models: VideoPose3D and BlazePose.

We tested the BlazePose and VideoPose3D models on the same hardware using a 5-second video with 2160×3840 resolution at 60 frames per second. VideoPose3D took a total of 8 minutes to process the video and produced good accuracy. In contrast, BlazePose reached a processing speed of 3-4 frames per second, which allows it to be used in real-time applications. However, its accuracy, shown below, didn’t meet the requirements of any HPE task.
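
To put these figures in perspective, a bit of arithmetic on the test clip helps. The sketch below only reuses the numbers stated above (5 seconds at 60 FPS, 8 minutes for VideoPose3D, 3-4 FPS for BlazePose).

```python
# Only the figures stated in the text are used here
clip_seconds = 5
clip_fps = 60
num_frames = clip_seconds * clip_fps  # 300 frames to process

videopose3d_total_seconds = 8 * 60  # 8 minutes end to end
videopose3d_fps = num_frames / videopose3d_total_seconds

print(f"VideoPose3D: {videopose3d_fps:.3f} frames processed per second")
for blazepose_fps in (3, 4):
    seconds_needed = num_frames / blazepose_fps
    print(f"BlazePose at {blazepose_fps} FPS: {seconds_needed:.0f} s for the whole clip")
```

In other words, VideoPose3D processed about 0.6 frames per second, so BlazePose was roughly 5 to 6 times faster on the same 300 frames, needing about 75 to 100 seconds.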

Processing time depends on movement complexity, video and lighting quality, and the 2D pose detector module. Although BlazePose and VideoPose3D use different 2D detectors, this stage appears to be the performance bottleneck in both cases.
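
To locate a bottleneck like this one, it helps to time each pipeline stage separately rather than only the end-to-end run. Here is a minimal, framework-agnostic sketch using `time.perf_counter`; the stage names and functions you pass in are hypothetical placeholders.

```python
import time
from collections import defaultdict


def profile_stages(frames, stages):
    """Average wall-clock time per frame (milliseconds) for each pipeline stage.

    stages: ordered list of (name, fn) pairs; each fn receives the previous stage's output.
    """
    totals = defaultdict(float)
    num_frames = 0
    for frame in frames:
        data = frame
        for name, fn in stages:
            start = time.perf_counter()
            data = fn(data)
            totals[name] += time.perf_counter() - start
        num_frames += 1
    if num_frames == 0:
        raise ValueError("no frames to profile")
    return {name: 1000.0 * totals[name] / num_frames for name, _ in stages}
```

For example, `profile_stages(frames, [("2d", detect_2d), ("3d", lift_to_3d)])`, with your own (here hypothetical) detector and lifter, returns the average milliseconds each stage spends per frame.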

One possible way to optimize HPE performance is to accelerate 2D keypoint detection. Existing 2D detectors can also be modified or extended with post-processing stages to improve overall accuracy.
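
As one example of such a post-processing stage, an exponential moving average over consecutive frames reduces keypoint jitter from a 2D detector. This is a swapped-in, deliberately simple technique chosen for illustration, not the approach used in our experiment, and the `alpha` value is an assumption to tune.

```python
import numpy as np


def smooth_keypoints(frames_kp, alpha=0.5):
    """Causal exponential moving average over a (T, J, D) keypoint sequence.

    alpha in (0, 1]: higher values follow new detections more closely, lower values smooth more.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    kp = np.asarray(frames_kp, dtype=float)
    if len(kp) == 0:
        return kp
    smoothed = np.empty_like(kp)
    smoothed[0] = kp[0]
    for t in range(1, len(kp)):
        # Blend the new detection with the previous smoothed estimate
        smoothed[t] = alpha * kp[t] + (1.0 - alpha) * smoothed[t - 1]
    return smoothed
```

The trade-off is lag: a lower `alpha` gives smoother output but reacts more slowly to fast movements, so an adaptive filter may be a better fit for quick exercises.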

Real-time 3D human pose estimation

Whether we’re dealing with a fitness app, an app for rehabilitation, face masks, or surveillance, real-time processing is often a hard requirement. Of course, the performance of the model depends on the chosen algorithm and hardware, but the majority of existing open-source models have quite long response times, and those that respond quickly tend to sacrifice accuracy. So is it possible to improve existing 3D human pose estimation models to achieve acceptable accuracy with real-time processing?

While models like BlazePose can provide real-time processing, their tracking accuracy is not suitable for commercial use or complex tasks. In our experiment, we combined the 2D component of BlazePose with a modified 3D-pose-baseline model, implemented in Python.
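
For readers unfamiliar with 3D-pose-baseline, its core idea is a fully connected network with residual blocks that maps a flattened vector of 2D joints to 3D joints. The sketch below is an untrained, inference-only NumPy approximation of that idea, with batch normalization and dropout left out and random weights. It is not our modified model, and the joint count and layer sizes are assumptions.

```python
import numpy as np


class BaselineLifter:
    """Untrained residual MLP that lifts 2D keypoints to 3D (illustrative sketch only)."""

    def __init__(self, num_joints=17, hidden=1024, num_blocks=2, seed=0):
        rng = np.random.default_rng(seed)

        def layer(n_in, n_out):
            # Random He-style init; real weights would come from training on 2D/3D pairs
            return rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_out)), np.zeros(n_out)

        self.num_joints = num_joints
        self.inp = layer(2 * num_joints, hidden)
        self.blocks = [(layer(hidden, hidden), layer(hidden, hidden)) for _ in range(num_blocks)]
        self.out = layer(hidden, 3 * num_joints)

    @staticmethod
    def _linear(x, params):
        w, b = params
        return x @ w + b

    def __call__(self, kp2d):
        """kp2d: (J, 2) or (N, J, 2) normalized 2D keypoints -> (J, 3) or (N, J, 3)."""
        kp2d = np.asarray(kp2d, dtype=float)
        batch_shape = kp2d.shape[:-2]
        x = kp2d.reshape(-1, 2 * self.num_joints)      # flatten joints into one vector
        h = np.maximum(0.0, self._linear(x, self.inp))
        for first, second in self.blocks:
            r = np.maximum(0.0, self._linear(h, first))
            r = np.maximum(0.0, self._linear(r, second))
            h = h + r                                   # residual connection
        y = self._linear(h, self.out)
        return y.reshape(*batch_shape, self.num_joints, 3)
```

Since 2D detectors differ in their joint layouts, `num_joints` (and the joint ordering) must match the 2D keypoints you feed in; the weights would come from training on paired 2D/3D data such as Human3.6M.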

In terms of speed, our model achieves about 46 FPS on the hardware mentioned above (excluding video rendering), while the 2D pose detection model alone produces keypoints at about 50 FPS. By comparison, the modified 3D baseline model can produce keypoints at about 780 FPS. A detailed breakdown of our approach’s processing time is presented below.
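
The reported numbers fit a simple model of two stages running one after another: per-frame times add up, so the combined throughput is the reciprocal of the summed per-stage times. This sketch plugs in the 50 FPS and 780 FPS figures from above.

```python
def sequential_fps(*stage_fps):
    """Throughput of stages that process each frame one after another."""
    seconds_per_frame = sum(1.0 / fps for fps in stage_fps)
    return 1.0 / seconds_per_frame


fps_2d, fps_3d = 50.0, 780.0
combined = sequential_fps(fps_2d, fps_3d)
share_2d = (1.0 / fps_2d) / (1.0 / fps_2d + 1.0 / fps_3d)
print(f"Combined: {combined:.1f} FPS")
print(f"2D detector share of per-frame time: {share_2d:.0%}")
```

This yields roughly 47 FPS, close to the measured 46 FPS, and shows that the 2D detector accounts for about 94% of each frame's processing time, which is consistent with it being the bottleneck.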

While this approach doesn’t guarantee reliability in complex scenarios with dim lighting or unusual poses, standard videos can be processed in real time. Generally, though, the accuracy of model predictions depends on the training and the chosen architecture. Now that we understand the real capabilities of human pose estimation, we can analyze some common business applications and general use cases for this technology.

Human pose estimation use cases

HPE can be considered a fairly mature technology, since groundwork already exists in application areas like fitness, rehabilitation, augmented reality, animation, gaming, robotics, and even surveillance. So now let’s talk about the existing use cases.

AI FITNESS AND SELF-COACHING

Fitness applications and AI-driven coaches are some of the most obvious use cases for body pose estimation. A model implemented in a phone app can use the device’s camera as a sensor to record someone doing an exercise and analyze it.

By tracking the movement of the body, the app can split an exercise into eccentric and concentric phases to analyze the different angles of flexion and the overall posture. This is done by tracking the keypoints and presenting analytics as hints or graphs. The analysis can run in real time or after some delay, giving the user insight into their major movement patterns and body mechanics.
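
As a rough illustration, phase detection and rep counting can be derived from a per-frame knee-angle series (computed from hip, knee, and ankle keypoints). The sketch assumes a squat, where a decreasing knee angle means lowering (eccentric) and an increasing angle means rising (concentric); the thresholds and function names are hypothetical and would need tuning per exercise.

```python
import numpy as np


def squat_phases(knee_angles, min_delta=1.0):
    """Label each frame transition of a knee-angle series (degrees) as a movement phase.

    A shrinking angle is 'eccentric' (lowering), a growing one 'concentric' (rising);
    changes smaller than min_delta degrees count as 'hold'.
    """
    deltas = np.diff(np.asarray(knee_angles, dtype=float))
    labels = np.where(deltas < -min_delta, "eccentric",
                      np.where(deltas > min_delta, "concentric", "hold"))
    return labels.tolist()


def count_reps(knee_angles, bottom_threshold=100.0, top_threshold=160.0):
    """Count one rep each time the knee bends below bottom_threshold and then extends above top_threshold."""
    reps, reached_bottom = 0, False
    for angle in knee_angles:
        if angle < bottom_threshold:
            reached_bottom = True
        elif angle > top_threshold and reached_bottom:
            reps += 1
            reached_bottom = False
    return reps
```

For example, the series `[170, 150, 120, 95, 95, 120, 150, 170]` is labeled as three eccentric steps, one hold, and three concentric steps, and counts as one rep.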

REHABILITATION AND PHYSIOTHERAPY

Physiotherapy is another human activity tracking use case with similar rules of application. In the era of telemedicine, in-home consultations have become much more flexible and diverse, and AI technologies have enabled more sophisticated forms of online treatment.

The analysis of rehab activities applies concepts similar to those in fitness applications, except that the accuracy requirements are stricter. Since we’re dealing with recovery from injury, these applications fall into the healthcare category, which means they have to meet healthcare industry standards and the data protection laws of the country in question.

AUGMENTED REALITY

Augmented reality applications like virtual fitting rooms can benefit from human pose estimation as one of the most advanced methods of detecting and recognizing the position of a human body in space. This is useful in ecommerce, where shoppers can’t try clothes on before buying.

Human pose estimation can track key points on the human body and pass this data to the augmented reality engine, which fits the clothes onto the user. This can be applied to any body part and type of clothing, or even to face masks. We’ve described our experience of using human pose estimation for virtual fitting rooms in a dedicated article.
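
To show what passing key points to the AR engine can mean in the simplest 2D case, this sketch derives an anchor point, scale, and rotation for a garment overlay from the two shoulder keypoints. It is a hypothetical helper, not the API of any AR engine; a production try-on system would likely use more keypoints and body shape information.

```python
import numpy as np


def garment_overlay_params(left_shoulder_px, right_shoulder_px, garment_shoulder_width_px):
    """Anchor point, uniform scale, and in-plane rotation for a 2D garment overlay.

    left_shoulder_px / right_shoulder_px: shoulder keypoints as they appear on the image's
    left and right sides (not anatomical left/right), in pixels.
    garment_shoulder_width_px: shoulder-to-shoulder width measured on the garment image.
    """
    left = np.asarray(left_shoulder_px, dtype=float)
    right = np.asarray(right_shoulder_px, dtype=float)
    shoulder_vec = right - left
    anchor = (left + right) / 2.0  # reference point midway between the shoulders
    scale = np.linalg.norm(shoulder_vec) / garment_shoulder_width_px
    # Image coordinates: y grows downward, so positive angles tilt the right side down
    rotation_deg = float(np.degrees(np.arctan2(shoulder_vec[1], shoulder_vec[0])))
    return anchor, float(scale), rotation_deg
```

For example, shoulders at (100, 200) and (300, 200) with a garment whose shoulders are 100 px apart give an anchor of (200, 200), a scale of 2.0, and no rotation.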

ANIMATION AND GAMING

Game development is a tough industry with a lot of complex tasks that require knowledge of human body mechanics. Body pose estimation is widely used to simplify the animation of game characters by transferring tracked key points in a given position to the animated model.

The process resembles the motion capture technology used in video production, but doesn’t require a large number of sensors placed on the performer. Instead, multiple cameras can be used to detect the motion pattern and recognize it automatically. The captured data can then be transformed and transferred to the actual 3D model in the game engine.
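
When several cameras are used, the 2D keypoints from each view can be combined into a 3D position by triangulation. The sketch below shows the standard linear (DLT) triangulation of a single keypoint with NumPy, assuming the cameras are already calibrated, i.e. their 3x4 projection matrices are known. It illustrates the technique and is not a specific game-engine or motion capture API.

```python
import numpy as np


def triangulate_point(projection_matrices, points_2d):
    """Linear (DLT) triangulation of one keypoint seen by several calibrated cameras.

    projection_matrices: list of 3x4 camera matrices P = K [R | t]
    points_2d: matching (u, v) pixel coordinates, one per camera
    Returns the 3D point that minimizes the algebraic error.
    """
    rows = []
    for P, (u, v) in zip(projection_matrices, points_2d):
        P = np.asarray(P, dtype=float)
        # Each view contributes two linear constraints on the homogeneous point X
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Running it for every joint in every frame produces a 3D skeleton that can then be transferred onto the character model.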

SURVEILLANCE AND HUMAN ACTIVITY ANALYSIS

Not every surveillance use case is about spotting a crime in a crowd of people. Cameras can also be used to automate everyday processes like shopping at a grocery store.

Cashierless store systems like Amazon Go, for example, apply human pose estimation to understand whether a person took an item from a shelf. HPE is used in combination with other computer vision technologies, which allows Amazon to automate checkout in its stores using a network of camera sensors, IoT devices, and

Human pose estimation handles the part of the process where the actual point of contact with the product is not visible to the camera. Here, the HPE model analyzes the position of customers’ hands and heads to understand whether they took the product from the shelf or left it in place.
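
A heavily simplified version of the hand-position check might look like the sketch below: it flags intervals where a tracked wrist keypoint stays inside a shelf region for several consecutive frames. This is a hypothetical heuristic for illustration only; it does not describe how Amazon Go or any specific product actually works, and the region and frame threshold are assumptions.

```python
def detect_reach_events(wrist_track, shelf_box, min_frames=3):
    """Find intervals where a wrist keypoint stays inside a shelf region.

    wrist_track: per-frame (x, y) wrist positions, or None when the wrist was not detected
    shelf_box: (x_min, y_min, x_max, y_max) region in image coordinates
    Returns a list of (start_frame, end_frame) intervals, end inclusive.
    """
    x_min, y_min, x_max, y_max = shelf_box
    events, start = [], None
    for i, point in enumerate(wrist_track):
        inside = (point is not None
                  and x_min <= point[0] <= x_max
                  and y_min <= point[1] <= y_max)
        if inside and start is None:
            start = i  # wrist just entered the shelf region
        elif not inside and start is not None:
            if i - start >= min_frames:  # ignore brief, likely spurious entries
                events.append((start, i - 1))
            start = None
    # Close an interval that lasts until the final frame
    if start is not None and len(wrist_track) - start >= min_frames:
        events.append((start, len(wrist_track) - 1))
    return events
```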

How to train a human pose estimation model?

Human pose estimation is a machine learning technology, which means you’ll need data to train it. Because human pose estimation handles the quite difficult task of detecting and recognizing multiple objects in a frame, it uses neural networks as its engine. Training a neural network requires enormous amounts of data, so the most practical way is to use available datasets like the following ones:

The majority of these datasets are suitable for fitness and rehab applications with human pose estimation. But this doesn’t guarantee high accuracy for more unusual movements or for specific tasks like surveillance or multi-person pose estimation.

For other cases, data collection is unavoidable, since a neural network requires quality samples to provide accurate object detection and tracking. Here, experienced data science and machine learning teams can help: they can advise on how to gather data and handle the actual development of the model.

HOW TO AVOID TRAINING A HUMAN POSE ESTIMATION MODEL FROM SCRATCH?

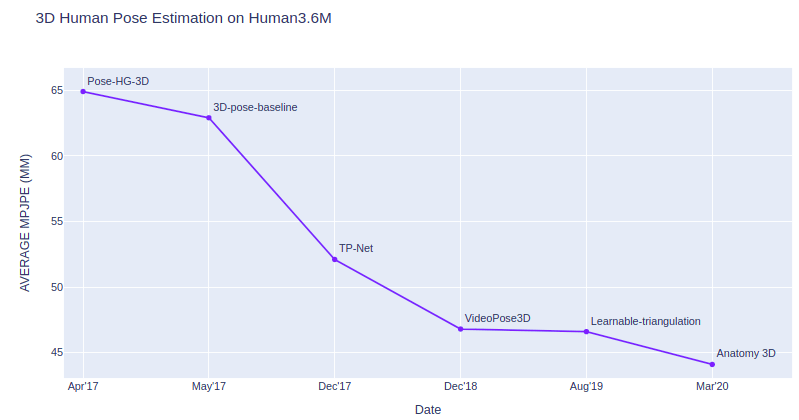

New human pose estimation models are appearing rapidly, as the field is active and evolving. This gives us options in terms of pretrained models tailored to different tasks. To analyze existing approaches and models, we used Human3.6M as the evaluation dataset.

The evaluation metric is MPJPE (Mean Per Joint Position Error): the Euclidean distance between predicted and ground-truth joint positions, averaged over all joints and measured in millimeters. In other words, this metric shows how accurately each model locates joints across a sequence, and lower is better. The graph represents the analysis of several open-source models trained for human pose estimation tasks.
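
For reference, here is a small NumPy implementation of MPJPE as described above. The optional root alignment (subtracting a root joint such as the pelvis from both poses) reflects a common evaluation practice on Human3.6M, but whether and how to align is an assumption you should match to the benchmark protocol you compare against.

```python
import numpy as np


def mpjpe(predicted, target, root_index=None):
    """Mean Per Joint Position Error.

    Euclidean distance between predicted and ground-truth joints, averaged over all
    joints (and frames, if present). Units follow the inputs, e.g. millimeters.
    predicted, target: arrays of shape (..., J, 3)
    root_index: if given, both poses are first expressed relative to that joint.
    """
    predicted = np.asarray(predicted, dtype=float)
    target = np.asarray(target, dtype=float)
    if predicted.shape != target.shape:
        raise ValueError("predicted and target must have the same shape")
    if root_index is not None:
        predicted = predicted - predicted[..., root_index:root_index + 1, :]
        target = target - target[..., root_index:root_index + 1, :]
    return float(np.mean(np.linalg.norm(predicted - target, axis=-1)))
```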

From our experiment with such models, we can conclude that some of them can be modified to run in real time at a comparably high FPS. The performance of a model depends largely on its 2D detector module, which allows us to implement a high-performance model for most business cases, including mobile applications.
