Performance is the heartbeat of any JavaScript application and has a profound impact on user experience and overall success. It's not just about speed: it's about responsiveness, fluidity, and efficiency. A well-performing JS app loads faster, provides smoother interactions, and keeps its user base more engaged. Users expect a seamless experience, and optimizing performance ensures that your app meets those expectations. Improved performance can also lead to better SEO rankings, higher conversion rates, and increased user retention. It's a cornerstone of user satisfaction, which makes it imperative to prioritize performance and keep optimizing it in your JavaScript applications.

Let's get to the problems!

Problem #1 - Combination of Array Functions

I've reviewed numerous pull requests (PRs) in my career, and there is one issue I've seen many times. It happens when someone combines .map with .filter or .reduce, in any order, like this:

arr.map(e => f(e)).filter(e => test(e)).reduce(...)

When you combine these methods, each one goes through all of the elements again, and .map and .filter each build a new intermediate array. For a small amount of data it doesn't really make any difference, but as the array grows, these extra passes take longer.

The easy solution

Use only the reduce method. reduce is the ultimate solution when you need mapping and filtering at the same time: it goes through the array only once and does both operations in the same pass, saving iterations and the intermediate array.

For example, this:

arr.map(e => f(e)).filter(e => test(e))

will transform into:

arr.reduce((result, element) => {
  const mappedElement = f(element)
  if (test(mappedElement)) result.push(mappedElement)
  return result
}, [])
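A runnable sketch of the transformation above. The f (doubling) and test (keep values greater than 4) callbacks are hypothetical stand-ins for your own functions; the sketch builds the result both ways so you can confirm that the single-pass reduce matches the chained version.

```javascript
// Hypothetical stand-ins for the f and test callbacks used above.
const f = (e) => e * 2;
const test = (e) => e > 4;

const arr = [1, 2, 3, 4, 5];

// Chained version: map builds an intermediate array, then filter walks it again.
const chained = arr.map((e) => f(e)).filter((e) => test(e));

// Single-pass version: map and filter inside one reduce callback.
const singlePass = arr.reduce((result, element) => {
  const mappedElement = f(element);
  if (test(mappedElement)) result.push(mappedElement);
  return result;
}, []);

console.log(chained);    // [ 6, 8, 10 ]
console.log(singlePass); // [ 6, 8, 10 ]
```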

Problem #2 - Useless Reference Update

This problem shows up if you're using React.js or any other library where immutability matters for reactivity and re-renders. Creating a new reference by spreading is a common way to update a component's state or props:

...
const [arrState, setArrayState] = useState()
...
setArrayState([...newArrayState])
...

However, spreading the result of .map, .filter, and similar methods into another array to get a new reference is useless: these methods already return a new array, so the spread just copies that fresh array into yet another one:

...
const [arrState, setArrayState] = useState()
...
setArrayState([...arrState.map(e => f(e))])
...

The solution

Just remove the redundant spread operator when you use any of these methods, because each one already returns a new array:

- .map
- .filter
- .reduce (with a new array as the initial accumulator)
- .reduceRight (with a new array as the initial accumulator)
- .flat
- .flatMap
- .concat
- .toReversed
- .toSorted
- .toSpliced
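A small check, outside React, that the listed methods already return a fresh array, so wrapping them in [...] only adds another copy. The arrState name mirrors the snippet above and f is a hypothetical mapping function. toReversed, toSorted, and toSpliced (ES2023) behave the same way but are left out so the sketch also runs on older runtimes.

```javascript
const arrState = [3, 1, 2];
const f = (e) => e * 10; // hypothetical mapping function

// Each call below returns a new array; none of them returns arrState itself.
const results = {
  map: arrState.map((e) => f(e)),
  filter: arrState.filter((e) => e > 1),
  reduce: arrState.reduce((acc, e) => { acc.push(e); return acc; }, []),
  reduceRight: arrState.reduceRight((acc, e) => { acc.push(e); return acc; }, []),
  flat: arrState.flat(),
  flatMap: arrState.flatMap((e) => [e]),
  concat: arrState.concat(),
};

for (const [name, value] of Object.entries(results)) {
  console.log(name, value !== arrState); // true for every method
}

// So this extra copy:
const redundant = [...arrState.map((e) => f(e))];
// can simply be:
const enough = arrState.map((e) => f(e));
// In the React snippet above: setArrayState(arrState.map(e => f(e)))
```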

Feel free to share other JS antipatterns in the comments; I'll add them all to the post!

Be sure to follow my dev.to blog and Telegram channel; there will be even more content soon.

Comments

Whatever the number of loops, you'll do exactly the same operations!

This code:

Is almost exactly the same as

Which again is the same as (assuming order of operations doesn't matter):

The only thing you're saving is a counter. It's peanuts.

While doing more operations in one loop is gonna save you incrementing a counter multiple times, you'll still have O(n) complexity where n is the size of your array, so you will most likely not save any measurable amount of resources at all.
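To illustrate this point, the sketch below counts how often the callbacks run in three versions of the same pipeline: chained map/filter, a single reduce, and a single for...of loop. The f and test callbacks and the calls counter are hypothetical instrumentation. Every version calls f and test once per element, so the per-element work is identical; the chained version mainly adds a second loop and an intermediate array.

```javascript
// Hypothetical instrumentation: count every call to f and test.
let calls = 0;
const f = (e) => { calls++; return e * 2; };
const test = (e) => { calls++; return e % 3 === 0; };

const arr = Array.from({ length: 1000 }, (_, i) => i);

function countCalls(run) {
  calls = 0;
  const result = run();
  return { result, calls };
}

// 1. Chained: two loops, one intermediate array.
const chained = countCalls(() => arr.map(f).filter(test));

// 2. Single reduce: one loop, both callbacks per element.
const reduced = countCalls(() =>
  arr.reduce((result, element) => {
    const mapped = f(element);
    if (test(mapped)) result.push(mapped);
    return result;
  }, [])
);

// 3. Single for...of loop doing the same work.
const looped = countCalls(() => {
  const result = [];
  for (const element of arr) {
    const mapped = f(element);
    if (test(mapped)) result.push(mapped);
  }
  return result;
});

console.log(chained.calls, reduced.calls, looped.calls); // 2000 2000 2000
```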

Sometimes it is clearer to write map then filter just for readability, because unfortunately not everyone understands what reduce does.

Code that cannot be read fluently by the whole team is in my experience way more costly than a few sub-optimal parts in an algorithm that takes nanoseconds to complete.

You should study the subject of algorithmic complexity if you don't know much about this concept.

The code you start off with is readable, but as you optimize it, it becomes less readable since you're fusing many operations into a single loop. Consider using a lazy-collections or streaming library, which applies these loop-fusion optimizations for you, so you don't have to worry about intermediate collections being generated and can keep your focus on more readable, single-purpose code. Libraries like lazy.js and Effect.ts help you do this.
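Rather than guess at the lazy.js or Effect.ts APIs, here is a library-free sketch of the same idea built on generator functions. lazyMap, lazyFilter, and take are hypothetical helper names, not part of either library. Each element flows through the whole pipeline before the next one is read, so no intermediate arrays are built, and take stops the work early.

```javascript
// Hypothetical lazy helpers built on generators (not the lazy.js or Effect.ts API).
function* lazyMap(iterable, fn) {
  for (const item of iterable) yield fn(item);
}

function* lazyFilter(iterable, predicate) {
  for (const item of iterable) {
    if (predicate(item)) yield item;
  }
}

function* take(iterable, count) {
  if (count <= 0) return;
  let taken = 0;
  for (const item of iterable) {
    yield item;
    taken++;
    if (taken >= count) return; // closes the upstream generators too
  }
}

// Readable single-purpose steps, executed in one fused pass.
let mapCalls = 0;
const arr = Array.from({ length: 1_000_000 }, (_, i) => i);
const doubled = lazyMap(arr, (e) => { mapCalls++; return e * 2; });
const divisibleByThree = lazyFilter(doubled, (e) => e % 3 === 0);
const firstThree = [...take(divisibleByThree, 3)];

console.log(firstThree); // [ 0, 6, 12 ]
console.log(mapCalls);   // 7: only the elements actually needed were mapped
```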

I don’t believe this to be accurate.

For starters, just because you're looping once doesn't mean the total number of operations changed. In your reduce function, you have two operations per iteration. In the chained methods there are two loops with one operation per iteration each.

In both cases the time complexity is O(2N), which is O(N). That means their performance will be the same except for the very minor overhead of having two array methods instead of one.

Even if we assume you managed to reduce the number of operations, you can't reduce it by a full order of magnitude. At best (if at all possible) you can make your optimization O(N) while the chained version is O(xN), and for any constant x the difference will be quite minimal and only observable in extreme cases.

This is just a theoretical analysis of the proposal here. I haven't done any benchmarks, but my educated guess is that they will be in line with this logic.
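For anyone who wants to check this guess, here is a rough micro-benchmark sketch that times the chained version against a single reduce with performance.now(). The timeIt helper and the workload are assumptions, and this is not a rigorous methodology (one run, no warm-up control); results will vary by engine and machine, so no numbers are claimed here.

```javascript
// Rough timing helper (hypothetical); a real benchmark needs warm-up and many runs.
function timeIt(label, run) {
  const start = performance.now();
  const result = run();
  const ms = performance.now() - start;
  console.log(`${label}: ${ms.toFixed(2)} ms`);
  return { result, ms };
}

const size = 1_000_000;
const arr = Array.from({ length: size }, (_, i) => i);
const f = (e) => e * 2;
const test = (e) => e % 3 === 0;

const chained = timeIt("map + filter", () => arr.map(f).filter(test));
const fused = timeIt("reduce", () =>
  arr.reduce((result, element) => {
    const mapped = f(element);
    if (test(mapped)) result.push(mapped);
    return result;
  }, [])
);

// Both versions must produce the same output for the comparison to mean anything.
console.log(chained.result.length === fused.result.length);
```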

I made a very similar comment; it pleases me to see it here already :)

I'm baffled by the number of people who seem to think that if they cram more operations into one loop they are somehow gonna reduce overall complexity in any meaningful way... But no: it'll still be O(n) where n is the length of the array.

Many people don't understand what "reduce" does, so in a team it can make more sense to use a map and a filter, which are extremely straightforward to understand and carry no performance penalty.

I love obscure one-liners as much as any TypeScript geek with a love for functional programming, but I know I have to moderate myself when working within a team.

Shit, is big O complexity still studied in computer science?

Compare reduce with for...of, and you will realize that for...of performs faster than reduce. I would give reduce the least preference compared to for...of: filter, map, and reduce can all be done in a single for...of.

The choice between using array methods like map, filter, and reduce versus explicit loops often depends on your code style. While both approaches achieve similar results, the array methods offer clearer readability: map transforms each element into a new array, filter removes specific elements from an array, and reduce accumulates values. However, loops like for might require more effort to grasp, since they can perform a variety of tasks, making it necessary to spend time understanding the logic within the loop's body.

This gave me an idea for a library: a library that implements all the common array utils, but using the Builder pattern, so you can chain the util functions together and they will all run in a single pass.

Anyhow, great article, and thanks for the tip!
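The library idea in this comment can be sketched in a few lines. Pipeline is a hypothetical class, not an existing library: it records map and filter steps as you chain them and only applies them, in a single pass over the array, when run() is called.

```javascript
// Hypothetical builder: collects steps, then applies them all in one loop.
class Pipeline {
  constructor() {
    this.steps = [];
  }

  map(fn) {
    this.steps.push({ type: "map", fn });
    return this; // returning this enables chaining
  }

  filter(predicate) {
    this.steps.push({ type: "filter", fn: predicate });
    return this;
  }

  run(array) {
    const output = [];
    for (const item of array) {
      let value = item;
      let keep = true;
      for (const step of this.steps) {
        if (step.type === "map") {
          value = step.fn(value);
        } else if (!step.fn(value)) {
          keep = false;
          break; // skip the remaining steps for this element
        }
      }
      if (keep) output.push(value);
    }
    return output;
  }
}

const result = new Pipeline()
  .map((e) => e * 2)
  .filter((e) => e > 4)
  .map((e) => e + 1)
  .run([1, 2, 3, 4, 5]);

console.log(result); // [ 7, 9, 11 ]
```

Because each step returns this, the chain reads like ordinary map/filter code, while run() makes one pass and drops an element as soon as a filter rejects it. Since run() takes the array as an argument, the same pipeline can be reused on different inputs.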

Great tips, great article, thanks!

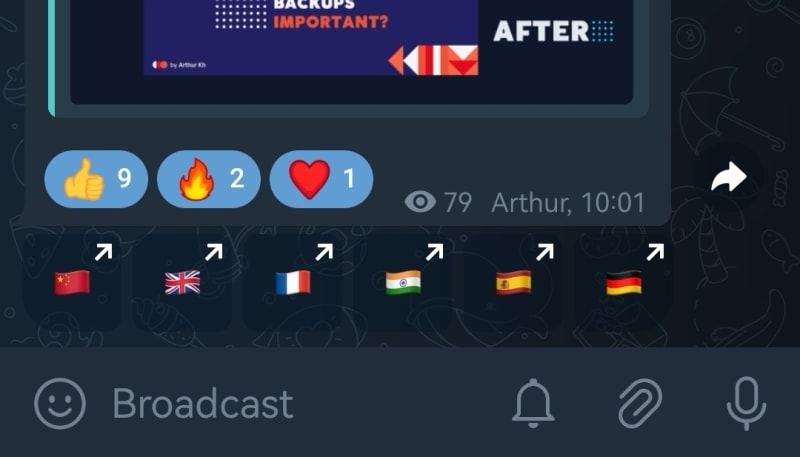

Your Telegram channel is in Russian, so unfortunately I can't understand it.

Hey!

I've developed a bot that automatically translates my posts into popular languages so anyone can read them.

It's sometimes hard to choose which language to use when you're trilingual :D

Hey! Great tips! There are other methods I like too, such as .trim() and .some(). I completely agree with the Promise.then point: async/await syntax is fantastic and widely compatible across browsers and Node environments, so it should be prioritized more.

When it comes to naming and organizing components, I'm a fan of atomic design. Its structure is exceptional, clear, and easily shareable within a team.
