AR/VR/XR Highlights from Apple's WWDC Developer Conference 2023

It's been six years since ARKit's debut at WWDC 2017, and we've seen big milestones since then, but none come close to what was announced at WWDC 2023 this week.

From a full AR/VR simulator, to Apple Vision Pro, to full skeletal hand tracking, you'll find the AR/VR highlights from the main keynote listed below, as well as other things hiding in Apple's beta API references. This post won't go into much technical detail on how to implement these new features and frameworks; for that, you'll have to follow me on Twitter.

Although many of the APIs are not yet public (expected later in June 2023), we'll cover an outline of the main new features coming our way soon.

ARKit

Starting with the core of AR at Apple, there seems to be only one new feature available for now, and only on the newly introduced OS.

Skeletal Hand Tracking

As well as tracking surfaces, images and bodies, ARKit will now have skeletal hand tracking, starting with visionOS. This seems to be made possible by a new device that has multiple cameras to better perceive depth.
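As a rough sketch of what reading a hand joint can look like: the type names here (`ARKitSession`, `HandTrackingProvider`, `HandAnchor`) are taken from the visionOS SDK as it later shipped, so treat them as assumptions relative to the beta discussed in this post. The `HandTracker` class itself is a hypothetical wrapper for illustration.

```swift
import ARKit

// Hypothetical wrapper; only meaningful on visionOS, inside an open Full Space,
// with an NSHandsTrackingUsageDescription entry in Info.plist.
final class HandTracker {
    // Keep the session alive for as long as updates are needed.
    private let session = ARKitSession()
    private let provider = HandTrackingProvider()

    func run() async {
        // Hand tracking is not available everywhere (for example, in the simulator).
        guard HandTrackingProvider.isSupported else { return }

        do {
            try await session.run([provider])
        } catch {
            print("Could not start hand tracking: \(error)")
            return
        }

        // Each update carries a HandAnchor for the left or right hand.
        for await update in provider.anchorUpdates {
            let hand = update.anchor
            guard hand.isTracked, let skeleton = hand.handSkeleton else { continue }

            let tip = skeleton.joint(.indexFingerTip)
            guard tip.isTracked else { continue }

            // Joint transforms are relative to the hand anchor, so compose
            // them with the anchor transform to get a world-space pose.
            let tipInWorld = hand.originFromAnchorTransform * tip.anchorFromJointTransform
            print(hand.chirality, tipInWorld.columns.3)
        }
    }
}
```

You would typically create one `HandTracker`, keep a reference to it, and call `run()` from a `Task` once the immersive space has opened.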

RealityKit

RealityKit is not known to be the fastest horse when it comes to rolling out features, especially compared to its older sister SceneKit. But this year there were some much-needed advancements, which were of course necessary for a certain piece of hardware.

RealityKit x SwiftUI

With the introduction of a whole new SwiftUI view, RealityView, RealityKit can now fully take advantage of SwiftUI layouts, and vice versa.

You can also add SwiftUI views into RealityKit in the form of attachments.

This lets us add content with sharp, readable text, including views with complex layouts and animations.
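A minimal sketch of a `RealityView` with one SwiftUI attachment follows. It uses the attachment API as it appears in the released visionOS SDK (`Attachment(id:)`), which may differ from the earliest betas; the view name, the `"label"` identifier, and the sphere are all made up for illustration.

```swift
import SwiftUI
import RealityKit

// Hypothetical example view: a sphere with a SwiftUI label floating above it.
struct LabeledSphereView: View {
    var body: some View {
        RealityView { content, attachments in
            // Regular RealityKit content.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            content.add(sphere)

            // The SwiftUI view declared below arrives here as an entity.
            if let label = attachments.entity(for: "label") {
                label.position = [0, 0.15, 0] // 15 cm above the sphere's centre
                sphere.addChild(label)
            }
        } update: { _, _ in
            // Nothing to update in this sketch.
        } attachments: {
            Attachment(id: "label") {
                Text("Hello, visionOS")
                    .font(.title)
                    .padding()
                    .glassBackgroundEffect()
            }
        }
    }
}
```

Because the attachment is an ordinary entity once it is inside the scene, it can be parented, positioned, and animated like any other RealityKit content.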

MaterialX

RealityKit is adopting the open standard MaterialX for surface and geometry shaders.

MaterialX is supported across a broad variety of tools. This means people already familiar with those tools can now write shaders for RealityKit, and those already using RealityKit now have more developer tools and countless shader examples at their disposal.

visionOS

Apple's exciting new operating system, visionOS, brings together many of the AR/VR concepts that RealityKit developers may be familiar with, in a stunning new headset dubbed "Vision Pro". While many of the

Fundamentals

There are a few key objects that you can create within Vision Pro's OS, visionOS:

- Windows, planes in space.

- Volumes, which can hold 3D objects, such as a game board.

- Full Spaces, which are fully immersive environments that the user cannot necessarily manipulate.
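The three building blocks above map onto SwiftUI scene types. Here is a small sketch, assuming the scene APIs from the released visionOS SDK; the app name, the scene identifiers, and the placeholder content are invented for this example.

```swift
import SwiftUI
import RealityKit

@main
struct SceneTypesApp: App {
    var body: some Scene {
        // A window: a 2D plane of SwiftUI content placed in space.
        WindowGroup(id: "window") {
            Text("A regular window")
                .padding()
        }

        // A volume: a bounded 3D region that can hold RealityKit content.
        WindowGroup(id: "volume") {
            RealityView { content in
                let box = ModelEntity(
                    mesh: .generateBox(size: 0.2),
                    materials: [SimpleMaterial(color: .orange, isMetallic: false)]
                )
                content.add(box)
            }
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // A Full Space: immersive content that is not confined to a window.
        ImmersiveSpace(id: "space") {
            RealityView { content in
                let sphere = ModelEntity(
                    mesh: .generateSphere(radius: 0.3),
                    materials: [SimpleMaterial(color: .green, isMetallic: false)]
                )
                sphere.position = [0, 1.5, -2] // roughly eye height, 2 m ahead
                content.add(sphere)
            }
        }
    }
}
```

From inside a view, the extra scenes can be opened through the `openWindow` and `openImmersiveSpace` environment actions, using the same identifiers.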

Apps

Existing iOS/iPadOS apps are supported as a scalable 2D window shown in 3D space on visionOS. The window keeps the app's original look and feel.

If you simply add visionOS as a build target for your application, it immediately gets those nice visionOS materials and a fully resizable window, and it highlights the user's eye focus, as in all visionOS applications.

On visionOS, SwiftUI or UIKit can be used to display content, RealityKit to render 3D content, and ARKit to understand the user's space. Apps designed specifically for visionOS can create a collection of windows, volumes, and spaces.

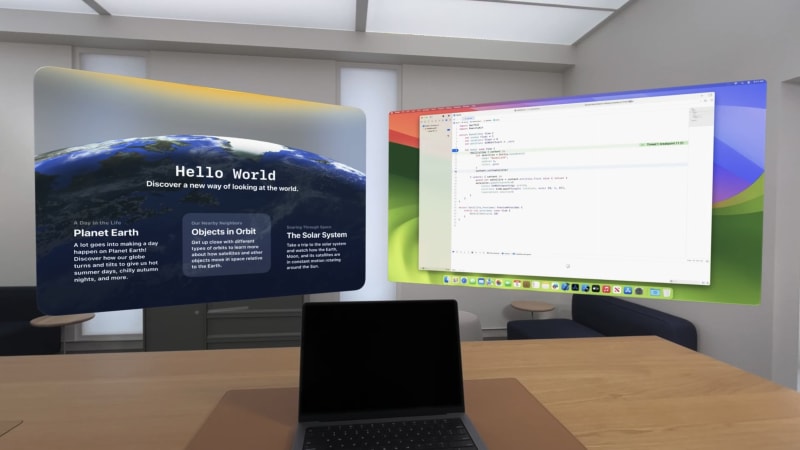

Simulator

The simulator is my favourite part of the developer tooling at WWDC 2023. It lets you move and look around the scene, and interact with the app by simulating the system gestures. There are several different built-in scenes, with both day and night conditions, to help you better understand how users will interact with your apps.

Mac Virtual Display

Simply by looking at your Mac through a Vision Pro, your Mac's screen pops out and a high-fidelity 4K monitor appears in its place. This enables a whole new end-to-end development experience, where you can code, test, and debug all in the same place.

Bluetooth keyboards and mice can be used for data input across visionOS, so of course you won't have to type on a virtual keyboard.

Reality Composer Pro

Using Reality Composer Pro, you can add and prepare 3D content for visionOS apps.

What's next?

You may have noticed some sections are a little bare; I'll be expanding on the content over the next week or so as more videos and software are released.

To stay tuned, drop a follow on dev.to as well as Twitter. I'm always posting about the latest advancements in AR/VR, and specifically those in the Swift ecosystem.
