This is a Plain English Papers summary of a research paper called AI Image Generation 52% Faster: New Method Dynamically Routes Processing Power Where Needed Most. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

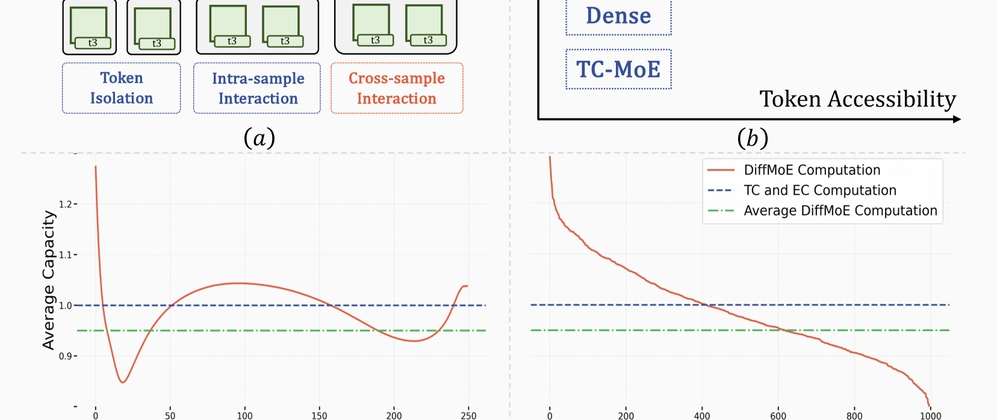

- DiffMoE introduces dynamic token selection for diffusion transformers

- Uses a mixture of experts (MoE) approach to increase model efficiency

- Reduces computational costs by up to 52% with minimal quality loss

- Achieves comparable or better results than dense models while using fewer resources

- Shows scaling benefits at larger model sizes (1B to 16B parameters)
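To make "dynamic token selection" with a mixture of experts more concrete, here is a minimal sketch of expert-choice routing, a generic MoE technique in which each expert picks its highest-scoring tokens from a shared pool. This is an assumption for illustration, not DiffMoE's published implementation: the function names `expert_choice_route` and `experts_per_token`, the affinity scores, and the `capacity` value are all hypothetical. The point it shows is that when experts choose tokens, some tokens receive several experts' worth of compute and others receive none.

```python
from collections import Counter


def expert_choice_route(affinity, capacity):
    """Each expert picks the `capacity` tokens it scores highest.

    affinity[t][e] is a router score for token t and expert e (assumed to come
    from some learned gating layer). Returns {expert_index: sorted token list}.
    """
    num_tokens = len(affinity)
    num_experts = len(affinity[0])
    assignment = {}
    for e in range(num_experts):
        # Rank every token in the pool by its score for this expert.
        ranked = sorted(range(num_tokens), key=lambda t: affinity[t][e], reverse=True)
        assignment[e] = sorted(ranked[:capacity])
    return assignment


def experts_per_token(assignment, num_tokens):
    """Count how many experts process each token (its share of compute)."""
    counts = Counter(t for tokens in assignment.values() for t in tokens)
    return [counts.get(t, 0) for t in range(num_tokens)]


# Hypothetical pool of 4 tokens (e.g. image patches) scored against 3 experts.
affinity = [
    [0.9, 0.8, 0.1],   # token 0: high scores for experts 0 and 1
    [0.2, 0.1, 0.3],   # token 1: low scores for every expert
    [0.1, 0.2, 0.95],  # token 2: strongly matched to expert 2 only
    [0.7, 0.6, 0.4],   # token 3: moderate scores for all experts
]
assignment = expert_choice_route(affinity, capacity=2)
print(assignment)                         # {0: [0, 3], 1: [0, 3], 2: [2, 3]}
print(experts_per_token(assignment, 4))   # [2, 0, 1, 3]
```

The total work is fixed at `num_experts * capacity` token-expert pairs (6 here), but it is spread unevenly: token 3 is processed three times while token 1 is skipped entirely.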

Plain English Explanation

When generating images with AI, many of the latest models use an architecture called a diffusion transformer. These models are powerful but resource-hungry. DiffMoE addresses this by being selective about where it spends computational power.
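One simple way to picture "being selective about where to focus computational power" is a score threshold: a token is sent to an expert only when the router's score clears the bar, so the amount of work changes with the threshold instead of being fixed. The sketch below assumes that thresholding scheme purely for illustration; it is not claimed to be how DiffMoE allocates compute, and `threshold_route`, `active_fraction`, and the scores are hypothetical.

```python
def threshold_route(affinity, threshold):
    """Send token t to expert e only if affinity[t][e] >= threshold."""
    num_tokens = len(affinity)
    num_experts = len(affinity[0])
    return {
        e: [t for t in range(num_tokens) if affinity[t][e] >= threshold]
        for e in range(num_experts)
    }


def active_fraction(assignment, num_tokens, num_experts):
    """Share of all possible token-expert pairs that actually get computed."""
    processed = sum(len(tokens) for tokens in assignment.values())
    return processed / (num_tokens * num_experts)


# Hypothetical router scores: 4 tokens x 3 experts.
affinity = [
    [0.9, 0.8, 0.1],
    [0.2, 0.1, 0.3],
    [0.1, 0.2, 0.95],
    [0.7, 0.6, 0.4],
]

# A higher threshold means fewer token-expert pairs are processed.
for threshold in (0.3, 0.5, 0.75):
    routed = threshold_route(affinity, threshold)
    print(threshold, routed, active_fraction(routed, 4, 3))
```

The design trade-off is the one the summary describes: raising the threshold cuts compute, at the risk of skipping tokens that would have benefited from more processing.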

Traditional image generation models process every par...
