This is a Plain English Papers summary of a research paper called AI Models Still Fall 30% Behind Humans in Understanding Scientific Papers, New Benchmark Shows. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

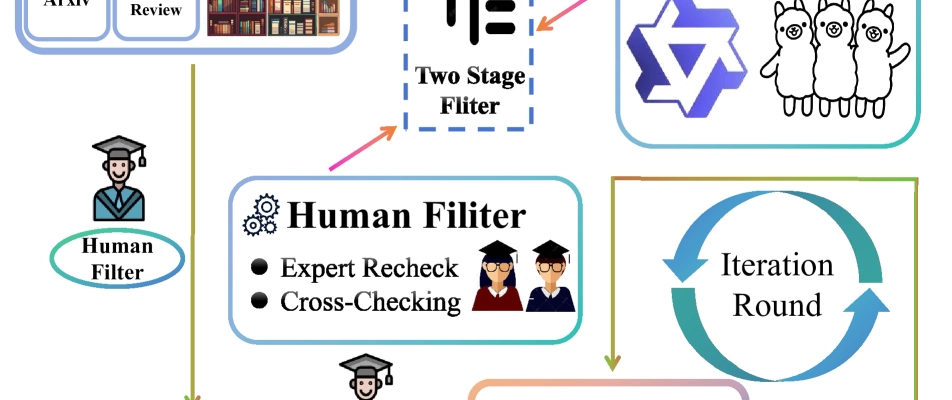

- MMCR is a benchmark for cross-source reasoning in scientific papers

- Tests ability to connect information across text, tables, figures, and math

- Contains 2,186 questions based on arXiv research papers

- First comprehensive dataset requiring multimodal reasoning across these different paper elements

- Exposes the limitations of current AI models in scientific document understanding

- GPT-4V achieves the highest score (55.5%) but falls 30 percentage points short of human performance (85.5%)
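
The "30%" in the title is an absolute gap in percentage points. Measured relative to human performance, the shortfall is larger. The short sketch below uses only the two scores reported above; the function name `accuracy_gap` is illustrative and not taken from the paper.

```python
def accuracy_gap(model_acc: float, human_acc: float) -> tuple[float, float]:
    """Return (absolute gap in percentage points, relative shortfall as a fraction of human score)."""
    absolute = human_acc - model_acc   # difference in percentage points
    relative = absolute / human_acc    # share of the human score the model misses
    return absolute, relative


# Scores (in percent) as reported in this summary
gpt4v = 55.5
human = 85.5

abs_gap, rel_gap = accuracy_gap(gpt4v, human)
print(f"Absolute gap: {abs_gap:.1f} percentage points")  # prints "Absolute gap: 30.0 percentage points"
print(f"Relative shortfall: {rel_gap:.1%}")              # prints "Relative shortfall: 35.1%"
```

So GPT-4V trails humans by 30 points, which amounts to missing roughly 35% of the human score.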

Plain English Explanation

When you read a scientific paper, your eyes dart back and forth between the main text, the figures, the data tables, and the mathematical equations. You need to mentally connect these different parts to fully understand what the researchers ...
