This is a Plain English Papers summary of a research paper called Top AI Models Fail LEGO Puzzle Test, Humans Still Better at Spatial Reasoning. If you like these kinds of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

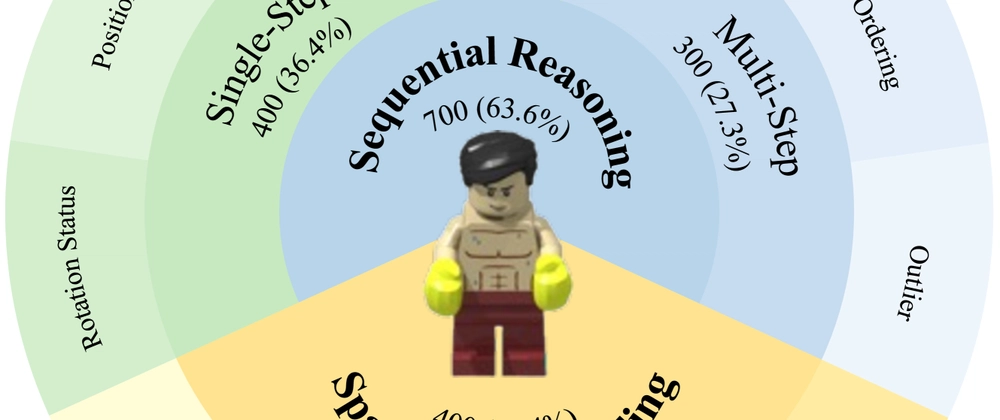

- LEGO-Puzzles is a new benchmark for testing multimodal large language models (MLLMs) on spatial reasoning.

- The benchmark uses LEGO bricks to create complex 3D puzzles requiring multi-step planning.

- Models must determine if puzzle pieces can be combined into target shapes following specific rules.

- Top MLLMs (GPT-4V, Claude 3 Opus) struggle on this benchmark, with performance below 60%.

- Human performance (85.8%) significantly outperforms current AI models.

- The research reveals particular weaknesses in MLLMs' ability to reason about physical constraints and multi-step processes.

Plain English Explanation

LEGO bricks have fascinated children and adults for generations because they require spatial thinking and planning. This research uses LEGO-style puzzles to test how well advanced AI systems can handle similar challenges.

The researchers created LEGO-Puzzles, a test where AI s...

Top comments (0)