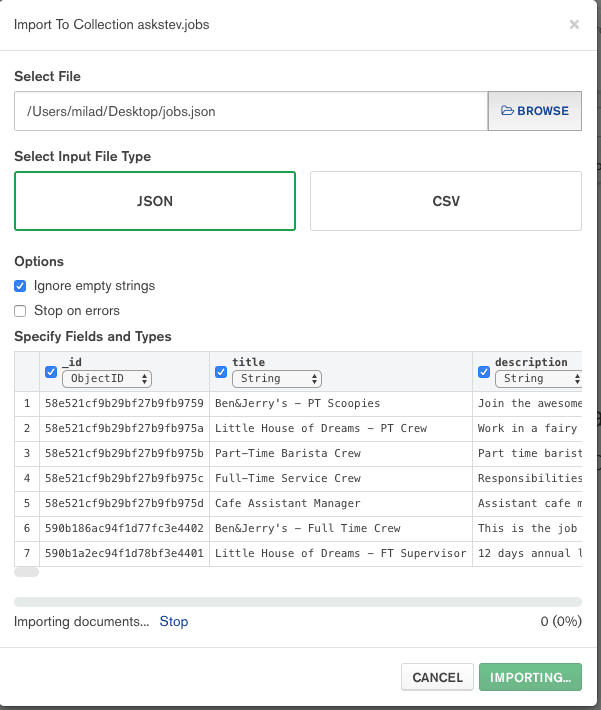

I was trying to import a large JSON dataset (about 7 GB) into MongoDB. My first attempt used MongoDB Compass, but after 30 minutes the import was still stuck at 0%, as shown in the image above.

So I switched to mongoimport. Because I run MongoDB in Docker, the first step is to copy the huge JSON file into the MongoDB container:

```bash
docker cp users.json CONTAINER_NAME:/users.json
```

Then open a shell inside the MongoDB container:

```bash
docker exec -it CONTAINER_NAME bash
```

Now import the file with mongoimport:

```bash
mongoimport --db MY_DB --collection users --drop --jsonArray --batchSize 1 --file /users.json
```

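The `--batchSize 1` option makes mongoimport send documents to the server one at a time instead of in large batches, which should keep its memory usage low even for a huge file. `--drop` removes any existing `users` collection before the import, and `--jsonArray` tells mongoimport that the file is a single top-level JSON array. Note that `--batchSize` does not appear in the official mongoimport documentation, so check that your version of the MongoDB database tools accepts it.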
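If you prefer not to open an interactive shell, the copy and import steps can also be driven from the host. Below is a minimal sketch, assuming bash on the host and the same `users` collection and flags used above. The `import_json` and `run` functions and the `DRY_RUN` variable are hypothetical helpers introduced here, not part of Docker or MongoDB. It calls mongoimport through a non-interactive `docker exec`, so no `-it` or `bash` session is needed.

```bash
#!/usr/bin/env bash
# Sketch: copy a JSON file into the MongoDB container and import it
# without opening an interactive shell. Helper names are hypothetical.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Usage: import_json CONTAINER DATABASE FILE
import_json() {
  local container="$1" db="$2" file="$3" target
  target="/$(basename "$file")"
  # Step 1: copy the file into the container's root directory.
  run docker cp "$file" "${container}:${target}"
  # Step 2: run mongoimport inside the container (no -it needed).
  run docker exec "$container" mongoimport --db "$db" --collection users \
    --drop --jsonArray --batchSize 1 --file "$target"
}

# Execute only when run directly with arguments (not when sourced).
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 3 ]]; then
  import_json "$1" "$2" "$3"
fi
```

Saved as a hypothetical `import.sh`, running `DRY_RUN=1 ./import.sh CONTAINER_NAME MY_DB users.json` prints the two commands without executing them; drop `DRY_RUN=1` to actually copy and import.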

If mongoimport is killed during the import (it prints `Killed`, which usually means the process ran out of memory), you may need to give the container more memory by adding `deploy.resources` to your docker-compose file:

```yaml
version: "3"

services:
  mongodb:
    image: mongo:3.6.15
    deploy:
      resources:
        limits:
          memory: 4000M
        reservations:
          memory: 4000M
```
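Depending on your Compose version, `deploy.resources` may not be applied when you start the containers (older `docker-compose` releases only honored `deploy` under `docker stack deploy` or with the `--compatibility` flag), so it is worth confirming the limit actually reached the container. Below is a minimal sketch, assuming bash and that `docker inspect` reports the limit in bytes in `.HostConfig.Memory` (0 meaning no limit). `to_bytes` and `check_memory_limit` are hypothetical helpers, and the conversion assumes Docker's binary units (1M = 1024 × 1024 bytes).

```bash
#!/usr/bin/env bash
# Sketch: confirm that the memory limit from docker-compose reached the container.
# Assumes Docker's binary units (k = 1024 bytes) for Compose memory strings.
set -euo pipefail

# Convert a Compose-style size such as 4000M or 4g to bytes.
to_bytes() {
  local raw num unit
  raw="$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')"
  num="${raw%%[!0-9]*}"   # leading digits
  unit="${raw#"$num"}"    # whatever follows the digits
  [[ -n "$num" ]] || { echo "invalid size: $1" >&2; return 1; }
  case "$unit" in
    ""|b) echo $(( 10#$num )) ;;
    k|kb) echo $(( 10#$num * 1024 )) ;;
    m|mb) echo $(( 10#$num * 1024 * 1024 )) ;;
    g|gb) echo $(( 10#$num * 1024 * 1024 * 1024 )) ;;
    *) echo "unknown unit: $unit" >&2; return 1 ;;
  esac
}

# Usage: check_memory_limit CONTAINER EXPECTED_SIZE
check_memory_limit() {
  local container="$1" expected actual
  expected="$(to_bytes "$2")" || return 1
  # .HostConfig.Memory is the memory limit in bytes; 0 means "no limit".
  actual="$(docker inspect --format '{{.HostConfig.Memory}}' "$container")" || return 1
  if [[ "$actual" == "$expected" ]]; then
    echo "OK: $container memory limit is $actual bytes"
  else
    echo "MISMATCH: $container limit is $actual bytes, expected $expected" >&2
    return 1
  fi
}

# Execute only when run directly with arguments (not when sourced).
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -ge 2 ]]; then
  check_memory_limit "$1" "$2"
fi
```

Saved as a hypothetical `check_memory.sh`, you would run it as `./check_memory.sh CONTAINER_NAME 4000M`, using the container name reported by `docker ps`. A mismatch (for example a limit of 0) suggests the `deploy` section was ignored by your Compose version.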
