If you would prefer to listen to this post rather than read it, scroll to the bottom to view the talk this post was written from.

Breaking stuff is part of being a developer, but that never makes it any easier when it happens to you. The story I am about to share is from my time at my previous employer, Kenna Security. It is often referred to as "The Elasticsearch Outage of 2017" and it was the biggest outage Kenna has ever experienced.

We drifted between full-blown downtime and degraded service for almost a week. However, it taught us a lot about how to better prepare for and handle upgrades in the future. It also bonded our team together and highlighted the important role teamwork and leadership play in high-stress situations. The lessons we learned are ones that we will not soon forget. By sharing this story I hope that others can learn from our experience and be better prepared when they execute their next big upgrade.

Elasticsearch Lingo

Before I get started I want to briefly explain some of the Elasticsearch lingo that I will be using so that it is easier to follow along.

- Node: For the sake of this post, whenever I say node I am referring to a server that is running Elasticsearch.

- Cluster: Nodes are grouped together to form a cluster. A cluster is a collection of nodes that all work together to serve Elasticsearch requests.

- Elasticsearch 2.x -> 5.x: In this post, I will talk about upgrading from 2.x to 5.x. Despite the jump of 3 in the version number, Elasticsearch 5 is actually only a single major version after 2. At the time, Elastic renumbered its products so that Elasticsearch and the rest of the Elastic Stack, such as Kibana (then at 4.x), would share a single version number, which is why the numbers jump by 3.

Ok now to the good stuff!

The Story

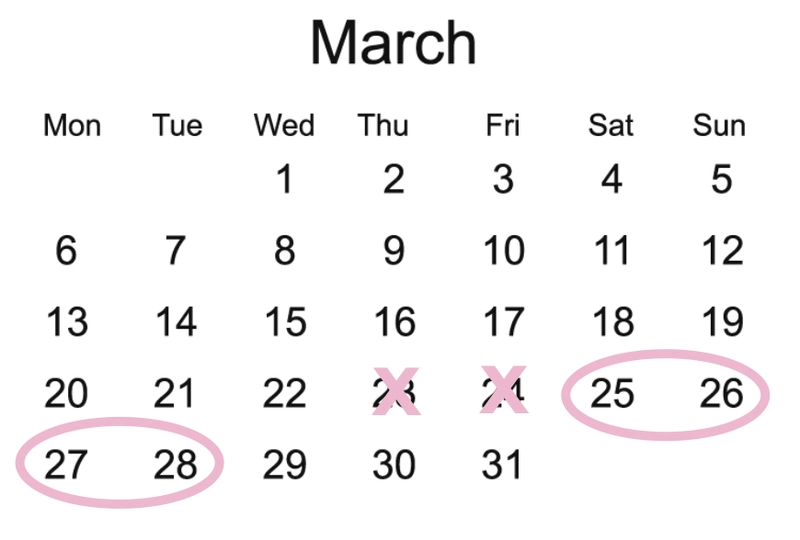

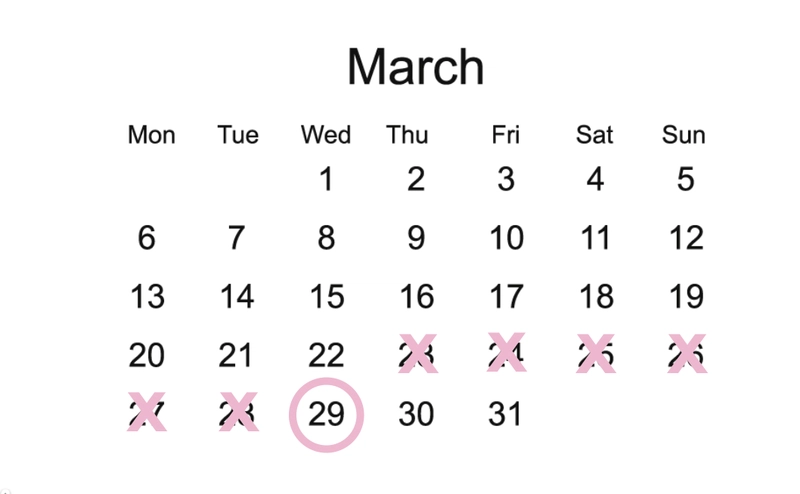

The year was 2017. It was late March and the time had come to upgrade our massive 21-node Elasticsearch cluster. Preparation had been underway for weeks getting the codebase ready for the upgrade and everyone was super excited. The last time we had upgraded Elasticsearch we saw HUGE performance gains, and we couldn't wait to see what benefits we would get this time. We chose to do the upgrade on March 23rd, which was a Thursday night.

We often chose Thursday nights for our upgrades because if anything needed to be sorted out, we had a workday to do it and we wouldn't have to work into the weekend.

The upgrade involved:

- Shutting down the entire cluster

- Upgrading Elasticsearch

- Deploying our code changes for the application

- Turning the cluster back on
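For reference, here is how those four steps could map onto the full-cluster-restart procedure that the Elasticsearch upgrade docs describe for 2.x and 5.x: disable shard allocation, do a synced flush, restart everything on the new version, wait for the cluster to recover, then re-enable allocation. This is a minimal Python sketch, not a record of the exact commands used during this upgrade; `full_restart_upgrade_plan`, `run_plan`, and the `send`/`confirm` hooks are hypothetical names for your HTTP client and for a human checking off the manual steps.

```python
import json
from typing import Callable, List, Optional, Tuple

# One step of the plan: (HTTP method or "MANUAL", path or description, JSON body or None)
Step = Tuple[str, str, Optional[dict]]


def full_restart_upgrade_plan() -> List[Step]:
    """Return the upgrade steps in order, as a sketch of a full cluster restart."""
    return [
        # Stop shards from being reallocated while nodes go offline.
        ("PUT", "/_cluster/settings",
         {"persistent": {"cluster.routing.allocation.enable": "none"}}),
        # A synced flush lets idle shards recover faster after the restart (2.x/5.x).
        ("POST", "/_flush/synced", None),
        ("MANUAL", "shut down every node", None),
        ("MANUAL", "install the new Elasticsearch version on every node", None),
        ("MANUAL", "deploy the application code changes", None),
        ("MANUAL", "start every node", None),
        # Block until all primary shards are assigned (status yellow or better).
        ("GET", "/_cluster/health?wait_for_status=yellow&timeout=10m", None),
        # Let replicas be allocated again.
        ("PUT", "/_cluster/settings",
         {"persistent": {"cluster.routing.allocation.enable": "all"}}),
    ]


def run_plan(plan: List[Step],
             send: Callable[[str, str, Optional[str]], None],
             confirm: Callable[[str], None]) -> None:
    """Send API steps through `send`; hand manual steps to `confirm`."""
    for method, target, body in plan:
        if method == "MANUAL":
            confirm(target)
        else:
            send(method, target, json.dumps(body) if body is not None else None)
```

Passing `send` and `confirm` in as functions keeps the sketch independent of any particular HTTP client and makes it easy to dry-run the plan before the real upgrade night.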

All of these steps went off without a hitch and as soon as the cluster was up we took our application out of maintenance mode and took the new cluster for a spin. However, when we started making requests to the cluster we began to see CPU and load spikes on some of the nodes.

This was a bit concerning, but since the cluster was still doing some internal balancing we chalked it up to that and called it a night. This brings us to Friday, March 24th.

In the morning we woke up to find that some of our Elasticsearch nodes had crashed overnight. We were not quite sure why, but assumed it was related to the cluster trying to balance itself. We restarted the crashed nodes and continued to monitor the cluster. Around 9 am, when site traffic started to pick up, we saw more CPU spikes, and eventually the entire cluster went down.

At this point we couldn’t deny it anymore: we knew something was very wrong. We immediately jumped into full-on debugging mode to try to figure out why the hell our cluster was suddenly hanging by a thread. We started reading stack traces and combing through logs, looking for any hints about what might be causing all of these load and CPU spikes. We tried Googling anything we could think of, but it didn't turn up much.

By Friday afternoon we decided to reach out for help. We posted on the Elastic discuss forum to see if anyone else had any clue what was going on.

We had no idea if anyone would answer. As many of you know, when you post a question on a forum like this or on Stack Overflow, you either get your answer or you get a lot of crickets. Lucky for us, it was the former. Much to our surprise, someone offered to help, and not just anyone: one of the senior engineers at Elastic, Jason Tedor!

Not going to lie, we were a bit awestruck and starstruck when we found out one of the core developers of Elasticsearch was on our case. The forum thread turned into a private email exchange in which we shared all of the data we could to help Jason figure out what was happening with our cluster. This back and forth lasted all weekend and into the following week.

I’m not going to sugarcoat it: during this time our team was in a special level of hell.

We were working 15+ hour days trying to figure out what was causing all of these issues while at the same time doing everything we could to keep the application afloat. But no matter what we did, that ship just kept sinking, and every time Elasticsearch went down we had to boot it back up.

Given that Elasticsearch is the cornerstone of Kenna’s platform, as you might imagine, customers and management were not happy during all of this.

Our VP of Engineering was constantly getting phone calls and messages asking for updates. With no solution in sight, we started talking about the “R” word. Was it time to roll back? Unfortunately, we had no plan for this, but it couldn’t be that hard, right...? Ooooh, how wrong we were in that assumption. We soon learned that once we had upgraded, we couldn't actually roll back. In order to "roll back" we would have to stand up an entirely new cluster and reindex (copy) all of our data into it.

We calculated that this would take us 5 days, which was not great news since we had already been down for almost a week. However, with no other good options, we stood up a cluster with Elasticsearch 2.x and started the slog of reindexing all of our data into it. Then Wednesday, March 29th rolled around.

At this point, the team was exhausted but still working hard to get Kenna back on its feet. And that's when it happened: we finally got the news we had been waiting for. Jason, the Elasticsearch engineer helping us, sent us a message saying he had found a bug.

HALLELUJAH!!! 🙌 It turned out the bug was in the Elasticsearch source code, and he found it thanks to the information we had given him. He immediately issued a patch for Elasticsearch and gave us a workaround to use until the patch was merged and officially released. When I implemented the workaround it was like night and day: our cluster immediately stabilized, which you can see in the graph below.

At this point we were so happy I think our entire team cried. The battle we had been fighting for nearly a week was finally over. But even though the incident was over, the learning had only just begun. It makes for a great story to tell over drinks, but, as you can imagine, our team also learned a few things from that upgrade, and those lessons are what I want to share with you.

Lessons Learned the Hard Way

1. Have a Rollback Plan

When doing any sort of upgrade, you must know what rolling back in the event of a problem involves. Can you roll back the software in place? If you can't, how would you get back to the original version? How long and how hard would a rollback be? If it is going to take a long time, that is something you might want to prepare for ahead of time.

Worst-case-scenario the shit out of the upgrade so that you are prepared for anything. That way, if or when something does come up, you have a plan. As a software engineer it can be really easy to focus only on your code. The code for the Elasticsearch upgrade could have been rolled back with a simple revert PR, but we never considered whether the software and data themselves could be rolled back after they had been upgraded.
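Even a back-of-the-envelope calculation answers "how long would a rollback take?" before the upgrade instead of during the outage. The sketch below assumes a rollback means provisioning a cluster on the old version and then reindexing every document at a constant, measured throughput. The `RollbackEstimate` class and every number in it are hypothetical, not Kenna's real figures.

```python
from dataclasses import dataclass


@dataclass
class RollbackEstimate:
    """Inputs for a rough reindex-based rollback estimate (all values hypothetical)."""
    total_docs: int           # documents that must be copied into the old-version cluster
    docs_per_second: float    # sustained indexing throughput you have actually measured
    setup_hours: float = 0.0  # time to provision and configure the replacement cluster

    def hours(self) -> float:
        """Setup time plus a full reindex at constant throughput, in hours."""
        if self.docs_per_second <= 0:
            raise ValueError("docs_per_second must be positive")
        reindex_hours = self.total_docs / self.docs_per_second / 3600
        return self.setup_hours + reindex_hours

    def days(self) -> float:
        """The same estimate expressed in days."""
        return self.hours() / 24
```

For example, a hypothetical 500 million documents at 2,000 docs/second plus 6 hours of setup works out to about 3.1 days. Real throughput rarely stays constant, so treat the result as a lower bound; if it comes out in days, that is your cue to plan the rollback ahead of time.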

2. Do Performance Testing

We went into this upgrade assuming it was going to be just as great as the last. Software only gets better, right? WRONG! But because of that blind assumption we never did any heavy performance testing. We validated that all the code worked with the new version of Elasticsearch, but that was it. Always, always performance test new software.

I don't care what kind of software you are upgrading to. I don't care how stable and widely used that software is, you have to performance test it! While the software as a whole might look good and stable to 99% of its users, you never know if your use case might be the small piece that has a bug or is unoptimized. Many companies had been running on Elasticsearch 5 when we upgraded to it but none of them ran into the particular bug we did. Since the outage, we have implemented a testing strategy that allows us to make Elasticsearch requests to multiple indexes in order to compare performance when making cluster changes.
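Here is a minimal sketch of that kind of comparison, assuming the old and new setups are reachable as separate indexes (or separate clusters) and that query latency is the metric you care about. `http_search` calls Elasticsearch's standard `POST /<index>/_search` endpoint; `compare_indexes` and any index names are hypothetical. It only issues sequential requests, so on its own it will not reproduce production-level load, and realistic load is exactly the piece we were missing.

```python
import json
import statistics
import time
import urllib.request
from typing import Callable, Dict, List


def http_search(base_url: str, index: str, query: dict) -> dict:
    """POST a query to /<index>/_search and return the parsed JSON response."""
    req = urllib.request.Request(
        f"{base_url}/{index}/_search",
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def compare_indexes(search: Callable[[str, dict], object],
                    indexes: List[str],
                    queries: List[dict],
                    repeats: int = 5) -> Dict[str, float]:
    """Run the same queries against each index; return median latency (ms) per index."""
    results: Dict[str, float] = {}
    for index in indexes:
        timings: List[float] = []
        for _ in range(repeats):
            for query in queries:
                start = time.perf_counter()
                search(index, query)
                timings.append((time.perf_counter() - start) * 1000)
        results[index] = statistics.median(timings)
    return results
```

Usage might look like `compare_indexes(lambda i, q: http_search("http://localhost:9200", i, q), ["assets_old", "assets_new"], queries)`. Taking the median rather than the mean keeps one slow outlier, such as a garbage-collection pause, from dominating the comparison.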

3. Don't Ignore Small Warning Signs

This is not something I covered in the story since it happened well before the upgrade. When we were initially working on the code changes for this upgrade we did all of the testing locally. During that testing we ended up crashing Elasticsearch in our local environments multiple times. Once again, we figured there was no way it could be an issue with the upgraded software. It had to be an issue with our setup or our configuration. We tweaked some settings to make it stable and went on making code changes for the upgrade.

In hindsight, this was probably the biggest miss. But our trust in the software and our past experiences twisted our bias so much that it never even entered our minds that the problem was with Elasticsearch. If you get small warning signs, investigate, dig further. Don't just get it into a working state and move on. Make sure you understand what is happening before you dismiss the warning sign as not important.

Lessons Learned the Easy Way

That gives us 3 lessons learned. Unfortunately, all of those above lessons were learned the hard way, by not following them during this upgrade. Now I want to shift gears because these next 3 lessons are things that went incredibly well during the upgrade. When I look back on the whole experience it's pretty easy to see that if these next 3 lessons had not gone well the fallout from the upgrade would have been way worse.

I’m sure some of you are thinking, excuse me, worse? You were down for nearly a week, how could it get worse?! Trust me, it could have been way worse and I think that will become clear with these next 3 lessons.

4. Use the Community

Never discount the help that can be found within the community. Whether it is online or simply calling a former coworker, it can be hard and scary to ask for help sometimes. No one wants to be the one who asks the question that has a one-line answer, but don't let that stop you! Wouldn’t you rather have that one-line answer than waste a day or more chasing your tail?!

This is one aspect of this upgrade that I felt our team did very well. The day after the upgrade we immediately reached out to the community for help by posting on the Elastic discuss forums. It took me maybe an hour to write out my question and gather all the information for it and it was by far the most valuable hour of the entire upgrade because that post is what got us our answer.

Many people reach out to the community only as a last resort. I’m here to tell you: DON'T WAIT! Don’t wait until you are at the end of your rope. Don’t wait until you have suffered through days of downtime. Before you find your back up against a wall, ASK! You might save yourself and your team a whole lot of struggle and frustration. I am very proud that we turned to the community almost immediately, and it paid off. Sure, it might not pay off every single time, but asking is so simple, so why not give it a shot? Worst case, writing the question will help you organize your thoughts around your debugging efforts.
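Gathering the information for a forum post goes faster when it is scripted. The sketch below pulls a few read-only diagnostic endpoints that exist in both Elasticsearch 2.x and 5.x (cluster health, `_cat/nodes`, node stats, and hot threads, which is especially useful when chasing CPU spikes) into one text blob you can paste into a post. The choice of endpoints and the function names are assumptions, not a record of what our original forum post contained.

```python
import urllib.request
from typing import Callable, Dict, Iterable

# Read-only endpoints available in both Elasticsearch 2.x and 5.x (chosen as an assumption).
DIAGNOSTIC_ENDPOINTS = (
    "/_cluster/health?pretty",
    "/_cat/nodes?v",
    "/_nodes/stats?pretty",
    "/_nodes/hot_threads",
)


def http_get(base_url: str) -> Callable[[str], str]:
    """Return a fetcher that GETs a path from the cluster and returns the body as text."""
    def fetch(path: str) -> str:
        with urllib.request.urlopen(base_url + path) as resp:
            return resp.read().decode("utf-8")
    return fetch


def collect_diagnostics(fetch: Callable[[str], str],
                        endpoints: Iterable[str] = DIAGNOSTIC_ENDPOINTS) -> Dict[str, str]:
    """Fetch every endpoint, recording errors instead of stopping halfway through."""
    report: Dict[str, str] = {}
    for path in endpoints:
        try:
            report[path] = fetch(path)
        except Exception as exc:  # a struggling cluster often times out on some calls
            report[path] = f"ERROR: {exc}"
    return report


def format_report(report: Dict[str, str]) -> str:
    """Render the results as one text blob with a section per endpoint."""
    return "\n\n".join(f"### GET {path}\n{body}" for path, body in report.items())
```

Calling `format_report(collect_diagnostics(http_get("http://localhost:9200")))` produces something you can paste straight into a post. Recording failures instead of raising matters here, because a cluster that is already falling over is exactly when some of these calls will time out.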

5. Leadership and Management Support Are Crucial

During any high-risk software upgrade or change, the leadership and management team backing your engineers is extremely important. When you look at this outage story as a whole, it is easy to focus on the engineers and everything they did to fix the problems and handle the upgrade. One of the key reasons we as engineers were able to do our jobs as well as we did was our Vice President (VP) of Engineering.

It wasn't just the engineers in the trenches on this one, our VP of Engineering was right there with us the entire way. He was up with us late into the evenings and online at the crack of dawn every day during the incident. He not only was there to offer help technically, but he was also our cheerleader and our defender. He fielded all of the calls and questions from upper management which allowed us to focus on getting things fixed. We never had to worry about talking or explaining ourselves to anyone, he handled it all.

Our VP also never wavered in his trust that we would figure it out. He was the epitome of calm, cool, and collected the entire time and that's what kept us pushing forward. His favorite saying is Fail Forward and he never showed that more than during this outage. Today, Fail Forward is one of the cornerstone values in Kenna’s engineering culture and we use this story as an example of that. If we had a different VP I am sure things would have turned out very differently.

So for those of you who are reading this who are VPs, those of you who are managers, those of you who are C-Suite executives, listen up! You may not be the ones pushing the code but I guarantee the role you play is much larger and much more crucial than you know during these scenarios. How you react is going to set an example for the rest of the team. Be their cheerleader. Be their defender.

Be whatever they need you to be for them. But above all else, TRUST that they can do it! That trust will go a long way towards helping the team believe in themselves and that is crucial for keeping morale up especially during a long-running incident such as this one.

6. Your Team Matters

Last but definitely not least, I want to highlight the incredible team of engineers I worked with through this upgrade. It goes without saying that during any big software change, upgrade or otherwise, your team matters.

Being a developer is not just about working with computers; you also have to work with people. This outage really drove that point home for me. It was a team of 3 of us working 15+ hour days during the outage and it was brutal! We went through every emotion in the book, from sad to angry to despondent. But rather than breaking us down, those emotions bonded us together.

This was a big aha moment for me because it made me realize that character is everything in a time of crisis. You can teach people tech, you can show them how to code, you can instill good architecture principles into their brains, but you can't teach character. When you are hiring, really get to know the people you are interviewing and assess whether they are someone you would want beside you, back against the wall, fighting a fire. Will they jump in when you need them, no questions asked? If the answer is yes, hire them, because that is not something you can teach.

In Summary

With that, the list of lessons that we learned from this outage is complete. On the technical side:

- Have a rollback plan

- Do performance testing

- Don’t ignore small warning signs

On the non-technical side of the equation:

- Use the community

- Leadership and management support are crucial

- Your team matters

If I am being honest, while the technical side is important, I firmly believe that those last 3 lessons are the most important. The reason I say this is because you can have a rollback plan, you can do performance testing, you can take note of and investigate every small warning sign that pops up, but in the end, there will still be times when things go wrong. It is inevitable in our line of work, things are going to break.

But if, when things go wrong, you use the community and have the right team and leadership in place, you will be fine. You can survive any outage or high-stress scenario that comes your way if you remember those 3 nontechnical lessons and put them into practice.

The Elasticsearch outage of 2017 is infamous at Kenna Security now, but not in the way that you would think. As brutal as it was for the team and as bad for the business as it was at the time, it helped us build the foundation for the Kenna engineering culture. It gave everyone a story to point to that said this is who we are. What happened there, that’s us! With that in mind, I think there is a bonus lesson here.

BONUS Lesson: Embrace Your Mistakes

When I say “Your” here I mean you personally and I also mean your team and your company. This outage was caused by a team miss. We own that and we embrace that. In our industry, outages and downtime are often taboo. When they happen someone is often blamed, possibly fired, we write a post mortem and then we quickly sweep it under a rug and hope that everyone forgets about it. That is not how it should work.

Embracing outages and mistakes is the only way we can really learn from them. Sharing these stories with others and being open about them benefits everyone and is going to make us all better engineers. The next time you have a big upgrade looming in your future, remember those 6 lessons so that you can prepare your code and your team to make the upgrade the best experience possible. But, in the event that an outage does occur, for any reason, embrace it, learn from it, and then share the experience with others.

Happy Coding! 😃

This post was based on a talk I gave at RubyConf 2019 called Elasticsearch 5 or and Bust!

Top comments (10)

Sounds rough 😨

It looks like you jumped 3 major versions too? For major updates like that, I definitely would require a backup plan.

Also, having a separate prod-like environment to test the changes is really beneficial for such major upgrades. It's saved our team so many times from major issues impacting our actual live environment. It has also allowed us zero-downtime and daytime deployments. 🙂

Going from version 2 to version 5 for Elasticsearch is actually only a single version bump. At the time, Elastic changed how it numbered its versions so that Elasticsearch would share a version number with the rest of the Elastic Stack, such as Kibana.

Definitely agree a prod like environment is crucial but it has to have prod like load as well which was really what we missed.

Ah yeah - it really is hard to fully duplicate prod like you point out, load is definitely one of those. Although routing traffic to the other environment could be an option too, if the infrastructure can support that?

aaaah we just had a similar "we're in crisis" mode here at work and seeing your post made me think about making one myself! We had our retro yesterday and just like your team, we concluded that we became closer and we communicated so much more than before. Seems like crisis does bring people together huh? 😄

Thanks for sharing!

OMG yes please share!!! So glad that your crisis also went "well" for your team!

What a roller coaster!!? 🎢

Thanks for the awesome post!

Glad you enjoyed it! It definitely was quite the roller coaster but I wouldn't trade the experience for anything 😊

Lessons well noted

Aren't you glad I got that out of my system before coming to DEV?! 😂

Reading this AFTER deploying an upgrade to Rails 6 🤣 (went well)

What a story, Molly 👏🏽. We all can (and should) learn from it.