In Part 1 of this blog I laid out all the techniques my company, Kenna Security, used to speed up indexing while scaling its cluster.

In Part 2, I want to share some of the techniques we used to speed up search while growing our cluster to over 4 billion documents.

Group Your Data

To start, I want to talk about data organization. Many people use Elasticsearch for storing logs. Most logging clusters are set up so indexes are based on dates. One of the biggest reasons logging clusters are set up that way is that dates are a naturally easy way to narrow down data when you are searching. If you want to search two days’ worth of data, Elasticsearch only has to query two indexes.

This means fewer shards to search, which leads to faster searches. This concept of grouping data to help speed up search can also be applied to a non-logging cluster.
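As a rough illustration of the date-based layout, here is a minimal Python sketch that works out which daily indexes a search needs to touch. The `logs-` prefix, the `YYYY.MM.DD` naming pattern, and the helper names are assumptions made for this example, not something taken from a real cluster.

```python
from datetime import date, timedelta
from typing import List


def daily_index_names(end: date, days: int, prefix: str = "logs-") -> List[str]:
    """Return one index name per day, newest first, for the `days` days ending at `end`."""
    names = []
    for offset in range(days):
        day = end - timedelta(days=offset)
        # Hypothetical naming pattern: one index per calendar day.
        names.append(prefix + day.strftime("%Y.%m.%d"))
    return names


def search_path(index_names: List[str]) -> str:
    """Build a search path that targets only the listed indexes."""
    # Elasticsearch accepts a comma-separated list of index names in the path.
    return "/" + ",".join(index_names) + "/_search"


if __name__ == "__main__":
    print(search_path(daily_index_names(date(2019, 3, 2), days=2)))
    # prints "/logs-2019.03.02,logs-2019.03.01/_search"
```

Searching two days of data touches two indexes, no matter how many other days are stored in the cluster.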

When Kenna first started using Elasticsearch, all our data was in a single, small index. As the amount of data increased, we had to increase the number of shards in that index. As our shard count grew, our search speed slowed. To speed things up, we decided to split up our data by client. Each client now lives on its own index.

This made the most sense for us because when we look up data, 99% of the time it is by client. Now when we run a search for a client we only have to look at a smaller subset of shards, instead of all of them. This has allowed us to maintain fast search speeds while adding more and more data to the cluster.
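The same idea can be sketched for a per-client layout. The index naming scheme below (`assets_client_<id>`) and the function names are hypothetical; the point is only that the client ID picks the index, so a search never has to visit another client’s shards.

```python
def client_index(client_id: int, prefix: str = "assets_client_") -> str:
    """Return the name of the index that holds a single client's documents."""
    # Hypothetical naming scheme: one index per client.
    return f"{prefix}{client_id}"


def client_search_path(client_id: int) -> str:
    """Build a search path scoped to one client's index."""
    return f"/{client_index(client_id)}/_search"


if __name__ == "__main__":
    print(client_search_path(42))
    # prints "/assets_client_42/_search"
```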

Filters Are Friends

In addition to data organization, you can also speed up searches by optimizing their structure. Elasticsearch has two types of search modes: queries and filters.

Queries, which have to score documents, are a lot more work for Elasticsearch. Filters, which don’t score documents, are less work and therefore faster. At Kenna we exclusively use filters because we know their benefits. However, we didn’t fully appreciate those benefits until March, 2016.

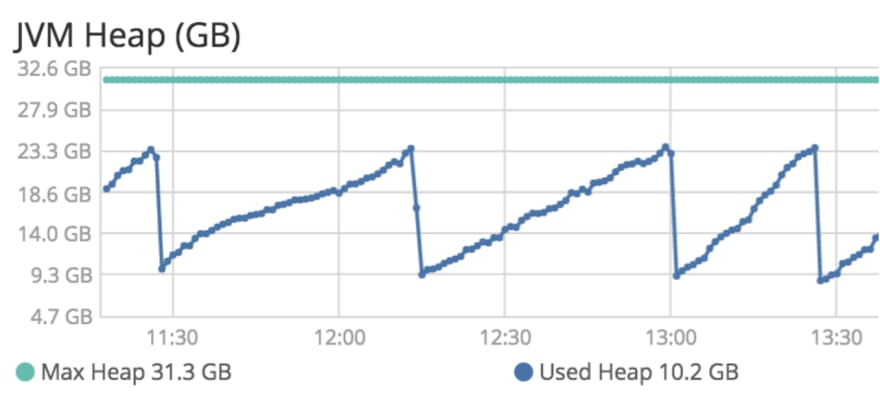

In March, 2016 we upgraded to Elasticsearch 5. During the upgrade we ran into an Elasticsearch bug that caused some of our filters to be scored. Just how much more work was this for our cluster? Well, our heap graphs started to look like this...

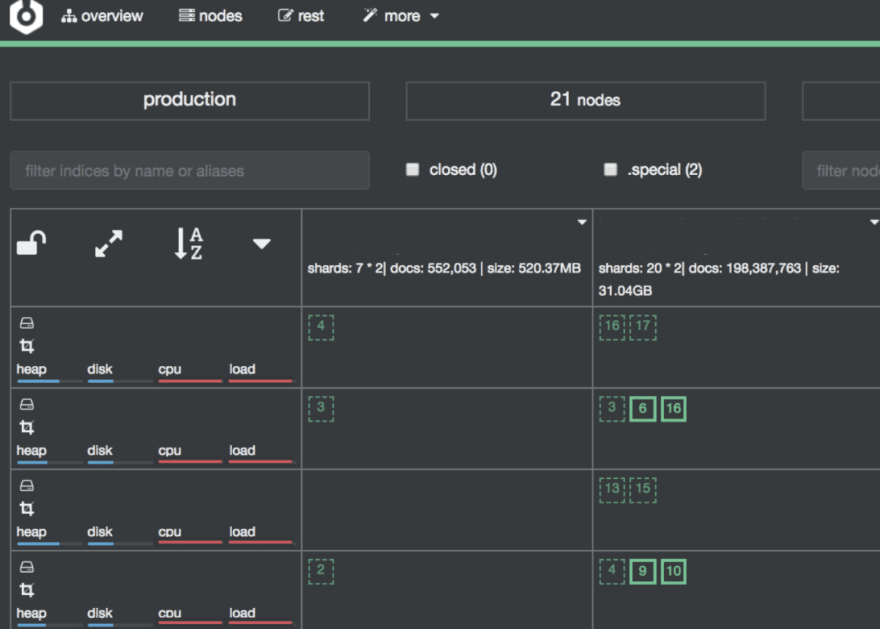

For reference, this is what a heap graph is supposed to look like...

Ours were far from healthy. Scoring a couple of heavily used filters caused so much extra work that our production cluster was rendered unusable for an entire week until the bug was patched. Let me tell you, that was a VERY long week 😬 This experience taught us how important filters truly are and that you should use them whenever possible.

If any part of your search doesn’t need to be scored, move it to a filter block. If you do need parts of your search scored, consider using a bool query. This allows you to combine scoring blocks, like must, and non-scoring blocks, like filter.
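Here is a minimal sketch of what such a bool query can look like, written as a Python dictionary that would be sent as the search body. The field names (`description`, `status`, `priority`) are made up for the example; only the `bool`, `must`, `filter`, `match`, and `term` keys come from the Elasticsearch query DSL.

```python
import json


def build_search(text: str, status: str, priority: str) -> dict:
    """Combine one scored clause with two non-scoring filter clauses."""
    return {
        "query": {
            "bool": {
                # Scored: relevance matters for the free-text part.
                "must": [{"match": {"description": text}}],
                # Not scored: these only include or exclude documents.
                "filter": [
                    {"term": {"status": status}},
                    {"term": {"priority": priority}},
                ],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_search("openssl", "open", "high"), indent=2))
```

If nothing in the search needs a score, the `must` block can be dropped and every clause placed under `filter`.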

With our data organized and our newfound appreciation for filters, we were in a pretty good place with our Elasticsearch cluster by the end of 2017. This was when we finally started making some optimizations we had originally backlogged. One of those optimizations was to store IDs as keywords.

Store IDs as Keywords

This suggestion is one I heard over and over again at Elasticsearch training. (If you have never been to Elasticsearch training, I highly recommend it! I have been to training three times now and every time I have come away with actionable items that we were able to use to improve our cluster.) Basically, whenever you are storing IDs that are never going to be used for range searches, you want to store them as keywords. The reason for this is that keywords are optimized for terms searches. Integers, or numeric mapping types, are optimized for range searches. Upon learning this I didn’t think it would have much of an impact for us at Kenna so I made a ticket and forgot about it.

It was over a year before we finally pulled that ticket out of the backlog and actually did it. The results we got were more than we bargained for. When we finally made the switch from integers to keywords, we saw a 30% increase in search speed across the board.

We immediately wished we had made the change sooner, but better late than never!
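To make the change concrete, here is a small sketch of a mapping that stores an ID as a keyword, along with a terms lookup against it. The field names (`asset_id`, `risk_score`) are invented for the example, and the mapping uses the typeless format of Elasticsearch 7 and later; older versions nest `properties` under a mapping type name.

```python
from typing import Iterable

# Hypothetical mapping: the ID is only ever looked up by exact value,
# so it is a keyword; the score is used in range searches, so it stays numeric.
INDEX_BODY = {
    "mappings": {
        "properties": {
            "asset_id": {"type": "keyword"},
            "risk_score": {"type": "integer"},
        }
    }
}


def ids_lookup(asset_ids: Iterable[int]) -> dict:
    """Build a non-scoring terms lookup for a list of IDs."""
    return {
        "query": {
            "bool": {
                # Keyword values are strings, so the IDs are sent as strings.
                "filter": [{"terms": {"asset_id": [str(i) for i in asset_ids]}}]
            }
        }
    }


if __name__ == "__main__":
    print(ids_lookup([101, 102, 103]))
```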

The last optimization I want to share is yet another one that I heard at Elasticsearch training. However, it also got backlogged because we didn’t think it was that important. Yet again, we were wrong, and had to learn this lesson the hard way.

Don’t Let Your Users Slow You Down

One day we were monitoring Elasticsearch when, out of nowhere, all the nodes maxed out on CPU and load.

My team began scrambling to figure out what was causing the load. We started sifting through the slowlogs to see what queries were running and came across this gem.

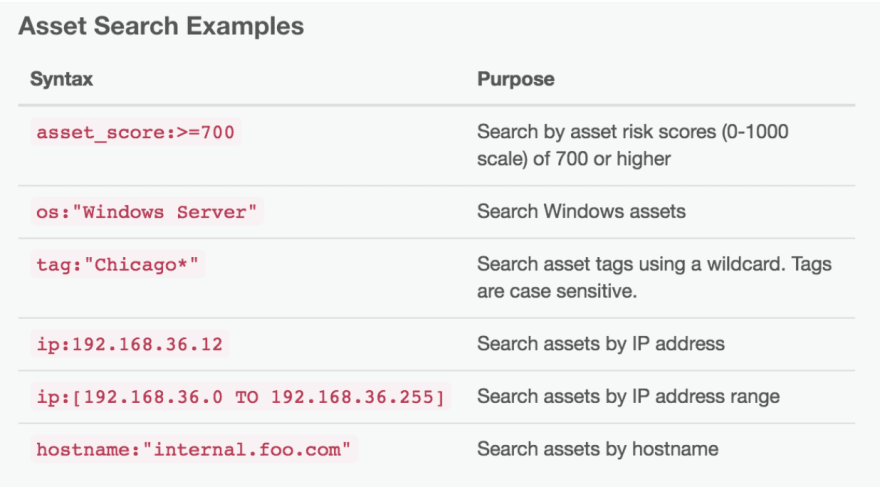

A ton of OR statements paired with a ton of leading wildcards, all in one query string from hell. This brings me to my last, and probably one of the most important, pieces of advice I have: don’t let your users slow you down!

It is crazy easy to slap a search box on your site and then send whatever is put in it to Elasticsearch. DON’T DO IT! Limit what users can and can’t search. We solved this problem at Kenna by defining a set of keywords that users can search with. We also spent time writing up more documentation to educate users about the fields available for searching.

With these changes, users’ searches are now more targeted, accurate, and much easier for Elasticsearch to handle. At the end of the day, everyone wins!
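One way to put that kind of limit in place is to validate the search box input before anything reaches Elasticsearch. The sketch below is not Kenna’s implementation; it assumes a simple `field:value` syntax, a made-up list of allowed fields, and an arbitrary cap on the number of clauses, and it refuses leading wildcards outright.

```python
ALLOWED_FIELDS = {"hostname", "status", "os"}  # hypothetical searchable fields
MAX_CLAUSES = 10  # arbitrary cap chosen for this example


class RejectedSearch(ValueError):
    """Raised when user input falls outside the supported search syntax."""


def parse_user_search(raw: str) -> dict:
    """Turn 'field:value field:value' input into a filter-only bool query."""
    tokens = raw.split()
    if not tokens:
        raise RejectedSearch("empty search")
    if len(tokens) > MAX_CLAUSES:
        raise RejectedSearch(f"too many clauses (limit is {MAX_CLAUSES})")

    filters = []
    for token in tokens:
        field, sep, value = token.partition(":")
        if not sep or not value:
            raise RejectedSearch(f"expected field:value, got {token!r}")
        if field not in ALLOWED_FIELDS:
            raise RejectedSearch(f"unknown field {field!r}")
        if value[0] in "*?":
            # Leading wildcards are the expensive case, so they are refused.
            raise RejectedSearch("leading wildcards are not supported")
        if "*" in value or "?" in value:
            filters.append({"wildcard": {field: value}})
        else:
            filters.append({"term": {field: value}})
    return {"query": {"bool": {"filter": filters}}}


if __name__ == "__main__":
    print(parse_user_search("status:open hostname:web*"))
    try:
        parse_user_search("hostname:*prod")
    except RejectedSearch as error:
        print("rejected:", error)
```

Anything the parser rejects never reaches the cluster, and the error message gives the user a hint about the syntax that is supported.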

Recap: Speed up Searching at Scale

- Group your data

- Use filters whenever possible

- Store IDs as keywords

- Don’t let your users slow you down

Planning Ahead

When we started out using Elasticsearch to handle all our clients’ searching needs, it seemed like we could do no wrong. Once our data size started growing, though, we quickly realized we were going to have to be smarter about how we were using Elasticsearch. Try to apply these search techniques when your cluster is small and it will make scaling a whole lot easier. Thanks to all these indexing and searching optimizations, Kenna’s Elasticsearch cluster is now one of the most stable pieces of its infrastructure, and we plan to keep it that way for a long time.

Knock on wood 😉

This blog was originally published on elastic.co
