Let's say you've been tasked with producing an Excel document containing the sizes of all the S3 buckets across multiple AWS accounts. Done by hand, this is tedious and takes a lot of time.

I was in a similar situation, so I came up with a simple Python script to do it.

All the accounts were on SSO, so having SSO already configured made this simpler to achieve.

This tutorial assumes that you have set up AWS SSO and have the necessary permissions to access the S3 buckets in the specified account. You may also need to install the boto3 library if it is not already installed on your system.

Initializing the boto3 client

First, a boto3 session is created for the SSO profile, and the S3 client is initialized from that session.

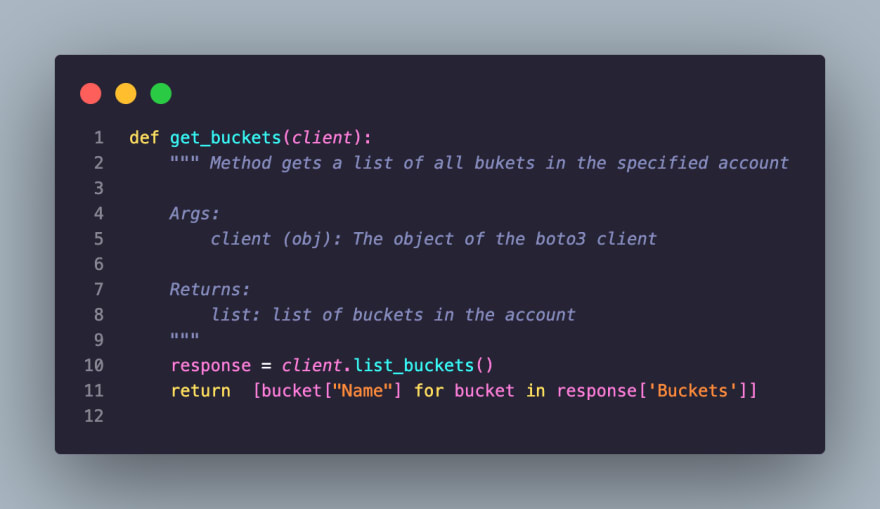
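As a rough text version of this step, here is a minimal sketch. The function name `make_s3_client` is hypothetical, and it assumes you have already signed in to the profile (for example with `aws sso login --profile production`).

```python
def make_s3_client(profile_name):
    """Return an S3 client bound to the given AWS SSO profile.

    `make_s3_client` is an illustrative name, not taken from the original script.
    """
    # Imported inside the function so the helper can be defined (and
    # exercised with a stub) even where boto3 is not installed yet.
    import boto3  # third-party: pip install boto3

    # The session picks up the cached SSO credentials for this profile.
    session = boto3.Session(profile_name=profile_name)
    return session.client("s3")
```

If the profile name does not exist in your AWS config, boto3 raises an error when the session is created, so a typo in the profile shows up early.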

Getting the list of all buckets in the account

Next, the list of all the buckets in the account is retrieved.
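A minimal sketch of this step, assuming `s3_client` is a boto3 S3 client; the helper name `list_bucket_names` is hypothetical:

```python
def list_bucket_names(s3_client):
    """Return the names of all buckets visible to the client's credentials."""
    # list_buckets() returns a dict whose "Buckets" entry is a list of
    # dicts, each with a "Name" and a "CreationDate".
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response["Buckets"]]
```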

Calculating the size of objects in each bucket

For each of the buckets listed above, the total size of its objects is calculated in GB. The code reads the contents listed for the bucket and adds up the size of each object. A KeyError is raised for empty buckets, since their listing has no contents, so that case needs to be handled.

A dictionary of the buckets and their sizes is then created and returned.
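The sketch below is one way to do the calculation, not necessarily how the original script does it. It makes three assumptions: it uses the `list_objects_v2` paginator (a single call returns at most 1,000 objects), it uses `page.get("Contents", [])` so empty buckets yield 0 instead of raising a KeyError, and it treats 1 GB as 1024³ bytes. The function names are hypothetical.

```python
BYTES_PER_GB = 1024 ** 3  # assumption: binary gigabytes


def bucket_size_gb(s3_client, bucket_name):
    """Sum the sizes of all objects in one bucket and return the total in GB."""
    paginator = s3_client.get_paginator("list_objects_v2")
    total_bytes = 0
    for page in paginator.paginate(Bucket=bucket_name):
        # Empty buckets have no "Contents" key, so fall back to an empty list.
        for obj in page.get("Contents", []):
            total_bytes += obj["Size"]  # "Size" is reported in bytes
    return total_bytes / BYTES_PER_GB


def all_bucket_sizes(s3_client, bucket_names):
    """Build the {bucket name: size in GB} dictionary."""
    return {name: bucket_size_gb(s3_client, name) for name in bucket_names}
```

Listing every object can be slow for very large buckets, since the number of requests grows with the number of objects.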

Writing to CSV

A CSV file named after the SSO profile is then generated, with the bucket name and the object size as its columns.
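A minimal sketch of this step using the standard `csv` module. The function name, the header labels `bucket_name` and `size_gb`, the `directory` parameter, and the three-decimal rounding are all assumptions made for illustration.

```python
import csv
from pathlib import Path


def write_sizes_csv(profile_name, sizes, directory="."):
    """Write {bucket name: size in GB} to <profile_name>.csv and return its path."""
    path = Path(directory) / f"{profile_name}.csv"
    # newline="" lets the csv module control line endings itself.
    with path.open("w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["bucket_name", "size_gb"])  # hypothetical header labels
        for name, size in sizes.items():
            writer.writerow([name, f"{size:.3f}"])
    return path
```

The resulting file opens directly in Excel, which covers the original goal of a spreadsheet per account.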

Bringing it all together

To run this code, you need to pass the SSO profile as an argument.

For example, if your code file is s3_size.py and the SSO profile is production, you execute it by entering the following command in the terminal:

python s3_size.py production
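One way the script could read that argument is sketched below with the standard `argparse` module. The function name `parse_profile` and the help text are hypothetical; the original script may read `sys.argv` directly instead.

```python
import argparse


def parse_profile(argv):
    """Return the SSO profile name given as the single positional argument."""
    parser = argparse.ArgumentParser(
        description="Export the sizes of all S3 buckets in an account to CSV."
    )
    parser.add_argument("profile", help="name of the AWS SSO profile to use")
    # argparse exits with a usage message if the profile is missing.
    return parser.parse_args(argv).profile
```

In the script itself this would be called as `parse_profile(sys.argv[1:])`, and the returned name passed to the session and to the CSV step. Running the script once per profile gives one CSV per account.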
