What is spaCy?

spaCy is a popular, free, open-source Python library for advanced Natural Language Processing (NLP).

If you are curious about what spaCy is capable of, don't worry: this article will walk you through it.


spaCy Capabilities and Features

Text Classification: spaCy can be used to classify text into categories, assigning labels to a whole document or to parts of it.

Named Entity Recognition: spaCy can extract entities from large documents and label real-world objects such as persons, locations, and organizations.

This can also be applied to extracting keywords from resumes for a specific role, depending on what a recruiter wants from candidates.

Tokenization: one of the most common tasks when working with unstructured text data. It is the process of splitting a phrase, sentence, paragraph, or an entire text document into smaller units, such as individual words or terms. Each of these smaller units is called a token.

spaCy can segment text into words, punctuation marks, and so on.
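As a minimal sketch of tokenization, the snippet below uses a blank English pipeline, which only contains the tokenizer and needs no downloaded model. The sample sentence is made up for illustration.

```python
import spacy

# A blank English pipeline: tokenizer only, no trained components
nlp = spacy.blank("en")

# Hypothetical sample sentence
doc = nlp("spaCy splits text into tokens, including punctuation!")

# Collect the text of every token
tokens = [token.text for token in doc]
print(tokens)
```

Note that the comma and the exclamation mark come out as separate tokens rather than staying attached to the neighbouring words.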

Lemmatization: reducing a word to its base or dictionary form (its lemma) by using a vocabulary and morphological analysis, normally by removing inflectional endings only.

spaCy can assign the base form of words: the lemma of "running" is "run", the lemma of "jumped" is "jump", and the lemma of "writing" is "write".

Similarity: spaCy can compare documents, words, and spans of text and estimate how similar they are. For example, you can build a tool that checks the similarity between documents in your organization with spaCy.

Rule-based Matching: spaCy can find sequences or patterns of tokens based on their texts and linguistic annotations, similar to regular expressions.
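To make rule-based matching a bit more concrete, here is a small sketch using spaCy's Matcher on a blank English pipeline. The pattern name NLP_PHRASE and the sample sentence are assumptions chosen for illustration.

```python
import spacy
from spacy.matcher import Matcher

nlp = spacy.blank("en")
matcher = Matcher(nlp.vocab)

# One pattern: three consecutive tokens, compared case-insensitively
pattern = [{"LOWER": "natural"}, {"LOWER": "language"}, {"LOWER": "processing"}]
matcher.add("NLP_PHRASE", [pattern])  # "NLP_PHRASE" is a hypothetical label

doc = nlp("spaCy is a library for Natural Language Processing in Python.")

# Each match is a (match_id, start, end) tuple of token indices
spans = [doc[start:end].text for _, start, end in matcher(doc)]
print(spans)
```

Unlike a regular expression, the pattern is written in terms of tokens and their attributes, so it keeps working if the spacing or casing of the text changes.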

Most of spaCy's features help with large-scale text analysis. For more about spaCy's features and capabilities, take a look at this.

The main topic of this article, however, is spaCy's processing pipelines, so let's dive in.

spaCy Process Pipelines

spaCy currently offers trained pipelines for a variety of languages, which can be installed as individual Python modules. Pipeline packages can differ in size, speed, memory usage, accuracy, and the data they include. The package you choose always depends on your use case and the texts you’re working with.

In spaCy, the loaded pipeline is conventionally stored in a variable named nlp, which is then called on a text string.

import spacy

nlp = spacy.load("en_core_web_sm")

text = "My article about spaCy"

doc = nlp(text)

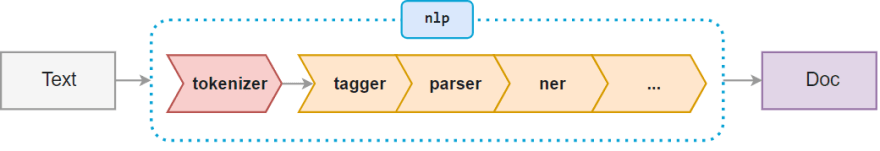

Whenever nlp is called on a text, spaCy first tokenizes the text to produce a Doc object. The Doc is then passed through a series of pipeline components, in order, each of which processes it and sets attributes on it.

The final output of this pipeline is the processed Doc.
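The two stages can be observed separately. The sketch below assumes a blank English pipeline with only the built-in sentencizer component added, so it runs without a downloaded model. It compares a Doc that has only been tokenized with one that has gone through the full pipeline.

```python
import spacy

nlp = spacy.blank("en")        # tokenizer only
nlp.add_pipe("sentencizer")    # built-in rule-based sentence splitter

text = "My article about spaCy. It covers pipelines."

# Stage 1 only: tokenize the text, run no components
tokens_only = nlp.make_doc(text)

# Both stages: tokenize, then run every component in order
processed = nlp(text)

# Sentence boundaries are only set once the sentencizer has run
print(tokens_only.has_annotation("SENT_START"))
print(processed.has_annotation("SENT_START"))
print([sent.text for sent in processed.sents])
```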

spaCy pipeline

spaCy Pipeline Components: spaCy has built-in components that perform the processing tasks whenever nlp is called. These components can be modified depending on the use case.

Some of the spaCy components and their descriptions:

- tokenizer - segments text into tokens (it always runs first and is not listed in pipe_names)

- tagger - part-of-speech tagger

- parser - dependency parser

- ner - named entity recognizer

- lemmatizer - token lemmatization

- textcat - text classification

The capabilities of a processing pipeline always depend on the components, their models, and how they were trained. Each loaded pipeline exposes two attributes for inspecting it:

- pipe_names - the list of component names

- pipeline - the list of (name, component) tuples

You can view these attributes by inspecting the loaded pipeline:

# import spacy

import spacy

# Load the en_core_web_sm pipeline

nlp = spacy.load("en_core_web_sm")

# Print the names of the pipeline components

print("pipeline name", nlp.pipe_names)

print("============================================================================")

# Print the full pipeline of (name, component) tuples

print("pipeline tuples", nlp.pipeline)

Output

pipeline name ['tok2vec', 'tagger', 'parser', 'attribute_ruler', 'lemmatizer', 'ner']

============================================================================

pipeline tuples [('tok2vec', <spacy.pipeline.tok2vec.Tok2Vec object at 0x7fd65b7407d0>), ('tagger', <spacy.pipeline.tagger.Tagger object at 0x7fd65b78c650>), ('parser', <spacy.pipeline.dep_parser.DependencyParser object at 0x7fd65ba55b40>), ('attribute_ruler', <spacy.pipeline.attributeruler.AttributeRuler object at 0x7fd65b70b3c0>), ('lemmatizer', <spacy.lang.en.lemmatizer.EnglishLemmatizer object at 0x7fd65b6c6500>), ('ner', <spacy.pipeline.ner.EntityRecognizer object at 0x7fd65ba55de0>)]

spaCy Custom Components: spaCy lets you add your own components to the pipeline, depending on the use case at hand. Custom components are typically useful for two kinds of tasks:

- Computing your own values based on tokens and their attributes

- Adding named entities, for example, based on a dictionary

Let's see how to apply custom components.

The first step is to define the custom component as a function, and the second is to add it to the spaCy pipeline.

How about adding a digit extractor component to the spaCy pipeline? Let's do it.

First, let's define a component for extracting digits from documents as a function named digit_component_function.

digit_component_function accepts the spaCy Doc object and converts it into a string. From that string it extracts all the whole numbers contained in the document, and finally the function returns the Doc object.

The final task is to add digit_component_function to the spaCy pipeline.
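Before the full listing, a short note on placement: add_pipe accepts the keyword arguments first, last, before, and after to control where a component sits in the pipeline. The sketch below uses a hypothetical do-nothing component named noop_component and a blank pipeline, purely to show the ordering.

```python
import spacy
from spacy.language import Language

# Hypothetical component that returns the Doc unchanged
@Language.component("noop_component")
def noop_component(doc):
    return doc

nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

# Place the custom component before an existing one
nlp.add_pipe("noop_component", before="sentencizer")

print(nlp.pipe_names)  # ['noop_component', 'sentencizer']
```

Placement matters when a component relies on annotations set by another one, because each component only sees what earlier components have already written to the Doc.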

Here is the full code for adding the custom digit component to spaCy:

# import spaCy

import spacy

from spacy.language import Language

# Define the custom component

@Language.component("digit_component")

def digit_component_function(doc):

    # convert the Doc object to a string

    txt = str(doc)

    # extract whitespace-separated whole numbers from the string

    print("This document contain the following digits:",[int(s) for s in txt.split() if s.isdigit()])

    # Return the doc

    return doc

# Load the small English pipeline

nlp = spacy.load("en_core_web_sm")

# Add the component first in the pipeline and print the pipe names

nlp.add_pipe("digit_component", first=True)

print(nlp.pipe_names)

# Process a text

text = "XLnet outperforms BERT on 20 tasks, often by a large margin. The new model achieves state-of-the-art performance on 18 NLP tasks including question answering,natural language inference, sentiment analysis,etc"

doc = nlp(text)

Output

['digit_component', 'tok2vec', 'tagger', 'parser', 'attribute_ruler', 'lemmatizer', 'ner']

This document contain the following digits: [20, 18]
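The other use case mentioned earlier, adding named entities based on a dictionary, could be sketched along the same lines. This is only an illustration under stated assumptions: the country list, the component name country_component, and the choice of the GPE label are all made up for the example, and a blank pipeline is used so no trained model is required.

```python
import spacy
from spacy.language import Language
from spacy.matcher import PhraseMatcher
from spacy.tokens import Span

nlp = spacy.blank("en")

# Hypothetical dictionary of country names
COUNTRIES = ["Tanzania", "Kenya", "Uganda"]

# Build a phrase matcher from the dictionary entries
matcher = PhraseMatcher(nlp.vocab)
matcher.add("COUNTRY", [nlp.make_doc(name) for name in COUNTRIES])

@Language.component("country_component")
def country_component_function(doc):
    # Turn every dictionary match into an entity span labelled GPE
    spans = [Span(doc, start, end, label="GPE") for _, start, end in matcher(doc)]
    doc.ents = spans
    return doc

nlp.add_pipe("country_component")

doc = nlp("I moved from Kenya to Tanzania.")
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

In a pipeline that already contains a trained ner component, overwriting doc.ents like this would discard its predictions, so a real component would likely need to merge the two sets of spans instead.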

spaCy Custom Attributes: spaCy also lets you extend its objects with your own attributes, depending on the use case at hand. Two common ways to do this are:

- Attribute extensions – Set a default value that can be overwritten

- Property extensions – Work like properties in Python. Define a getter and an optional setter function

Custom attributes are available via the ._ property, which distinguishes them from the built-in attributes. Attributes need to be registered on the Doc, Token, or Span classes using the set_extension method.

Let's extend Token with our own attribute for the country we live in. Using the set_extension method we add an is_country attribute, and afterwards we can inspect it through ._.is_country.

# import spacy

import spacy

from spacy.tokens import Token

nlp = spacy.blank("en")

# Register the Token extension attribute "is_country" with the default value False

Token.set_extension("is_country", default=False)

# Process the text and set the is_country attribute to True for the token "Tanzania"

doc = nlp("I live in Tanzania.")

doc[3]._.is_country = True

# Print the token text and the is_country attribute for all tokens

print([(token.text, token._.is_country) for token in doc])

Output

[('I', False), ('live', False), ('in', False), ('Tanzania', True), ('.', False)]
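The example above is an attribute extension. A property extension, the second kind listed earlier, might look like the sketch below. The extension name digits and the getter get_digits are hypothetical names chosen to mirror the digit component from the previous section, and force=True is passed only so the snippet can be re-run without an "already registered" error.

```python
import spacy
from spacy.tokens import Doc

# Getter: computed every time doc._.digits is accessed
def get_digits(doc):
    return [int(token.text) for token in doc if token.is_digit]

# Register a property extension on Doc (hypothetical name "digits")
Doc.set_extension("digits", getter=get_digits, force=True)

nlp = spacy.blank("en")
doc = nlp("XLNet outperforms BERT on 20 tasks and achieves state-of-the-art results on 18 tasks.")

print(doc._.digits)
```

Because the value is computed by the getter on access, nothing has to be assigned by hand, in contrast to is_country above.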

Now that you are familiar with how to work with spaCy pipelines, you can go further by looking at components with extensions, entity extraction, scaling and performance, selective processing, and more.

Thank you for reading this article. Please share it with the community.
