The Problem

Kubernetes doesn't ship with a built-in container registry, so the DigitalOcean Kubernetes Challenge challenged us to deploy one inside a cluster using a tool such as Trow or Harbor.

Introduction

In this guide:

- First, I will explain how to create a Kubernetes cluster on DigitalOcean using Terraform.

- Then I will deploy Trow in the same cluster as an internal container registry.

- Finally, I will push an Nginx image to Trow and run it in the cluster as a pod.

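Prerequisites:

We will be working with the following, so set them up on your host machine and configure them to your liking:

- DigitalOcean (an account; the doctl CLI is optional but handy)

- Kubernetes (kubectl)

- Terraform

- Trow (installed into the cluster later; cloning its installer and pushing images also needs git and Docker locally)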

Now onto THE CHALLENGE!

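First, create a Personal Access Token on the API page of the DigitalOcean control panel: click the "Generate New Token" button, and voilà, a token with read and write access to your account is generated (keep the write scope selected, since Terraform needs it to create the cluster). So simple, right? Copy the token right away, because DigitalOcean displays it only once.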

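Export the newly generated token as an environment variable. The DigitalOcean Terraform provider reads DIGITALOCEAN_TOKEN, and the curl command later in this post uses the same name:

```shell
export DIGITALOCEAN_TOKEN=tokenid
```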
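Before going further, it can help to confirm the token actually works. The sketch below assumes bash and curl are installed; `require_do_token` and `check_do_token` are hypothetical helper names of my own, not part of any tool. It calls the DigitalOcean API's `GET /v2/account` endpoint, which returns your account details when the token is valid.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (names are mine): check that DIGITALOCEAN_TOKEN is set
# and that the DigitalOcean API accepts it.

# Returns non-zero, with a message on stderr, when the variable is unset or empty.
require_do_token() {
  if [ -z "${DIGITALOCEAN_TOKEN:-}" ]; then
    echo "DIGITALOCEAN_TOKEN is not set; export it first" >&2
    return 1
  fi
}

# Calls GET /v2/account and discards the body; -f makes curl exit non-zero
# on HTTP errors such as 401 Unauthorized.
check_do_token() {
  require_do_token || return 1
  curl -fsS -o /dev/null \
    -H "Authorization: Bearer ${DIGITALOCEAN_TOKEN}" \
    "https://api.digitalocean.com/v2/account"
}
```

Usage: `check_do_token && echo "token OK"`. If the token is rejected, curl reports the HTTP error and the function returns non-zero.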

In your project directory (I named mine do-kubernetes-challenge-2021), create a Terraform configuration file such as yourfilename.tf:

```hcl
# Deploy the actual Kubernetes cluster
resource "digitalocean_kubernetes_cluster" "kubernetes_cluster" {
  name   = "do-kubernetes-challenge"
  region = "blr1"
  # Available version slugs change over time; list current ones with 'doctl kubernetes options versions'
  version = "1.19.15-do.0"
  tags    = ["my-tag"]

  # This default node pool configuration is mandatory
  node_pool {
    name       = "default-pool"
    size       = "s-1vcpu-2gb" # minimum size; list available options with 'doctl compute size list'
    auto_scale = false
    node_count = 2
    tags       = ["node-pool-tag"]

    labels = {
      "pratiktamgole.xyz" = "up"
    }
  }
}
```
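DigitalOcean retires old Kubernetes versions, so a hard-coded slug such as 1.19.15-do.0 may be rejected by the time you read this. One option, sketched below, is the provider's `digitalocean_kubernetes_versions` data source, which resolves the newest available slug matching a prefix. The helper `write_versions_tf` and the data source label `current` are my own (hypothetical) choices; after writing the file you would set `version = data.digitalocean_kubernetes_versions.current.latest_version` in the cluster resource.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes versions.tf with a data source that resolves the
# newest DigitalOcean Kubernetes version whose slug starts with a prefix.
# Usage: write_versions_tf [prefix]   (default "1.", i.e. any 1.x release)
write_versions_tf() {
  local prefix="${1:-1.}"
  # Unquoted EOF so ${prefix} is expanded into the generated file.
  cat > versions.tf <<EOF
data "digitalocean_kubernetes_versions" "current" {
  version_prefix = "${prefix}"
}
EOF
}
```

Using a data source keeps the version choice inside Terraform, so `terraform plan` shows which slug will be used before anything is created.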

Next, create an accompanying file named provider.tf:

```hcl
terraform {
  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.0"
    }
  }
}
# The provider reads the API token from the DIGITALOCEAN_TOKEN environment variable.
```
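Later we need the cluster's ID (and its kubeconfig). Rather than copying the ID from the apply log, Terraform outputs can expose both. This sketch writes an outputs.tf; the helper `write_outputs_tf` and the output names `cluster_id` and `kubeconfig` are my own, while `id` and `kube_config[0].raw_config` are attributes of the `digitalocean_kubernetes_cluster` resource.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes outputs.tf next to the cluster configuration.
# The quoted 'EOF' keeps the shell from expanding anything inside the heredoc.
write_outputs_tf() {
  cat > outputs.tf <<'EOF'
output "cluster_id" {
  value = digitalocean_kubernetes_cluster.kubernetes_cluster.id
}

# Marked sensitive so Terraform hides it in plan/apply output.
output "kubeconfig" {
  value     = digitalocean_kubernetes_cluster.kubernetes_cluster.kube_config[0].raw_config
  sensitive = true
}
EOF
}
```

After `terraform apply`, `export CLUSTER_ID=$(terraform output -raw cluster_id)` fills in the cluster ID, and `terraform output -raw kubeconfig > config` is an alternative to downloading the kubeconfig with curl.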

Perform the Terraform deployment:

```console
$ terraform init

Initializing the backend...

Initializing provider plugins...
- Reusing previous version of digitalocean/digitalocean from the dependency lock file
- Using previously-installed digitalocean/digitalocean v2.16.0

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

```console
$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # digitalocean_kubernetes_cluster.kubernetes_cluster will be created
  + resource "digitalocean_kubernetes_cluster" "kubernetes_cluster" {
      + cluster_subnet = (known after apply)
      + created_at     = (known after apply)
      + endpoint       = (known after apply)
      + ha             = false
      + id             = (known after apply)
      + ipv4_address   = (known after apply)
      + kube_config    = (sensitive value)
      + name           = "terraform-do-cluster"
      + region         = "blr1"
      + service_subnet = (known after apply)
      + status         = (known after apply)
      + surge_upgrade  = true
      + tags           = [
          + "my-tag",
        ]
      + updated_at     = (known after apply)
      + urn            = (known after apply)
      + version        = "1.19.15-do.0"
      + vpc_uuid       = (known after apply)

      + maintenance_policy {
          + day        = (known after apply)
          + duration   = (known after apply)
          + start_time = (known after apply)
        }

      + node_pool {
          + actual_node_count = (known after apply)
          + auto_scale        = false
          + id                = (known after apply)
          + labels            = {
              + "dev.to/patglitch" = "up"
            }
          + name              = "default-pool"
          + node_count        = 2
          + nodes             = (known after apply)
          + size              = "s-1vcpu-2gb"
          + tags              = [
              + "node-pool-tag",
            ]
        }
    }

Plan: 1 to add, 0 to change, 0 to destroy.

─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run "terraform apply" now.
```
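Terraform's note about `-out` is worth acting on: saving the plan to a file and applying that file guarantees that `apply` performs exactly the actions you reviewed. A minimal sketch, where `deploy_cluster` is a hypothetical helper name and `tfplan` is an arbitrary file name I chose:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: init, save a plan to a file, then apply exactly that plan.
# Applying a saved plan file does not prompt for approval.
# Usage: deploy_cluster [plan-file]   (default: tfplan)
deploy_cluster() {
  local planfile="${1:-tfplan}"
  terraform init -input=false || return 1
  terraform plan -input=false -out="$planfile" || return 1
  terraform apply -input=false "$planfile"
}
```

Each step stops the chain on failure, so a broken plan is never applied.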

Then apply it. `--auto-approve` skips the interactive "yes" confirmation; `terraform apply` prints the same plan as `terraform plan` above and then creates the cluster, which took almost seven minutes:

```console
$ terraform apply --auto-approve
...
Plan: 1 to add, 0 to change, 0 to destroy.
digitalocean_kubernetes_cluster.kubernetes_cluster: Creating...
digitalocean_kubernetes_cluster.kubernetes_cluster: Still creating... [10s elapsed]
digitalocean_kubernetes_cluster.kubernetes_cluster: Still creating... [20s elapsed]
...
digitalocean_kubernetes_cluster.kubernetes_cluster: Still creating... [6m40s elapsed]
digitalocean_kubernetes_cluster.kubernetes_cluster: Creation complete after 6m47s [id=88b84043-6683-4342-b7e2-2d68a2a691d9]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
```

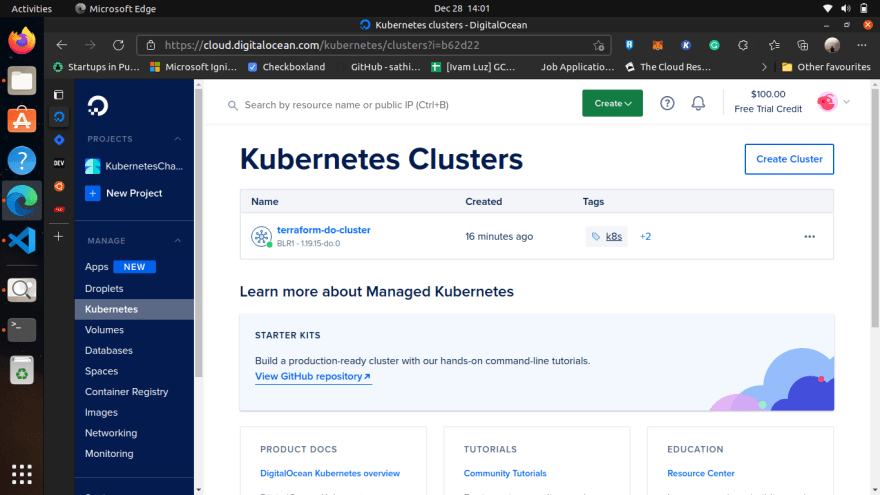

With the last command, we will be able to see the cluster forming on our DigitalOcean Cloud Console:

Connect to the Kubernetes Cluster

To connect to the newly created cluster we need its kubeconfig file. First, store the cluster ID (the id printed at the end of terraform apply) in an environment variable:

```shell
export CLUSTER_ID=CLUSTERID
```

Download the cluster's configuration file:

```shell
curl -X GET -H "Content-Type: application/json" -H "Authorization: Bearer $DIGITALOCEAN_TOKEN" "https://api.digitalocean.com/v2/kubernetes/clusters/$CLUSTER_ID/kubeconfig" > config
```

Now set the KUBECONFIG environment variable to point to the downloaded config file:

```shell
export KUBECONFIG=$(pwd)/config
```
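The download step can be wrapped in one function. This is a sketch with a hypothetical name, `fetch_kubeconfig`; it uses the same API endpoint as the curl command above, writes to a temporary file first so a failed request doesn't leave an error page in place of the config, and keeps the file private because it contains cluster credentials. If you use doctl, `doctl kubernetes cluster kubeconfig save <cluster>` is an alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: download a DOKS cluster's kubeconfig from the DigitalOcean API.
# Usage: fetch_kubeconfig <cluster-id> [output-file]   (default output file: config)
fetch_kubeconfig() {
  local cluster_id="$1" out="${2:-config}" tmp
  if [ -z "$cluster_id" ] || [ -z "${DIGITALOCEAN_TOKEN:-}" ]; then
    echo "usage: fetch_kubeconfig <cluster-id> [file] (needs DIGITALOCEAN_TOKEN)" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # -f: fail on HTTP errors instead of saving the error body as the config
  if curl -fsS -H "Authorization: Bearer ${DIGITALOCEAN_TOKEN}" \
      "https://api.digitalocean.com/v2/kubernetes/clusters/${cluster_id}/kubeconfig" > "$tmp"; then
    # Owner-only permissions, since the file holds cluster credentials
    chmod 600 "$tmp" && mv "$tmp" "$out"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

Usage: `fetch_kubeconfig "$CLUSTER_ID" && export KUBECONFIG=$(pwd)/config`.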

Hooray! We've got our shiny new Kubernetes cluster running in our DigitalOcean account. Before deploying a registry with TLS, check that kubectl can reach the cluster:

```console
$ kubectl cluster-info
Kubernetes control plane is running at https://88b84043-6683-4342-b7e2-2d68a2a691d9.k8s.ondigitalocean.com
CoreDNS is running at https://88b84043-6683-4342-b7e2-2d68a2a691d9.k8s.ondigitalocean.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
```
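`kubectl cluster-info` only shows that the control plane answers. If you also want both worker nodes to have joined before installing Trow, `kubectl wait` can block until every node reports Ready. A small sketch; the helper name `wait_for_nodes` and the default 5-minute timeout are my own choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until every node reports the Ready condition,
# or fail once the timeout expires; then list the nodes.
# Usage: wait_for_nodes [timeout]   (default: 5m)
wait_for_nodes() {
  local timeout="${1:-5m}"
  kubectl wait --for=condition=Ready nodes --all --timeout="$timeout" &&
    kubectl get nodes -o wide
}
```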

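Clone the Trow project repository (the https://github.com/ContainerSolutions/trow.git URL works too if you don't have an SSH key registered with GitHub):

```shell
git clone git@github.com:ContainerSolutions/trow.git
```

Change into the quick-install folder of the cloned repository:

```shell
cd trow/quick-install
```

Install Trow on your cluster:

```shell
./install.sh
```

To check that it was installed properly:

```shell
kubectl get pod -A
```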
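These steps can be collected in a small function. This sketch makes a few assumptions: it clones over HTTPS, skips the clone when a trow directory already exists, and passes an optional namespace to install.sh, which the installer's own help text says it accepts (default kube-public). The name `install_trow` is hypothetical, and the installer still asks its interactive questions.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around Trow's quick installer.
# Usage: install_trow [namespace]   (defaults to kube-public, like install.sh)
install_trow() {
  local ns="${1:-kube-public}"
  if [ ! -d trow ]; then
    git clone https://github.com/ContainerSolutions/trow.git || return 1
  fi
  # Run in a subshell so the caller's working directory is unchanged.
  ( cd trow/quick-install && ./install.sh "$ns" )
}
```

Running the installer from its own folder avoids the "No such file or directory" error shown in the log below.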

Here is what those commands looked like for me. Note that install.sh lives in the quick-install folder, not in the repository root:

```console
~/do-kubernetes-challenge-2021$ git clone git@github.com:ContainerSolutions/trow.git
Cloning into 'trow'...
Warning: Permanently added the ECDSA host key for IP address '13.234.176.102' to the list of known hosts.
remote: Enumerating objects: 7063, done.
remote: Counting objects: 100% (923/923), done.
remote: Compressing objects: 100% (492/492), done.
remote: Total 7063 (delta 554), reused 721 (delta 427), pack-reused 6140
Receiving objects: 100% (7063/7063), 2.89 MiB | 1.97 MiB/s, done.
Resolving deltas: 100% (4469/4469), done.
~/do-kubernetes-challenge-2021$ cd trow
~/do-kubernetes-challenge-2021/trow$ ./install.sh
bash: ./install.sh: No such file or directory
~/do-kubernetes-challenge-2021/trow$ cd quick-install
~/do-kubernetes-challenge-2021/trow/quick-install$ ./install.sh
Trow AutoInstaller for Kubernetes
=================================

This installer assumes kubectl is configured to point to the cluster you want to
install Trow on and that your user has cluster-admin rights.

This installer will perform the following steps:

- Create a ServiceAccount and associated Roles for Trow
- Create a Kubernetes Service and Deployment
- Request and sign a TLS certificate for Trow from the cluster CA
- Copy the public certificate to all nodes in the cluster
- Copy the public certificate to this machine (optional)
- Register a ValidatingAdmissionWebhook (optional)

If you're running on GKE, you may first need to give your user cluster-admin
rights:

$ kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value core/account)

Also make sure port 31000 is open on the firewall so clients can connect.
If you're running on the Google cloud, the following should work:

$ gcloud compute firewall-rules create trow --allow tcp:31000 --project <project name>

This script will install Trow to the kube-public namespace.
To choose a different namespace run:

$ ./install.sh <my-namespace>

Do you want to continue? (y/n) Y
Installing Trow in namespace: kube-public
Starting Kubernetes Resources
serviceaccount/trow created
role.rbac.authorization.k8s.io/trow created
clusterrole.rbac.authorization.k8s.io/trow created
rolebinding.rbac.authorization.k8s.io/trow created
clusterrolebinding.rbac.authorization.k8s.io/trow created
deployment.apps/trow-deploy created
service/trow created
Adding entry to /etc/hosts for trow.kube-public
Exposing registry via /etc/hosts
This requires sudo privileges
312
253
206.189.137.94 trow.kube-public # added for trow registry
Successfully configured localhost
Do you want to configure Trow as a validation webhook (NB this will stop external images from being deployed to the cluster)? (y/n) y
Setting up trow as a validating webhook
WARNING: This will limit what images can run in your cluster
By default, only images in Trow and official Kubernetes images will be
allowed
validatingwebhookconfiguration.admissionregistration.k8s.io/trow-validator created
```
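Before pushing, it's worth confirming the registry came up. This sketch uses names taken from the installer output above (deployment trow-deploy, namespace kube-public, host trow.kube-public); the helper `check_trow` itself is hypothetical, and for other namespaces it assumes the host is named trow.<namespace>.

```shell
#!/usr/bin/env bash
# Hypothetical check: is the Trow deployment rolled out, and does the hosts
# file contain the trow.<namespace> entry the installer added?
# Usage: check_trow [namespace] [hosts-file]
check_trow() {
  local ns="${1:-kube-public}" hosts="${2:-/etc/hosts}"
  # Waits until the Deployment's rollout completes (or the timeout expires).
  kubectl rollout status deployment/trow-deploy -n "$ns" --timeout=120s || return 1
  # Assumes the installer names the host trow.<namespace>, as it did for kube-public.
  if ! grep -q "trow\.${ns}" "$hosts"; then
    echo "no trow.${ns} entry in ${hosts}" >&2
    return 1
  fi
}
```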

The script has pointed the domain trow.kube-public at your cluster through the /etc/hosts entry shown above. We can now pull, tag, and push a local image with the following three commands:

```console
$ docker pull nginx:latest
latest: Pulling from library/nginx
Digest: sha256:366e9f1ddebdb844044c2fafd13b75271a9f620819370f8971220c2b330a9254
Status: Image is up to date for nginx:latest
docker.io/library/nginx:latest
$ docker tag nginx:latest trow.kube-public:31000/nginx:test
$ docker push trow.kube-public:31000/nginx:test
The push refers to repository [trow.kube-public:31000/nginx]
51a4ac025eb4: Pushed
4ded77d16e76: Pushed
32359d2cd6cd: Pushed
4270b63061e5: Pushed
5f5f780b24de: Pushed
2edcec3590a4: Pushed
test: digest: sha256:8ba07d07b05bf4a026082b484ed143ab335cbe5295e13f567cde3440718dcd93 size: 1570
```
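The pull/tag/push sequence generalizes to other images. A sketch with hypothetical names (`trow_ref`, `push_to_trow`, and the `TROW_REGISTRY` variable) that derives the Trow reference from the host and port used above, trow.kube-public:31000; it expects a plain repository name such as nginx.

```shell
#!/usr/bin/env bash
# Registry host:port used by the quick installer; override via TROW_REGISTRY.
TROW_REGISTRY="${TROW_REGISTRY:-trow.kube-public:31000}"

# Prints the Trow reference for an image: trow_ref <repo> <tag>
trow_ref() {
  printf '%s/%s:%s\n' "$TROW_REGISTRY" "$1" "$2"
}

# Pulls <repo>:<source-tag>, retags it for Trow, and pushes it.
# Usage: push_to_trow <repo> [source-tag] [target-tag]   (defaults: latest, test)
push_to_trow() {
  local repo="$1" src_tag="${2:-latest}" dst_tag="${3:-test}" target
  target=$(trow_ref "$repo" "$dst_tag")
  docker pull "${repo}:${src_tag}" &&
    docker tag "${repo}:${src_tag}" "$target" &&
    docker push "$target"
}
```

Usage: `push_to_trow nginx latest test` repeats the three commands above.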

And finally, let's run it inside Kubernetes:

```console
$ kubectl run trow-test --image=trow.kube-public:31000/nginx:test
pod/trow-test created
$ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
trow-test   1/1     Running   0          39s
```
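To double-check that the pod really runs the image from Trow, you can read the image back from the pod spec. A sketch with hypothetical helper names; the default registry prefix is the trow.kube-public:31000 host used above.

```shell
#!/usr/bin/env bash
# Prints the image of the first container in a pod (default pod: trow-test).
pod_image() {
  kubectl get pod "${1:-trow-test}" -o jsonpath='{.spec.containers[0].image}'
}

# Succeeds only if the pod's image comes from the given registry prefix.
# Usage: pod_uses_trow [pod] [registry]
pod_uses_trow() {
  local image
  image=$(pod_image "${1:-trow-test}") || return 1
  case "$image" in
    "${2:-trow.kube-public:31000}"/*) return 0 ;;
    *) echo "pod image is ${image}" >&2; return 1 ;;
  esac
}
```

Since we enabled the validating webhook, the installer's warning suggests that an image pulled straight from Docker Hub, e.g. `kubectl run webhook-test --image=nginx:latest`, should now be rejected at admission, which is a quick way to confirm the webhook is active. Clean up afterwards with `kubectl delete pod trow-test`.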

Good Lord! I have done it!!

I hope you could follow along easily and, after reading this post, have successfully deployed Trow onto your cluster and pushed the Nginx image into the registry!
