This is an article in a story format, about the design of the space segment software for the FUNcube missions, from FUNcube-1 (AO-73) in 2013 to the present day with JY1SAT (JO-97, launched 3 Dec 2018 - finally!).

[Edit to add] JY1SAT signals have been received in Australia: we have another worker! http://data.amsat-uk.org/ui/jy1sat-fm

First, it takes a number of widely skilled, experienced and professional people to build spacecraft software; I take very little credit for the working satellites :)

The Dev Team

The FUNcube team, in particular for the space segment software:

- Duncan Hills (M6UCK) - the main man: seasoned hacker, relentless worker, practicality expert, LED enthusiast.

- Wouter Weggelaar (PA3WEG) - the ringer: by day a mild mannered space technology company employee, by night: AMSAT-NL guy!

- Gerard Aalbers - the professional: eagle eyed reviewer, standards enforcer, bug hunter, puzzle solver and unflappable dude.

- Howard Long (G6LVB) - the SDR wizard: algorithm creator, Microchip PIC abuser, RF engineer, fancy 'scope owner.

- Jason Flynn (G7OCD) - the hardware guru: PCB layout wizard, Freescale blagger, KISS guy, watchdog layering fan, Verilog god.

- Phil Ashby (M6IPX) - me, the troublemaker: daft ideas, prototypes, fail fast breakages, repository guardian, loudmouth.

TL;DR

When designing, creating & testing software for a satellite, apply a few rules to keep your sanity:

- Keep It Simple Stupid (KISS),

- Use your language wisely: we tried to apply the NASA JPL coding standard for C,

- Trace everything like a logic analyser (esp. from space),

- Build in remote debug features (you are gonna need 'em),

- Create one tested feature at a time (agile, behaviour-driven development),

- Use idempotent, absolute commands, no toggles,

- If you think it's done, test it again (too many external variables),

- Use the LEDs Luke, because flashing lights are fun!

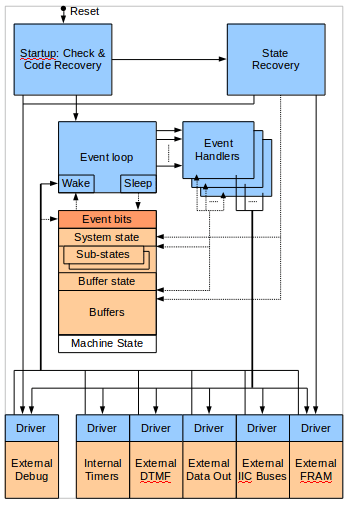

The Obligatory Diagram

This is almost how it works...

A Little Background

More is available at The FUNcube Website.

In 2009 AMSAT-UK & AMSAT-NL started development of a new satellite. Our colleague Jason, a member of AMSAT-UK, volunteered to design and develop the Command, Control & Telemetry (CCT) board, as he had previous experience with other satellite design work. The CCT provides the 'brains' that allow operators on the ground to control the satellite, and collects lots of telemetry from on-board sensors for educational purposes. The CCT had to meet a number of objectives:

- The functional specification :)

- Low power (under 100mW), we wanted all the juice for the transmitter.

- Fail safe: part of the Cubesat regulations to protect other systems from interference; we can't 'go rogue'.

- Persistent storage: to meet the educational brief we needed to have historical data available at all times.

- Resilience, specifically in the face of failing peripheral devices & the battery wearing out.

- Low maintenance, needs to keep doing what it was last told to without lots of commanding from the ground.

With hardware design underway, Jason asked Duncan & me to assist with the software, as AMSAT-UK / AMSAT-NL were unsure of the available development time (launch schedules are tricky things for Cubesats) and wanted to ensure that the CCT was adequately tested before launch.

So how does one respond when asked 'would you like to write code to go into space?' - hell yeah!

So What Are We Programming?

The CCT hardware consists of a two layer design (did I mention Jason likes layers?):

- A programmable logic chip (CPLD) that has just enough logic to: decode a simple command protocol (sorry, we can't discuss details!) enabling other parts to be turned on/off; and provide a digital to analogue converter (DAC) for modulating the downlink radio. This is a Xilinx CoolRunner-II.

- A micro-controller (MCU) to: collect telemetry, encode it into an audio signal for the DAC / modulator; decode and implement more complex commands that provide the majority of the functional specification. This is a Freescale (now NXP) HCS08 series 8-bit device, with 128k of banked ROM, 6k of RAM and a 16-bit address bus. It has a maximum clock speed of 40MHz.

These parts were chosen for appropriate I/O ability (such as I2C, SPI), low power (eg: no external oscillators), previous design experience with them and available tooling (ie: CodeWarrior Free Edition).

Where Do You Start?

Well, Jason concentrated on the hardware & CPLD, his area of expertise and a critical part of the fail safe design that needed to be proven early on. Much as I'd love to talk you through the Verilog, it's not where my skills are, so let's assume that all worked just fine, although the DAC was challenging :)

Duncan & I took on the MCU:

- Working with Jason to define an interface protocol with the CPLD, including a watchdog mechanism (did I mention Jason likes watchdogs?), received command transfers and DAC sample output.

- Working with Howard to embed the data encoding and forward error correction (FEC) software: we use a variant of the scheme created by Phil Karn (KA9Q), James Miller (G3RUH) et al. for an earlier AMSAT spacecraft, Oscar-40, commonly known as AO-40 FEC.

- Working with Gerard to ensure compliance with Cubesat & coding standards, safety & reliability in operation.

- Working with Wouter to ensure correct radio modulation and reception (Wouter is also in the RF design team).

- Communication with a number of inter-integrated circuit (I2C) devices for telemetry data and other purposes.

- Managing a persistent ferro-electric random access memory device (FRAM) over serial peripheral interface (SPI).

- Sampling a number of local analogue input pins via the built-in analogue to digital convertor (ADC) for yet more telemetry.

- Controlling the deployment of the spacecraft: the remove before flight switch, the launch delay and the aerials.

Did I mention that our satellite power supply has a watchdog that we need to reset over I2C or it turns everything off and on again? Can't have too many watchdogs it seems...

Can We Clock It?

So we have a tiny MCU by modern expectations, not much more than a fast 6805 or Z80: can it do all the things we need? We can reduce the clock frequency (yes, underclocking!) to save power, but how fast do we need to go?

We choose to work through the actions required, the timing requirements for inputs and outputs and the processing load (cycle counts), to find out:

- Actions are a regular affair: data collection schedules for each sensor (1/5/60 seconds), packing that data into frames and encoding them for transmission, something that a state machine & sequential code could handle just fine given a clock.

- Timing for inputs is non-critical: commands are asynchronous with response times in seconds, telemetry collection is on a schedule where repeatability matters more than absolute sample timing.

- Timing for outputs turns out to be more stringent, as we are generating audio samples at 9600Hz that have to be accurate to a few microseconds, and telemetry frames need to be sent continuously.

- Processing load (measured in CPU cycles - determined by prototyping and clock counting), is minimal during data collection, however there is significant work required to apply the FEC which mustn't disrupt other activities.
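The data collection schedule in the first bullet maps naturally onto a table-driven state machine. The sketch below is a minimal illustration, not the CCT code: it assumes the 100 millisecond timing event described later in the article, and the names `schedule_entry`, `run_schedule` and the `collect_*` functions are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical sketch: sensor groups collected every 1, 5 or 60 seconds,
 * driven by an assumed 100 ms tick (10 ticks per second). */
#define TICKS_PER_SECOND 10u
#define TICKS_PER_MINUTE (60u * TICKS_PER_SECOND)

typedef void (*collect_fn)(void);

typedef struct {
    uint16_t period_ticks;   /* how often this group is collected */
    collect_fn collect;      /* hypothetical collection routine */
} schedule_entry;

/* Run counters so the example can show how often each group fired. */
static unsigned fast_runs, medium_runs, slow_runs;

static void collect_fast(void)   { fast_runs++; }    /* every 1 s  */
static void collect_medium(void) { medium_runs++; }  /* every 5 s  */
static void collect_slow(void)   { slow_runs++; }    /* every 60 s */

static const schedule_entry schedule[] = {
    { 1u * TICKS_PER_SECOND,  collect_fast },
    { 5u * TICKS_PER_SECOND,  collect_medium },
    { 60u * TICKS_PER_SECOND, collect_slow },
};

/* Called once per tick; tick counts 0..599 and then wraps, since one
 * minute is a common multiple of every period in the table. */
void run_schedule(uint16_t tick)
{
    assert(tick < TICKS_PER_MINUTE);
    for (unsigned i = 0; i < sizeof schedule / sizeof schedule[0]; i++) {
        if (tick % schedule[i].period_ticks == 0u) {
            schedule[i].collect();
        }
    }
}
```

Because every period divides one minute, a single wrapping counter is enough and the collection pattern repeats exactly, which suits the requirement that repeatability matters more than absolute sample timing.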

Once we have an idea of the processing load, we can choose a clock frequency that gives us good headroom while keeping the power levels down. We end up at 14MHz, with an estimated power consumption of 50mW and twice as many cycles available as we expect to use.

Our MCU also has a specific power saving WAIT instruction that suspends the CPU clock until an interrupt wakes it up, so by designing out busy waits / polling we should save more power - can we clock it? Yes we can.
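The headroom arithmetic can be checked with a few lines of C. This is a rough sketch using the figures quoted above; treating 14MHz as the rate at which instruction cycles are consumed is an assumption (the HCS08 times instructions from its bus clock, which may differ from the headline clock figure), and the helper names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Figures quoted in the article. Using 14 MHz directly as the instruction
 * cycle rate is an assumption made for this estimate. */
#define CLOCK_HZ        14000000UL
#define SAMPLE_RATE_HZ  9600UL      /* audio samples sent to the DAC */
#define TICKS_PER_S     10UL        /* 100 ms timing events */
#define FRAME_SECONDS   5UL         /* one telemetry frame */

/* Whole cycles available between consecutive events at a given rate. */
static uint32_t cycles_between(uint32_t clock_hz, uint32_t events_per_s)
{
    assert(events_per_s != 0u);
    return clock_hz / events_per_s;   /* integer division rounds down */
}

/* True if the expected load uses no more than half the budget, i.e. the
 * "twice as many cycles as we expect to use" target. */
static int has_2x_headroom(uint32_t budget, uint32_t expected_use)
{
    return expected_use <= budget / 2u;
}
```

Under these assumptions the audio interrupt recurs about every 1,458 cycles, 48,000 times per 5 second frame, so the interrupt routine has to stay short whatever the main loop is doing; each 100ms event leaves at most 1.4 million cycles for the main loop, before subtracting time spent in the audio interrupt.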

Will an OS Help?

An internet search for MCU operating systems will find libraries such as FreeRTOS, CMX-TINY or uC/OS-II. With the RAM we have, these can typically squeeze in a task manager with pre-emption, message queues and timers.

Do we need these features? Do we want to be debugging 3rd party RTOS code?

Applying the KISS principle: we choose to avoid complex, non-deterministic things like memory managers, task pre-emption / scheduling, priority queues, etc. as we feel our challenges can be largely met by a state machine and some careful timing. In addition we still have to provide all the device drivers ourselves, so a 3rd party OS isn't really helping much. We choose to work with the bare MCU and the device libraries provided by the tooling (thanks Metrowerks/Freescale/NXP!)

Event Loops & Buffers

The only way we can meet the real time audio output timing is under interrupt from a hardware timer. With this interrupt in place, we have a means to 'wake up' the MCU from the WAIT instruction and feed it timing events (in practice a 100 millisecond pulse) via a bit in a shared variable. This leads to a design where the main loop is a single threaded event processor, dispatching timing or other input data events that are delivered under interrupt to avoid busy waiting. While processing events we also reset the various watchdogs, which allows them to detect / correct any failure in the wake up mechanism.

See the diagram above :)
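A minimal host-side sketch of this pattern: an interrupt handler sets a bit in a shared `volatile` flags variable, and the main loop sleeps until woken, then dispatches whatever events are pending and kicks the watchdogs. All names here are hypothetical. `wait_for_interrupt()` stands in for the HCS08 `WAIT` instruction and simply calls the simulated timer ISR so the code runs on a PC; raising the 100ms event from the audio timer is also an assumption.

```c
#include <assert.h>
#include <stdint.h>

/* Event bits shared between interrupt handlers and the main loop. */
#define EVT_TICK_100MS  0x01u
#define EVT_COMMAND     0x02u

static volatile uint8_t g_events;   /* set in ISRs, consumed in main loop */

static unsigned ticks_handled;
static unsigned watchdog_kicks;

/* Simulated timer ISR. On target (by assumption) it would also output the
 * next DAC sample and raise the tick bit once every 960 samples,
 * i.e. every 100 ms at 9600 Hz. */
static void timer_isr(void)
{
    g_events |= EVT_TICK_100MS;
}

/* Host stand-in for WAIT: "sleep" until an interrupt, simulated here by
 * calling the ISR directly. On target the flag check and the sleep need
 * care so that an interrupt arriving in between is not missed. */
static void wait_for_interrupt(void)
{
    timer_isr();
}

/* Read and clear pending events. On target, interrupts must be masked
 * around this so a bit set by an ISR mid-update is not lost. */
static uint8_t take_events(void)
{
    uint8_t ev = g_events;
    g_events = 0u;
    return ev;
}

static void kick_watchdogs(void) { watchdog_kicks++; }

/* One pass of the single threaded event processor. */
void event_loop_once(void)
{
    uint8_t ev = take_events();
    if (ev == 0u) {
        wait_for_interrupt();   /* no busy waiting: sleep until woken */
        return;
    }
    if (ev & EVT_TICK_100MS) {
        ticks_handled++;        /* run schedule, encode an FEC slice, ... */
    }
    if (ev & EVT_COMMAND) {
        /* decode and apply a ground command */
    }
    kick_watchdogs();           /* only reached while the loop is alive */
}
```

Because the watchdogs are only kicked after an event has actually been processed, a failure of the wake-up path stops the kicks and lets the watchdogs restart the system.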

Our other challenges are continuous telemetry and fitting in enough cycles to apply FEC. These can be met by double buffering the telemetry data: we send a processed frame as audio output under interrupt while encoding the next. This adds a delay to the telemetry (one frame, 5 seconds) but allows us to spread out the FEC processing over a frame period, slotting in bursts of activity on a predictable schedule.

Double buffering consumes a big chunk of our RAM (each frame buffer is 650 bytes), and on numerous occasions we have to rearrange variables or refactor the code to remove them so everything fits back into 6k!
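The double buffering can be sketched as a pair of 650 byte frame buffers that swap roles at each frame boundary, with the encoding work split into equal slices run on each 100ms tick (a 5 second frame is 50 ticks, so 13 bytes per tick). This is a hypothetical illustration: `encode_slice()` just copies bytes where the real code applies AO-40 style FEC, and the slice scheme and all names are assumptions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define FRAME_BYTES      650u   /* per-buffer size quoted in the article */
#define TICKS_PER_FRAME  50u    /* 5 s frame / 100 ms tick */
#define SLICE_BYTES      (FRAME_BYTES / TICKS_PER_FRAME)   /* 13 bytes */

_Static_assert(FRAME_BYTES % TICKS_PER_FRAME == 0, "slices must tile a frame");

/* Two frame buffers: one being transmitted, the other being encoded. */
static uint8_t frame_buf[2][FRAME_BYTES];
_Static_assert(sizeof frame_buf <= 6u * 1024u, "must fit in 6k of RAM");

static volatile uint8_t tx_index;   /* buffer the output ISR reads from */
static uint16_t encode_pos;         /* progress through the other buffer */

/* Hypothetical stand-in for one slice of FEC encoding. */
static void encode_slice(uint8_t *dst, const uint8_t *src, uint16_t len)
{
    memcpy(dst, src, len);
}

/* Called once per tick from the main loop; raw points at the complete
 * unencoded frame currently being prepared. */
void encode_tick(const uint8_t *raw)
{
    assert(encode_pos + SLICE_BYTES <= FRAME_BYTES);
    uint8_t *enc = frame_buf[tx_index ^ 1u];
    encode_slice(enc + encode_pos, raw + encode_pos, SLICE_BYTES);
    encode_pos = (uint16_t)(encode_pos + SLICE_BYTES);
}

/* Called at the frame boundary (by the output ISR in a real system):
 * the freshly encoded buffer becomes the one to transmit. */
int swap_at_frame_end(void)
{
    if (encode_pos < FRAME_BYTES) {
        return 0;                    /* encoding not finished: no swap */
    }
    tx_index ^= 1u;
    encode_pos = 0u;
    return 1;
}
```

Equal slices keep the per-tick FEC load predictable, and the two buffers together cost 1,300 of the roughly 6k bytes of RAM, which shows why they dominate the memory budget.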

Persistence, Resilience & Low-Maintenance

All the -ences (almost). Did I mention that we like watchdogs? Our MCU has an internal reset watchdog that we enable early on, and have to clear every 125ms. This gives us confidence that should something go very wrong and we deadlock or spin while processing an event, we get restarted. We also use it to detect bad devices during I/O: we store a device ID just before collecting from that device, and on restart we check whether the watchdog caused the reset (the MCU usefully tells us it had to restart). If it did and a device ID is still stored, that device is excluded from subsequent execution (ground commands can reset this state to retry failed devices).
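The bad-device detection can be sketched as a breadcrumb plus a bitmask. Everything here is a hypothetical illustration: on a real target `current_device` would have to live in RAM that is not cleared at reset, `reset_was_watchdog` would come from the MCU's reset status register, and the 16-device limit is arbitrary.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NO_DEVICE   0xFFu
#define MAX_DEVICES 16u

/* Breadcrumb naming the device being accessed. On target this needs a
 * no-init RAM section (hypothetical); here it is an ordinary static. */
static uint8_t current_device = NO_DEVICE;

/* Bit n set: device n is skipped until the ground clears it. */
static uint16_t excluded_devices;

/* Call once at startup, before normal collection resumes. */
void check_reset_cause(bool reset_was_watchdog)
{
    if (reset_was_watchdog && current_device < MAX_DEVICES) {
        /* We hung while talking to this device: stop using it. */
        excluded_devices |= (uint16_t)(1u << current_device);
    }
    current_device = NO_DEVICE;
}

/* Returns false if the device is excluded; otherwise leaves a breadcrumb. */
bool begin_device_io(uint8_t device_id)
{
    assert(device_id < MAX_DEVICES);
    if (excluded_devices & (1u << device_id)) {
        return false;
    }
    current_device = device_id;
    return true;
}

void end_device_io(void)
{
    current_device = NO_DEVICE;   /* access completed normally */
}

/* Ground command: forget past failures and retry every device. */
void clear_excluded_devices(void)
{
    excluded_devices = 0u;
}
```

A clean access always clears the breadcrumb, so only a watchdog reset that happens mid-access can mark a device as bad.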

We also have little faith in RAM keeping its value during power glitches (brown-outs), which become more likely as the battery loses capacity over time, so all important data structures include a cyclic redundancy check (CRC) that is verified during restarts. If that check fails we can take appropriate action, either resetting to defaults or obtaining a backup from persistent FRAM. We CRC the FRAM contents too - there are cosmic rays in space that can mess up storage!

For that low-maintenance requirement, we have FRAM storage for all system configuration structures and restore these on restarts as above.
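The restart logic for configuration data might look like the sketch below. The article does not say which CRC is used: CRC-16/CCITT-FALSE (polynomial 0x1021, initial value 0xFFFF) is an assumption here, as are `config_t`, its fields and the default values. The FRAM copy is simulated with a plain struct so the example runs on a PC.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* CRC-16/CCITT-FALSE: poly 0x1021, init 0xFFFF, MSB first, no final XOR. */
uint16_t crc16_ccitt(const uint8_t *data, size_t len)
{
    uint16_t crc = 0xFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)(data[i] << 8);
        for (int bit = 0; bit < 8; bit++) {
            crc = (crc & 0x8000u) ? (uint16_t)((crc << 1) ^ 0x1021u)
                                  : (uint16_t)(crc << 1);
        }
    }
    return crc;
}

/* Hypothetical configuration record; the CRC covers the fields before it. */
typedef struct {
    uint8_t  transponder_on;
    uint8_t  tx_power_level;
    uint16_t crc;
} config_t;

static config_t ram_config;    /* working copy in RAM */
static config_t fram_config;   /* stands in for the FRAM backup */
static const config_t default_config = { 0u, 1u, 0u };  /* hypothetical */

static int config_valid(const config_t *c)
{
    return crc16_ccitt((const uint8_t *)c, offsetof(config_t, crc)) == c->crc;
}

static void config_seal(config_t *c)
{
    c->crc = crc16_ccitt((const uint8_t *)c, offsetof(config_t, crc));
}

/* Restart path: keep RAM if intact, else the FRAM backup, else defaults.
 * Returns 0, 1 or 2 to report which source was used. */
int restore_config(void)
{
    if (config_valid(&ram_config)) {
        return 0;
    }
    if (config_valid(&fram_config)) {
        ram_config = fram_config;
        return 1;
    }
    ram_config = default_config;
    config_seal(&ram_config);
    fram_config = ram_config;   /* repair the backup as well */
    return 2;
}
```

Checking the FRAM copy's CRC as well as the RAM copy's means a corrupted backup falls through to known defaults instead of being trusted blindly.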

Debugging, Tracing and Avoiding Toggles

It's really easy to debug an embedded device when it's on the desk in front of you: attach the programming pod and hit F5 in your IDE. Because of this, our initial plan to use a serial port as a debug console was scrapped; it wasn't worth it when there are better tools to hand. However, once working with a built satellite that cannot have the pod attached, we find a stack of LEDs on spare I/O pins very useful: they can show critical state bits or important transitions (like starting the event loop) and reveal timing heartbeats.

Once out of pod or visible range (aka in space), we need a way to examine internal state, manipulate peripherals and trace execution, so we include a number of debug commands that allow memory block dumps and I2C bus transactions, and can hard reset the CCT.

Our favorite feature in this area is the execution trace - based on hardware logic analyser design, we write short messages (2-4 chars) into a trace buffer during execution, and include this buffer in regular telemetry output, always from the most recent message and going backwards in time. This allows us to see things like our startup logic flow at each reset or identify unusual data inputs, giving us opportunities to reproduce unusual conditions and debug on the local engineering model that's still within reach!
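A trace buffer of this kind is essentially a ring buffer of short tags, read out newest first. A minimal sketch, assuming fixed 4-byte entries (shorter tags padded with NULs, so entries are not C strings), a hypothetical depth of 16 entries and hypothetical names `trace()` and `trace_dump()`; the real buffer size and telemetry layout are not given in the article.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define TRACE_DEPTH 16u   /* hypothetical number of entries kept */
#define TAG_LEN     4u    /* 2-4 character messages, NUL padded */

static char trace_buf[TRACE_DEPTH][TAG_LEN];
static uint8_t trace_head;   /* next slot to write (oldest entry) */

/* Record a short tag, overwriting the oldest entry when full. */
void trace(const char *tag)
{
    size_t n = strlen(tag);
    assert(n >= 1u && n <= TAG_LEN);
    memset(trace_buf[trace_head], 0, TAG_LEN);
    memcpy(trace_buf[trace_head], tag, n);
    trace_head = (uint8_t)((trace_head + 1u) % TRACE_DEPTH);
}

/* Copy the buffer into a telemetry area, most recent entry first. */
void trace_dump(char out[TRACE_DEPTH][TAG_LEN])
{
    for (unsigned i = 0; i < TRACE_DEPTH; i++) {
        unsigned idx = (trace_head + TRACE_DEPTH - 1u - i) % TRACE_DEPTH;
        memcpy(out[i], trace_buf[idx], TAG_LEN);
    }
}
```

For example, tracing `"RST"`, `"WD"` and then `"LOOP"` at startup dumps `LOOP`, `WD`, `RST` in that order, followed by empty slots, which is the "most recent first, backwards in time" view described above.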

From remote debugging we learnt never to have 'toggle' commands: they are unsafe and make it difficult to determine whether the command worked, because you have to read back the old and new states to compare them. It is much better to always set a specific state and have a command acknowledgement scheme (we use a command counter). This makes commands idempotent, allowing blind commanding by resending the same one multiple times (aka shouting at your satellite!), which is very useful if that command is 'deploy the aerials'...
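The difference between a toggle and an absolute command, plus a command counter used as an acknowledgement, can be sketched as follows. The command layout (`cmd_t` with a target id and a desired on/off state) and all names are hypothetical; the article does not describe the real command format.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NUM_TARGETS 8u

typedef struct {
    uint8_t target;   /* which subsystem, e.g. 0 = aerial deployment */
    bool    enable;   /* absolute desired state, never "flip it" */
} cmd_t;

static bool target_state[NUM_TARGETS];
static uint8_t command_counter;   /* reported in telemetry as an ack */

/* Applying the same command once or many times gives the same state;
 * only the counter changes, showing the ground that commands arrived. */
void apply_command(const cmd_t *cmd)
{
    if (cmd->target >= NUM_TARGETS) {
        return;                   /* ignore malformed commands */
    }
    target_state[cmd->target] = cmd->enable;
    command_counter++;            /* wraps at 255, fine for an ack */
}
```

With a toggle, the final state would depend on whether an odd or even number of copies got through; with an absolute command the state is identical after one or three copies, and the counter in telemetry confirms that at least one arrived.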

FIN

Comments

Awesome project, Phil.

A few questions if you don't mind...

You mentioned the ability to debug from telemetry and send commands to do stuff like turn off misbehaving peripherals. Do you also have the ability to flash new code to the sats while they are in space (to fix a particularly nasty bug, for example)?

What did you (the builders of the cubesats) see as the biggest risks to mission success? How did you prioritize and mitigate those risks?

Any idea how many hours went into each sat from start to finish?

Is there anything you'd change with the experience you gained and with the technology available if you were building the same functionality starting today? Is anything easier about building a cubesat in 2020?

So: reflashing was the one thing we couldn't do from the ground. The uplink bandwidth (5 baud) makes it impossible to push significant amounts of code (the ROM image was ~35k for the first deployment) during a pass while the satellite is within radio range, and flash memory has an unfortunate sensitivity to radiation when the programming voltage is applied: we calculated that we would end up with a bit corruption approximately every 1000 bits. For this reason we also used ferro-electric storage (FRAM) for data.
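The reflashing numbers can be roughed out as below, assuming one bit per baud, no protocol or error-correction overhead, and treating ~35k as 35,000 bytes. These are simplifications, so a real upload would take longer.

$$
t \approx \frac{35\,000 \times 8\ \text{bits}}{5\ \text{bit/s}} = 56\,000\ \text{s} \approx 15.6\ \text{h},
\qquad
N_{\text{err}} \approx \frac{280\,000\ \text{bits}}{1000} = 280\ \text{corrupted bits}
$$

So even in this best case the upload needs many hours of uplink spread over multiple passes, and the resulting image would still be expected to contain a few hundred bad bits.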

Risk management: launching a brick was the biggest risk (total mission failure) - deployment is the most complex part of the process (actual moving parts, battery condition after months of storage, interaction with launching pod, etc.) and there is significant empirical evidence that this is where many other cubesats have failed. After that, ongoing ability to provide the primary mission function: telemetry for schools, then secondary function: the ham radio transponder. We also knew that this was not our only chance, additional launches were coming along, which provided mitigation through learning cycles too.

Technically, we didn't have a formal risk management process (the joy of amateur work), and cubesats are specifically aimed at higher risk experimental stuff through reduced costs. We did have a lot of testing from experienced colleagues at our launch partner company which provided a good deal of confidence (well placed I think - we have never had a catastrophic problem so far).

Hours - wow, in the low thousands I think over the 4 years of sporadic working and several people, I suspect the project managers know better than I :)

What would I change and use of modern tech? It's ongoing right now with the creation of our next gen design this year: primarily moving more stuff into software (especially radio technology), so we launch a platform with safe dynamic storage, much faster configurable radio systems, sensors and core functions, that supports ourselves & 3rd party developers running ongoing experiments on the platform after launch. A big challenge with FC-1 was having to specify and build everything up front, people really don't like committing to features as 'final', so this new approach removes some of that difficulty and makes the platform useful over longer time periods. We also really, really want to take 4k video of the actual deployment process from the satellite itself :)

From a software architecture / choice of hardware viewpoint, we would look at more distributed / autonomous processing capability to protect against cascade failures, to offer a range of processors (RISC, FPGA, DSP/hybrid) for experiments and take advantage of modern sensors (cameras, IR, magnetics, accelerometers, particles) as they are much smaller thanks to phones!

5 baud uplink. That's crazy. I took another look at your CPU specs and realized you guys are running a spacecraft with less processing power than a micro:bit. That's quite the engineering achievement. Well done.

Thanks for taking the time to reply. It's all fascinating to me.

Cool! We are on the same rocket!

I am from the MOVE-II team. My job was ADCS software

Space is just amazing

Excellent! Is this your first launch?

Mine, yes; our university already did one (that died after 3 months)

3 months is better than zero :)

I hope you find launch #1 as exciting as I did, I was on a high for several days, here's a photo about 5 seconds after first signal:

..aaand repeat :) data.amsat-uk.org/ui/jy1sat-fm

I posted a link to this article on r/programming and someone mentioned using Ada/Spark. Did you guys consider something like that instead of C?

Interesting question! We chose to work with the supported tooling for the MCU (Metrowerks CodeWarrior for MCUs, which supports C/C++ and ASM), and didn't really consider anything else. CW4MCUs also comes with the Processor Expert device driver templates, giving us tested hardware access code (yes, we are lazy and risk averse :))

I briefly looked at SDCC as an open source toolchain alternative, because CW4MCUs is bloated Windows-only goop. I got a demo program to boot then the team reminded me they all used Windows, it was just me on Linux being weird.

I should note that we found two compiler bugs during development, one issue with the optimiser (register aliasing two variables together), another with 'smart' parameter passing conflicting with C permitting calls to functions without prototypes, oh the joy of stepping through assembly :)

A brief search for 8-bit Ada tooling only finds Ada for AVR targets, although GNAT might be able to generate HCS08 output. It looks like they aren't popular devices for missile guidance or medical gear.

Ah, that makes sense. On Reddit a user linked to the following video about a cubesat deployed by a college in Vermont:

blog.adacore.com/carl-brandon-vid

On closer inspection, the hardware they are using is on a totally different scale though. Toward the end of the video, they mention a proton 400k. I looked this up ( cubesatlab.org/IceCube-Hardware.jsp ) and it appears to have a dual core powerpc CPU running at 1GHz.

You guys were operating with something not so far from Apple II hardware, though about 10 times the clock speed thankfully, so probably C/ASM would be the only realistic option! I should have noticed that in your post myself - sorry about that!

Yup - I went and found the Reddit post (thank you for the publicity), would have replied but I'm not a Reddit user (and not sure I wish to be!) Their target system for the first satellite (cubesatlab.org/BasicLEO.jsp) was a TI MSP430, which is a 16-bit CPU, with 16k+ RAM. Later work used larger devices.

Also their toolchain was interesting: since there is no native Ada/Spark compiler for MSP430 they transpiled to C... underpinned by ~2k lines of driver code in C.

We have ~10k lines of C (including comments) in the latest build for JY1SAT.

I'm curious, was solar power used to charge the battery or was it truly 100% battery powered?

They are (all still working 5 years in) solar powered, with a battery for eclipse-side operation, lots of hardware detail from here for FUNcube-1:

funcube.org.uk/space-segment/