Introduction:

Responsible AI has recently become a focus for most IT service providers that deliver AI business solutions. I have built several AI-enabled business solutions, some of them game-changers. At least, that is what I thought until I realized the hidden trade-offs and risks that come with them. AI solutions can do as much damage as they can deliver benefit.

Consider an algorithm that recognizes your voice as part of a biometric check. What if someone with a similar voice uses the system, or, for that matter, a good mimic? While I was working on a Speech Analytics solution that identifies a person’s emotions over a complete conversation, correctly identifying the speaker’s pitch and tone became important. In these cases, and in many more like them, “data” plays an important role.

AI runs on data: a lot of it, and sometimes the most critical and personal data. “Personal data” is exactly where government regulation plays an important role.

RAI, or Responsible AI, is the umbrella under which ethics and governance issues are addressed. It helps organizations create trustworthy, standardized AI solutions.

Organizations like Google and Microsoft are coming up with their own governance models. These can be seen as common frameworks for delivering hassle-free solutions. The word “Responsible” in front of AI carries a lot of weight in the complete framework.

Now that we are somewhat familiar with the term and what it might mean, let’s take a bird’s-eye view of what it looks like when we implement it.

Considerations in Responsible AI:

In simple terms, Responsible AI defines what an AI solution should and should not be. Some of the key focus areas when defining a Responsible AI framework in an organization are:

- Human centric and socially beneficial

- Trustworthy

- Transparent

- Accountable to people

- Auditable

- Avoids creating or reinforcing bias

- Designed, developed, and tested for safety

- Designed in a way that incorporates privacy principles

What AI should not be like:

- Programmed to do something devastating

- Developed to do something beneficial, but arriving at a destructive method of achieving it

- Built for a purpose that contravenes international law

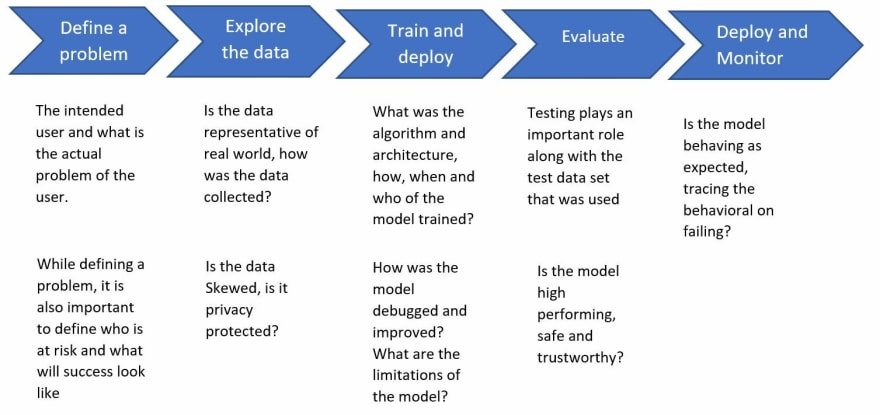

- Surveillance that violates international law

Bird’s-eye View:

I like the quote below:

“Principles that remain on paper are meaningless” - Sundar Pichai

Let’s have a look at how Google Responsible AI defines itself. Human bias can occur at any stage of a typical data exploration, be it data collection, labeling, training, filtering, or aggregation.

Implementing RAI:

The various phases of defining an AI solution can be laid out at a generic level as shown below.

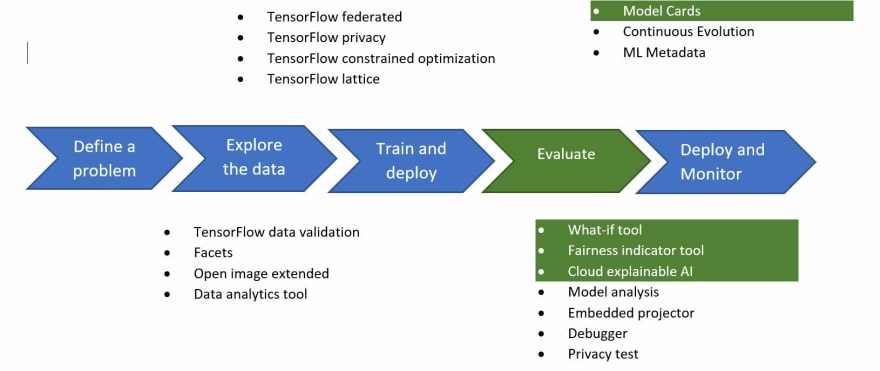

A subset of the governance model that applies while the solution is in execution can be seen in the diagram, which highlights various tools that can be useful for evaluation (marked in green).
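
One evaluation that such tooling typically covers is a check for bias in model outputs. As a minimal sketch, not a description of any specific vendor tool, the Python below computes the share of positive predictions per group and the gap between the highest and lowest share (often called the demographic parity difference). The function names, the group labels and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (value 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Return the gap between the highest and lowest group selection rate."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: a group label and a binary model decision per person.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)

print(rates)  # {'a': 0.75, 'b': 0.25}
print(gap)    # 0.5
```

A gap near 0 means the groups receive positive outcomes at similar rates. What counts as an acceptable gap is a policy decision for the organization’s governance model, not something the code can decide.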

Conclusion:

Responsible AI will play a major role in steering AI solutions in the right direction. Now is the right time for the major players to step up and form a mature framework for governed AI. If off-the-shelf and readily available solutions are governed, checked, and audited, the chances of building better AI for the benefit of humankind increase.
