Kubernetes: Helm — “x509: certificate signed by unknown authority”, and ServiceAccount for Pod

We run GitHub runners in our AWS Elastic Kubernetes Service (EKS) cluster. They build Docker images and deploy them with Helm or ArgoCD.

On the first helm install run in a GitHub runner's Pod, we get the "x509: certificate signed by unknown authority" error:

$ helm --kube-apiserver=https://kubernetes.default.svc.cluster.local list

Error: Kubernetes cluster unreachable: Get "https://kubernetes.default.svc.cluster.local/version?timeout=32s": x509: certificate signed by unknown authority

Or, if the API URL is not set, we get a permissions error instead:

$ helm list

Error: list: failed to list: secrets is forbidden: User "system:serviceaccount:dev-1-18-backend-github-runners-helm-ns:default" cannot list resource "secrets" in API group "" in the namespace "dev-1-18-backend-github-runners-helm-ns"

So, the cause is clear: Helm in the Pod accesses the Kubernetes API server as the default ServiceAccount that was created during the GitHub runner deployment, and this account has no permission to list Secrets, which is where Helm 3 stores its release data by default.

Checking a ServiceAccount’s permissions

As already discussed in the Kubernetes: ServiceAccounts, JWT-tokens, authentication, and RBAC authorization post, to authenticate against an API server we need its Certificate Authority certificate and a token.

Connect to the pod:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns exec -ti actions-runner-deployment-7f78968949-tmrtt bash

Defaulting container name to runner.

Use 'kubectl describe pod/actions-runner-deployment-7f78968949-tmrtt -n dev-1-18-backend-github-runners-helm-ns' to see all of the containers in this pod.

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner#

Set variables:

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner# CA_CERT=/var/run/secrets/kubernetes.io/serviceaccount/ca.crt

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner# TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner# NAMESPACE=$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)
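Before calling the API, it can help to confirm which identity the mounted token carries. A ServiceAccount token is a JWT, so its middle segment is base64url-encoded JSON. The sketch below assumes bash and GNU coreutils (`base64 -d`); `jwt_payload` is a hypothetical helper name, and the function only decodes the claims without verifying the signature.

```shell
#!/usr/bin/env bash
# Print the decoded payload (claims) of a JWT. No signature verification.
# jwt_payload is a hypothetical helper, not part of kubectl or Helm.
jwt_payload() {
  local payload
  # The payload is the second dot-separated field of the token;
  # convert the base64url alphabet back to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url omits padding; restore it so that base64 -d accepts the input.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}
```

Inside the Pod, `jwt_payload "$TOKEN" | jq .sub` should print an identity of the form `system:serviceaccount:<namespace>:<name>`, the same one that appears in the "forbidden" errors.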

Now try to access the API:

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner# curl -s --cacert $CA_CERT -H "Authorization: Bearer $TOKEN" "https://kubernetes.default.svc.cluster.local/api/v1/namespaces/$NAMESPACE/pods" | jq '{message, code}'

{
  "message": "pods is forbidden: User \"system:serviceaccount:dev-1-18-backend-github-runners-helm-ns:default\" cannot list resource \"pods\" in API group \"\" in the namespace \"dev-1-18-backend-github-runners-helm-ns\"",
  "code": 403
}

You’ll get a similar error if you run kubectl get pod:

root@actions-runner-deployment-7f78968949-tmrtt:/actions-runner# kubectl get pod

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:dev-1-18-backend-github-runners-helm-ns:default" cannot list resource "pods" in API group "" in the namespace "dev-1-18-backend-github-runners-helm-ns"
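The same check can be run from a workstation, without entering the Pod, by impersonating the ServiceAccount. This is a minimal sketch, assuming your own user is allowed to impersonate ServiceAccounts (cluster admins usually are); `sa_can_i` is a hypothetical wrapper name around the real `kubectl auth can-i` command and its `--as` flag.

```shell
#!/usr/bin/env bash
# sa_can_i NAMESPACE SERVICEACCOUNT VERB RESOURCE
# Ask the API server whether the given ServiceAccount may perform VERB on
# RESOURCE in NAMESPACE. kubectl answers "yes" or "no".
# sa_can_i is a hypothetical helper name.
sa_can_i() {
  local ns="$1" sa="$2" verb="$3" resource="$4"
  kubectl auth can-i "$verb" "$resource" \
    --namespace "$ns" \
    --as "system:serviceaccount:${ns}:${sa}"
}
```

For example, `sa_can_i dev-1-18-backend-github-runners-helm-ns default list secrets` asks the same question that Helm's failed request answered with an error.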

Okay, so what can we do here?

Creating a Kubernetes ServiceAccount for a Kubernetes Pod

Instead of mounting the default ServiceAccount to the pod, we can create our own, with permissions set by a Kubernetes RBAC Role and a Kubernetes RBAC RoleBinding.

Creating an RBAC Role

We can use a predefined role from the User-facing roles list in the Kubernetes RBAC documentation, for example, cluster-admin:

$ kubectl get clusterrole cluster-admin

NAME            CREATED AT
cluster-admin   2020-11-27T14:44:52Z

Or we can write our own with strict limitations. For example, let’s grant the get and list verbs on the pods resource:

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: "github-runner-deployer-role"
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list"]
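The same Role can also be generated from the command line instead of being written by hand. Below is a sketch using `kubectl create role` with a client-side dry run, which only prints the manifest and changes nothing in the cluster; `print_role_manifest` is a hypothetical wrapper name, and `--dry-run=client` assumes kubectl 1.18 or newer.

```shell
#!/usr/bin/env bash
# print_role_manifest NAME VERBS RESOURCES
# Print a Role manifest as YAML without creating anything in the cluster.
# VERBS and RESOURCES are comma-separated lists, e.g. "get,list" and "pods".
# print_role_manifest is a hypothetical helper name.
print_role_manifest() {
  local name="$1" verbs="$2" resources="$3"
  kubectl create role "$name" \
    --verb="$verbs" \
    --resource="$resources" \
    --dry-run=client -o yaml
}
```

For example, `print_role_manifest github-runner-deployer-role get,list pods > github-runner-deployer-role.yaml` would produce a file that can be reviewed and then applied as usual.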

Create the role in the required Kubernetes Namespace:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns apply -f github-runner-deployer-role.yaml

role.rbac.authorization.k8s.io/github-runner-deployer-role created

Check it:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns get role

NAME                          CREATED AT
github-runner-deployer-role   2021-09-28T08:05:20Z

Creating a ServiceAccount

Next, create a new ServiceAccount in the same namespace:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: "github-runner-deployer-sa"

Apply it:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns apply -f github-runner-deployer-sa.yaml

Creating a RoleBinding

Now, we need to create a binding — a connection between this ServiceAccount and the role, which was created above.

Create a RoleBinding:

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: "github-runner-deployer-rolebinding"
subjects:
- kind: ServiceAccount
  name: "github-runner-deployer-sa"
  namespace: "dev-1-18-backend-github-runners-helm-ns"
roleRef:
  kind: Role
  name: "github-runner-deployer-role"
  apiGroup: rbac.authorization.k8s.io
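For quick experiments, an equivalent binding can be created without a manifest file. Here is a sketch using `kubectl create rolebinding` with its `--role` and `--serviceaccount` flags; `bind_role_to_sa` is a hypothetical wrapper name. Unlike `kubectl apply`, this command fails if a binding with the same name already exists.

```shell
#!/usr/bin/env bash
# bind_role_to_sa NAMESPACE BINDING ROLE SERVICEACCOUNT
# Create a RoleBinding that grants ROLE to SERVICEACCOUNT, both living in
# NAMESPACE. bind_role_to_sa is a hypothetical helper name.
bind_role_to_sa() {
  local ns="$1" binding="$2" role="$3" sa="$4"
  kubectl create rolebinding "$binding" \
    --namespace "$ns" \
    --role="$role" \
    --serviceaccount="${ns}:${sa}"
}
```

With the names used in this post, the call would be `bind_role_to_sa dev-1-18-backend-github-runners-helm-ns github-runner-deployer-rolebinding github-runner-deployer-role github-runner-deployer-sa`.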

Apply it:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns apply -f github-runner-deployer-rolebinding.yaml

rolebinding.rbac.authorization.k8s.io/github-runner-deployer-rolebinding created

Mounting the ServiceAccount to a Kubernetes Pod

And the final thing is to attach this ServiceAccount to our pods.

First, let’s do it manually to check, without a Deployment:

apiVersion: v1
kind: Pod
metadata:
  name: "github-runners-deployer-pod"
spec:
  containers:
  - name: "github-runners-deployer"
    image: "nginx"
    ports:
    - name: "web"
      containerPort: 80
      protocol: TCP
  serviceAccountName: "github-runner-deployer-sa"

Create this Pod:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns apply -f github-runner-deployer-pod.yaml

pod/github-runners-deployer-pod created
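Before connecting, you can confirm that the Pod really runs under the new ServiceAccount by reading the field back from the API. This is a small sketch using kubectl's JSONPath output; `pod_service_account` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# pod_service_account NAMESPACE POD
# Print the ServiceAccount name recorded in the Pod's spec.
# pod_service_account is a hypothetical helper name.
pod_service_account() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" --namespace "$ns" \
    -o jsonpath='{.spec.serviceAccountName}'
}
```

For the Pod above, `pod_service_account dev-1-18-backend-github-runners-helm-ns github-runners-deployer-pod` should print `github-runner-deployer-sa` if the manifest was applied as written.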

Connect to it:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns exec -ti github-runners-deployer-pod bash

root@github-runners-deployer-pod:/#

And check API access:

root@github-runners-deployer-pod:/# CA_CERT=/var/run/secrets/kubernetes.io/serviceaccount/ca.crt

root@github-runners-deployer-pod:/# TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

root@github-runners-deployer-pod:/# NAMESPACE=$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)

root@github-runners-deployer-pod:/# curl -s --cacert $CA_CERT -H "Authorization: Bearer $TOKEN" "https://kubernetes.default.svc.cluster.local/api/v1/namespaces/$NAMESPACE/pods"

{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/namespaces/dev-1-18-backend-github-runners-helm-ns/pods",
    "resourceVersion": "251020450"
  },
  "items": [
    {
      "metadata": {
        "name": "actions-runner-deployment-7f78968949-jsh6l",
        "generateName": "actions-runner-deployment-7f78968949-",
        "namespace": "dev-1-18-backend-github-runners-helm-ns",
…

Now we can get and list Pods, but not Secrets, because the Role doesn't grant that permission:

root@github-runners-deployer-pod:/# curl -s --cacert $CA_CERT -H "Authorization: Bearer $TOKEN" "https://kubernetes.default.svc.cluster.local/api/v1/namespaces/$NAMESPACE/secrets"

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {
  },
  "status": "Failure",
  "message": "secrets is forbidden: User \"system:serviceaccount:dev-1-18-backend-github-runners-helm-ns:github-runner-deployer-sa\" cannot list resource \"secrets\" in API group \"\" in the namespace \"dev-1-18-backend-github-runners-helm-ns\"",
…

Helm ServiceAccount

Let’s go back to our GitHub runners and the Helm running in them.

Since this Helm will be used to deploy anything, anywhere in the EKS cluster, we can give it admin access by using the cluster-admin ClusterRole.

Delete the RoleBinding created earlier:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns delete rolebinding github-runner-deployer-rolebinding

rolebinding.rbac.authorization.k8s.io "github-runner-deployer-rolebinding" deleted

Create a new one, this time of the ClusterRoleBinding type, to grant access to the whole cluster:

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: "github-runner-deployer-cluster-rolebinding"
subjects:
- kind: ServiceAccount
  name: "github-runner-deployer-sa"
  namespace: "dev-1-18-backend-github-runners-helm-ns"
roleRef:
  kind: ClusterRole
  name: "cluster-admin"
  apiGroup: rbac.authorization.k8s.io

Bind the ServiceAccount to the ClusterRole:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns apply -f github-runner-deployer-clusterrolebinding.yaml

clusterrolebinding.rbac.authorization.k8s.io/github-runner-deployer-cluster-rolebinding created

Go back to the Pod and try to access Kubernetes Secrets now:

root@github-runners-deployer-pod:/# curl -s --cacert $CA_CERT -H "Authorization: Bearer $TOKEN" "https://kubernetes.default.svc.cluster.local/api/v1/namespaces/$NAMESPACE/secrets"

{
  "kind": "SecretList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/namespaces/dev-1-18-backend-github-runners-helm-ns/secrets",
    "resourceVersion": "251027845"
  },
  "items": [
    {
      "metadata": {
        "name": "bttrm-docker-secret",
        "namespace": "dev-1-18-backend-github-runners-helm-ns",
        "selfLink": "/api/v1/namespaces/dev-1-18-backend-github-runners-helm-ns/secrets/bttrm-docker-secret",
…

Nice — now our Helm has full access to the cluster!

Now let’s update the GitHub runners Deployment to use this ServiceAccount.

Edit it:

$ kubectl -n dev-1-18-backend-github-runners-helm-ns edit deploy actions-runner-deployment

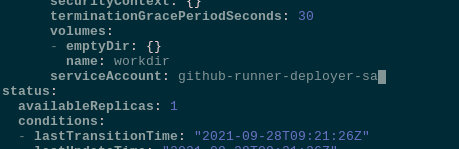

Set a new ServiceAccount by adding the serviceAccount:
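As a non-interactive alternative to `kubectl edit`, the same change can be scripted. The sketch below relies on the `kubectl set serviceaccount` and `kubectl rollout status` commands; `set_deployment_sa` is a hypothetical wrapper name.

```shell
#!/usr/bin/env bash
# set_deployment_sa NAMESPACE DEPLOYMENT SERVICEACCOUNT
# Point a Deployment's Pod template at another ServiceAccount and wait for
# the resulting rollout. set_deployment_sa is a hypothetical helper name.
set_deployment_sa() {
  local ns="$1" deploy="$2" sa="$3"
  # Changing the Pod template makes the Deployment roll out new Pods.
  kubectl --namespace "$ns" set serviceaccount deployment "$deploy" "$sa"
  # Block until the new Pods are ready, or until the rollout fails.
  kubectl --namespace "$ns" rollout status "deployment/${deploy}"
}
```

With the names from this post: `set_deployment_sa dev-1-18-backend-github-runners-helm-ns actions-runner-deployment github-runner-deployer-sa`.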

Wait until the new pods are created (see the Kubernetes: ConfigMap and Secrets - data auto-reload in pods post), then connect and check the Pod’s permissions:

root@actions-runner-deployment-6dfc9b457f-mc7rt:/actions-runner# kubectl auth can-i list pods

yes

And check access to another namespace, as we’ve created the ClusterRoleBinding, which is applied to the whole cluster:

root@actions-runner-deployment-6dfc9b457f-mc7rt:/actions-runner# kubectl auth can-i list pods --namespace istio-system

yes

Good — and we have access to the istio-system Namespace too.

And check Helm:

root@actions-runner-deployment-6dfc9b457f-mc7rt:/actions-runner# helm list

NAME             NAMESPACE                                 REVISION   UPDATED                                     STATUS     CHART                       APP VERSION
github-runners   dev-1-18-backend-github-runners-helm-ns   2          2021-09-22 19:50:18.828686642 +0300 +0300   deployed   github-runners-1632329415   v1.0.0

Done.

Originally published at RTFM: Linux, DevOps, and system administration.
