We have a project running in Kubernetes that needs to run SQL migrations during deployment.

To run migrations need to clone a Github repository and run actually migrations stored in it.

Currently, this is done with Kubernetes initContainers , and there are two of them — the first one with git clones the repository with migrations files into a Kubernetes Volume, and then another one with sql-migrate runs those migrations from this shared volume.

Still, there are a few issues with this approach:

- each time when we are running a new pod it runs its initContainers and migrations

- if a Deployment spin up a couple of pods — each will run migrations

- if migrations will run for w while and will not respond to Kubernetes readiness — it could be killed without finishing migrations

To avoid all the above let’s reconfigure the process by using a Kubernetes Job to run only one pod and by adding Helm Hooks to trigger migrations during deployment.

Note: the kk here is an alias for the kubectl.

Preparation

Docker image

At first, let’s create our own Docker image with blackjack and git and https://github.com/rubenv/sql-migrate.

Create a Dockerfile:

FROM golang:alpine AS builder

RUN apk add --no-cache git gcc g++

RUN go get -v github.com/rubenv/sql-migrate/sql-migrate

RUN mv /go/bin/sql-migrate /bin/sql-migrate

Build and push it:

$ docker build -t projectname/sql-migrate-git .

$ docker push projectname/sql-migrate-git

Git authentification

The second thing is the Github authentification.

At this moment our git-container authenticates via an RSA key which is held in a Kubernetes Secrets, then it passes to a pod via an environment variable from where it’s taken by a bash script /opt/git/git.sh which is used to create a key-file /root/.ssh/id_rsa inside of the container, and this key finally is used to authenticate.

The initContainers in our Deployment currently looks like the next:

...

initContainers:

- name: git-clone

image: projectname/git-cloner

env:

- name: SSH_PRIVATE_KEY

valueFrom:

secretKeyRef:

name: git-ssh-key

key: id_rsa

- name: REPOSITORY_URL

value: {{ .Values.git.repo }}

- name: GIT_BRANCH

value: {{ .Values.git.branch }}

command: ['sh', '-c', '/opt/git/git.sh']

volumeMounts:

- name: git-volume

mountPath: "/git"

readOnly: false

- name: init-migration

image: fufuhu/sql-migrate:latest

command: ['sh', '-c', 'while [! -d /git/db/migrations]; do sleep 2; done && sleep 2; /bin/sql-migrate up -config=/config/config.yaml -env=main']

volumeMounts:

- name: migration-config

mountPath: "/config/"

readOnly: true

- name: git-volume

mountPath: "/git"

readOnly: false

...

A lot of steps, a lot of objects, complicated process.

Instead, let’s use a login and a Github token which will be passed into our container from environment variables, and then we can clone the repository via HTTPS.

Let’s test it:

~ # export GIT_AUTHUSER=backend-user

~ # export GIT_AUTHKEY=cdc***0fe

~ # git clone

[https://$GIT_AUTHUSER:$GIT_AUTHKEY@github.com/projectname-dev/backend-services.git](https://%24GIT_AUTHUSER:%24GIT_AUTHKEY@github.com/projectname-dev/backend-services.git)

Cloning into ‘backend-services’…

…

Receiving objects: 100% (5115/5115), 846.55 KiB | 1.30 MiB/s, done.

Resolving deltas: 100% (2826/2826), done.

Nice, “It works” ©

SQL migrations in Kubernetes

Now, we can start writing a manifest file templates/appname-api-migrations.yaml to describe our Kubernetes Job which later will be triggered by a Helm hook.

Kubernetes Job

git clone

First, to make sure the Job is working — let’s write it without Helm variables and values, all pod’s environment variables will be set as plaintext values, and the action here will be the git clone for now:

apiVersion: batch/v1

kind: Job

metadata:

name: "migration-job"

labels:

annotations:

spec:

backoffLimit: 0

template:

metadata:

name: "migration-job-pod"

spec:

restartPolicy: Never

containers:

- name: db-migrations

image: projectname/sql-migrate-git:latest

command: ["/bin/sh", "-c"]

args:

- git clone --single-branch --branch develop [https://backend-user:cdc***0fe@github.com/projectname/backend-services.git](https://backend-user:cdc***0fe@github.com/projectname/backend-services.git) &&

ls -l backend-services/db/migrations

Here in the restartPolicy we've set to not to restart a container if it fails, as we'd like to see that migrations were failed, and the same in the backoffLimit=0 - to not to re-create a pod if it fails and just leave the job with the Failed status.

In the git clone a branch will be set from a Jenkins job, user and URL will be set in a values.yaml, and the authentication token will be kept with Helm secrets, later will move it to an environment variable.

Create the Job:

$ kk -n eks-dev-1-appname-api-ns apply -f appname-api-jobs.yaml

job.batch/migration-job created

Check its logs:

$ kk -n eks-dev-1-appname-api-ns logs job/migration-job

Cloning into ‘backend-services’…

total 20

-rw-r — r — 1 root root 538 Oct 24 12:20 BS_1_init_schema.up.sql

-rw-r — r — 1 root root 180 Oct 24 12:20 BS_2_add_brand_field.up.sql

-rw-r — r — 1 root root 225 Oct 24 12:20 BS_3_alter_table.up.sql

-rw-r — r — 1 root root 194 Oct 24 12:20 BS_4_add_created_at_field.sql

-rw-r — r — 1 root root 272 Oct 24 12:20 BS_5_alter_table_nourishment_diet.up.sql

The repository was cloned, migration fils now are accessible.

Check the pod’s status:

$ kk -n eks-dev-1-appname-api-ns get pod

NAME READY STATUS RESTARTS AGE

migration-job-f72vs 0/1 Completed 0 9s

And the Job’s status:

$ kk -n eks-dev-1-appname-api-ns get job

NAME COMPLETIONS DURATION AGE

migration-job 1/1 2s 5s

Now can proceed with the exact migrations process.

Secret

To run migrations we need to create a config file that will be stored in a Kubernetes ConfigMap, but in this file, a database’s password must be set.

It’s not a good idea to store it in plaintext in a ConfigMap and the file but sql-migrate allows us to use an environment variable in the file, check its documentation - https://github.com/rubenv/sql-migrate#as-a-standalone-tool

So, we will create a variable for the pod called $DB_PASSWORD, and will keep the actual password in a Kubernetes Secrets, and later in Helm, we will use the Helm secrets to store it encrypted in a chart's values.

Also in this Secrets, we will store a value for a $GIT_TOKEN environment variable to be used in the git clone command.

Still in the templates/appname-api-migrations.yaml add a Secret:

...

---

apiVersion: v1

kind: Secret

metadata:

name: backend-db-password

type: Opaque

stringData:

db_password: password

git_token: cdc***0fe

In the spec.containers.env of the Job add variables and update the git clone to use the $GIT_TOKEN variable:

...

containers:

- name: db-migrations

image: projectname/sql-migrate-git:latest

command: ["/bin/sh", "-c"]

args:

- git clone --single-branch --branch develop [https://backend-user:$GIT_TOKEN@github.com/projectnamev/backend-services.git](https://backend-user:%24GIT_TOKEN@github.com/projectnamev/backend-services.git) &&

ls -l backend-services/db/migrations;

cat /config/config.yaml

env:

- name: GIT_TOKEN

valueFrom:

secretKeyRef:

name: backend-db-password

key: git_token

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

name: backend-db-password

key: db_password

...

ConfigMap

Next, create a ConfigMap to keep the /config/config.yaml content for the sql-migrate and use the $DB_PASSWORD variable in it:

...

---

apiVersion: v1

kind: ConfigMap

metadata:

name: migration-config

data:

config.yaml: |

main:

dialect: mysql

datasource: backend-user:${DB_PASSWORD}@tcp(stage.backend-db3-master.example.com:3306)/dbname?parseTime=true

dir: backend-services/db/migrations

table: backend_services_migrations

In the pod’s template of the Job add volumes, and in the spec.containers via volumeMounts mount the volume with the ConfigMap as the /config/config.yaml file.

The Job’s full manifest now is the next:

apiVersion: batch/v1

kind: Job

metadata:

name: "migration-job"

labels:

annotations:

spec:

backoffLimit: 0

template:

metadata:

name: "migration-job-pod"

spec:

restartPolicy: Never

containers:

- name: db-migrations

image: projectname/sql-migrate-git:latest

command: ["/bin/sh", "-c"]

args:

- git clone --single-branch --branch develop [https://backend-user:$GIT_TOKEN@github.com/projectname/backend-services.git](https://backend-user:%24GIT_TOKEN@github.com/projectname/backend-services.git) &&

ls -l backend-services/db/migrations;

cat /config/config.yaml

env:

- name: GIT_TOKEN

valueFrom:

secretKeyRef:

name: backend-db-password

key: git_token

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

name: backend-db-password

key: db_password

volumeMounts:

- name: migration-config

mountPath: "/config/config.yaml"

subPath: "config.yaml"

readOnly: true

volumes:

- name: migration-config

configMap:

name: migration-config

items:

- key: "config.yaml"

path: "config.yaml"

...

Run it:

$ kk -n eks-dev-1-appname-api-ns apply -f appname-api-jobs.yaml

job.batch/migration-job created

secret/backend-db-password created

configmap/migration-config created

Check:

$ kk -n eks-dev-1-appname-api-ns logs job/migration-job

Cloning into ‘backend-services’…

total 20

-rw-r — r — 1 root root 538 Oct 24 13:41 BS_1_init_schema.up.sql

-rw-r — r — 1 root root 180 Oct 24 13:41 BS_2_add_brand_field.up.sql

-rw-r — r — 1 root root 225 Oct 24 13:41 BS_3_alter_table.up.sql

-rw-r — r — 1 root root 194 Oct 24 13:41 BS_4_add_created_at_field.sql

-rw-r — r — 1 root root 272 Oct 24 13:41 BS_5_alter_table_nourishment_diet.up.sql

main:

dialect: mysql

datasource: backend-user:${DB_PASSWORD}@tcp(stage.backend-db3-master.example.com:3306)/dbname?parseTime=true

dir: backend-services/db/migrations

table: backend_services_migrations

Good — the repository was cloned, the config file was created.

Running migrations

And now we can describe the migrations process, for now with the -dryrun option, and with the second command - check its status:

...

args:

- git clone --single-branch --branch develop [https://backend-user:$GIT_TOKEN@github.com/projectname/backend-services.git](https://backend-user:%24GIT_TOKEN@github.com/projectname/backend-services.git) &&

ls -l backend-services/db/migrations &&

cat /config/config.yaml &&

/bin/sql-migrate up -config=/config/config.yaml -env=main -dryrun &&

/bin/sql-migrate status -config=/config/config.yaml -env=main

...

Run, and check its logs:

$ kk -n eks-dev-1-appname-test-migrations-ns logs job/migration-job

Cloning into ‘backend-services’…

total 20

-rw-r — r — 1 root root 538 Oct 24 14:02 BS_1_init_schema.up.sql

-rw-r — r — 1 root root 180 Oct 24 14:02 BS_2_add_brand_field.up.sql

-rw-r — r — 1 root root 225 Oct 24 14:02 BS_3_alter_table.up.sql

-rw-r — r — 1 root root 194 Oct 24 14:02 BS_4_add_created_at_field.sql

-rw-r — r — 1 root root 272 Oct 24 14:02 BS_5_alter_table_nourishment_diet.up.sql

main:

dialect: mysql

datasource: backnd-user:${DB_PASSWORD}@tcp(stage.backend-db3-master.example.com:3306)/dbname?parseTime=true

dir: backend-services/db/migrations

table: backend_services_migrations

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

| MIGRATION | APPLIED |

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

| BS_1_init_schema.up.sql | 2020–05–07 12:21:25 +0000 UTC |

| BS_2_add_brand_field.up.sql | 2020–05–12 14:31:17 +0000 UTC |

| BS_3_alter_table.up.sql | 2020–05–13 06:17:25 +0000 UTC |

| BS_4_add_created_at_field.sql | 2020–07–21 09:55:49 +0000 UTC |

| BS_5_alter_table_nourishment_diet.up.sql | 2020–07–21 09:55:49 +0000 UTC |

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

Can go to the Helm chart now.

Helm template

What do we need to do in the old chart?

- remove

initContainers - remove old Secrets

- move values for our new variables in the

values.yaml - move the token and database’s password into the

secrets.yaml

And the main part here is to add annotations to trigger the migrations process during Helm deploy

Add the annotations to the Job:

apiVersion: batch/v1

kind: Job

metadata:

name: {{ .Chart.Name }}-migration-job

labels:

annotations:

"helm.sh/hook": pre-install,pre-upgrade

"helm.sh/hook-weight": "-1"

"helm.sh/hook-delete-policy": before-hook-creation

spec:

backoffLimit: 0

...

Here:

-

"helm.sh/hook": pre-install,pre-upgrade: run the Job beforehelm installorupgrade(in our Jenkins pipeline it's started with thehelm secrets upgrade --install) -

"helm.sh/hook-weight": "-1": priority of the creation of the resources, as first we need to create the ConfigMap and Secret that will be used by our Job, so set their weight less than for the Job -

"helm.sh/hook-delete-policy": the default value is the before-hook-creation (check the documentation), set if for testing purpose, and then it can be changed to the hook-succeeded (but in that case you'll not be able to check the logs if the migration will fail)

Add the annotations block to the ConfigMap and Secrets with the hook-weight less than in the Job.

The ConfigMap manifest now full content:

---

apiVersion: v1

kind: ConfigMap

metadata:

name: migration-config

annotations:

"helm.sh/hook": pre-install,pre-upgrade

"helm.sh/hook-weight": "-5"

"helm.sh/hook-delete-policy": before-hook-creation

data:

config.yaml: |

main:

dialect: {{ .Values.backendConfig.db.driver }}

datasource: {{ .Values.backendConfig.db.user }}:${DB_PASSWORD}@tcp({{ .Values.backendConfig.db.host }}:{{ .Values.backendConfig.db.port }})/{{ .Values.backendConfig.db.database }}?parseTime=true

dir: backend-services/db/migrations

table: {{ .Values.backendConfig.db.migrationsTable }}

The Secret one:

---

apiVersion: v1

kind: Secret

metadata:

name: {{ .Chart.Name }}-migration-secrets

annotations:

"helm.sh/hook": pre-install,pre-upgrade

"helm.sh/hook-weight": "-10"

"helm.sh/hook-delete-policy": before-hook-creation

type: Opaque

stringData:

backend-db-password: {{ .Values.backendConfig.db.password }}

git_token: {{ .Values.git.token }}

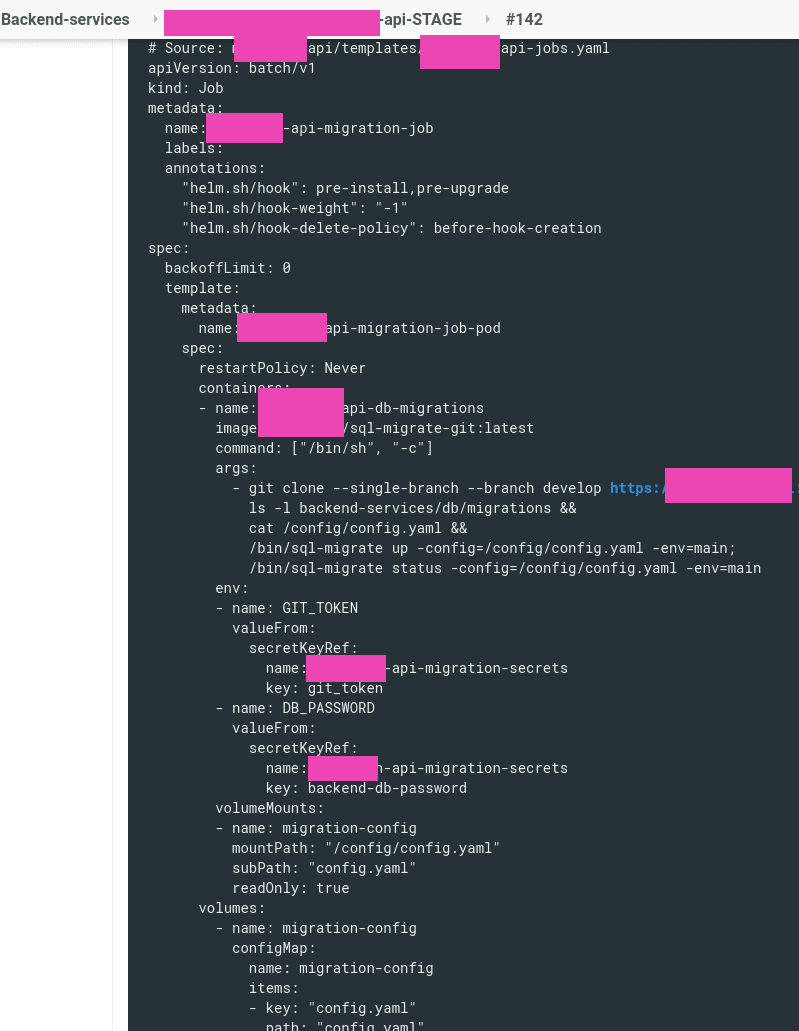

And the Job:

apiVersion: batch/v1

kind: Job

metadata:

name: {{ .Chart.Name }}-migration-job

labels:

annotations:

"helm.sh/hook": pre-install,pre-upgrade

"helm.sh/hook-weight": "-1"

"helm.sh/hook-delete-policy": before-hook-creation

spec:

backoffLimit: 0

template:

metadata:

name: {{ .Chart.Name }}-migration-job-pod

spec:

restartPolicy: Never

containers:

- name: {{ .Chart.Name }}-db-migrations

image: projectname/sql-migrate-git:latest

command: ["/bin/sh", "-c"]

args:

- git clone --single-branch --branch {{ .Values.git.branch }} [https://{{](https://%7B%7B) .Values.git.user }}:$GIT_TOKEN@{{ .Values.git.repo }} &&

ls -l backend-services/db/migrations &&

cat /config/config.yaml &&

/bin/sql-migrate up -config=/config/config.yaml -env=main || exit 1;

/bin/sql-migrate status -config=/config/config.yaml -env=main

env:

- name: GIT_TOKEN

valueFrom:

secretKeyRef:

name: {{ .Chart.Name }}-migration-secrets

key: git_token

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

name: {{ .Chart.Name }}-migration-secrets

key: backend-db-password

volumeMounts:

- name: migration-config

mountPath: "/config/config.yaml"

subPath: "config.yaml"

readOnly: true

volumes:

- name: migration-config

configMap:

name: migration-config

items:

- key: "config.yaml"

path: "config.yaml"

Here I’ve added the exit 1 to the /bin/sql-migrate u so the Job will Fail in case of errors during migrations and thus will not start the deployment process.

Run it in the Jenkins:

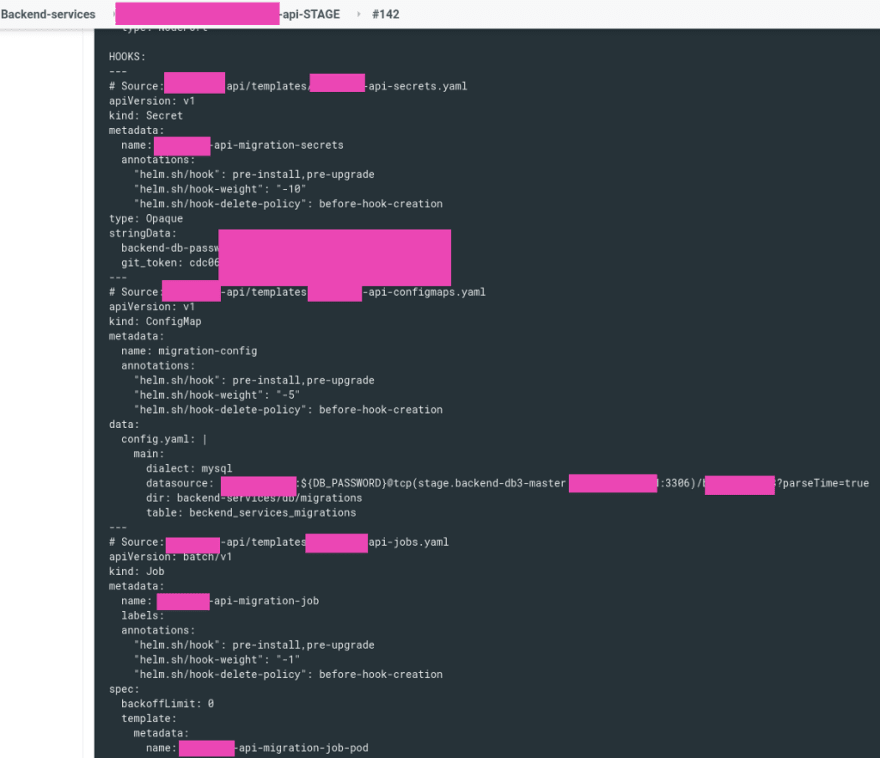

In the HOOKS we can see that first the Secret was created as it has hook-weight": "-10", then the ConfigMap, and the Job at last.

And the deployment process looks now like the next:

At first, the Secret, ConfigMap and Job resources are removed (as per the "helm.sh/hook-delete-policy": before-hook-creation), and then are created.

Check the Job’s status:

$ kk -n eks-stage-1-appname-api-ns get job

NAME COMPLETIONS DURATION AGE

appname-api-migration-job 1/1 3s 6m21s

Its logs:

$ kk -n eks-stage-1-appname-api-ns logs job/appname-api-migration-job

Cloning into ‘backend-services’…

total 20

-rw-r — r — 1 root root 538 Oct 26 11:32 BS_1_init_schema.up.sql

-rw-r — r — 1 root root 180 Oct 26 11:32 BS_2_add_brand_field.up.sql

-rw-r — r — 1 root root 225 Oct 26 11:32 BS_3_alter_table.up.sql

-rw-r — r — 1 root root 194 Oct 26 11:32 BS_4_add_created_at_field.sql

-rw-r — r — 1 root root 272 Oct 26 11:32 BS_5_alter_table_nourishment_diet.up.sql

main:

dialect: mysql

datasource: backend-user:${DB_PASSWORD}@tcp(stage.backend-db3-master.example.com:3306)/dbname?parseTime=true

dir: backend-services/db/migrations

table: backend_services_migrations

Applied 0 migrations

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

| MIGRATION | APPLIED |

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

| BS_1_init_schema.up.sql | 2020–05–07 12:21:25 +0000 UTC |

| BS_2_add_brand_field.up.sql | 2020–05–12 14:31:17 +0000 UTC |

| BS_3_alter_table.up.sql | 2020–05–13 06:17:25 +0000 UTC |

| BS_4_add_created_at_field.sql | 2020–07–21 09:55:49 +0000 UTC |

| BS_5_alter_table_nourishment_diet.up.sql | 2020–07–21 09:55:49 +0000 UTC |

+ — — — — — — — — — — — — — — — — — — — — — + — — — — — — — — — — — — — — — -+

Applied 0 migrations, as there are no changes in the migrations files after the last APPLIED.

All done.

Originally published at RTFM: Linux, DevOps, and system administration.

Top comments (0)