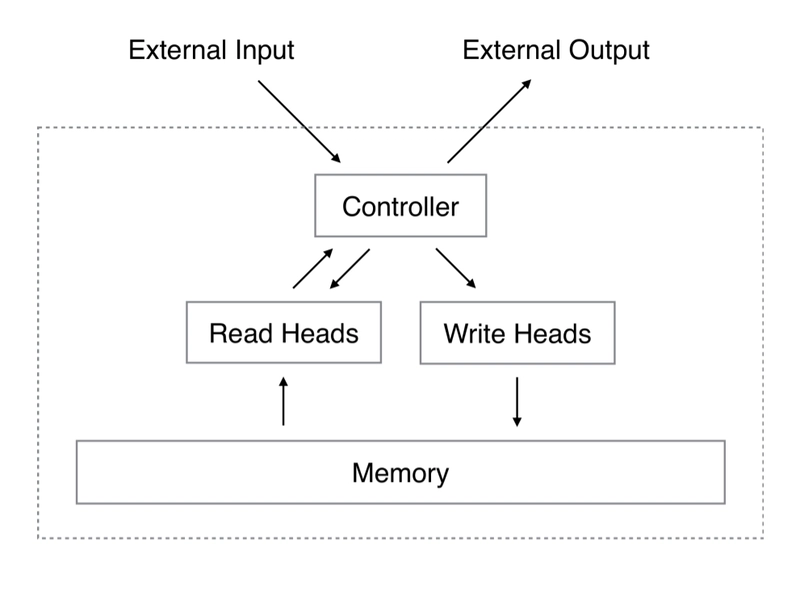

Memory-Augmented Neural Networks (MANNs) are neural network architectures enhanced with an external memory module. In simple terms, a MANN is like a regular neural network (e.g. an RNN or Transformer) that has an extra “memory bank” it can read from and write to, somewhat akin to a computer’s RAM. This design allows the network to store information over long time spans and recall it later – a capability beyond what standard neural nets can typically do. The concept is inspired by how humans use working memory to temporarily hold information, and by the classic von Neumann computer architecture where a CPU interacts with external memory. By integrating dynamic memory, MANNs can tackle tasks requiring long-term context, complex reasoning, or sequential decision-making that were once challenging for AI. In recent years, MANNs have gained attention as a cutting-edge advancement in AI, opening up new possibilities in areas like language understanding, decision support, and more.

How MANNs Work: Core Architecture and Components

At the heart of a MANN is a two-part design: a neural network controller plus an external memory store. The controller is typically a neural network (often a recurrent network or nowadays sometimes a Transformer) that processes input and decides when and what to read or write in the external memory. The external memory is usually a matrix or bank of vectors that the controller can use to stash information and retrieve it later. The controller interacts with this memory via read heads and write heads, which are mechanisms that perform differentiable read/write operations (learned through training). To figure out where in memory to read from or write to, MANNs use attention-like mechanisms. Essentially, the controller produces addressing vectors (“keys”) that softly select memory locations by similarity, enabling the network to focus on relevant memory slots. All these operations are end-to-end differentiable, meaning the whole system (controller + memory) can be trained with gradient descent just like a normal neural net.
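To make content-based addressing concrete, here is a minimal sketch in plain Python. It assumes cosine similarity as the similarity measure and a softmax to turn similarities into soft weights; the function names (`address`, `read`) and the `sharpness` parameter are illustrative choices, not taken from any particular library. In a real MANN the key would be produced by the trained controller rather than written by hand.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def address(memory, key, sharpness=5.0):
    """Soft attention weights over memory rows, by similarity to the key.
    `sharpness` is an assumed scaling factor: larger values focus the weights."""
    return softmax([sharpness * cosine(row, key) for row in memory])

def read(memory, weights):
    """Read vector: the attention-weighted sum of all memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]

# Three memory slots of width 3 (hand-written values, for illustration only).
memory = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
key = [0.9, 0.1, 0.0]              # stands in for a controller-emitted key
weights = address(memory, key)     # most weight lands on the first slot
read_vector = read(memory, weights)
```

Because every step is a smooth function of the key and the memory contents, gradients can flow through the read, which is what lets the controller learn where to look.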

A conceptual diagram of a Memory-Augmented Neural Network. The controller (neural network) processes input and interacts with an external memory via read and write heads, allowing it to store and retrieve information on the fly.

In practical terms, when a MANN processes data, it can write certain intermediate results or facts to its memory and then read them back later when needed. For example, if processing a long text or a sequence of events, a MANN might write down important details early on and recall them many steps later – addressing the long-term dependency problem that vanilla RNNs or LSTMs struggle with. The external memory in a MANN is separate from the network’s weights, which means it isn’t limited by what the neural network can encode in its parameters. This separation often leads to improved capacity and generalization, since the network can offload information to memory instead of squeezing it all into its weight matrices. In essence, the MANN’s controller learns how to use the memory: it learns what to write, when to write or erase, and how to retrieve – all optimized to solve the task at hand.
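The write-then-recall pattern described above can be sketched with an erase-then-add update in the style of the Neural Turing Machine. This is a simplified illustration: the one-hot weighting is written by hand for clarity, whereas a trained controller would emit soft weights, erase vectors, and add vectors itself. All variable names are hypothetical.

```python
def write(memory, weights, erase, add):
    """Erase-then-add write: each slot is scaled down where it is addressed
    and erased, then the add vector is blended in by the same weight."""
    return [
        [m * (1.0 - w * e) + w * a for m, e, a in zip(row, erase, add)]
        for row, w in zip(memory, weights)
    ]

def read(memory, weights):
    """Attention-weighted sum of memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]

memory = [[0.0, 0.0] for _ in range(4)]   # four empty slots of width 2
slot_1 = [0.0, 1.0, 0.0, 0.0]             # hard (one-hot) weighting, for clarity

# Early in the sequence: store a detail in slot 1.
memory = write(memory, slot_1, erase=[1.0, 1.0], add=[0.5, -0.5])

# Many steps later: read it back with the same addressing weights.
recalled = read(memory, slot_1)
```

The stored vector survives untouched until something writes to that slot again, regardless of how many steps pass in between. That is the property that helps with long-range dependencies.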

Notable MANN Architectures

Researchers have developed several core architectures under the MANN umbrella, each with a different twist on how the memory is structured and accessed:

- Neural Turing Machine (NTM) – The NTM was one of the first MANNs, introduced by Alex Graves et al. in 2014. It consists of an RNN controller paired with a matrix memory. The NTM uses differentiable attention to read/write, with learned “heads” analogous to a Turing Machine’s tape reader. Remarkably, an NTM with an LSTM controller was shown to learn simple algorithms like copying a sequence, sorting numbers, and associative recall just from examples (no hardcoding). This was a breakthrough demonstration that a neural network equipped with memory can exhibit algorithm-like behavior such as remembering a long sequence and reproducing it.

- Differentiable Neural Computer (DNC) – The DNC, developed by DeepMind in 2016, builds on the NTM and improves its memory addressing mechanisms. The DNC introduced features like temporal links that record the order in which memory locations were written and usage tracking that decides which locations can be freed and reused, giving it better read-write controls and making it more stable as the memory grows. In experiments, DNCs could store and recall complex data structures (like graphs) and even generalize knowledge to new scenarios. For example, a DNC trained on randomly generated graphs was then able to answer route-finding questions about a public transit map (the London Underground) without retraining. It also outperformed traditional LSTM networks on tasks like graph traversal and planning, highlighting the power of external memory for structured reasoning.

- Memory Networks – Introduced by Facebook (Weston et al., 2015), Memory Networks were geared towards question-answering (QA) and language tasks. In a memory network, the “memory” is a set of textual facts or embeddings, and the model learns to retrieve relevant facts to answer a query. Weston’s work showed that using an external memory as a dynamic knowledge base can greatly help QA systems. A later variant called End-to-End Memory Networks refined this approach to train the whole system in one go. These networks perform multiple hops over the memory: essentially reading from memory repeatedly to gather more evidence before producing an answer. This multi-hop retrieval is crucial for tasks like reading comprehension or chatbot dialog, where the system might need to recall several pieces of information from a knowledge base. In fact, memory networks have been extended to build conversational chatbots that can remember the dialogue history or facts provided, enabling more coherent and informed responses.

- Key-Value Memory Networks – An improvement over basic memory nets, key-value memory networks (Miller et al.) store data as key–value pairs (e.g., in QA, the key might be a query term or representation and the value a sentence). This lets the model attend over keys (for addressing) and retrieve the corresponding values, which is useful for scaling up QA to large knowledge bases. This architecture was used in some early open-domain QA systems to fetch supporting facts efficiently.

- Transformer-Based Memory Models – More recently, researchers have explored adding external memory to Transformer architectures. One example is Memformer (2022), a memory-augmented Transformer that maintains a set of memory slots in addition to the normal self-attention layers. Memformer’s external memory allows it to encode long sequences with linear complexity by offloading information into the memory slots, which are periodically read/written with a slot-based attention mechanism. Impressively, Memformer was able to match the performance of standard Transformers on long sequence tasks while using significantly less memory and achieving faster inference. This kind of research is addressing one of the key limitations of vanilla Transformers – the fixed-length context – by giving them an extendable memory. Another angle has been retrieval-based models in NLP, where a language model retrieves documents or facts from a database (an external memory) to inform its output. We’ll discuss that more in the trends section, but it’s worth noting here that the line between “memory networks” and “retrieval-augmented models” is blurring. Both involve an external repository of information that the model taps into.

Each of these architectures shares the same spirit: combining neural nets with an external memory to extend their capabilities. They differ in how they implement the memory (continuous vs discrete, how big, how addressed) and what the controller is (RNN, LSTM, Transformer, etc.), but fundamentally they all allow the model to remember information over long durations and even perform discrete reasoning steps by reading/writing memory.
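The multi-hop, key-value style of reading used by memory networks can be illustrated with a toy example. This is a sketch under strong simplifying assumptions: the vocabulary and facts are invented, the embeddings are hand-written one-hot vectors instead of learned ones, and the next query is simply the value just read (published models typically combine it with the previous query through learned projections).

```python
import math

# Toy vocabulary; every name and fact below is made up for illustration.
VOCAB = ["john", "mary", "ball", "kitchen", "garden"]

def one_hot(word):
    return [1.0 if w == word else 0.0 for w in VOCAB]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def hop(keys, values, query, scale=10.0):
    """One memory hop: attend over keys, return the weighted sum of values."""
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)]
    return out, weights

# Memory as key-value pairs: (ball -> john), (john -> kitchen), (mary -> garden).
keys = [one_hot("ball"), one_hot("john"), one_hot("mary")]
values = [one_hot("john"), one_hot("kitchen"), one_hot("garden")]

query = one_hot("ball")            # stands in for the question "Where is the ball?"
attended = []                      # which fact each hop focused on
for _ in range(2):                 # two hops over the same memory
    query, weights = hop(keys, values, query)
    attended.append(weights.index(max(weights)))

answer = VOCAB[query.index(max(query))]
```

The first hop finds who has the ball, and the second hop uses that result to find where that person is. A single lookup could not answer the question, because no single fact links the ball to a location.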

Recent Advancements and Trending Developments

The field of MANNs is evolving rapidly, with new research tackling previous limitations and exploring novel applications. Some of the trending developments and breakthroughs include:

- Meta-Learning and Few-Shot Learning: MANNs have been shown to be highly effective for meta-learning tasks – that is, “learning to learn” scenarios. A prime example is the work by Santoro et al. (2016) on one-shot learning. In their approach, a memory-augmented network learns to rapidly adapt to new data by leveraging an external memory to store representations of a few examples and their labels. During training it sees sequences of (input, label) pairs and learns to use the memory to bind them. This allows the network to correctly classify new inputs after seeing just one or a few examples. In simpler terms, the MANN acts like a quick notebook: given a new small dataset, it “writes” the examples into memory and when a query comes, it looks them up to generalize the answer. This idea was demonstrated on tasks like one-shot image classification (Omniglot characters) with great success. The key idea is that the external memory holds each new example as soon as it is seen, so the model doesn’t forget earlier examples when new ones arrive. This fast-learning ability is very appealing in business settings where you might need to quickly customize an AI model to a new problem with minimal data – something a memory module can facilitate.

- Retrieval-Augmented Generation (RAG) in NLP: One of the hottest trends in NLP for large language models is retrieval augmentation. While not always labeled as “MANN,” the concept is closely related: you give the model access to an external knowledge base (documents, database, etc.) and let it retrieve relevant information to influence its output. This effectively augments the model with a form of dynamic memory. For instance, a generative model like GPT can be coupled with a document search system so that when asked a question, it first fetches relevant text from a knowledge repository and uses that to formulate an answer. This approach, formally called retrieval-augmented generation, has been shown to significantly improve accuracy and factual correctness. The external data serves as a live memory that the model can query as needed, rather than trying to cram all facts into its parameters. In practice, many enterprise QA systems and chatbots in 2025 use this strategy: the AI will pull information from company documents or databases (the memory) in real time to answer user queries. RAG and related techniques demonstrate that the idea of memory-augmentation is very much alive in cutting-edge AI deployments, especially for B2B applications like customer support bots, knowledge management, and documentation assistants. They blend classic MANN ideas (content-based memory retrieval) with modern large-scale models. As Patrick Lewis (one of the authors who popularized RAG) noted, this family of methods has exploded in recent years, and is seen as a crucial way to ground AI responses in real data.

- Long Horizon Reinforcement Learning: Another area of advancement is using memory-augmented networks in reinforcement learning (RL), where an agent must make a sequence of decisions. Traditional RL agents (like those using simple neural networks) might struggle with tasks that require remembering events far in the past or planning many steps ahead. MANNs provide a solution by giving the agent a memory to remember important past states or outcomes. Research by DeepMind and others has integrated DNCs and similar MANNs into RL agents. For example, a DNC-based agent was used to solve a block puzzle environment by learning how to store intermediate steps in memory and recall them as needed, using a form of curriculum learning to gradually build up the skill. Similarly, the concept of Neural Episodic Control (by Pritzel et al., 2017) introduced an agent that maintains an episodic memory of high-reward states, enabling much faster learning in games by recalling “experiences” from memory rather than having to re-learn them repeatedly. In summary, memory augmentation in RL is trending because it helps address one of the big RL challenges: long-term credit assignment and memory of past events. For businesses, this research can translate into smarter decision-support systems or planning algorithms that learn from historical episodes. Imagine a supply chain optimization AI that remembers the outcomes of past strategy sequences, or an autonomous drone that keeps a memory of key observations during a long mission – these are facilitated by memory-augmented models.

- Hybrid Neuro-Symbolic Systems: A more nascent but interesting development is combining MANNs with symbolic or logic-based systems to get the best of both worlds. For instance, some researchers are exploring using external knowledge graphs or databases as the “memory” for a neural network. The neural controller can query this structured memory when it needs factual or commonsense knowledge. This approach can make AI systems more interpretable (because the memory contains discrete facts) and reliable, which is very relevant for enterprise AI applications that require traceability of decisions. While not strictly a “neural network memory” (since the memory might be a traditional database), the interface is often learned with attention or key-value addressing, so it shares the spirit of MANNs. We can expect future AI systems to seamlessly integrate learned neural memories with existing data stores.

- Improvements in Memory Efficiency and Scalability: Traditionally, one challenge for MANNs was that as the memory size increased, the computation and addressing became a bottleneck. Recent work is tackling this through techniques like sparse attention (only focusing on a subset of memory at a time), hierarchical memories (organizing memory into tiers, e.g. short-term vs long-term storage), or even hardware advancements (using faster memory chips or content-addressable memory hardware to speed up lookups). For example, the Neural Attention Memory (NAM) architecture explores efficient memory access patterns, and Memformer (mentioned earlier) achieved linear complexity by not attending to all past tokens at once but rather using a fixed-size external memory that summarizes the past. These developments are making it more feasible to use MANNs in real-time applications and on large-scale data, which is critical for global B2B deployments where datasets can be huge.
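The sparse-attention idea from the last item can be sketched as a top-k read: only the k best-matching slots take part in the softmax and the weighted sum. The function name `sparse_read` and the dot-product scoring are assumptions made for this illustration. Note that this toy version still scores every slot to find the top k; practical systems usually pair the idea with an approximate nearest-neighbor index so that scoring is sublinear too.

```python
import heapq
import math

def sparse_read(memory, key, k=2):
    """Read from only the k slots whose dot product with the key is largest."""
    scores = [sum(a * b for a, b in zip(row, key)) for row in memory]
    # Indices of the k highest-scoring slots.
    top = heapq.nlargest(k, range(len(memory)), key=lambda i: scores[i])
    # Softmax restricted to the selected slots.
    m = max(scores[i] for i in top)
    exps = {i: math.exp(scores[i] - m) for i in top}
    total = sum(exps.values())
    weights = {i: e / total for i, e in exps.items()}
    # Weighted sum over the selected rows only.
    width = len(memory[0])
    vector = [sum(w * memory[i][j] for i, w in weights.items()) for j in range(width)]
    return vector, weights

memory = [
    [1.0, 0.0],
    [0.9, 0.1],
    [0.0, 1.0],
    [-1.0, 0.0],
    [0.0, -1.0],
]
vector, weights = sparse_read(memory, key=[1.0, 0.0], k=2)
selected = sorted(weights)         # indices of the slots that were actually read
```

With k fixed, the cost of the softmax and the weighted sum (and of the gradients through them) no longer grows with the number of slots.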

In summary, the trend is that memory-augmented models are moving from research curiosities to practical tools. They are being blended with other AI advances (like big transformers or specialized hardware) to overcome prior limitations. The result is AI systems that are more context-aware, better at reasoning, and more adaptable – all of which are very appealing for business use cases that involve complexity and large information flows.
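The “quick notebook” behavior behind few-shot learning and episodic control can be approximated by a memory that stores (embedding, label) pairs and classifies a query by similarity-weighted voting. This is only a sketch of the lookup step: the class name `EpisodicMemory`, the `sharpness` parameter, and the hand-written two-dimensional embeddings are all assumptions, and the learned controller that decides what to store is left out entirely.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)

class EpisodicMemory:
    """Stores labelled examples and answers queries by soft nearest-neighbor vote."""

    def __init__(self):
        self.keys = []
        self.labels = []

    def write(self, embedding, label):
        """Add one example; no retraining or weight update is involved."""
        self.keys.append(list(embedding))
        self.labels.append(label)

    def classify(self, embedding, sharpness=10.0):
        if not self.keys:
            raise ValueError("memory is empty")
        sims = [cosine(embedding, k) for k in self.keys]
        m = max(sims)
        votes = {}
        for sim, label in zip(sims, self.labels):
            # Each stored example votes for its label, weighted by similarity.
            votes[label] = votes.get(label, 0.0) + math.exp(sharpness * (sim - m))
        return max(votes, key=votes.get)

memory = EpisodicMemory()
memory.write([1.0, 0.1], "A")       # one example per class
memory.write([0.1, 1.0], "B")
first = memory.classify([0.8, 0.3])

memory.write([-1.0, 0.0], "C")      # a brand-new class, seen once
second = memory.classify([-0.9, 0.1])
```

Here `first` resolves to class A and `second` to the newly added class C, even though nothing was retrained between the two queries.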

Key Applications of MANNs in B2B Sectors

Memory-augmented neural networks unlock capabilities that are valuable in many business-to-business contexts. Here we highlight some key application areas across industries where MANNs (or the ideas behind them) are making an impact:

- Knowledge Management and Customer Support: Businesses often have massive amounts of documentation – manuals, FAQs, product specs, client communications, etc. MANN-based systems can serve as intelligent knowledge bases that understand queries and fetch relevant information from memory. For instance, an AI customer support agent backed by a memory-augmented model can maintain a long conversation history with a customer and recall details from earlier in the dialogue, leading to more personalized and accurate responses. It can also pull in facts from a company’s knowledge base on the fly (using a RAG approach as discussed) to answer technical questions. Unlike a traditional chatbot that might forget context after a few turns, a memory-augmented chatbot can keep important context in an external memory module, effectively remembering the customer’s issue and preferences over the entire session (or even across sessions). This improves customer experience and reduces the need for customers to repeat information. Memory networks were actually used to prototype QA chatbots that treat each user query as a question and the chat history as memory – enabling the bot to answer based on the conversation context. For B2B, this could mean better internal helpdesk bots or client-facing support systems that handle complex, multi-turn problem solving.

- Healthcare Data Analysis: In healthcare, diagnostic decision-making often requires synthesizing a patient’s history, lab results, imaging, and latest symptoms. MANNs are well-suited here because they can hold a patient’s longitudinal data in memory and draw connections over time. For example, a memory-augmented model could store previous lab trends or notes and use them to interpret a new test result. Researchers have noted that MANNs can leverage dynamic memory to cross-reference diverse medical data sources and assist in diagnosis. Imagine an AI that reads a patient’s entire medical record (which could be hundreds of pages) – a standard model might struggle, but a MANN could write important facts (allergies, prior conditions, recent treatments) into memory as it reads, and then use that memory to answer specific questions or detect anomalies. This ability to remember complex patient data could help in personalized treatment recommendations or in flagging if current symptoms match something in the past. Some prototypes in medical research use memory-augmented networks to continuously learn from new patient data while retaining crucial past knowledge, improving as they encounter more cases.

- Financial Modeling and Forecasting: Finance is another domain dealing with sequences and large data streams – e.g., stock price histories, economic indicators, transaction sequences. MANNs can be employed to model these time series with long-term dependencies. A traditional model might only look at recent data due to memory constraints, but a MANN could, say, remember patterns from months or years back if they become relevant again. For instance, a memory-augmented model could store key market events in memory (such as “Fed interest rate change” or “Earnings report shock”) and later use that info when similar conditions occur, leading to better predictive accuracy. In algorithmic trading or risk management, such a model could recall precedents (like how the market reacted to X in the past) to inform current decisions. As a concrete example, a MANN was described to incorporate historical financial data, market trends, and even external signals in its memory module to improve forecasting. Traders and analysts using such AI might get more context-aware forecasts that consider both recent signals and deep historical analogs, which could be a competitive advantage. Moreover, the memory component can be updated with new data on the fly, allowing the model to adapt quickly to regime changes (e.g., a sudden market crash) by writing that event to memory for future reference.

- Supply Chain and Operations Management: Large-scale operations involve complex processes with many steps – think of a supply chain with procurement, manufacturing, distribution, etc. MANNs can help optimize and manage these processes by remembering the state over long sequences and making informed recommendations. For example, in process automation, a MANN-based recommender might guide a user on the next best action by recalling similar situations from memory. In fact, researchers have built a prototype called “DeepProcess” that uses a memory-augmented neural network to support business process execution decisions. It can look at an ongoing workflow (say processing an insurance claim) and suggest the next step, based on learning from historical cases stored in memory. This is essentially a form of case-based reasoning where the MANN’s memory contains traces of past process instances and their outcomes. The memory network can then retrieve relevant past cases to help decide what to do in the current case, thereby improving efficiency and outcomes. Similarly, for supply chain logistics, a MANN could potentially learn routing or scheduling strategies and generalize them. Recall the DNC example of learning public transit navigation – in a business context, that idea could apply to routing delivery trucks or scheduling production runs, where the model learns an algorithmic solution but also can adapt to new scenarios by recalling prior knowledge.

- Strategic Planning and Simulation: In fields like economics, strategic game theory, or even complex multiplayer business negotiations, there’s a need to simulate many steps of interaction. MANNs have been used in game-playing AI (DeepMind’s work hinted at using memory for games like Go or chess). For businesses, this translates to things like market simulation, wargaming, or long-term strategy planning. A MANN could simulate a multi-step scenario (like a competitor’s series of actions and the market responses) by keeping track of the evolving state in memory and exploring various outcomes. Because the model can retain a memory of “what happened if scenario A played out” versus “scenario B,” it can compare and help find optimal strategies. This is somewhat experimental, but it’s not far-fetched that future AI advisors for businesses will include a memory component to evaluate long sequences of hypothetical events.

- Document and Contract Analysis: Legal and compliance departments in enterprises deal with very long documents (contracts, regulations) where consistency and cross-referencing are key. A memory-augmented model could read a long contract and store clauses in memory, then answer questions like “does this contract have a clause about X and what does it say?” by pulling from that memory. Essentially it would treat the long document as its memory and reason over it, which is a more dynamic approach than simple keyword search. This can ensure nothing important is missed across hundreds of pages, assisting lawyers or compliance officers. Early versions of this capability are seen in some AI contract analysis tools that use transformer models with extended context or retrieval (which, again, is akin to memory augmentation).
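The retrieval step that several of these applications rely on can be sketched as follows. The example treats a handful of invented contract clauses as the memory, ranks them against a question with bag-of-words cosine similarity, and assembles a prompt for a generator model. Real systems typically use learned dense embeddings and a vector index instead; the clause texts and function names here are purely illustrative, and the call to a language model is deliberately left out.

```python
import math
import re
from collections import Counter

def bow(text):
    """Bag-of-words vector: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(clauses, question, top_k=1):
    """Return the top_k clauses most similar to the question."""
    q = bow(question)
    ranked = sorted(clauses, key=lambda c: cosine(bow(c), q), reverse=True)
    return ranked[:top_k]

# Invented clauses standing in for a long contract.
clauses = [
    "Termination: either party may terminate this agreement with thirty days written notice.",
    "Confidentiality: each party shall keep the other party's information confidential.",
    "Payment: invoices are due within sixty days of receipt.",
]
question = "When are invoices due?"

context = retrieve(clauses, question)
# The retrieved text would be passed to a generator model along with the question.
prompt = "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question
```

Because the answer is grounded in a retrieved clause, the source of the answer can be shown to the user, which is useful where traceability matters.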

Benefits of MANNs for Businesses

Why should businesses care about memory-augmented neural networks? Here are some of the standout benefits that MANNs can offer in a B2B context:

- Handling Long-Term Dependencies: MANNs excel at tasks where information from far back in the input sequence is relevant to the current output. In business data, context can span long periods (e.g., a customer’s purchase history, or a machine’s sensor readings over months). Traditional models might forget earlier details, but a MANN can remember because of its external memory. This leads to better accuracy in scenarios like forecasting, where events from a year ago might influence today, or in support interactions, where the first thing a customer said is crucial 30 minutes later.

- Enhanced Reasoning and Algorithmic Capabilities: Because they can store and manipulate information like a working memory, MANNs can learn algorithmic or logical tasks that normal neural nets struggle with. This means they can perform multi-step reasoning – for example, following a chain of facts to answer a question, or performing a series of calculations or lookups in a controlled manner. For a business, this could translate to an AI that can troubleshoot a problem by systematically checking possible causes (storing intermediate results in memory), or an AI that can plan a sequence of actions (like scheduling deliveries) while keeping track of constraints in memory. Essentially, MANNs bring a bit of a “thinking component” to neural networks, not just pattern recognition. This improved reasoning can lead to more reliable and insightful AI decisions.

- Flexible Knowledge Storage: The external memory in a MANN is not fixed to the training data in the way weights are. This means a model can be given new information after training by simply writing it to memory, and it can use that information immediately. In a business setting, think of updating an AI system with the latest data or rules without retraining from scratch. For instance, if tax regulations change, a tax advisory AI could be updated by inserting the new rules into its memory module. MANNs can also generalize better to new tasks by separating memory from the core network weights: the weights handle general strategies of how to use memory, while the memory contents can be specific to the task or context. This modularity is beneficial for reusing models across different but related tasks (transfer learning becomes easier when you can swap in different memory contents).

- Improved Generalization and Sample Efficiency: By offloading information to memory, MANNs often avoid overfitting to particular training sequences and can generalize to variations more robustly. Also, as seen in meta-learning cases, they can achieve good performance with fewer examples because they effectively store those examples and interpolate from them. For a company, this means potentially lower data requirements and better performance when encountering new situations that weren’t explicitly in the training set. The model learns a procedure (how to use memory) that can be applied broadly, which is great for dynamic business environments.

- Continuously Learning Systems: MANNs open the door to AI that can learn online, in production. A deployed MANN-based system could keep a memory of important events and gradually accumulate knowledge. Whereas traditional models would typically require an offline retraining to update their knowledge, a MANN might be able to integrate new data on the fly. This is advantageous for scenarios like anomaly detection (remembering novel anomalies), personalized recommendations (remembering user behavior), or adaptive process control (remembering what adjustments worked before). It pushes us closer to lifelong learning AI in the enterprise.

Limitations and Challenges

Despite their promise, Memory-Augmented Neural Networks come with several challenges and limitations that businesses should be aware of:

- Increased Complexity and Compute Cost: MANNs are more complex than standard neural networks because of the additional memory component and the operations to manage it. This complexity often means higher computational and memory requirements during both training and inference. Reading and writing to a large memory can be slow – imagine a database lookup happening at each step of a neural network’s forward pass. For very large memories or long sequences, this can become a bottleneck. In practical terms, deploying a MANN might require more powerful hardware (TPUs/GPUs with larger memory) and careful optimization. Businesses need to consider whether the performance gain from a MANN justifies the infrastructure cost, especially if the application requires real-time responses. There is also a higher engineering complexity; more components can mean more points of failure or bugs in implementation.

- Training Difficulty: Teaching a neural network how to use an external memory is not trivial. The model has to learn to balance between storing too much (which could clutter memory with irrelevant data) and storing too little (which defeats the purpose). It also must learn a sort of strategy: what to write, when to write, when to read, etc., which is a more complicated behavior than typical neural nets learning an input-output mapping. As a result, training MANNs can be unstable or slow. Researchers have reported issues like gradients becoming NaN (not-a-number) or very slow convergence when training NTMs and similar models. It may require extensive hyperparameter tuning, use of techniques like curriculum learning (start with easier tasks or smaller memory and gradually increase difficulty), or even special training algorithms. For businesses, this means longer development cycles and the need for AI experts who understand these architectures. It’s not as straightforward as throwing data at a standard model architecture; one might have to guide the model or monitor its memory usage patterns during training. In short, the stakes are higher when training MANNs – they can achieve more, but you have to get them to learn the right behaviors.

- Scalability of Memory Size: While in theory you can give a MANN a very large memory, in practice scaling to really huge memory (e.g., thousands or millions of slots) is challenging. The controller might struggle to find the needle in the haystack unless the addressing mechanism is very efficient. Also, memory reading/writing typically has at least linear complexity in the size of memory (if not more), so bigger memory = slower operations. There’s ongoing research on this, but for now, there’s a trade-off between memory capacity and speed. If an enterprise application demands an extremely large knowledge base, a pure MANN might not be the best approach yet; a hybrid system that uses classical databases or search engines alongside neural components might be more practical. Additionally, large memory could introduce new failure modes – e.g., if the model starts writing everywhere (thrashing the memory) or can’t decide where to write, it might degrade performance in unpredictable ways.

- Interpretability: Neural networks are often black boxes, and adding an external memory doesn’t automatically make them transparent. Although one can inspect the memory content (since it’s explicit), the stored vectors may not be human-understandable. Sometimes you might catch a glimpse of what the model is storing (for example, in a simple task, maybe it stores a copy of the input sequence), but in complex tasks, it could be encoding info in an abstract way. From a business perspective, this can be a concern for compliance or trust. If a model makes a decision based on something in its memory, can we trace why or what that was? There is active research in memory-augmented models that are more interpretable, or methods to probe the memory (like nearest-neighbor search to see what content is similar to a given query). But it remains a caveat – using MANNs in sensitive applications might raise questions from stakeholders or regulators about how the AI reached a conclusion.

- Limited Availability of Expertise and Tools: MANNs are on the cutting edge, and there aren’t as many off-the-shelf tools or frameworks for them as for, say, standard deep learning. There are some libraries (e.g., MemLab, as an academic project) and research code for NTMs/DNCs, but integrating these into a production pipeline might require bespoke development. The relative novelty means there are fewer engineers experienced in debugging or tuning these systems. A business considering MANNs might need to invest in R&D or upskilling their team. However, this is gradually improving – community examples and open-source implementations are emerging, and concepts from MANNs are trickling into mainstream AI libraries.

- When Not to Use MANNs: It’s worth noting that MANNs are not a silver bullet for all problems. If your task doesn’t actually require long-term memory or complex sequential reasoning, a simpler model might do just as well with less cost. For instance, many NLP tasks are now handled well by Transformers with positional encodings for fairly long context (say 512 tokens or more). If that covers your needs, introducing an external memory might complicate things unnecessarily. The overhead of memory is only justified when you truly need it. Businesses should evaluate the specific problem – e.g., do we really need to remember a 1000-step sequence, or can we get by with a window of 100 steps? If the simpler approach works, it might be more robust and easier to maintain. MANNs shine in the niche where other networks hit a wall.

Future Outlook and Potential

Looking ahead, the potential of Memory-Augmented Neural Networks in B2B applications is vast. As the kinks are worked out and technology advances, we can expect:

- More Seamless Integration with Large-Scale Systems: Future MANNs will likely integrate better with databases, knowledge graphs, and other enterprise data systems (achieving that neuro-symbolic synergy). We might see AI that can learn from structured company data by writing it into a neural memory, effectively creating a bridge between classical data storage and neural reasoning. This could revolutionize how business analytics are done, moving from static reports to AI agents that dynamically leverage company memory to answer questions.

- Specialized Hardware for Differentiable Memory: There is ongoing research into hardware accelerators that can support the kind of random read/write operations MANNs perform, for example content-addressable memory chips or memristor-based memory units that can do associative lookup in hardware. If these become available, they could make memory operations as fast as matrix multiplications are today on GPUs. That would remove a big bottleneck and allow much larger MANNs, enabling things like real-time video analysis with memory or very large-scale economic simulations. For businesses, that means previously infeasible AI tasks might come within reach.

- Better Algorithms for Memory Management: We expect new training techniques and architectures that handle memory more elegantly. Ideas like learnable memory compression (where the model can compress old memories to make room for new ones), memory tagging (where pieces of data in memory have meta-tags to help retrieval), or hierarchical memory (short-term vs long-term) will likely be refined. The goal is to make MANNs more autonomous in managing their knowledge, much like a human brain consolidates important facts to long-term memory and discards irrelevant details. This will improve their scalability and reliability. In a future scenario, a business might have an AI system running for years, accumulating knowledge in its memory – essentially becoming more seasoned and “wise” over time, without forgetting important lessons. Achieving this would mark a big step towards continual learning AI in the enterprise.

- Applications We Can’t Yet Imagine: Just as no one predicted all the killer apps of the internet in the early days, giving AI a powerful memory could lead to novel applications. We might see AI financial analysts that remember every market situation in history and can warn of impending crises by analogy. Or industrial robots that have a memory of all the items they’ve ever handled, enabling them to instantly recognize if something is out of place or a part is defective (because they recall what it should look like). In complex project management, an AI with memory might track thousands of tasks and their interdependencies, foreseeing risks that no human could keep in mind. The combination of memory with creativity (generative models) could also lead to AIs that generate solutions to problems by recombining past knowledge in new ways – a kind of innovation support tool.

- Broader Adoption in B2B Software: As the benefits become clearer, we anticipate MANN concepts being built into B2B software products. For example, future ERP (enterprise resource planning) systems might have AI co-pilots that use memory networks to learn the ins and outs of a particular company (policies, typical procedures, exceptions) and provide guidance or automation tailored to that company. Since the AI can store that company-specific knowledge in memory, it effectively becomes institutional memory in machine form, which is valuable especially when human employees turn over. This could transform corporate training and knowledge transfer – new employees might consult an AI mentor that has “memorized” the expertise of veteran employees (culled from documents, emails, records). While this is somewhat speculative, the building blocks are being laid by current advances in memory-augmented AI.
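To make the hierarchical-memory idea from the list above (short-term vs. long-term storage) a little more concrete, here is a toy sketch. It is an ordinary key-value store in plain Python, not a differentiable memory, and the class name `TwoTierMemory` and its promotion rule (promote an entry once it has been read `promote_after` times) are assumptions made purely for illustration.

```python
from collections import OrderedDict

class TwoTierMemory:
    """Toy short-term/long-term store with an illustrative promotion rule."""

    def __init__(self, short_capacity=3, promote_after=2):
        self.short_capacity = short_capacity
        self.promote_after = promote_after
        self.short = OrderedDict()  # key -> value, oldest entry first
        self.long = {}              # promoted entries, never evicted here
        self.read_counts = {}       # reads per short-term key

    def write(self, key, value):
        if key in self.long:
            self.long[key] = value
            return
        self.short[key] = value
        self.short.move_to_end(key)  # a rewrite counts as fresh
        self.read_counts.setdefault(key, 0)
        # Forget the oldest short-term entries once capacity is exceeded.
        while len(self.short) > self.short_capacity:
            evicted_key, _ = self.short.popitem(last=False)
            self.read_counts.pop(evicted_key, None)

    def read(self, key):
        if key in self.long:
            return self.long[key]
        if key not in self.short:
            return None
        self.read_counts[key] += 1
        value = self.short[key]
        # Consolidate entries that keep being recalled.
        if self.read_counts[key] >= self.promote_after:
            self.long[key] = self.short.pop(key)
            del self.read_counts[key]
        return value

mem = TwoTierMemory(short_capacity=2, promote_after=2)
mem.write("pricing_rule", "10% discount over 100 units")
mem.read("pricing_rule")
mem.read("pricing_rule")   # second read promotes it to long-term
mem.write("temp_a", 1)
mem.write("temp_b", 2)
mem.write("temp_c", 3)     # short-term is full, so temp_a is evicted
```

In a learned system the "what to keep" decision would be trained rather than hard-coded, but the same split between a small, fast-changing store and a stable one is the underlying idea.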

Best Practices for Implementing MANNs in Business

If you’re considering implementing a Memory-Augmented Neural Network for a business application, here are some best practices and tips to keep in mind:

- 1. Ensure the Use Case Demands Memory: Carefully assess whether your problem genuinely requires the long-term memory and complex reasoning that MANNs provide. If the task can be solved with simpler models (perhaps using moderate sequence lengths or standard attention), start there. Only opt for a MANN if you identify clear benefits like needing to handle extremely long sequences, recall specific rare events, or perform algorithmic steps. MANNs shine in niches like long-context NLP, meta-learning, or sequential decision making with long horizons. Having a well-defined requirement (e.g., “model must recall events from 1000 steps ago”) will guide your design and justify the added complexity.

- 2. Leverage Existing Architectures and Libraries: You don’t have to build a MANN from scratch. The research community has open-sourced implementations of NTMs, DNCs, memory networks, etc. Use these as a starting point. Libraries like MemLab (from Stanford) provide tools for building and training memory-augmented networks, and while they might have a learning curve, they are designed for these architectures. Similarly, most deep learning frameworks have primitives for attention mechanisms that you can repurpose for memory addressing. Starting from a proven implementation can save months of development and help avoid subtle bugs in the read/write logic. If your use case is NLP-centric, consider whether a retrieval-based approach (using something like the RAG models in Hugging Face Transformers) could achieve the effect of a MANN with less custom code, essentially using a search index as memory. It might not be as elegant as a learned memory, but it’s effective and there are tools readily available.

- 3. Design the Memory with the Task in Mind: Decide what form of memory is appropriate – e.g., a fixed-size matrix vs. a growing list, or content-addressable vs. position-addressable. For instance, if your data is naturally key-value (say user ID -> user profile data), a key-value memory might fit better. If the task requires sequential access (like reading a story), maybe an array-like memory with location-based addressing works. You might also consider memory size and slot size (vector dimensions) as hyperparameters. A good practice is to start small (a smaller memory size) to ensure the model learns to use it properly, then scale up as needed. Think about whether you need one memory for everything or perhaps multiple memory modules (e.g., one for recent info, one for long-term info). Some advanced implementations use multiple memory heads for different purposes – this could help if your task has, say, two types of information that should not mix. The key is aligning the memory design with the knowledge structure of your domain.

- 4. Monitor and Guide Training Closely: When training the MANN, pay close attention to how it’s using the memory. It’s very useful to include logging or periodic evaluation of memory contents. For example, for a toy problem, you might inspect if the model is indeed writing the expected info (like copying the sequence in an NTM copy task). For real tasks, you can devise probes – maybe feed a known sequence and check if a specific item ends up in memory, or if the read heads attend to relevant memory when producing output. If you find the model isn’t using memory as intended, you might need to adjust the training regime. Techniques that can help include:

- Curriculum Learning: start with shorter sequences or fewer distractors, so the model has an easier time learning the concept of using memory. Gradually increase difficulty (longer sequences, more data) once it shows basic proficiency.

- Auxiliary Losses: sometimes researchers add extra loss terms to encourage proper memory usage. For instance, an autoencoder loss on memory content (forcing the memory to store reconstructable info) or a sparsity penalty to not write too often. Use these carefully to nudge behavior without crippling the model’s freedom.

- Pre-training on Synthetic Tasks: If your end goal is complex, it might help to first train the model on a simpler but related task that exercises the memory. E.g., before training a financial prediction MANN on real market data, pre-train it on a synthetic sequence memorization or pattern copy task to initialize the memory mechanism.

- Gradient Clipping and Smaller Learning Rates: These can prevent the training from diverging, which is somewhat common in early epochs as the model tries to figure out memory usage.

Remember, training a MANN might take longer to converge. Patience and careful tuning are part of the process – you are essentially teaching the network to “think” in steps, which is more involved than pattern recognition.

- 5. Manage Computational Load: As you implement, be mindful of the computation and memory overhead. If you use a very large memory, choose batch sizes and parallelism settings so that the model and its memory fit in GPU memory. It may be beneficial to implement a sparse update (only a subset of memory accessed per step) if possible, or to cache the results of frequent memory accesses. Profiling the model to find bottlenecks is a good practice – e.g., is most time spent in the attention over memory? If so, consider limiting the attention width or using approximate nearest neighbor search for large memory. During deployment, you might also consider whether the memory can be pruned or reset periodically if it grows over time. Some applications might allow a sliding window memory (forgetting old entries after a while) – implement such logic if appropriate to keep inference times consistent.

- 6. Plan for Monitoring and Maintenance: Once deployed, a MANN-based system might require a different monitoring approach than a standard model. You might want to track metrics like how often the model is writing to memory, or the distribution of attention weights, to detect drift or malfunction. If the model has an evolving memory (e.g., online learning scenario), you should have a strategy for when to clear or compress memory to avoid it accumulating noise or outdated info. In addition, because these models are complex, having fallback options is wise – for example, if the MANN isn’t confident (perhaps measured by low attention focus or high uncertainty), the system could default to a simpler rule-based outcome or ask for human input. This kind of fail-safe is important in business-critical applications. Maintenance-wise, be prepared to retrain or fine-tune the model as new types of data come in. The separate memory might make the model more adaptable, but the controller’s policy might still need occasional updates if the nature of data shifts a lot.

- 7. Keep It Interpretable (if possible): Try to maintain some level of interpretability. One approach is to label memory slots or at least have a way to decode them. For example, if your memory stores vectors that correspond to certain known items (like a particular event or record), keep a dictionary to map memory vectors back to nearest human-readable content. This can help in debugging and in explaining the model’s actions to stakeholders. Another tip: use attention visualization – heatmaps of what memory positions or keys the model attended to for a given output can be insightful. If you show that to a domain expert, they might validate that “yes, those are the relevant pieces of info for that decision” or catch if the model is focusing on irrelevant info. Building trust in the model’s use of memory is important for acceptance in a business setting.
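Several of the practices above (content-based addressing in 3, the low-attention-focus fallback in 6, and attention inspection in 7) rest on the same quantity: the weights a read head puts on each memory slot. The sketch below is one possible way to compute and use them, written in plain Python under stated assumptions. The names `content_read`, `sharpness`, and `should_fall_back`, and the 0.8 entropy threshold, are illustrative choices, not values from any published architecture.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 0.0 if norm_a == 0.0 or norm_b == 0.0 else dot / (norm_a * norm_b)

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def content_read(memory, key, sharpness=5.0):
    """Soft read: weights = softmax(sharpness * cosine(key, slot))."""
    weights = softmax([sharpness * cosine(key, slot) for slot in memory])
    width = len(memory[0])
    read_vector = [sum(w * slot[j] for w, slot in zip(weights, memory))
                   for j in range(width)]
    return read_vector, weights

def attention_entropy(weights):
    return -sum(w * math.log(w) for w in weights if w > 0.0)

def should_fall_back(weights, max_fraction=0.8):
    """True when attention is nearly uniform, i.e. the read is unfocused."""
    max_entropy = math.log(len(weights))  # entropy of a uniform distribution
    return attention_entropy(weights) > max_fraction * max_entropy

memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# A key that matches one slot gives a sharply peaked read.
_, focused_weights = content_read(memory, [1.0, 0.0, 0.0])
# A key equally similar to every slot gives a uniform, uninformative read.
_, diffuse_weights = content_read(memory, [1.0, 1.0, 1.0])

print(should_fall_back(focused_weights))
print(should_fall_back(diffuse_weights))
```

The same `weights` list is what you would plot as an attention heatmap for a domain expert, and the entropy check is one simple candidate for deciding when to hand off to a rule-based path or a human.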

Implementing a MANN is certainly an advanced project, but following these best practices can make it more manageable. Start with clear objectives, use existing resources, and iterate carefully. With time, you’ll harness the power of memory in neural networks to solve problems that were previously out of reach for AI.
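Two of the training techniques from practice 4, curriculum learning and gradient clipping, are simple enough to show directly. The sketch below is framework-free Python meant only to illustrate the logic. The function names, the 0.9 accuracy threshold, and the step size are assumptions. In practice you would use your framework's own utilities (PyTorch, for instance, provides `torch.nn.utils.clip_grad_norm_`).

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm or total == 0.0:
        return list(grads)
    scale = max_norm / total
    return [g * scale for g in grads]

def next_sequence_length(current, recent_accuracy,
                         threshold=0.9, step=5, maximum=100):
    """Curriculum rule: lengthen sequences only once accuracy clears the threshold."""
    if recent_accuracy >= threshold:
        return min(current + step, maximum)
    return current

# A gradient with norm 5.0 is scaled down to norm 1.0.
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)

# The model moves on to longer sequences only after mastering the current length.
length = 10
for accuracy in [0.60, 0.85, 0.93, 0.91]:
    length = next_sequence_length(length, accuracy)
```

Keeping the curriculum rule explicit like this also makes it easy to log when the model advanced, which helps when diagnosing a run that stalls.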

Conclusion

Memory-Augmented Neural Networks bring a new dimension to what AI systems can do by giving them the ability to remember and reason more like humans. In global B2B applications, this capability can be transformative – enabling AI to tackle tasks involving extensive context, historical data, or complex multi-step decisions that traditional models would stumble on. We’ve explored how MANNs work, their core architectures, the latest developments, and how they can be applied in domains from customer service to finance to operations. We also discussed the tangible benefits for businesses, as well as the challenges and best practices to keep in mind.

In summary, MANNs are an exciting technology at the frontier of AI. They are bridging the gap between pattern recognition and true sequential reasoning, allowing enterprises to build smarter systems that learn from the past to make better decisions in the present. While they come with added complexity, the potential rewards – in accuracy, adaptability, and capability – are significant. As research continues and the tools improve, memory-augmented networks could very well become a staple of the AI solutions landscape, especially in environments that demand both intelligence and a good memory. Businesses that invest in understanding and leveraging this technology may gain an edge by unlocking insights and automation that lesser systems simply couldn’t achieve. The journey of implementing MANNs may be challenging, but it aligns with a clear trend: the future of AI isn’t just about computing more, it’s about remembering more – and using that memory to drive smarter outcomes.
