One remarkable byproduct of the AI proliferation boom brought about by ChatGPT is the sheer amount of AI-generated content out there on the internet. Recently I've needed to identify it at scale, so I decided to tackle two problems at once.

The AWS Community Builder Program's Machine Learning Team recently started a Hackathon around Generative AI and the Hugging Face Transformers Library, so this could be a fun experiment. If you'd like to know more about the program, check my other blog post.

So, I decided to build an AI Content Detection solution, and document my journey.

📦 The Solution

The goal was to build an API that could receive text and analyze whether it was likely written by a real human or generated by an AI.

Additionally, I wanted to leverage a serverless architecture so I could easily embed it into a future event-driven solution, and to scale it effectively with minimal cost.

For the Hackathon, I also wanted to provide it as a synchronous API that could return a result in a reasonable period of time, so single-second latency was important.

With that, let's get into the journey of building this thing:

🤖 Part 1) Exploring the transformers library

The first step was to learn how the library actually works and use it in a simple Python script. It was... amazingly simple to get working. How simple?

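```python
from transformers import pipeline

# Loads the tokenizer and model from the Hugging Face Hub (cached after the first run)
pipe = pipeline("text-classification", model="roberta-base-openai-detector")

# Returns a list with one {"label": ..., "score": ...} dict per input text
print(pipe("Hello! I'm ChatGPT, a large language model trained by OpenAI. I'm here to assist you with any questions or information you may need. How can I help you today?"))
```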

...I gotta say, I'm impressed by how the transformers library abstracts away all the tokenization, inference, and PyTorch details. Once the libraries are installed, it's a breeze to work with. Success!
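Roughly speaking, a `text-classification` pipeline tokenizes the input, runs the model's forward pass, and turns the resulting logits into a label and a score. A minimal sketch of that last step in plain Python, assuming a two-class model; the logits and the label order below are illustrative, not read from the real model's config:

```python
import math
from typing import Dict, Sequence


def logits_to_prediction(logits: Sequence[float], labels: Sequence[str]) -> Dict[str, object]:
    """Softmax over the logits, then return the top label and its probability."""
    # Subtract the largest logit first so math.exp cannot overflow
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Index of the most probable class (first one wins on a tie)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": labels[best], "score": probs[best]}


# Hypothetical logits for a two-class detector; label names are illustrative
print(logits_to_prediction([2.1, -1.3], ["Fake", "Real"]))
```

Doing this by hand would mean calling `AutoTokenizer` and `AutoModelForSequenceClassification` yourself to get the logits, which is exactly the plumbing the pipeline hides.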

🔨 Part 2) Getting transformers into Lambda

AWS Lambda natively handles many different workloads well. Machine learning inference isn't one of them... With the libraries and model totalling several gigabytes of dependencies, we can't exactly just toss it all into a ZIP file. At that size, even Lambda Layers aren't going to cut it, since a ZIP deployment, layers included, is capped at 250 MB unzipped.

First I thought I could use some sneaky-jutsu by building a ZIP file of the packages which I could host on S3. At initialization, I'd use the increased ephemeral storage capacity to download and unzip the package.
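A rough sketch of that S3 approach, not the original code: the `/tmp` paths are hypothetical, the bucket and key would be supplied by you, and `bootstrap` is meant to be called at module scope so it runs once per execution environment.

```python
import os
import sys
import zipfile

# Hypothetical locations inside Lambda's ephemeral /tmp storage
PACKAGE_DIR = "/tmp/packages"
BUNDLE_PATH = "/tmp/bundle.zip"


def download_bundle(bucket: str, key: str, dest: str = BUNDLE_PATH) -> str:
    """Fetch the zipped dependencies from S3."""
    import boto3  # ships with the Lambda Python runtime; imported lazily here

    boto3.client("s3").download_file(bucket, key, dest)
    return dest


def extract_and_register(zip_path: str, target_dir: str = PACKAGE_DIR) -> str:
    """Unzip the bundle, delete the archive to free space, and make its packages importable."""
    os.makedirs(target_dir, exist_ok=True)
    with zipfile.ZipFile(zip_path) as bundle:
        bundle.extractall(target_dir)
    os.remove(zip_path)
    # Prepend so the bundled packages take priority over anything already on sys.path
    if target_dir not in sys.path:
        sys.path.insert(0, target_dir)
    return target_dir


def bootstrap(bucket: str, key: str) -> None:
    """Meant to run at module scope, i.e. once per execution environment during init."""
    extract_and_register(download_bundle(bucket, key))
```

The `extractall` call is where the multi-gigabyte unzip time gets spent, on every cold start.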

But that didn't work quite so well. Between some issues with the packages and the amount of time it took to unzip... it just wasn't worth it. So I refactored to host the packages and model files on EFS instead.
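With EFS, Lambda mounts the file system at a local path that must start with `/mnt/`, so the function only needs to point Python and the Hugging Face cache at it before importing `transformers`. A minimal sketch, assuming a hypothetical `/mnt/ml` mount with packages installed under `packages/` and the model cache under `models/`:

```python
import os
import sys
from typing import Tuple

# Hypothetical mount path; Lambda requires EFS local mount paths to start with /mnt/
EFS_ROOT = os.environ.get("EFS_ROOT", "/mnt/ml")


def configure_efs_paths(root: str = EFS_ROOT) -> Tuple[str, str]:
    """Point Python and the Hugging Face cache at EFS.

    Call this before importing transformers: the cache location is read at import time.
    """
    packages_dir = os.path.join(root, "packages")  # hypothetical: pip install --target here
    models_dir = os.path.join(root, "models")  # hypothetical: Hugging Face cache root
    if packages_dir not in sys.path:
        sys.path.insert(0, packages_dir)
    # HF_HOME sets the root of the Hugging Face cache, so model files are
    # stored on, and reused from, the shared file system
    os.environ["HF_HOME"] = models_dir
    return packages_dir, models_dir
```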

This did mean I needed to build a whole VPC to come along for the ride, since Lambda can only mount EFS from inside a VPC. In practice that was mostly a bunch of extra resources in the SAM template, plus some minor costs like a NAT Gateway. Still, building a whole VPC just to make a Lambda function run better isn't ideal.

There's always some reason to keep VPCs...

⚡ Part 3) Orchestration with Step Functions

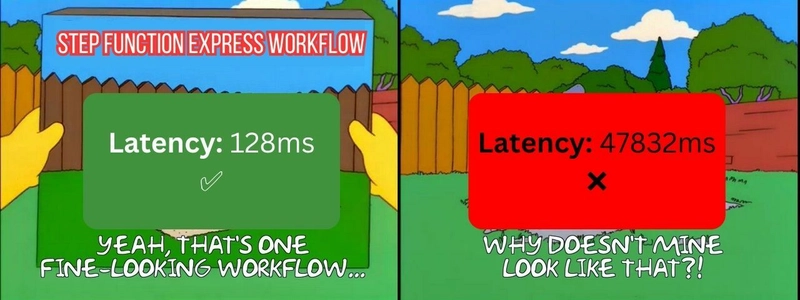

Since I want to extend the functionality further, I chose to build this around a Step Functions Express Workflow to orchestrate the solution.

Okay, fair enough, that's not very interesting. Instead, let's make this smarter by adding some caching functionality. API Gateway does have caching, but we want to do something more ambitious than caching simple strings.
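One way to key such a cache is to hash a normalized copy of the input text, so repeated requests for the same content land on the same DynamoDB item. A sketch under assumptions: the attribute names (`pk`, `result`, `expires_at`) and the one-week TTL are hypothetical, and the item uses DynamoDB's low-level attribute-value format, which is what both boto3's client-level `put_item` and the Step Functions DynamoDB integration expect.

```python
import hashlib
import json
import time
import unicodedata
from typing import Optional

CACHE_TTL_SECONDS = 7 * 24 * 60 * 60  # hypothetical: keep results for a week


def cache_key(text: str) -> str:
    """SHA-256 of the text after Unicode normalization and whitespace collapsing."""
    normalized = " ".join(unicodedata.normalize("NFC", text).split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def cache_item(text: str, result: dict, now: Optional[int] = None) -> dict:
    """Build a DynamoDB item in low-level attribute-value format."""
    now = int(time.time()) if now is None else now
    return {
        "pk": {"S": cache_key(text)},
        # Stored as a JSON string so scores don't need converting to DynamoDB numbers
        "result": {"S": json.dumps(result)},
        # Epoch seconds, usable as the table's TTL attribute
        "expires_at": {"N": str(now + CACHE_TTL_SECONDS)},
    }
```

Pointing the table's TTL setting at `expires_at` lets DynamoDB expire stale results on its own.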

Keeping the individual Lambda functions short and single-purpose also makes it far easier to rip out components and replace them. And with Step Functions' native AWS SDK integrations, working with DynamoDB is incredibly easy too.

Getting API Gateway to run the workflow synchronously doesn't integrate quite as smoothly, but one of the patterns in the Serverless Land Pattern Collection solved that.
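Under the hood, that kind of integration has API Gateway call the Step Functions `StartSyncExecution` action, which only works with Express Workflows and returns the workflow's result in the response. The same call through boto3 is handy for testing the state machine without API Gateway. A sketch, where the state machine ARN and the payload shape are placeholders:

```python
import json
from typing import Any, Dict


def run_sync(sfn_client: Any, state_machine_arn: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Start an Express Workflow synchronously and return its parsed output."""
    response = sfn_client.start_sync_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(payload),
    )
    # status is SUCCEEDED, FAILED or TIMED_OUT; error/cause are only present on failure
    if response["status"] != "SUCCEEDED":
        raise RuntimeError(
            f"{response['status']}: {response.get('error', '')} {response.get('cause', '')}".strip()
        )
    return json.loads(response["output"])


# Usage (needs AWS credentials; the ARN and payload are placeholders):
# import boto3
# result = run_sync(boto3.client("stepfunctions"), "arn:aws:states:...", {"text": "Hello!"})
```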

🌐 Part 4) Downloading whole pages

Analyzing individual strings is nice and all, but let's make this thing more powerful - I want to be able to give it a URL and let it go do its thing. So we just get requests to download the page, bs4 to strip it down to the bones, and voilà, we have a page! Easy days...

Umm, okay - maybe this is a bit more complicated after all.

Webpages have a lot of elements, and not all of them are relevant. After some poking, I stripped it back to just the contents of the <article> tags. This could be extended to support custom targeting via request parameters, but I didn't have a particular need for that here. But there are other problems.
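The fetch-and-extract step can be sketched with the standard library alone. Note that this swaps `requests` for `urllib.request` and bs4 for `html.parser` to stay dependency-free; with bs4 the equivalent is a loop over `soup.find_all("article")`. The User-Agent string is a placeholder.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import Request, urlopen


class ArticleTextParser(HTMLParser):
    """Collects text nodes inside <article> elements, skipping <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.article_depth = 0  # > 0 while inside (possibly nested) <article> tags
        self.skip_depth = 0  # > 0 while inside <script>/<style>
        self.lines: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "article":
            self.article_depth += 1
        elif tag in self.SKIPPED:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == "article" and self.article_depth:
            self.article_depth -= 1
        elif tag in self.SKIPPED and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.article_depth and not self.skip_depth:
            self.lines.append(text)  # one text node per line, ready for line-based chunking


def extract_article_text(html: str) -> str:
    parser = ArticleTextParser()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)


def fetch_article_text(url: str, timeout: float = 10.0) -> str:
    # Placeholder User-Agent; some sites may reject urllib's default one
    request = Request(url, headers={"User-Agent": "ai-content-detector-sketch"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return extract_article_text(response.read().decode(charset, errors="replace"))
```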

The model can only handle up to 512 tokens at once, which is a bit of a challenge. Splitting the payload into reasonably sized chunks that still produce meaningful results was surprisingly hard. No joke, I rewrote the damn thing about a dozen times, and it made me deeply question my skills.

Eventually, I came up with a solution that mostly works. Using the RoBERTa tokenizer and breaking it down per line works pretty reliably (don't check the TODO I left behind in the code).
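A line-based chunker in that spirit, as a sketch rather than the repository's code: it greedily packs lines into chunks under a token budget, falls back to word-level splits for over-long lines, and takes the token counter as a parameter. With the real tokenizer, that counter could be `lambda s: len(tokenizer.encode(s, add_special_tokens=False))`; the budget of 510 leaves room for RoBERTa's two special tokens. Counts are approximate, since tokenizing lines separately isn't exactly the same as tokenizing the joined chunk. The token-weighted average at the end is one possible way to combine per-chunk scores, not necessarily the one used here.

```python
from typing import Callable, List, Sequence, Tuple

# RoBERTa accepts 512 tokens including <s> and </s>, leaving 510 for content
TOKEN_BUDGET = 510


def _split_long_line(line: str, count_tokens: Callable[[str], int], budget: int) -> List[str]:
    """Greedily split an over-long line on word boundaries (a single huge word is left as-is)."""
    pieces: List[str] = []
    current: List[str] = []
    for word in line.split():
        if current and count_tokens(" ".join(current + [word])) > budget:
            pieces.append(" ".join(current))
            current = [word]
        else:
            current.append(word)
    if current:
        pieces.append(" ".join(current))
    return pieces


def chunk_by_lines(text: str, count_tokens: Callable[[str], int], budget: int = TOKEN_BUDGET) -> List[str]:
    """Pack whole lines into chunks whose approximate token count stays within budget."""
    chunks: List[str] = []
    current: List[str] = []
    used = 0
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        pieces = _split_long_line(line, count_tokens, budget) if count_tokens(line) > budget else [line]
        for piece in pieces:
            n = count_tokens(piece)
            sep = 1 if current else 0  # allow one token for the joining newline
            if current and used + sep + n > budget:
                chunks.append("\n".join(current))
                current, used, sep = [], 0, 0
            current.append(piece)
            used += sep + n
    if current:
        chunks.append("\n".join(current))
    return chunks


def weighted_score(results: Sequence[Tuple[float, int]]) -> float:
    """Token-weighted mean of per-chunk scores, given (score, token_count) pairs."""
    total = sum(n for _, n in results)
    return sum(score * n for score, n in results) / total if total else 0.0
```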

This was also my first time using Amazon CodeWhisperer on a full project, and it was a helpful little thing!

👀 Part 5) Observability & Optimization

So, everything's done and working now, right?! Sure!

Except for one problem... It takes quite a while to run. Like, stupidly long execution times, even for simple workloads.

In my eventual use case, this is minor since it'll run asynchronously and can take as long as it pleases. But that's not so great for our synchronous demo API. So it's time to enable AWS X-Ray to run distributed tracing over the whole thing.

No surprise, but our detection function is a pure chonk (technical term), and slow as hell - especially in initialization. The invocation itself is actually relatively quick.
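That split between init and invocation matters. Lambda runs module-scope code once per execution environment, during the init phase, and Provisioned Concurrency runs that phase ahead of time. So the model should load at module scope rather than lazily on the first request. A sketch of that structure, where the `text` event field is hypothetical and the loader is injectable so the logic can be exercised without `transformers` installed:

```python
import os
import time
from typing import Callable, Optional

_DETECTOR: Optional[Callable] = None  # survives across warm invocations in one environment


def _load_pipeline() -> Callable:
    # Imported here so this module can be loaded without transformers (e.g. in tests)
    from transformers import pipeline

    return pipeline("text-classification", model="roberta-base-openai-detector")


def init(loader: Callable[[], Callable] = _load_pipeline) -> Callable:
    """Load the model once per execution environment and log how long it took."""
    global _DETECTOR
    if _DETECTOR is None:
        start = time.perf_counter()
        _DETECTOR = loader()
        print(f"model loaded in {time.perf_counter() - start:.2f}s")
    return _DETECTOR


# AWS_LAMBDA_FUNCTION_NAME is set by the Lambda runtime, so this only runs there,
# at module scope, i.e. during the init phase
if os.environ.get("AWS_LAMBDA_FUNCTION_NAME"):
    init()


def handler(event, context):
    detector = init()  # a no-op once the model has been loaded
    return {"result": detector(event["text"])}
```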

There are many ways to tackle this problem. After a few hours looking at the underlying code, tuning the resource allocation, and exploring the possibility of implementing my own snapshot system (roll on SnapStart for Lambda), I settled on one solution...

Provisioned Concurrency means AWS keeps a configured number of execution environments initialized and ready for invocations, bypassing the slow start times. With Reserved Concurrency setting an upper limit, we shouldn't hit any bottlenecks caused by long init times.
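In a SAM template these map to the `AutoPublishAlias`, `ProvisionedConcurrencyConfig` and `ReservedConcurrentExecutions` function properties. The equivalent boto3 calls are sketched below with a placeholder function name, alias and numbers. Provisioned Concurrency has to target a published version or alias, and it can't exceed the function's reserved concurrency when one is set.

```python
from typing import Any


def configure_concurrency(lambda_client: Any, function_name: str, alias: str,
                          provisioned: int, reserved: int) -> None:
    """Cap the function with Reserved Concurrency and pre-warm an alias with Provisioned Concurrency."""
    if provisioned > reserved:
        raise ValueError("provisioned concurrency can't exceed the reserved concurrency limit")
    # Upper limit on concurrent executions for the whole function
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
    # Pre-initialized environments; the qualifier must be a published version or alias
    lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
        ProvisionedConcurrentExecutions=provisioned,
    )


# Usage with placeholder values (needs AWS credentials):
# import boto3
# configure_concurrency(boto3.client("lambda"), "detector-function", "live", provisioned=2, reserved=5)
```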

This does come with a much higher cost, but in this case it's a worthwhile trade-off. Eventually, Lambda SnapStart for Python would let me work around this easily, since this is a stateless workload, but for now I'll work with what I have.

💪 Try it yourself!

The API is available for use at the path below!

https://hackathon.api.ssennett.net/run

If you'd like to try it out yourself (including the curl/PowerShell commands), or even fork your own copy, check out the code on GitHub under my serverless-ai-content-detector repository.

✨ Building a Custom Model

The roberta-base-openai-detector model isn't infallible, which its model card makes explicitly clear. It was also trained on outputs from GPT-2, which, while a technological marvel, is also old as hell at this point, whereas the ubiquitous ChatGPT runs on GPT-3.5 and GPT-4.

I did try building my own model on RoBERTa using Common Crawl and the open-source Falcon-40B model, but it just wasn't reliable enough to warrant implementation. Not for lack of trying though:

Thank goodness for free AWS credits 😅

🎉 Conclusion

In the end, here's a high-level view of the solution I ended up with:

This was a really fun project to build. The Hugging Face Transformers library was surprisingly easy to use, and once I got over the hurdles, it was a breeze to use with native AWS services.

Nothing will ever detect AI-generated content with complete reliability - that's a fact of the modern world. But this kind of solution can surface signals worth investigating.

Let me know in the comments if you end up trying it out, and how accurate the prediction was!
