A couple months ago I was scrolling through my Twitter feed and came across this little gem:

> Introducing the #CloudResumeChallenge. I'm volunteering my network to help you get your first job in the cloud. But I can only share a certain kind of resume. Thread -> forrestbrazeal.com/2020/04/23/the… (27 Apr 2020)

At the time, I was studying for an AWS certification, and given my time constraints, I filed the Cloud Resume Challenge away in my brain as "a cool idea, but no thanks." After I passed the exam in early July, I started thinking about the challenge again. Taking a test is one thing; actually doing something with that knowledge is another. So, with less than a month left before the challenge deadline, armed with a lot of theoretical knowledge and very little coding experience, I said, "Let's go!"

The Requirements

The requirements of the challenge were as follows:

- Get an AWS Certification (Done!)

- Create a resume website using HTML

- Use CSS to style the site

- Host the site using AWS S3

- Use HTTPS for security via an AWS CloudFront distribution

- Buy a custom domain name and set up the DNS using AWS Route 53

- Write some JavaScript to create a hit counter for the site

- Set up a DynamoDB database to store the hit count

- Build an API & an AWS Lambda function that will communicate between the website and the database

- Write the Lambda function in Python

- Write and implement Python tests

- Deploy the stack by using infrastructure-as-code, not by clicking around in the AWS console

- Use source control for the site via GitHub

- Implement automated CI/CD for the front end

- Implement automated CI/CD for the back end

- Write a blog post about the experience

Getting Started

The first few steps were relatively easy. Since I had learned HTML/CSS long ago, formatting my resume and getting the initial index.html and CSS ready took less than a day. In the interest of time, and because HTML/CSS isn't the main focus of this project, I found a nice Bootstrap template for my site and modified it as needed.

Next up was buying my domain name, configuring IAM roles and permissions, creating an AWS S3 bucket to host the files, creating a CloudFront distribution to secure and distribute the web traffic, and getting Route 53 DNS configured correctly. I had done all of this many times before while preparing for the certification, so it went smoothly as well.

Enter SAM

I decided to skip ahead a bit and work on deploying the serverless resources I would need for the back end of the site. I wanted to try something I hadn't used before, so I started researching the AWS Serverless Application Model (SAM), which uses AWS CloudFormation to deploy resource stacks as infrastructure-as-code. While I was going through the official AWS SAM documentation, Chris Nagy came in clutch with his blog post "Get Your Foot In The Door with SAM." Seriously folks, this is exactly the kind of documentation and content that will inspire and motivate cloud newbies. Once I went through his tutorial, it all made perfect sense. Within a day or two, I had not only built my first stack with code and the AWS CLI, but had also blown away all the work I had previously done on the front end and rebuilt it properly as part of the stack.

Once I got the stack started with SAM, I played around with it for a few days. I added a DynamoDB table, made changes, deleted resources, and learned how to troubleshoot errors; then I deleted the entire stack and rebuilt it from scratch. The AWS documentation was extremely helpful here: whenever I found something I needed to change in the AWS console, I would look up how to do it in code instead. By the end, I was rarely looking at the console at all. Being able to save my configuration as code, version it, and build, modify, or delete resources so quickly and easily was my favorite part of this challenge. I absolutely fell in love with the wizardry of infrastructure-as-code with SAM/CloudFormation, and I can't wait to do more with it.

Doing Hard Things

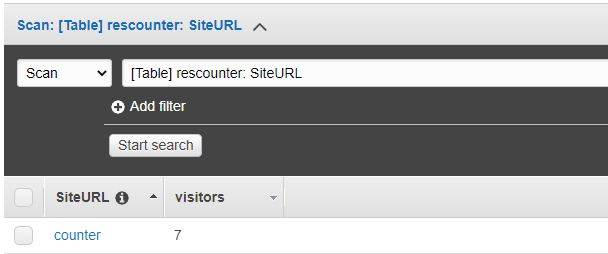

With the wind in my sails and seeing everything starting to come together, it was time to tackle the more difficult tasks. I diagrammed out how the API/Lambda function/database pieces would flow, broke it down into small chunks and worked backwards, starting with DynamoDB. I decided to write my code to take advantage of DynamoDB's atomic counter feature to keep track of the number of visits to the site, and I set up a simple partition key and schema on the table because I only needed to track that one item.
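The atomic counter idea can be sketched in Python with boto3's `update_item` and an `ADD` update expression. This is a generic illustration, not the author's Lambda code: the partition key `id`, the item key `visitors`, and the attribute `visit_count` are hypothetical names, and `table` is assumed to be a boto3 DynamoDB `Table` resource.

```python
def increment_visit_count(table, counter_id: str = "visitors") -> int:
    """Atomically add 1 to the counter item and return the new total.

    `table` is assumed to be a boto3 DynamoDB Table resource. The key name
    "id" and attribute name "visit_count" are hypothetical.
    """
    response = table.update_item(
        Key={"id": counter_id},
        # ADD treats a missing attribute as 0 and increments it server-side,
        # so concurrent requests can't overwrite each other's updates.
        UpdateExpression="ADD visit_count :inc",
        ExpressionAttributeValues={":inc": 1},
        # Ask DynamoDB to return only the attributes this update changed.
        ReturnValues="UPDATED_NEW",
    )
    # boto3 returns DynamoDB numbers as decimal.Decimal, so convert to int.
    return int(response["Attributes"]["visit_count"])
```

In a Lambda function, `table` would typically come from something like `boto3.resource("dynamodb").Table("visitor-count")`, where the table name is again hypothetical. Because the increment happens inside DynamoDB, two simultaneous visitors both get counted, which a read-then-write approach can't guarantee.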

I eventually figured out how to code the Lambda function thanks to some Python tutorials, a helpful person on Stack Overflow, and a LOT of experimentation. This was easily the most frustrating part of the challenge because of my lack of Python experience, but it was also where I learned the most and where I got my biggest victory in the end. Sadly, I can't show you exactly what the code looks like since others are still working on the challenge, but trust me, under the pic above there was a beautiful snippet of Python code. It was talking to the database and counting, but I wasn’t finished yet.

Once I had the API, Lambda, and DynamoDB working together, I needed to write some JavaScript on the front end to call the API. By this point I was more in a coding mindset, so this went fairly quickly until I hit the dreaded Cross-Origin Resource Sharing (CORS) error. Fortunately, I was using a Lambda proxy integration in my API, and after some research I learned that I could return the appropriate CORS headers from the Lambda function itself. Such an elegant solution for such a wicked little stumbling block. Just like that, I had a working hit counter!
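With a Lambda proxy integration, API Gateway passes the function's return value straight back to the browser, which is why the CORS header can live in the function. Below is a minimal, hypothetical handler: the response shape (`statusCode`, `headers`, a string `body`) follows the proxy integration format, while restricting the origin to the site's own URL and the placeholder count are assumptions made for illustration.

```python
import json

# Assumption: only the resume site itself should be allowed to call the API.
ALLOWED_ORIGIN = "https://stacystipes.com"


def build_proxy_response(status_code: int, body: dict) -> dict:
    """Shape a response for an API Gateway Lambda proxy integration."""
    return {
        "statusCode": status_code,
        "headers": {
            # The header the browser checks before letting the page's
            # JavaScript read the response.
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
            "Content-Type": "application/json",
        },
        # Proxy integrations expect the body as a string, not a dict.
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    # Placeholder: a real handler would update and read the DynamoDB
    # counter here; 0 keeps this sketch runnable on its own.
    count = 0
    return build_proxy_response(200, {"count": count})
```

For a plain `GET` from the page's JavaScript, `Access-Control-Allow-Origin` is the header that matters; requests that trigger a CORS preflight would need more than this one header.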

The Finish Line

I took a break for a day or two, and then it was time to get this project finished. The last requirements were creating Python unit tests and implementing CI/CD to maintain the stack. I experimented with both GitHub Actions and AWS CodePipeline and ended up going with GitHub Actions. Setting up the CI/CD for the front end was simple: I set up my GitHub repository and configured it to sync each commit to S3.

The CI/CD for the back end was considerably more difficult, as I needed to configure multiple stages to install Python dependencies, lint the code, and test it with pytest before actually building and deploying it. While writing the Python tests, I found that using the moto library to mock AWS resources was a fantastic way to test my database code without risking changes to production. The moment I finally saw green checkmarks on all the stages, I may have jumped out of my chair.
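The idea of testing database code without touching production can be sketched as a pytest-style test. The post used moto, which emulates AWS services in memory; to keep this sketch dependency-free it swaps in the standard library's `unittest.mock` instead, which checks the request the code sends rather than emulating DynamoDB. The `increment_visit_count` function and its key and attribute names are hypothetical, not the author's code.

```python
from unittest.mock import MagicMock


def increment_visit_count(table, counter_id: str = "visitors") -> int:
    # Hypothetical code under test: an atomic ADD on a DynamoDB counter item.
    response = table.update_item(
        Key={"id": counter_id},
        UpdateExpression="ADD visit_count :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    return int(response["Attributes"]["visit_count"])


def test_increment_visit_count_uses_atomic_add():
    # A MagicMock stands in for the boto3 Table, so no AWS call is made.
    table = MagicMock()
    table.update_item.return_value = {"Attributes": {"visit_count": 7}}

    assert increment_visit_count(table) == 7
    # Verify the function asked DynamoDB for an atomic ADD on the right item.
    table.update_item.assert_called_once_with(
        Key={"id": "visitors"},
        UpdateExpression="ADD visit_count :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
```

pytest discovers functions whose names start with `test_`, so a CI stage running `pytest` would pick this up alongside the linting step.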

With that completed, I had fulfilled all the technical requirements of the project, and with this blog post I am officially done! You can see the results at https://stacystipes.com.

This entire experience has been extremely rewarding. The hours that I spent every night going down Google rabbit holes, experimenting with code, and configuring the various AWS components just solidified and built upon the knowledge that I had going into the challenge. More importantly, I learned the importance of breaking down complex problems into small manageable pieces, and not giving in to frustration when errors occur. It's a cliché but it's true: if you're not making mistakes, you're not learning! Finally, I discovered that I really, really enjoy working on projects like this, and I especially love coding. Once I got into the flow, I would completely lose track of time because I was having so much fun with it.

I want to say a big thank you to Forrest Brazeal for creating the challenge to help those of us who are new to cloud development, and also a huge shout-out to the fantastic Cloud Resume Challenge community. It was so helpful to be able to ask questions of incredible AWS mentors, and to brainstorm with and encourage other participants who were encountering the same challenges.

What's next for me? More projects! I'm looking for new projects to work on with the #100DaysofCloud community, and I have ideas for a project or two that I'd like to design around migrating from on-premises virtualized datacenters to public cloud solutions. In addition, I'm going to keep working on my Python skills and start diving into Terraform, Docker, and Kubernetes. There are still lots more rabbit holes to explore, and I’m just getting started.
